Introduction

Soft hyphens

In the modern post-Unicode era, a soft hyphen is typically defined as
“a spot where you, the word processor, may break this word across a
line break, if needed”. But even as recently as ISO 8859 a soft hyphen
was “for use when a line break has been established within a word”.
Although not called a “soft hyphen” back then, this use of the hyphen
has been around for centuries. E.g., the OED cites NWEW as saying
“Hyphen … is used … when one part of a word concludes the former Line,
and the one begins the next.”

It is this latter (older) definition with which we are concerned
here: a computer character (or other XML construct) used in a
transcription to indicate where an end-of-line hyphen was printed in
the source text to indicate “this word is continued on the next line”.

The use of such characters (hyphen to indicate “word continued on next
line”) is nearly ubiquitous in printed works (at least in English).
For example, I searched Google Books for the word “balisage”, and
looked at the first book listed. Even though I cannot read it because
it is in French, there are obviously four soft hyphens on the first
page of printed prose alone (i.e., ignoring the title page, etc.);
that page has just over 200 words spread over 20 lines. In the first
full chapter of Michael Kay’s book I counted 67 soft hyphens in
roughly 17,760 words over roughly 1,410 lines.

Recording lineation and end-of-line hyphens

The Text Encoding Initiative Guidelines for Electronic Text Encoding and

Interchange contain a discussion of how to handle

these extant typographic indicators.

One common solution is to ignore the soft hyphens, and to simply

transcribe the word that has been broken across a line break as

a single word. Consider the following example.

This passage might be encoded

as

so far they’d been smart enough to keep quiet about it. I’d never seen any

posts about the Tomb of Horrors on any gunter message boards. I realized,

of course, that this might be because my theory about the old D&D

module was completely lame and totally off base.</p>

or even

so far they’d been smart enough to keep quiet about it. I’d

never seen any posts about the Tomb of Horrors on any

gunter message boards. I realized, of course, that this might

be because my theory about the old D&D module was

completely lame and totally off base.</p>

or, if encoding original lineation

<lb/>so far they’d been smart enough to keep quiet about it. I’d never seen any

<lb/>posts about the Tomb of Horrors on any gunter message boards. I realized,

<lb/>of course, that this might be because my theory about the old D&D

<lb/>module was completely lame and totally off base.</p>

In all three of the above encodings, the word “realized” has been
silently reconstituted from its constituent parts, the initial portion
immediately prior to the soft hyphen, and the final portion shortly
after the soft hyphen. In the first and third examples the soft hyphen
is resolved by moving the final portion of the word up from the
beginning of its line to the end of the previous line (which I call
“finalUp”). It could just as easily have been resolved by moving the
initial portion of the word from the end of its line to the beginning
of the next line (which I call “initDown”). For most of this paper I
will discuss resolution in only the “finalUp” direction, but the
issues generally apply equally well to both directions.

Personally, I do not like that third (last) encoding. It
explicitly asserts there was a line break in the source document
between “realized,” and “of course”,
which is not true. But nonetheless, the practice is not uncommon.

The first encoded example is not nearly so bad, as its implication

that there was a line break at that spot is implicit, not

explicit. The middle of the three encoding possibilities is not

objectionable in assertion of line breaks at all, since it makes

no such assertions. (One could infer them, but they are not

implied.) However, many projects will find it a disadvantage to

transcribe prose completely irrespective of original lineation.

Keeping track of original lineation is very helpful when trying to

align the source document with the transcription (or the output of

processing the transcription). Even if a project does not think

the users of its transcribed texts will appreciate this alignment,

the project proofreaders will — a lot.

Another common approach is to explicitly record both the

hyphen character and original lineation. Consider the following example.

It turns out that the most important voice in the Su‐

preme Court nomination battle is not the American peo‐

ple’s, as Senate Republicans have insisted from the mo‐

ment Justice Antonin Scalia died last month. It is not even

that of the senators. It’s the National Rifle Association’s.

That is what the majority leader, Mitch McConnell,

said the other day when asked about the possibility of con‐

sidering and confirming President Obama’s nominee,

Judge Merrick Garland, after the November elections. “I

can’t imagine that a Republican majority in the United

States Senate would want to confirm, in a lame-duck ses‐

sion, a nominee opposed by the National Rifle Associa‐

tion,” he told “Fox News Sunday.”

This excerpt from a New York Times editorial might be encoded as follows.

<p>It turns out that the most important voice in the Su<pc force="weak">-</pc>

<lb break="no"/>preme Court nomination battle is not the American peo<pc force="weak">-</pc>

<lb break="no"/>ple’s, as Senate Republicans have insisted from the mo<pc force="weak">-</pc>

<lb break="no"/>ment Justice Antonin Scalia died last month. It is not even

<lb/>that of the senators. It’s the National Rifle Association’s.</p>

<p>That is what the majority leader, Mitch McConnell,

<lb/>said the other day when asked about the possibility of con<pc force="weak">-</pc>

<lb break="no"/>sidering and confirming President Obama’s nominee,

<lb/>Judge Merrick Garland, after the November elections. “I

<lb/>can’t imagine that a Republican majority in the United

<lb/>States Senate would want to confirm, in a lame-duck ses<pc force="weak">-</pc>

<lb break="no"/>sion, a nominee opposed by the National Rifle Associa<pc force="weak">-</pc>

<lb break="no"/>tion,” he told “Fox News Sunday.”</p>

Here it is explicit that the hyphen character is not a word
separator (force="weak"), and that the line break does not imply the
end of an orthographic token (break="no"). It is worth noting that
many TEI projects choose to use either <pc force="weak"> or
<lb break="no">, but not both.

At my project we encode soft hyphens using the Unicode character
SOFT HYPHEN (U+00AD). Given that this character is explicitly of the
“a word processor may insert a hyphen here if needed” variety, in some
sense it is technically incorrect to use it for this purpose.
Furthermore, the TEI Guidelines do not recommend this use. In our
defense, we chose this path back in the ISO 8859 days, when &shy; was
an SGML SDATA reference that did not necessarily mean code-point 0xAD.
But more importantly, the detail of which character is used to
represent the “this word is continued on the next line” glyph that was
on the source page does not matter, so long as it is not also used for
some other purpose in the same file. So, given that we are encoding
early modern printed books, we could just as well have used the EURO
SIGN (U+20AC) for this purpose. In either case it is character abuse;
however, the abuse of SOFT HYPHEN seems much less dramatic than would
be the abuse of EURO SIGN: SOFT HYPHEN has something to do with
hyphenation, and nothing to do with currency.

So our encoding of the excerpt from the New York Times editorial would be as

follows.

<p>It turns out that the most important voice in the Su­

<lb/>preme Court nomination battle is not the American peo­

<lb/>ple's, as Senate Republicans have insisted from the mo­

<lb/>ment Justice Antonin Scalia died last month. It is not even

<lb/>that of the senators. It's the National Rifle Association's.</p>

<p>That is what the majority leader, Mitch McConnell,

<lb/>said the other day when asked about the possibility of con­

<lb/>sidering and confirming President Obama's nominee,

<lb/>Judge Merrick Garland, after the November elections. <said>I

<lb/>can't imagine that a Republican majority in the United

<lb/>States Senate would want to confirm, in a lame-duck ses­

<lb/>sion, a nominee opposed by the National Rifle Associa­

<lb/>tion,</said> he told <title>Fox News Sunday.</title></p>

Desired output

Encoding texts serves little purpose unless some sort of

analysis or output generation (or both) is undertaken. If all we

wanted to do was read the text, scanned images of the pages

would do.

Consider the following snippet of an encoded text:

<p>Whatever has been ſaid

<lb n="14"/>by Men of more Wit than

<lb n="15"/>Wiſdom, and perhaps of

<lb n="16"/>more malice than either,

<lb n="17"/>that Women are natural­

<lb n="18"/>ly Incapable of acting Pru­

<lb n="19"/>dently, or that they are

<lb n="20"/>neceſſarily determined to

<lb n="21"/>folly, …

For most analyses we would prefer the words “naturally” and
“Prudently” to occur in our data, and the tokens “ly”, “Pru”, and
“dently” not to occur. That is, we would like the soft hyphens
resolved. The exception is the physical bibliographer who is
interested in the phenomenon of breaking a word across a line.

As with analyses, for most purposes we would prefer to

read the text with as few interruptions to words as possible.

The obvious exception is when we want to align reading of the

processed output with the physical source page or a facsimile

thereof. This alignment makes proofreading

much easier.

Thus for proofreading we might like to see something like

the following.

13: Whatever has been ſaid

14: by Men of more Wit than

15: Wiſdom, and perhaps of

16: more malice than either,

17: that Women are natural-

18: ly Incapable of acting Pru-

19: dently, or that they are

20: neceſſarily determined to

21: folly, …

Whereas for casual reading, we might prefer:

Whatever has been said by Men of more Wit than Wisdom, and

perhaps of more malice than either, that Women are naturally

Incapable of acting Prudently, or that they are necessarily

determined to folly, …

The question is, of course, how to get that “resolved” output.

When I’m wrong, I can be really wrong

“Famous last words”: (figuratively, expressing sarcasm) A statement
which is overly optimistic, results from overconfidence, or lacks
realistic foresight.

— FLWs

Like many people, I make good predictions and bad ones. But sometimes
I make truly horrible predictions. E.g., in early 1995 or thereabouts
I infamously said something like “remember, the web is not our friend,
it is our enemy”. Hard to be more wrong than that. But when it came to
soft hyphens, it may turn out I was. You see, sometime during the
early days of the Women Writers Project I asserted that software could
read our documents (in which soft hyphens were encoded using first the
&shy. Waterloo Script set symbol (essentially a variable), and later
the &shy; SGML SDATA entity reference), and “resolve” the soft hyphen
for creating full-text searchable word lists or reading output for
undergraduates. I said this with a “how hard can it be?” attitude,
I’m sure.

Well, as will be discussed in the rest of this paper, it

has turned out to be quite hard.

Seems easy …

At first blush, this does not seem like it would be a

difficult programming task. Basically, when you find a soft

hyphen, drop it and replace it with the first token from the next

line. Correspondingly, in order to avoid duplicating the first

token from the next line, when you find a text node

whose immediately preceding text node ended in soft hyphen, drop

the first token. For example, consider the following passage.

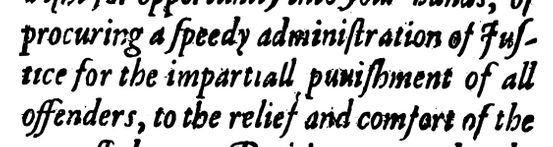

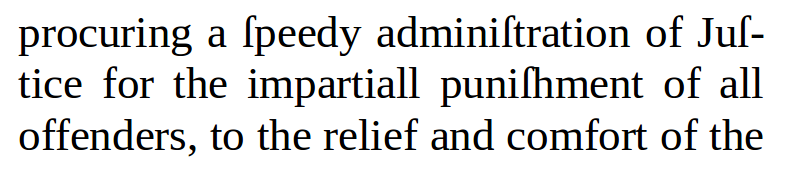

Or, in modern typography,

If this passage is transcribed as

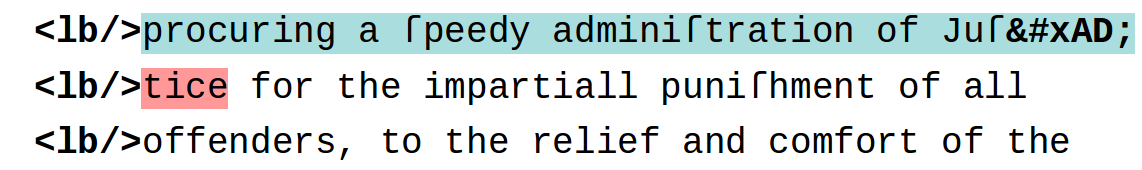

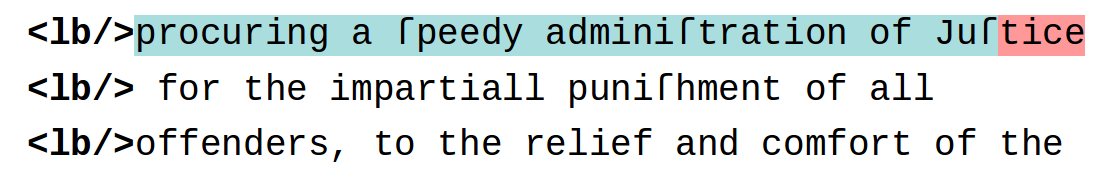

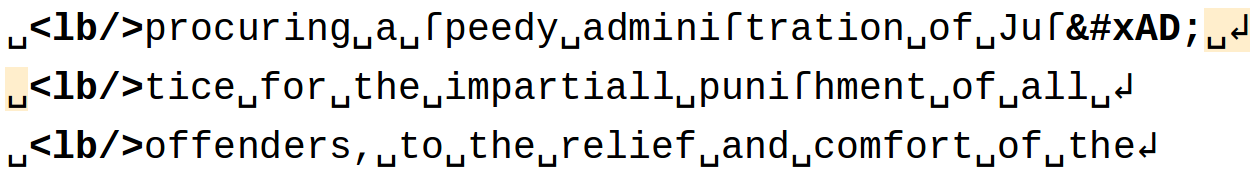

<lb/>procuring a ſpeedy adminiſtration of Juſ­

<lb/>tice for the impartiall puniſhment of all

<lb/>offenders, to the relief and comfort of the

then to “resolve” the soft hyphen, it needs to be replaced by the
first text token of the line that immediately follows.

In an XSLT context, this means that the template that matches the
blue portion above needs to strip off the soft hyphen character and
replace it with the red portion in the above; and the template that
matches the text node that includes the red portion needs to strip off
said red portion (since it has already been put into the output stream
by the template that matched the blue portion).
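The simple algorithm can also be sketched outside of XSLT. What follows is only a minimal Python illustration, not the stylesheet used by any project. It assumes the transcription is held as a plain string, that line breaks are encoded as `<lb/>`, that soft hyphens are U+00AD, and that nothing but whitespace sits between the soft hyphen and the `<lb/>`. The name `resolve_final_up` is hypothetical.

```python
import re

SHY = "\u00ad"  # SOFT HYPHEN

# A soft hyphen, optional end-of-line whitespace, <lb/>, and then the
# first token of the next line (assumed to contain no markup).
_SPLIT_WORD = re.compile(SHY + r"\s*<lb/>(\S+)")

def resolve_final_up(text: str) -> str:
    """Move the final portion of each split word up to the previous line."""
    # The soft hyphen is replaced by the first token of the next line,
    # and that token is dropped from its position after <lb/>.
    return _SPLIT_WORD.sub(lambda m: m.group(1) + "\n<lb/>", text)

source = (
    "<lb/>procuring a ſpeedy adminiſtration of Juſ" + SHY + "\n"
    "<lb/>tice for the impartiall puniſhment of all"
)
print(resolve_final_up(source))
```

In the result the first line ends in the whole word “Juſtice” and the second line begins with the space that used to follow “tice”. A single regular expression plays the role of both templates here, which is only possible because the sketch ignores the element structure entirely.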

Whitespace

That doesn’t sound too tough. Of course it is obviously a

little harder than the diagrams above make it look, for they

ignore the whitespace between the blue and red portions:

In order to handle that whitespace we need to

- ignore whitespace at the end-of-line when looking for soft hyphens

- ensure that the end-of-line whitespace is not inserted between the
two parts of the broken word, either by stripping end-of-line
whitespace off along with the soft hyphen character, or by carefully
replacing only that character (such that the whitespace comes after
the re-constituted word)

Of course we cannot just normalize whitespace using

XPath’s built-in normalize-space() function, as in

many cases leading and trailing space are important. E.g., given

the following fragment,

<p rend="first-indent(1)">How far the passages of scripture

<lb/>she mentions were applicable to the

<lb/>conduct of <persName>Mr B</persName> it is not our prov­

<lb/>ince to determine; but it is not

using

normalize-space() on

␣it␣is␣not␣our␣prov­↲

would lead to “…conduct of Mr Bit is not our province to
determine; …”, because the space in front of “it” would be lost.
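The difference between normalizing and trimming only the end of the line can be seen in a small Python sketch. It is an illustration under assumptions: `xpath_normalize_space` is a hand-written stand-in for XPath’s normalize-space(), and `strip_trailing_shy` is a hypothetical helper, not part of any library.

```python
import re

SHY = "\u00ad"  # SOFT HYPHEN

def xpath_normalize_space(s: str) -> str:
    """Emulate XPath normalize-space(): trim, and collapse XML whitespace runs."""
    return re.sub(r"[ \t\r\n]+", " ", s).strip(" \t\r\n")

def strip_trailing_shy(s: str) -> str:
    """Remove only a final soft hyphen and the end-of-line whitespace after it."""
    return re.sub(SHY + r"[ \t\r\n]*$", "", s)

before = "Mr B"                             # content of <persName>
node = " it is not our prov" + SHY + "\n"   # text node ending in a soft hyphen
final = "ince"                              # first token of the next line

# Normalizing the whole node also removes its significant leading space.
lossy = before + xpath_normalize_space(node).rstrip(SHY) + final
# Trimming only the soft hyphen and what follows it keeps that space.
safe = before + strip_trailing_shy(node) + final

print(lossy)  # Mr Bit is not our province
print(safe)   # Mr B it is not our province
```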

But even with this whitespace concern, this is not particularly

difficult. And if that’s all there was to it, well, I wouldn’t be

writing this paper.

Further complications

’Twixt

The first thing to keep in mind is that XML constructs other than just
the <lb> element may come between the text node that ends in SOFT
HYPHEN and the text node that contains the representation of the
continued word. Besides the obvious (XML comments and XML processing
instructions), first and foremost the feature that forced the
typographer to break the word in the first place may have been a page
break, not a line break. Page breaks usually have other information
associated with them (page numbers, catch words, signature marks,
running titles) that is generally encoded “where it lies”, such that
it further interrupts the word that has been split. E.g.

<p>Whoever may come out in any society as Mis­

<pb n="247"/>

<milestone unit="sig" n="M4r"/>

<mw type="pageNum">247</mw>

<lb/>sionaries or teachers, whether here or at <placeName>Sierra-

<lb/>Leone</placeName>, had need to guard against assimilating too

<lb/>much in habit or sentiment with other <rs type="properAdjective">European</rs>

<lb/>residents, …

Notice that included among those things that follow the soft hyphen is
a text node (“247”) which is not part of the split word
“Missionaries”. (Note also that the hyphen glyph in “Sierra-Leone”
looks exactly the same in the source as the hyphen glyph in
“Mis-sionaries”, but the encoding asserts it is a hard hyphen even
though it occurs at end-of-line. This is because other occurrences of
“Sierra-Leone” have a hyphen, even when it is in the middle of a
typographic line. The hard hyphen is probably best encoded with a
HYPHEN character (U+2010), but is typically recorded with a
HYPHEN-MINUS character (U+002D).)
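One way to picture the consequence for software is a walk over the text nodes in document order that skips anything known not to belong to the running text. The following Python sketch uses the standard library’s ElementTree and is illustrative only. It assumes un-namespaced elements (real TEI documents are in the TEI namespace), and the `IGNORED` set and both function names are hypothetical. ElementTree’s default parser already discards comments and processing instructions, so they need no special handling here.

```python
import xml.etree.ElementTree as ET

SHY = "\u00ad"  # SOFT HYPHEN

# Assumption: text inside these elements is never part of a split word.
IGNORED = {"mw"}

def text_nodes(elem):
    """Yield text nodes in document order, skipping content of IGNORED elements."""
    if elem.tag not in IGNORED:
        if elem.text:
            yield elem.text
        for child in elem:
            yield from text_nodes(child)
            if child.tail:
                yield child.tail

def split_word(root):
    """Return (initial, final) portions of the first soft-hyphenated word, or None."""
    nodes = [t for t in text_nodes(root) if t.strip()]
    for here, following in zip(nodes, nodes[1:]):
        line = here.rstrip()
        if line.endswith(SHY):
            # Assumes at least one token precedes the soft hyphen.
            return line[:-1].split()[-1], following.split()[0]
    return None

sample = ET.fromstring(
    "<p>Whoever may come out in any society as Mis" + SHY + "\n"
    '<pb n="247"/>\n'
    '<milestone unit="sig" n="M4r"/>\n'
    '<mw type="pageNum">247</mw>\n'
    "<lb/>sionaries or teachers, whether here or at Sierra-\n"
    "<lb/>Leone, had need</p>"
)
print("".join(split_word(sample)))  # Missionaries
```

If `mw` were not listed in `IGNORED`, the page number “247” would be taken as the final portion of the word.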

But sadly, it is not only the obvious and predictable (XML

comments, XML processing instructions, line breaks, column

breaks, and page breaks with their apparatuses) that may come

between a soft hyphen and the final portion of a word. The most

common culprits here are annotations and figures, but

handwritten additions (either authorial or by a later hand)

could also occur.

In the following example an entire tipped-in

plate sits between the soft hyphen and the final portion of the

word.

<p>

<label>I.</label> God spoke of Be-he-moth. What ani­

<pb n="facing 48"/>

<pb n="facing 49"/>

<figure>

<figDesc>An engraving of a “behemoth” (resembles the

elephant) standing on a grassy bank drinking from a body

of water, vegitation in background</figDesc>

<ab type="caption">To face page 49.</ab>

</figure>

<pb n="49"/>

<milestone n="E5r" unit="sig"/>

<mw rend="align(outside)" type="pageNum">49</mw>

<lb/>mal is that?

Sibling of Overlap

In all of the examples so far, the initial and final portions of the
word divided by a soft hyphen are at least at the same hierarchical
level of encoding. That is (in XPath terms) from the text node that
contains the soft hyphen, the final portion of the word is on the
following-sibling:: axis, even if it is not the first text node, or
even the first non-whitespace-only text node, on that axis.

However, we are not always so lucky. Here is a modern

diplomatic transcription of a heading.

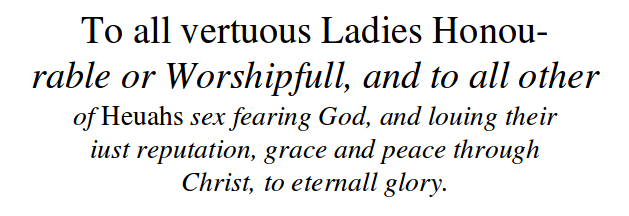

The word “Honourable” is half in roman (or “upright”) type and half in
italics. To account for this font shift, the encoding uses the TEI
<hi> element and the global @rend attribute to indicate that while the
entire heading is (in general) in italics, the first typographic line
is highlighted by being in roman typeface.

<head rend="slant(italic)"><hi rend="slant(upright)">To all vertuous Ladies Honou­</hi>

<lb/>rable or Worſhipfull, and to all other

<lb/>of <persName rend="slant(upright)">He<vuji>u</vuji>ahs</persName> ſex fearing God, and lo<vuji>u</vuji>ing their

<lb/><vuji>i</vuji>uſt reputation, grace and peace through

<lb/><persName>Chriſt</persName>, to eternall glory.

</head>

It would be reasonable to think this

phenomenon pernicious, not particularly important, and rare.

But a different manifestation of the same hierarchical problem

is anything but. When a book is damaged (e.g., by a coffee

spill, or torn or mouse-eaten edges of pages), it is common for

the damage to be on only one side of the page. Such damage will

cause a problem reading either the initial portion (if it is on

the right edge) or the final portion (if it is on the left edge)

of a word split across a line break.

In the following example, the encoder

has indicated that she cannot read a few characters at the

beginning of each of four lines due to damage, but that either

from context alone or from looking at a different edition of the

same book she has been able to surmise what must have been

printed.

<lb/>not your own. It is a miſe­

<lb/><supplied reason="damaged">ra</supplied>ble thing for any Wo­

<lb/><supplied reason="damaged">ma</supplied>n, though never ſo great,

<lb/><supplied reason="damaged">not</supplied> to be able to teach her

<lb/><supplied reason="damaged">ſerv</supplied>ants; …

This is a particularly thorny case, because in order to resolve the
soft hyphen, software will have to recognize that not only should the
following <supplied> element be moved from the beginning of its line
to the end of the previous line (replacing the soft hyphen character),
but also the first token of the text node immediately following the
<supplied> needs to move with it.
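To make the requirement concrete, here is a deliberately naive Python sketch that works on the serialized markup as a string. It is not a recommended implementation. It assumes the `<supplied>` element contains only text, that it immediately follows `<lb/>`, and that the rest of the word follows it without intervening markup. The function name is hypothetical.

```python
import re

SHY = "\u00ad"  # SOFT HYPHEN

# After the soft hyphen and <lb/>, take either a <supplied> element plus
# any word characters directly after it, or just a plain first token.
_SPLIT_WORD = re.compile(
    SHY + r"\s*<lb/>((?:<supplied\b[^>]*>[^<]*</supplied>)[^\s<]*|[^\s<]+)"
)

def resolve_with_supplied(text: str) -> str:
    """Move <supplied> and the rest of the word up to the previous line."""
    return _SPLIT_WORD.sub(lambda m: m.group(1) + "\n<lb/>", text)

source = (
    "It is a miſe" + SHY + "\n"
    '<lb/><supplied reason="damaged">ra</supplied>ble thing for any Wo' + SHY + "\n"
    '<lb/><supplied reason="damaged">ma</supplied>n, though never ſo great,'
)
print(resolve_with_supplied(source))
```

Both the element and the token after it (“ble”, then “n,”) travel together to the end of the previous line. A tree-based implementation has to do the equivalent across two different kinds of node.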

Text that is not there

If the text that is damaged cannot be read at all, the TEI Guidelines
recommend using the <gap> element. While the <gap> element may have
content, if it does that content does not provide a transcription of
the source text, but rather provides a description of or information
about what was not transcribed from the source text; and more often
than not <gap> is empty. In the following example, the encoder is
asserting that she could not read a significant portion of the last
line.

<lb/>And alſo ge<vuji>u</vuji>eth them grace to <vuji>v</vuji>ſe in his

<lb/>glorye, po<vuji>u</vuji>ertie, ignomine, infamie, in­

<lb/>firmitie, with all ad<vuji>u</vuji>erſitie, and the pri­

<lb/><gap extent="over one third of the line" reason="flawed-reproduction"/>tes,

e<vuji>u</vuji>en to the death<unclear>,</unclear>

When a <gap> occurs after a soft hyphen, but before any non-ignorable
content, we have a case for which it is particularly difficult to
resolve the soft hyphen; thankfully, it is also a case for which it is
particularly unimportant to do so.

It is difficult to do so for two main reasons. First,

because (unlike most other empty elements we would encounter

after a soft hyphen: <cb>, <lb>,

<milestone>, and <pb>) the

<gap> represents content, it would have to be

moved as if it were the first token of content. Second, because

a <gap> may represent less than a single word, a

single word, or more than a single word, the software will need

to parse its attributes (and perhaps content) to determine

whether or not the first token of an immediately following text

node (that does not start with whitespace) needs to be moved

along with the <gap>.

It is unimportant because under no circumstances can the

soft hyphen resolution process meet the goal of reconstituting

the entire word. Whether for spell checking, for indexing for

search, or for generating an easy-to-read display, having

pri<gap extent="rest of word"/><lb/><gap

extent="roughly one third of the line minus roughly one half of

the first word"/>

is no better than what you had

to begin with.

Choosing the shy

The TEI uses a “parallel elements” mechanism for recording a variety
of editorial interventions. Here I will

discuss the correction of apparent errors

(<choice>, <sic>, and

<corr>), but the same issues hold true for the

simple expansion of abbreviations (<choice>,

<abbr>, and <expan>), the

substitution of one bit of text for another

(<subst>, <del>, and

<add>), the regularization of archaic or

eccentric spelling or typography (<choice>,

<orig>, and <reg>), and the

simultaneous encoding of multiple variant witnesses

(<app>, <rdg>, and

<lem>).

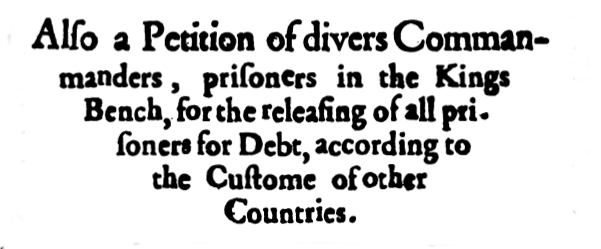

The following example demonstrates two

errors in one title, each of which is directly involved in the

use of soft hyphens. I will discuss the second error here, and

the first one in the next subsection.

If you look carefully at the end of the 3rd line, you will see that
the soft hyphen character is not a hyphen at all. In this reproduction
you may find it hard to figure out what it is, but in other editions
(I am told) it is more obvious that the character there is a period.

Presuming the encoding project would like to record both the error as
it appears in the source text and a modern correction of it, there are
two likely TEI encodings of this: “letter-level” and “word-level”.

<lb/>Bench</placeName>, for the releaſing of all pri<choice><sic>.</sic><corr>­</corr></choice>

<lb/>ſoners for Debt, according to

The above letter-level encoding makes resolving the soft hyphen
potentially quite a bit more difficult. The difficulty lies in the
fact that if we were to apply the simple algorithm discussed above
(namely, to replace the soft hyphen character with the first token of
the following line), we would suddenly be asserting that the partial
word “soners” was somehow a correction of a period:

<lb/>Bench</placeName>, for the releaſing of all pri<choice><sic>.</sic><corr>ſoners</corr></choice>

<lb/>for Debt, according to

In many, if not the vast majority, of situations this would not really
be a problem. When performing soft hyphen resolution for the purpose
of generating word lists or indices, we generally do not care about
simultaneously handling both the source text and the editorial
correction. We usually just want the corrected version, in which case
the entire <choice> construct is itself resolved to the content of
<corr>. Whether this is done before or after soft hyphen resolution,
we end up with the desired words.
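A minimal Python sketch of the “corrected version only” route follows, again working on a string rather than a tree. It shows just one of the two possible orders (resolving `<choice>` first). It assumes `<choice>` contains exactly one `<sic>` followed by one non-empty `<corr>`, and both function names are hypothetical.

```python
import re

SHY = "\u00ad"  # SOFT HYPHEN

_CHOICE = re.compile(
    r"<choice>\s*<sic>.*?</sic>\s*<corr>(.*?)</corr>\s*</choice>", re.S
)
_SPLIT_WORD = re.compile(SHY + r"\s*<lb/>(\S+)")

def keep_corrections(text: str) -> str:
    """Replace each <choice> with the content of its <corr>."""
    return _CHOICE.sub(lambda m: m.group(1), text)

def resolve_final_up(text: str) -> str:
    """Replace a soft hyphen with the first token of the next line."""
    return _SPLIT_WORD.sub(lambda m: m.group(1) + "\n<lb/>", text)

letter_level = (
    "for the releaſing of all pri<choice><sic>.</sic><corr>"
    + SHY + "</corr></choice>\n"
    "<lb/>ſoners for Debt, according to"
)
resolved = resolve_final_up(keep_corrections(letter_level))
print(resolved)
```

Once the `<choice>` is gone, the soft hyphen is an ordinary end-of-line soft hyphen and the simple algorithm yields “priſoners”.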

In rare cases we might be interested in the uncorrected source text,
in which case soft hyphen resolution software has to be smart enough
to perform the resolution on the text in <sic> based on the content of
<corr>. In theory a project may want to perform soft hyphen resolution
in both the uncorrected source and the editorially corrected text. I
do not address this particular situation here, as I have never even
heard this idea entertained.

Word-level correction is a bit easier for soft hyphen

resolution, as the simple algorithm yields a perfectly

acceptable result.

<lb/>Bench</placeName>, for the releaſing of all <choice>

<sic>pri.<lb/>ſoners</sic>

<corr>pri­<lb/>ſoners</corr>

</choice> for Debt, according to

However, it has a different drawback: with this system counting lines
on the page (a common and important task) is harder, in that the
counter has to know that the choice/sic/lb and the choice/corr/lb
together need to be counted as only one line break.
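One possible way for a line counter to cope is to ignore line breaks inside the editorial branch, sketched below with Python’s ElementTree. This is an assumption-laden illustration: it treats `<corr>` as the only editorial branch, uses un-namespaced elements, and wraps the fragment in a `<titlePart>` purely to make it well-formed. The names `EDITORIAL` and `count_line_breaks` are hypothetical.

```python
import xml.etree.ElementTree as ET

SHY = "\u00ad"  # SOFT HYPHEN

# Assumption: these branches repeat, rather than transcribe, the source,
# so any <lb/> inside them must not be counted a second time.
EDITORIAL = {"corr"}

def count_line_breaks(elem) -> int:
    """Count <lb/> elements, ignoring those inside editorial branches."""
    if elem.tag in EDITORIAL:
        return 0
    own = 1 if elem.tag == "lb" else 0
    return own + sum(count_line_breaks(child) for child in elem)

sample = ET.fromstring(
    "<titlePart><lb/>Bench, for the releaſing of all <choice>\n"
    "<sic>pri.<lb/>ſoners</sic>\n"
    "<corr>pri" + SHY + "<lb/>ſoners</corr>\n"
    "</choice> for Debt, according to</titlePart>"
)
print(len(list(sample.iter("lb"))))  # 3 (naive count)
print(count_line_breaks(sample))     # 2
```

Counting the `<lb/>` in `<sic>` rather than the one in `<corr>` is a design choice: the `<sic>` branch is the one that transcribes what is on the page.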

Shy of the choice

Anything more than a cursory or rapid read of the first two lines
reveals an egregious error, probably by the typesetter: the word
“commanders” is spelled “commanmanders”, as the medial letters “man”
are not only in the initial portion of the word, but are also repeated
after the soft hyphen. Multiple possible encodings jump to mind. The
editor may consider the “man” at the end of the first line as the
correct one, and thus the “man” at the beginning of the second line as
the one in error; or vice-versa. And in each case the encoder may use
letter-level or word-level encoding.

<titlePart type="second">Alſo a Petition of divers Comman­

<lb/><choice><sic>man</sic><corr/></choice>ders, priſoners in the <placeName>Kings

<titlePart type="second">Alſo a Petition of divers <choice>

<sic>Comman­<lb/>manders</sic>

<corr>Comman­<lb/>ders</corr>

</choice>, priſoners in the <placeName>Kings

<titlePart type="second">Alſo a Petition of divers Com<choice><sic>man</sic><corr/></choice>­

<lb/>manders, priſoners in the <placeName>Kings

<titlePart type="second">Alſo a Petition of divers <choice>

<sic>Comman­<lb/>manders</sic>

<corr>Com­<lb/>manders</corr>

</choice>, priſoners in the <placeName>Kings

Furthermore, when using word-level encoding, project editorial

policy may allow elision of the soft hyphen and line break in

the corrected version:

<titlePart type="second">Alſo a Petition of divers <choice>

<sic>Comman­<lb/>manders</sic>

<corr>Commanders</corr>

</choice>, priſoners in the <placeName>Kings