Background

Symbolic representation

Most of us probably at some point in our school days encountered the idea of the Cartesian plane with an x-axis running horizontally and a y-axis running vertically, and the concept of plotting x,y pairs as points on the plane, and the idea that you could represent a set of points as a set of dots on a piece of paper or a line, and the further idea that there is an equation over x and y that describes that line, and the idea that if it’s a more complicated equation what it describes may not be a line but a curve of some kind.[1] And then the further idea that if you have an independent variable that you can plot as x and a dependent variable you can plot as y, you can plot a curve that you derive empirically and create an equation to describe that curve. And if you consider, not just the case of an independent variable x and a dependent variable y, but two independent variables x and y and a third dependent variable, then you get a plane or surface of some kind. And those too can be described by equations. Now, having equations, to describe curves or surfaces and so on, is nifty because we can look at them, we can see them, and we can change them.

We can perform transformations on them — simple or more complicated transformations — that have sometimes predictable — sometimes unpredictable — and interesting effects, just like the transformations that we can perform on images. Actually, at some deep level, they are quite often exactly the same transformations that we perform on images on our machines today or like the transformations that we can perform on music, the kind that Evan Lenz demonstrated in the first dress rehearsal talk for Balisage on Saturday [Lenz].

Now, in music, those kinds of transformations are not particularly new. Transpositions, inversions, retrogrades, rotation, augmentation, diminution, and so on have been known for a long time — some perhaps longer than the standard transformations that we perform on equations. One very important transformation for equations, for example, is taking its derivative. But the idea of taking a derivative could not well be defined until after the introduction of the Cartesian plane and the calculus. Musical transformations were known well before that time.
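To make the musical transformations named above concrete, here is a minimal sketch in Python. It is not taken from Evan Lenz's stylesheets; the representation of a melody as a list of (MIDI pitch, duration in beats) pairs and all of the function names are assumptions made purely for illustration.

```python
from fractions import Fraction

# Hypothetical representation: a melody is a list of (MIDI pitch, duration in beats).
THEME = [(60, Fraction(1)), (64, Fraction(1)), (67, Fraction(2))]  # C4, E4, G4


def transpose(melody, semitones):
    """Shift every pitch up or down by the same number of semitones."""
    return [(pitch + semitones, dur) for pitch, dur in melody]


def invert(melody):
    """Mirror every interval around the first pitch of the melody."""
    if not melody:
        return []
    axis = melody[0][0]
    return [(2 * axis - pitch, dur) for pitch, dur in melody]


def retrograde(melody):
    """Play the melody backwards."""
    return list(reversed(melody))


def rotate(melody, n):
    """Move the first n notes to the end of the melody."""
    if not melody:
        return []
    n %= len(melody)
    return melody[n:] + melody[:n]


def augment(melody, factor=2):
    """Lengthen every duration by the given factor."""
    return [(pitch, dur * factor) for pitch, dur in melody]


def diminish(melody, factor=2):
    """Shorten every duration by the given factor."""
    return [(pitch, dur / factor) for pitch, dur in melody]


if __name__ == "__main__":
    for name, result in [
        ("transposed", transpose(THEME, 2)),
        ("inverted", invert(THEME)),
        ("retrograde", retrograde(THEME)),
        ("rotated", rotate(THEME, 1)),
        ("augmented", augment(THEME)),
    ]:
        print(name, [(p, str(d)) for p, d in result])
```

Each transformation is a small pure function over the whole sequence, which is part of why such operations compose so readily: diminishing an augmented theme, for instance, gives back the original.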

Now, when trying to describe an empirically given curve and in particular when trying to calculate the derivative of a curve, a discontinuity in the curve is a matter of some concern because at a point of discontinuity, you can’t take the derivative. There is no definable slope to the line. And some of us remember with varying degrees of uneasiness the long discussions of Taylor expansions and the huge lengths — inordinate lengths I remember thinking as an undergraduate — that mathematicians were willing to go to in order to avoid having an equation for a curve for which they couldn’t calculate a derivative.

I’m not going to attempt a correct technical description of continuity, but the basic idea is simple enough: a little bit of a change in one dimension leads to a little bit of a change in the other dimension. And a discontinuity is a case where a little bit of a change in one variable may lead to a very big change in another. For example, if I take a book [shows a book resting on his left palm, supported by his right index finger], and I move my right hand a little bit [pushes the book towards the vertical], the book moves a little bit until there comes a point when I move my hand a little bit, but the book moves a lot [pushes book past the vertical and it falls over]; that is a discontinuity. A tipping point of any kind is a discontinuity. An avalanche is a discontinuity.

Catastrophe theory

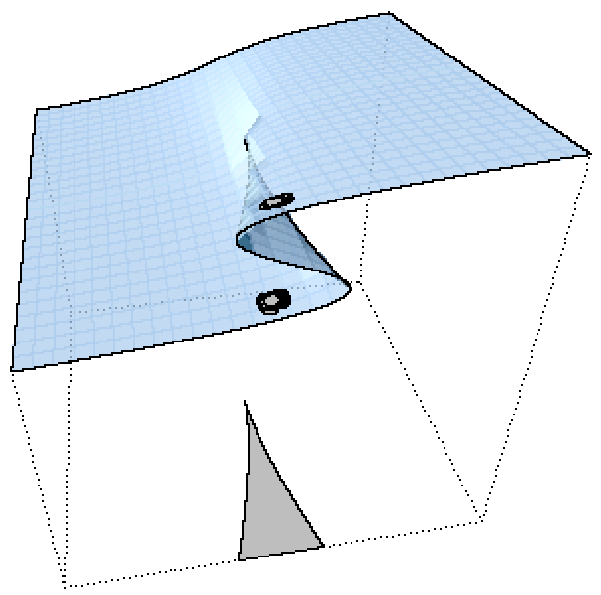

Now, for things that are quantifiable, there are mathematical ways of describing discontinuities. And, in some cases, you can get discontinuous behavior even though the surface being described is completely continuous and smooth. Some of you will have read, years ago, Popular Science articles on catastrophe theory with diagrams like the one that I am sharing with you now.[2]

You notice that in most parts of this surface, if you move a little bit in a horizontal direction, you’ll move a little bit perhaps in the vertical direction. But if you position yourself on the near right corner, as we look at it, of the surface and proceed left, you will move a little bit in the vertical direction for every little bit that you move in the horizontal direction until you reach a point where for that particular pair of x,y values, there is more than one value in the vertical dimension. And if we assume that there is a certain amount of inertia in the system, you’ll probably stay on the upper surface. But if you continue left, ultimately you can’t stay on the upper surface because if we assume there is something like gravity in the virtual world inhabited by this system, you’re going to fall off the edge.

This is the simplest of what I have read are seven topologically distinct forms of surface that can lead to the kind of catastrophic change that I was just talking about or, in fact, describing. I’m not going to go into the details of the other six shapes because they really work only for things that can be quantified reliably, and many of the things that we’re interested in here today (markup quality, technical complexity, elegance, correctness) are tricky to quantify, which is a polite way of saying I don’t think any of us has the foggiest idea how to quantify them usefully.
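For readers who want to see the fall off the edge in numbers, the following is a minimal sketch. It assumes that the folded surface in the figure is the textbook cusp surface, x³ + a·x + b = 0, where a and b are the two horizontal control values and x is the height; that equation, the choice a = -3, the step sizes, and the function names are all assumptions of this sketch and not something stated in the talk. The state is allowed to settle toward the nearest sheet after every small change in b, which plays the role of the inertia and gravity mentioned above.

```python
def settle(x, a, b, step=0.05, iterations=2000):
    """Let x slide downhill toward a nearby solution of x**3 + a*x + b = 0."""
    for _ in range(iterations):
        x -= step * (x**3 + a * x + b)
    return x


def sweep(a, b_values, x_start):
    """Follow the height x while the control value b changes in small steps."""
    path = []
    x = x_start
    for b in b_values:
        x = settle(x, a, b)  # stay on the current sheet for as long as it exists
        path.append((b, x))
    return path


def largest_jump(path):
    """Return (b, change in x) for the single biggest step along the path."""
    steps = [(b1, x1 - x0) for (_, x0), (b1, x1) in zip(path, path[1:])]
    return max(steps, key=lambda s: abs(s[1]))


A = -3.0  # with a < 0 the surface is folded; the folds lie at b = -2 and b = +2
B_FORWARD = [i * 0.05 for i in range(-60, 61)]  # b runs from -3 to +3

if __name__ == "__main__":
    forward = sweep(A, B_FORWARD, x_start=2.0)
    backward = sweep(A, list(reversed(B_FORWARD)), x_start=forward[-1][1])
    print("forward sweep, biggest jump (b, dx):", largest_jump(forward))
    print("backward sweep, biggest jump (b, dx):", largest_jump(backward))
```

On the way up, every step in b moves x only slightly until b passes the fold at 2, where the upper sheet ends and x drops to the lower sheet. On the way back the jump happens near b = -2 instead, so the path taken depends on the direction of travel even though the surface itself is perfectly smooth.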

Catastrophic complexity in markup theory

But metaphorically the surface that you’re looking at seems to me to be relevant because it describes an important feature of the systems we build. Often, if we need a little bit more functionality, we can get it by adding a little bit more markup; and by adding a little bit more markup, we can get a little bit more functionality and so forth, and that is part of the way many of our systems grow. But sometimes adding just a little bit more markup or changing what looks at least from some points of view like a relatively small thing about the system will lead to a catastrophic failure of the markup system. People will vote with their feet, and they won’t use the system after it passes some threshold value of complexity.

Now, when I speak about catastrophic failure, I should point out I’m trying to use those terms in what you might call a technical sense. My father was a researcher working in the tire and rubber industry, and he spent years studying tire failure. And when we say that a tire fails, we are not engaging in a moral judgement of the tire. We’re simply observing that the tire has ceased to function as designed, possibly because it blew out, possibly because it fell apart. There are many, many different forms of tire failure, and one of the jobs of a researcher in that industry is to investigate them and see if different manufacturing techniques can minimize the likelihood of various kinds of failure. Some failures are gradual, and some are quite sudden. The sudden ones are referred to as catastrophic. So, when I say something is catastrophic, I mean that it happens very quickly and not necessarily that it makes us unhappy, although if we want people to use a markup system, and the system that we build suffers catastrophic failure, we may experience it emotionally as a catastrophe. And that is one reason why we may be interested in finding ways to avoid catastrophic failures.

Now, I’ve been thinking about this largely because of several papers in this year’s conference, especially the report by Tracey El Hajj and Janelle Jenstad of their work on the conversion and remediation of the editions from the Internet Shakespeare Editions project [Jenstad and El Hajj] and also Jonathan Robie’s account of markup usage in the Bible translation community [Robie]. Now, in both of those cases, the central focus of the paper is somewhat broader and somewhat less gloomy, but I was struck by parallels in the two accounts. There are people doing intensive work with documents, and they work very happily with embedded markup. But they find full XML markup just too complex to use, and they don’t use it. In the case of the Internet Shakespeare Editions, I found it very poignant that when the Internet Shakespeare Editions project tried to take better control over its metadata and moved all of the metadata out of the in-document header into a separate system where they could have stricter and more explicit markup and better controls over the markup, basically it meant that none of the editors interacted with the metadata for their editions anymore, which, of course, had the unfortunate effect of diminishing the accuracy of the metadata and over time making it worse rather than better [Jenstad and El Hajj].

Michael Gryk’s discussion of the Star File Format for chemistry [Gryk] illustrates a similar point and provides a useful parallel to Jonathan Robie’s description of USFM (Universal Standard Format Markers) [Robie]. We have in both cases a domain-specific data format that meets the felt needs of the community and for which the relevant community feels a certain ownership. In all three cases, if I understand correctly, those who do not want to work with XML object that the XML is not human-readable and also — this may or may not be exactly the same thing — that the XML representation is too complex.

Now, complexity is sometimes in the eye of the beholder. Liam Quin observed the other day that being a programmer, he was lazy, and being lazy, he wanted to avoid as much work as possible, and therefore whenever he declares a variable in XSLT or declares a named template that has a type other than item()*, he uses the @as attribute of XSLT 2.0 and 3.0 to declare the type [Quin]. Why? Because then the XSLT engine provides better feedback and calls Liam’s attention to errors that he has made in the code so that it’s easier to find the errors and he doesn’t have to go looking for them quite so hard.

Of course, we have probably all encountered programmers who tell us that because they’re programmers they are lazy, and because they are lazy, they are never going to use the @as attribute because it’s more to type, it’s more verbose, and it’s more work for them. They prefer one kind of simplicity; Liam prefers another kind of simplicity. And we don’t have to take sides in this in order to observe that there is more than one subjective notion of simplicity and more than one subjective notion of complexity.

But however we perceive it and however the users of the systems we build perceive it, we need to deal with complexity. We need to control it. We need to find ways to minimize it.

I can think of several ways we try to control complexity. Some of them are clearly distinct from each other, and others sort of bleed into each other and there may be multiple sliding scales for classifying these methods, so don’t take the categorization that follows too seriously.

Just say no: Bounding complexity by reducing functionality or generality

One of the simplest ways to control complexity is what might be thought of, at least by Americans of a certain age, as the Just Say ‘No’ approach. We reduce complexity by reducing functionality or reducing generality. Jonathan Robie’s account of those engaged in Bible translation illustrates that. So do Evan Lenz’ stylesheets for performing musical transformations. The USFM markup developed by the Bible translation community achieves simplicity of a kind by focusing on one kind of document or, more specifically, on one specific collection of documents [Robie]. The Bible, as we know it in various Christian traditions and the Jewish tradition, shares some properties with other scriptural texts, but not all. So I would be a little surprised if USFM worked without any change or extension even for other scriptural texts like the Koran or the Buddhist canon, let alone something like the U.S. Code of statutory law.

In Evan Lenz’ proof of concept, he wrote transformations which would work successfully on the Bach Prelude he was working on [Lenz], but I don’t really think there is any chance (and Evan certainly didn’t say there would be any chance) that they would work on a piece by John Cage or even on a Bach Toccata and Fugue or a different Bach Prelude. By focusing on the document he was specifically worried about, he was able to simplify the task well enough to make the formulation of the transformations a tractable task.

That is perhaps one reason that at the beginning of the use of XML, it was really not uncommon for people to write a new stylesheet for each document. The XML spec was written, we wrote a DTD for that spec, we wrote stylesheets for that spec, and later we tried to generalize the DTD and those stylesheets. But the initial task was: it has to work for this document. And that simplified the task significantly.

The sketch for a query language that I presented the other day similarly attempts to keep things simple by providing very limited functionality, even less than XPath 1.0 [Sperberg-McQueen]. In some cases, this technique of just saying ‘no’ resembles that of Procrustes, as described by Wendell Piez: “If you can fit in our beds, you can sleep in our hotel” [Piez].

Bounding complexity by drawing boundaries, keeping local

The second method of keeping complexity within bounds is to draw boundaries. Drawn boundaries restrict your attention to the area within a particular set of boundaries and keep things local. Distributed systems get great mileage from division of labor of this kind. Clear boundaries work; as Robert Frost said, “Good fences make good neighbors” [Frost 1914].

Of course, this approach also has the property that one way for me to make my part of the task simpler is by taking a firm hold on all the complexities I can find within it and heaving them over the wall into the next plot to make them somebody else’s problems. Fred Brooks describes numerous cases of this happening in various ways during the development of System 360, with people arguing over whether a particular hump in the carpet belongs over there or over there [Brooks 1975].

Wendell Piez illustrated both of these techniques in his demonstrations of tools for processing local files in the browser [Piez]; by doing the processing on the user’s machine and not on the central server, he gives himself a sort of a protected space where work can be done in blissful isolation or at least relatively contented isolation, even though distributed systems overall will tend to be more complex than equivalent monolithic systems, by most measures. And second, Wendell describes systems that attempt to reduce complexity for users by meeting them where they are. If the user has XML, the system will process and validate the XML. If the user has JSON, the system will process and validate the JSON. That does simplify things for the user, although it hands Wendell and his collaborators a more complicated task to solve, so we have to give Wendell credit: He picked up the problems and heaved them over the boundary into a plot for which he is also responsible, so he didn’t actually just palm the complexities off on somebody else.

The power of drawing boundaries, which is to say the power of modularization, is also illustrated vividly by the paper by Pietro Liuzzo on serving image data and providing distributed text services by implementing the interfaces specified by IIIF and Distributed Text Services [Liuzzo].

Liam Quin’s talk this morning seemed to me to illustrate a slightly different form of boundary drawing [Quin]. He adapts the principle of locality of reference to the subjectively very similar principle of locality of focus by adopting useful conventions that allow the relevant CSS to be specified inside the XSLT template that produces the output elements that the CSS should describe, rather than outside that template, whether right next to it or in a different template. He makes the boundaries of syntax and the boundaries of the region of the stylesheet to which the maintenance programmer is required to pay attention coincide, and that is enormously helpful.

Hiding complexity (behind abstractions)

It’s also an example of encapsulation, which is one of the basic, essential techniques for making complex programming possible in the first place. Named functions are a way of encapsulating bits of functionality and allowing the caller of the function to ignore as far as possible the details of the implementation. And when we group named functions together, we get libraries. And I think everyone interested in working with descriptive markup has to be grateful to the people who described at this conference the libraries that they are building, either for use in XSLT and XQuery, or for use inside an XSLT and XQuery engine: Mary Holstege’s paper on her implementation of the Aho-Corasick algorithm [Holstege], Joel Kalvesmaki’s description of his string comparison functions in the Text Alignment Network [Kalvesmaki], and Michael Kay’s discussion of ZenoStrings this morning [Kay]. Mary Holstege described the creation of a library as choosing to light the candle rather than to curse the darkness, and I think I can speak for many of the auditors when I say I felt personally a little blinded by the sheer candlepower of those talks. We owe those people an enormous debt.

And that leads me to the question: Is there an XSLT/XQuery (or XML in general) equivalent to the Comprehensive Perl Archive Network (CPAN), or the Comprehensive TeX Archive Network (CTAN), or any of the comprehensive “blank” archive networks that have been set up for other programming languages and environments? If so, let’s make sure that we get those libraries into it. And if not, shouldn’t we ask ourselves, “Isn’t it time to put one together?”

As Mary [Holstege] said, there are not as many of us as there are Perl hackers in the world, so we need to make it as easy as possible to reuse and build on each other’s work.

Reducing complexity by delegation

Another way to reduce complexity is by delegation, by setting up a better process — a better workflow — that works reliably, so that you can just turn the crank on the workflow and have things work, and have it not require your full attention. One of the time-honored ways of learning better ways to make things work is by looking over the shoulders of other people and learning from them. So we can often improve our skills by listening attentively to case studies.

Martin Latterner and his co-authors illustrated that point beautifully with their paper on the re-engineering of the XML conversion and normalization workflow at PubMed Central® [Latterner et al.]. Alan Bickel, Elisa Beshero-Bondar, and Tim Larson similarly showed how a lot of hard work and libraries can help us grapple with very difficult, complicated problems [Bickel, Beshero-Bondar, and Larson]. Fotini Koidaki and Katerina Tiktopoulou showed how much can be done by applying tools that already exist and using markup that is already specified and combining them into an intelligent workflow [Koidaki and Tiktopoulou]. I note in passing that until the TEI provided a container labeled stand-off, as far as I know very few people ever actually used the TEI’s facilities for stand-off markup and stand-off links; I’ve heard a number of talks that claimed they didn’t exist. It is amazing how much mileage we can get just by providing a named location and saying, “You can put that stuff here.”

Now, when the tools that we’re using to build the workflow are on the new side, the experience of using them to build a workflow can be challenging, as described by Geert Bormans the other day in an inspiring talk [Bormans]. His choice of doing his most complex cases first is remarkably brave; when it works, I have seen that approach be remarkably effective, but it sure takes a lot of nerve. My hat is off to Geert.

Sometimes it’s just one particular step in the workflow that requires special attention. Sometimes we can provide library functions for it that hide all of the details, but sometimes a lot of the messy details must remain visible due to the nature of the problem. The techniques described by David Birnbaum and Charlie Taylor this afternoon for calculating the length of text in SVG [Birnbaum and Taylor] — this is certainly a problem I have faced and one for which I never found a solution half as good as theirs — those techniques can hardly hide all the messiness of the problem from the user, but they can at least help us understand where and how the messiness arises, and that in turn can help prepare us to deal with it effectively and as calmly as our circumstances allow.

One of the differences between reliable, mature tools and unreliable, immature tools is that the reliable tools have been exposed to more edge cases and more complex situations, and more of their bugs and imperfections have been removed. To get our code to that point, it’s helpful to stress test our tools, and one way to do that is illustrated by Joel Kalvesmaki’s 1% to 100% test cases [Kalvesmaki].

Another way is illustrated by Tony Graham’s take-no-prisoners approach to replicating the typography of the first edition of Moby Dick [Graham], in which he effectively says to his formatting engine: “I do not wish to discuss with you whether you like this typography or not; I wish you, my tool, to lay it out that way.”

And whenever we work with tools, it is useful to know when there are alternatives and what alternatives there are; one of the things I like best about the work Norm Walsh and I reported on in the second dress rehearsal of Balisage on Sunday is that there are viable alternatives to doing things in JavaScript [Walsh and Sperberg-McQueen]. (Very helpful for those of us who prefer not to have to wash our hands every time we code.)

Better markup as a tool for dealing with complexity

One technique for reducing complexity that we shouldn’t lose sight of is descriptive markup. By making the digital representation of our information match more closely our understanding of its nature, it reduces the likelihood of an impedance mismatch between our goals and our tools. It provides an opportunity for separation of concerns. Identifying the boundaries of elements, identifying the types of elements, and identifying what we want to do with those elements when we process the document are all separate concerns.

It’s also an opportunity for delegation. We avoid the task, when writing the document, of deciding when a particular word needs to be italic and when it doesn’t, by dividing the tasks, delegating to the author the task of saying what the word is, delegating to the designer the task of deciding how it should be styled, and delegating to the formatting engine, the task of actually styling it when the document is laid out.

Peter Flynn gave us a master class in the analysis of requirements, in devising markup to satisfy them, and in ways to provide the required functionality. It impressed me that he used such extremely minimal tools, up to and including the task of providing kinds of re-sequencing of information that I would, before yesterday, have said were beyond any normal capacity of CSS [Flynn].

Patrick Durusau’s proposal for change markup takes a radical approach to a vexing problem that faces many of us [Durusau]; now, since I worked for years with change markup that is inline, I will need to think for a long time to decide whether his form of stand-off change markup is provocative (which is what I expect from Patrick) or merely provoking or brilliant. Certainly, it is worth careful thought, and I plan to give it careful thought.

Hugh Cayless, Thibault Clérice, and Jonathan Robie also described a proposal for improved markup, to provide improved functionality [Cayless, Clérice, and Robie], and they provide an exception to the general rule I suggested earlier. To get a little bit more functionality, they provide less markup — more compact markup. It makes you think, doesn’t it?

Sometimes before you can build a tool or make a good design decision, you feel the need for more information — for more data. Gerrit Imsieke and Nina Linn Reinhardt presented a tour de force of sheer doggedness [Imsieke and Reinhardt]. When you’re planning for a subset exercise by examining documents in numbers that require six digits or more to write down, my hat is off to you. I wish I thought I were ever as thorough as that.

Now, working towards semantic markup is not always simple or straight-forward, and it doesn’t always work out the way we might have hoped. Especially its reception by other people may not always be what we had hoped. Simon St.Laurent showed us what a long and twisting road we have traveled in that regard over the last twenty-five years [St.Laurent]. And as he said, it is a bittersweet story.

When complexity comes out of left field

It’s also bittersweet sometimes — sometimes it’s just infuriating — when we get blind-sided by things that should be simple in theory but aren’t simple in practice. Because we need to remember hiding complexity only helps if what we’re hiding is the complexity of a solution to a problem. It doesn’t help if what we’re hiding is the existence of the problem.

Tommie Usdin started the conference with a firm reminder that using descriptive markup is not, in the current state of the universe, an invest-and-forget kind of operation [Usdin]. Software-independent descriptive markup eliminates some kinds of data obsolescence, but it does not in itself guarantee data longevity. To achieve data longevity, active work is needed.

Ari Nordström illustrated this point vividly with his account of work on using topic-based techniques with SGML-encoded data [Nordström]. The encoding designed years ago turns out not to be a clear, crystalline representation of the absolute information content, but also to contain a generous admixture of assumptions about the surrounding technologies and the way they will work or should work. To take the simple example of image embedding, there was a time when lots of people would feel it more natural to have declaration before use, so the idea of declaring lists of entity declarations, before using them in the document by inserting entity references, would feel utterly natural. Since the popularity of the World Wide Web, of course, most of us are more used now to being able to refer to any URI without any prior declaration. And those will represent two different styles of markup and require two different styles of processing. We can still read the old data; it’s just that current tools aren’t set up to deal with data encoded that way because nobody does it that way any more.

Similarly, some people want declarations before use because it makes it at least theoretically possible that a system can read the declarations and say, “You know, I’m not successful in retrieving these entities; I’m going to fail now and not burn a lot of CPU time performing work that cannot be completed.” Early failure is very important in some contexts. But again, some people want it; it is now perhaps more common for people to say, “Well, I’d really rather that the system try to muddle through, because frankly I may stop paging through the document before I reach that figure anyway.” So, in that case it really doesn’t matter whether you retrieve the image entity or not.

Now, some of the problems identified by Tommie Usdin and Ari Nordström are not peculiar to descriptive markup. Digital resources of all kinds require much more active interventions by libraries and archives than paper materials normally do. Some of the best preserved collections of older books in Europe are in out-of-the-way libraries that lacked funding, where the stacks were unheated and sometimes the windows didn’t even have glass; as long as the windows were far enough away that the snow could not blow in and fall on the books, the books were just fine in the cold. Actually, it turns out that is one of the best ways to store books for longevity. It’s also good if they’re not disturbed for years on end because nobody actually goes to this library because it’s a small, out-of-the-way library.

Reducing complexity by better abstractions

If something turns out to be hard in practice although in theory it should be easy, then really what those of us interested in theory and practice ought to do is rethink our theory. That is hard, but it’s an important task. Sometimes, our best efforts to be guided by our understanding of the problem can misfire. Josh Lubell gave us a concrete example in his talk about how the model made him do it [Lubell01]; the implication Josh discussed illustrates an important point about markup and about IT design in general, and also, it seems to me, about ontology though you will have to ask Allen Renear about that.

There is often more than one way to organize the information in a system and very often, more than one way to identify the entities that need to be represented by objects or things or XML elements in the system. Case in point: Josh created a tool for tailoring security baselines, which had no single — no unitary — representation of a baseline anywhere in its XML representations. Now, that design did not lead to any logical contradiction; the only symptom of something amiss was that some operations that the user needed to perform were more awkward than Josh would have liked.

Robin La Fontaine helped expand our minds in a similar way by his discourse on the fact that fixing a sequence for elements can be viewed as a signal that the sequence of elements carries no information and does not matter [La Fontaine]. And conversely, that leaving the sequence free is a signal that the order can carry information and therefore does matter. And he went further; he tied the issue of significant or insignificant variation in sequence to the concrete application of document comparison, and in so doing, he made me aware of aspects of the issue and complexities in the issue that otherwise had never occurred to me. I’m grateful to him for that.
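The consequence for document comparison can be sketched in a few lines. This is not Robin La Fontaine's method and not the algorithm of any comparison product; it is a minimal illustration, using Python's standard xml.etree.ElementTree module, of the idea that whether two elements count as the same depends on whether the order of their children is taken to carry information. The function names and the order_matters flag are assumptions of this sketch; in practice that decision would have to come from the schema or from the person who knows the vocabulary.

```python
import xml.etree.ElementTree as ET
from collections import Counter


def child_keys(element):
    """Summarize each child as (tag, trimmed text); a deliberately crude fingerprint."""
    return [(child.tag, (child.text or "").strip()) for child in element]


def same_children(left, right, order_matters):
    """Compare the children of two elements, with or without regard to sequence."""
    if order_matters:
        # Sequence carries information: compare position by position.
        return child_keys(left) == child_keys(right)
    # Sequence carries no information: compare as multisets.
    return Counter(child_keys(left)) == Counter(child_keys(right))


if __name__ == "__main__":
    a = ET.fromstring("<name><given>Ada</given><family>Lovelace</family></name>")
    b = ET.fromstring("<name><family>Lovelace</family><given>Ada</given></name>")
    print("order ignored:", same_children(a, b, order_matters=False))
    print("order significant:", same_children(a, b, order_matters=True))
```

The same pair of documents is reported as unchanged under one reading and as changed under the other, which is why a comparison tool needs to be told, or needs to infer, which reading applies.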

Steven Pemberton showed us that if you think hard enough about it, you will find that what at first glance looked like quite different problems (constraints on atomic values and constraints on document structure) can in fact be reduced to a common foundation [Pemberton], which gives hope to those who appreciate the declarative power of XForms and hope that it can be given even more declarative power.

One of the reasons that philosophers sometimes have the reputation of being gadflies is their propensity for trying to rethink things that the rest of us do not regard as being in particular need of rethinking. This effort is particularly fraught when it’s not clear that the understanding that satisfies the rest of us was in fact the result of much serious thinking in the first place. Is it possible to rethink something that hadn’t been thought out properly first?

Allen Renear and Bonnie Mak have posed a difficult question by asking what is going on in presentational markup [Renear and Mak]. One of the challenges of the question, it seems to me, is perhaps that it’s not entirely clear what counts as a solution, so that in order to answer the question, you both have to answer the question and you have to invent the rules of a game which will make your answer count as a satisfactory answer to the question.

I mean no disrespect to Allen and Bonnie, or to any of the other authors who presented their very good work here, when I say that the rethinking that I think may have the greatest implications for document representation and document processing is the rethinking described by Elli Bleeker, Ronald Haentjens Dekker, and Bram Buitendijk in their paper about thinking about text in trees and graphs [Bleeker, Haentjens Dekker, and Buitendijk]. One of the most important lessons I think we can learn from their work on Text-as-graph, TagML, and Alexandria is that as Elli Bleeker said, we should not take the electronic representation of our documents for granted. We need to bear in mind that they invariably involve choices which could be made in other ways and that choices that seem perfectly reasonable at the time can, as Josh Lubell’s paper illustrated, make it harder to do some things with our data and thus impose an unexpected tax on those who need to do those things. That is potentially a problem in any application domain, but it is perhaps particularly intolerable in scholarly research.

So we have a sort of contradiction or complementarity: one way to reduce complexity in our lives is to make certain things routine — make them automatic so they require no thought from us; we can do them mindlessly. But another way to reduce complexity, equally essential, is to remain mindful of our choices and to remain open to learning from experience and making other choices.

Thank you all for making this a Balisage with such excellent lessons about how to achieve both routine reliability and mindful contemplation of the information we work with.

References

[Bickel, Beshero-Bondar, and Larson] Bickel, Alan Edward, Elisa E. Beshero-Bondar and Tim Larson. A Linked-Data Method to Organize an XML Database for Mathematics Education. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Beshero-Bondar01.

[Birnbaum and Taylor] Birnbaum, David J., and Charlie Taylor. How Long is My SVG <text> Element? Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Birnbaum01.

[Bleeker, Haentjens Dekker, and Buitendijk] Bleeker, Elli, Ronald Haentjens Dekker and Bram Buitendijk. Hyper, Multi, or Single? Thinking about Text in Graphs and Trees. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Bleeker01.

[Bormans] Bormans, Geert. The Adventures of an Early Adopter. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Bormans01.

[Brooks 1975] Brooks, Frederick P., Jr. The Mythical Man-Month: Essays on Software Engineering. Reading, MA: Addison-Wesley, 1975. Reprinted 1982 (corrections), 1995 (anniversary edition).

[Cayless, Clérice, and Robie] Cayless, Hugh, Thibault Clérice and Jonathan Robie. Introducing Citation Structures. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Cayless01.

[Durusau] Durusau, Patrick. Deferred Well-Formedness and Validity: Change.log, Collaboration, Immutability, XML, UUIDs. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Durusau01.

[Flynn] Flynn, Peter. Printing Recipes: Continuing Adventures in XML and CSS for Recipe Data. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Flynn01.

[Frost 1914] Frost, Robert. Mending Wall. In North of Boston. London: David Nutt; New York: Holt, 1914. Available at The Poetry Foundation, https://www.poetryfoundation.org/poems/44266/mending-wall.

[Graham] Graham, Tony. Call Me Pastichemael: Recreating the Moby-Dick First Edition. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Graham01.

[Gryk] Gryk, Michael R. Deconstructing the STAR File Format. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Gryk01.

[Holstege] Holstege, Mary. Fast Bulk String Matching. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Holstege01.

[Imsieke and Reinhardt] Imsieke, Gerrit, and Nina Linn Reinhardt. JATS Blue Lite: The Quest for a Compact Consensus Customization. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Imsieke01.

[Jenstad and El Hajj] Jenstad, Janelle, and Tracey El Hajj. Converting an SGML/XML Hybrid to TEI-XML: The Case of the Internet Shakespeare Editions. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Jenstad01.

[Kalvesmaki] Kalvesmaki, Joel. String Comparison in XSLT with tan:diff(). Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Kalvesmaki01.

[Kay] Kay, Michael. ZenoString: A Data Structure for Processing XML Strings. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Kay01.

[Kira et al. 2019] Kira, Ibrahim, et al. Cumulative Stressors and Traumas and Suicide: A Non-Linear Cusp Dynamic Systems Model. Psychology, vol. 10(15) (2019). doi:https://doi.org/10.4236/psych.2019.1015128.

[Koidaki and Tiktopoulou] Koidaki, Fotini, and Katerina Tiktopoulou. Encoding Semantic Relationships in Literary Texts: A Methodological Proposal for Linking Networked Entities into Semantic Relations. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Koidaki01.

[La Fontaine] La Fontaine, Robin. Element Order Is Always Important in XML, Except When It Isn’t. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.LaFontaine01.

[Latterner et al.] Latterner, Martin, Dax Bamberger, Kelly Peters and Jeffrey D. Beck. Attempts to Modernize XML Conversion at PubMed Central. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Latterner01.

[Lenz] Lenz, Evan. Visualizing Musical Transformations. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Lenz01.

[Liuzzo] Liuzzo, Pietro Maria. Serving IIIF and DTS APIs Specifications from TEI Data via XQuery with Support from a SPARQL Endpoint. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Liuzzo01.

[Lubell01] Lubell, Joshua. The Model Made Me Do It! A Cautionary Tale from a Security Control Baseline Tool Developer. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Lubell01.

[Nordström] Nordström, Ari. Topic-based SGML? Really? Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Nordstrom01.

[Pemberton] Pemberton, Steven. Structural Invariants in XForms. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Pemberton01.

[Piez] Piez, Wendell. Client-side XSLT, Validation and Data Security. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Piez01.

[Quin] Quin, Liam. CSS Within: An Application of the Principle of Locality of Reference to CSS and XSLT. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Quin01.

[Renear and Mak] Renear, Allen H., and Bonnie Mak. Presentational Markup: What’s Going On? Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Renear01.

[Robie] Robie, Jonathan. Scriptural Markup in the Bible Translation Community. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Robie01.

[Sperberg-McQueen] Sperberg-McQueen, C. M. Ariadne’s Thread: A Design for a User-facing Query Language for Texts and Documents. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Sperberg-McQueen01.

[St.Laurent] St.Laurent, Simon. Semantics and the Web: An Awkward History. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.StLaurent01.

[Usdin] Usdin, B. Tommie. The (unspoken) XML Gotcha. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Usdin01.

[Walsh and Sperberg-McQueen] Walsh, Norman, and C. M. Sperberg-McQueen. Interactivity Three Ways. Presented at Balisage: The Markup Conference 2021, Washington, DC, August 2 - 6, 2021. In Proceedings of Balisage: The Markup Conference 2021. Balisage Series on Markup Technologies, vol. 26 (2021). doi:https://doi.org/10.4242/BalisageVol26.Walsh01.

[1] The author is grateful to Tonya Gaylord for undertaking the task of transcribing these remarks. They have been copy edited to make them a little easier to read but otherwise are as presented at the closing of the conference.

[2] The image is from Kira et al. 2019 and is used under the Creative Commons Attribution 4.0 International license.