Introduction

Today, literary criticism frequently focuses on the quantitative aspect of modern literary studies, pointing to the primacy of big data over the qualitative and interpretive goals of humanities research. However, computer-aided text analysis methods have shown that the line between the quantitative and the qualitative is often blurred, because determining even the simplest quantitative feature requires interpretative skills and deep content knowledge. Likewise, modeling semantic relationships in literary texts is a challenging endeavour because it requires not only technical expertise but also in-depth knowledge of the subject. Semantic relationships in literary texts often have neither a clear nor a standard linguistic structure; they are usually highly implicit and they overlap each other. As a result, it is difficult not only to design patterns of semantic relations and define their components, but also to identify them in the literary text and encode them without any loss of information.

This paper examines issues related to modeling and encoding semantic relationships through the case of the ECARLE[1] project annotation campaign, which aimed to generate a dataset for training a classifier for Relation Extraction (RE) from literary texts. The technique developed within the framework of the ECARLE project was specifically designed to extract cultural knowledge from 19th century Greek polytonic texts related to ancient, medieval and modern Greek literature. The overall project objective was to develop an integrated Software as a Service (SaaS) that will enable users to effortlessly digitize Greek polytonic humanities texts and enrich their content with metadata about the logical structure of the documents, the publishing details, as well as the included named entities and semantic relations. In simplified terms, users will be able to upload scanned images of printed sources and receive for each of them an XML file in which the bibliographic description, the content structure, and the named entities and their relations have been labeled. All the information extracted and captured in XML files will be encoded according to the international markup standard of the Text Encoding Initiative (TEI) consortium. However, the user will be able to select between various export formats, since the extracted information can also be exported to CSV, RDF, XLS, METS etc.

To this end the annotation campaign was specifically designed to create the human-labeled dataset on which the training and the evaluation of the Named Entity and Relation Extraction systems were subsequently based.[2] Since the main objective of the project is the digitization of Greek 19th century humanities documents as well as the discovery of cultural and literature related knowledge, below we will discuss the technique developed in order to achieve the most useful dataset in terms of semantic content and data capturing, as well as the various parameters that had to be considered and defined. In particular, we will discuss the research background, the construction of the corpus that served as the pool of textual data, some challenging issues related to modeling and encoding, the proposed methodology of combined techniques, the generated dataset and the possible outcomes. The generated dataset as well as the materials for parsing and processing the encoded texts (such as the Python script and the documentation) are available on GitHub.[3]

Related research

There is a considerable amount of literature on multi-layered XML annotations and so far, during the 23 years of XML technology, several multi-hierarchical markup formalisms have been reported as XML alternatives for handling overlapping cases (e.g. CONCUR, LMNL, MECS). However, the OHCO XML model has prevailed over the multi-hierarchical markup languages, and now the most popular alternative to the XML standoff mechanism seems to be graph-based formalisms usually based on the RDF data model (GODDAGs, EARMARK, TEILIX etc). Even though RDF is probably the most convenient data model to capture and integrate multiple levels of annotations for the same content, it is still a data interchange format, in contrast to XML, which can more easily be used as a document interchange format. This means that XML seems to be the most suitable format for encoding the primary data in the humanities and, in particular, in literary studies, since in these fields encoding mostly concerns books or other kinds of textual documents. RDF, instead, could represent the content of the standoff XML annotations, allowing flexible data modeling compositions. However, a quite interesting graph-based annotation formalism was published in 2018 under the name Text-As-Graph Markup Language (TAGML), focusing mainly, but not exclusively, on manuscript research. TAGML is built upon the LMNL[4] markup model, uses the cyclic-graph theory and introduces the text as a multi-layered, non-linear construct.[5] Even though TAGML opens up new perspectives for the representation of textual data, it lacks editing and processing tools, while querying and interchanging becomes even more difficult.[6]

In the Humanities and especially in literary studies the TEI tagset seems to be the most preferred as well as the most suitable XML-based language for encoding, editing and processing textual documents. The TEI community keeps working on multi-layered annotations[7] and enriching the <standoff> container, which, among others, may include interpretative annotations, feature structures (e.g. syntactic structure, grammatical structure etc), groups of links and lists of persons, locations, events or relations.

The LIFT project, published by Francesca Giovannetti in 2019, uses the TEI tagset to encode semantic relations in literary texts. The project does not make use of the <standoff> container. Instead, it establishes links among elements (persons, locations, events) by transcribing them into lists inside the <teiHeader> section that is mainly intended to serve as the resource registry. It should be noted though that the intention of the LIFT project is to demonstrate the flexibility that object-oriented programming offers in manipulating and parsing TEI/XML files, as it provides a Python 2.7 script that uses the lxml and RDFLib libraries to convert TEI encoded relations into RDF triples. The LIFT project also invites users to choose between a set of ontologies that may meet their relation mapping needs (AgRelOn, CIDOC, DCMI, OWL, PRO etc). However, none of the ontologies listed is designed to represent literary knowledge or the knowledge inherent in humanities texts, which is completely understandable, since there seems to be no model available for mapping the types and the components of semantic relations occurring in humanities or literary texts. For example, the AgRelOn model, which defines private, social or working relations among persons, is reported as incomplete and data pertinent, while the well known CIDOC-CRM is focused on museums and other kinds of cultural institutions and is highly generic and holistic. DCMI is a model for libraries designed for bibliographic description, whereas PRO is a model written in OWL in order to describe concepts involved in the publishing process. OWL, on the other hand, is a family of languages which contains a variety of syntaxes (OWL abstract, XML, RDF) and specifications that can be used to describe taxonomies, relations and properties of web resources. A domain specific ontology is something that is still missing from literary studies.

In much the same way, the ECARLE annotation campaign used the TEI tagset in order to extract relations from a corpus of polytonic Greek texts related to literary studies. The relationships were extracted in standoff annotations in the form of binary links pointing to labeled entities of different types (e.g. Person + Title). The linkages between entities were designed on the basis of the RE training needs and constraints, and since there is no domain specific ontology to model literary relations, not even a combination of the above mentioned ontologies could have described the extracted data with the desired accuracy. The annotated TEI documents were finally parsed with Python 3.7 (BeautifulSoup, Pandas etc.), which allowed groupings of relationships that formed bigger networks based on annotated years, persons, titles and locations. The parsing produced lists of named entities and IDs as well as XLS, CSV and RDF data representations of the relations.

Corpus design

When designing our annotation campaign we had to consider the possible usage scenarios of the SaaS we were aiming at, as well as the features and constraints of the Relation Extraction training process. The idea behind our supervised machine learning (ML) strategy was to build a corpus of literary(-related) texts that would facilitate all of the ML tasks of our project. For example, the scanned pages of the corpus would serve as training and testing input, their human-corrected content would be used as the ground truth data for the Greek-polytonic OCR pipeline, while their annotated content would serve as the main training dataset, available also for the evaluation of the NER and RE systems.

The corpus building criteria established for this purpose were related to some of the key features of the corpus, namely i) the language, ii) the font-family, iii) the publication date, iv) the textual type (narrative, non-fictional, poetry, dictionary etc) and v) the structure. The first three criteria were defined in such a way as to facilitate the development of an OCR system capable of digitizing Greek polytonic texts printed during the 19th century. The other two criteria (the textual type and the document structure) were defined to ensure, as far as possible, the adequacy of training data for both layout analysis and information extraction systems. In fact, we managed to retrain the grc-tesseract model and further improve it by deploying a text-line detection algorithm that enhanced the accuracy of character recognition (Tzogka et al. 2021). However, enhancing layout recognition results required the textual structural features to be annotated not only in the TEI/XML files but also on the scanned image files, producing enough training data for every structural feature we meant to be recognised. Therefore, the type of the text was an important selection criterion, because different text types can differ in their textual structure as well as in the named entities they include. For example, factual text types, such as dictionaries or essays, and literary text types, such as poems and narrative, not to mention the numerous sub-types of each type, may include, and often do, different quantities of named entities and relations, and their content has completely different structures. Taking into account that in our case a considerable amount of annotated named entities and semantic relations was required, we decided to focus on prose texts and particularly on essays related to literary criticism, as well as on correspondences and memoirs that provided us with the desired structural diversity.

Issues

Human labeling is a time-consuming and complicated process, which includes many challenges that one comes to realize only through practice. Our annotation campaign was carried out by a research team of 5 pre-trained encoders, who were provided with extra training and project-specific guidelines as well as with encoded samples. The annotation campaign was launched with a pilot phase of labeling four small texts, during which each text was assigned to two encoders, while a super-encoder (a sixth researcher) checked the well-formedness, the TEI-conformance, as well as the discrepancies between the encoded versions. Based on the evaluation of the pilot, we enriched our guidelines and samples, and we built xml:id inventories (xlsx) that we kept updating during labeling. Every printed document was assigned to two encoders with different roles, one of whom undertook the structural encoding, and the other the encoding of entities and relations. After both encoders had checked their annotated deliverable, a super-encoder verified the well-formedness and the TEI compliance. For the encoding process an XML editor was preferred, since the code editing experience allowed accurate supervision of the markup and efficient error handling. Leaving aside some other crucial issues (e.g. those related to human resource management), we will focus on the challenges of converting unstructured plain texts into a structured set of information that includes annotated data regarding the logical structure of the document, the cultural named entities and their semantic relations.

Since one of the main objectives of our project was to develop a service for the automated extraction of relationships of cultural content from Greek literary texts, we had to comply with the entity and relation constraints imposed by the ML model. In fact, the entity constraints concerned (a) the number and the type of the participating entities, while the relation constraints concerned (b) the distance between them. In particular, a relation was considered to be a valid training instance if it consisted of only two (2) entities, each of which belonged to a different entity type, and both of which had been detected within no more than three consecutive sentences. Taking these constraints into account, we specified the types of relations and their components that could be annotated and would generate a sufficient training dataset. However, in designing the annotation campaign we had to overcome difficulties related to entity ambiguities and overlapping relations.
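The three constraints stated above (exactly two entities, different entity types, at most three consecutive sentences) can be expressed as a small validity check. The following is only a minimal sketch: the `Mention` class, its field names and the function name are hypothetical and are not taken from the ECARLE code base.

```python
from dataclasses import dataclass

# Both entities must fall within three consecutive sentences.
MAX_SENTENCE_SPAN = 3


@dataclass(frozen=True)
class Mention:
    """Hypothetical record of one annotated entity occurrence."""
    entity_id: str       # e.g. "MoréasJ" or "y1874"
    entity_type: str     # "person", "organization", "location", "date" or "title"
    sentence_index: int  # 0-based index of the sentence containing the mention


def is_valid_training_instance(mentions):
    """Return True if the mentions satisfy the three constraints."""
    if len(mentions) != 2:
        return False  # a relation must be binary
    first, second = mentions
    if first.entity_type == second.entity_type:
        return False  # the two entities must be of different types
    # Sentences i and i + 2 together span exactly three consecutive sentences.
    return abs(first.sentence_index - second.sentence_index) < MAX_SENTENCE_SPAN
```

For example, a person mentioned in sentence 4 and a title mentioned in sentence 6 form a valid pair, whereas the same title in sentence 7 would fall outside the window.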

Ambiguity Issue

When it comes to literary texts the entities of cultural meaning as well as their relations may be highly unclear, or suggestive, or most of the time fictional. For example, entity types like persons, titles of artworks or locations may appear in various written forms, namely as nicknames, allegories and abbreviations. Animal Farm, for instance, is a book title (Orwell, 1945), a socio-political symbol, a song title (Kinks, 1968), a TV film title (1999) and a reference to livestock, while 1984 is a numeral that at the same time stands for a date and a book title. It is also quite common for book titles to coincide with character names (e.g. Anna Karenina), to be shortened to a character’s name (e.g. The Tragedy of Hamlet, Prince of Denmark becomes Hamlet), or even to have a one-letter form.[8] So, to which category does the phrase The little prince belong? It could be either a book title or a reference to the well known fictional persona, or it could be neither of the two.

Our labeling strategy attempted to deal with ambiguity issues by producing more detailed encodings of the ambiguous types of entities and using the ID mechanism to record, track down and link entities. In the first place, therefore, we decided to encode every occurrence of the selected entities as long as they were written as named entities and directly expressed. This means, in particular, that we annotated abbreviated titles and names like N.Y., but ignored indirect and implicit references such as the city that never sleeps. Where needed, we described with attributes the entities’ distinctive features that allowed us to overcome the markup polysemy. For example, using the attribute-value mechanism we distinguished between real and fictional persons. Finally we agreed to exclude one type of entity, namely historic events, since it was located in highly ambiguous linguistic contexts, generalized phrases or words that may have no capital characters and often consist just of common nouns. Thus, the category of historical events has been omitted from the current annotation in order to reduce the noise in the labeled data and benefit the training of the NER classifier.

Given the ambiguity constraints, we defined two thematic categories of relations about cultural heritage, namely (a) artworks and (b) state institutions. Moreover, each of these categories was divided into specific subcategories relative to the dating, the participants and the geography of the relation. The core components of the relations we defined are five different types of named entities, that is i) person, ii) organization, iii) location, iv) date and v) title.

Table I

Semantic Relations of Cultural Content

| Relation themes | Relation categories | Named entities |
|---|---|---|
| Artworks | Artwork creator | Person - Title |
| Artworks | Artwork hero | Person - Title |
| Artworks | Artwork dating | Title - Date |
| State institutions | Inst-org geography | Organization - Location |
| State institutions | Inst-org worker | Organization - Person |
| State institutions | Inst-org dating | Organization - Date |

The elements belonging to the five entity types were assigned a single unique ID, which, no matter the entity type, allowed us to generate inventories of titles, persons, organizations, and even dates. The IDs were generated according to the rules we had laid down in order to enable labelers to supervise the already generated IDs, so as to prevent homonymy. In fact we came up with two complementary ways that enabled us to avoid creating several IDs for the same named entity or vice versa. More precisely, we have placed the letter t at the beginning of the titles’ IDs and the letter y at the beginning of the years’ IDs. For instance, the y1984 ID represents the year while the t1984 ID stands for the book title whether it is written as Nineteen Eighty-Four or as 1984. Likewise, the IDs t1984 and t1Q84 refer to two different named entities that correspond to two different books. In this way, we have been able to produce more indicative IDs and, therefore, more detailed annotations, which allowed us to prevent confusion as well as misconnection between entities. Thus, two apparently similar entities, whether or not they are related, were now assigned distinctive IDs, which could be linked together. With this in mind, we created xls catalogues where we logged every ID we produced, so that we could avoid assigning more than one ID to the same entity or vice versa. In addition, we associated the persons’ and the locations’ entities with their VIAF ID, where it was available, in order to broaden the scope of use for our data, and open up opportunities for interlinking with cross-lingual data.
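The prefixing rule and the role of the ID catalogues can be illustrated with a short sketch. It assumes a simple in-memory registry rather than the xls catalogues used in the campaign; the class name, method name and the idea of passing a "base" ID are hypothetical choices made for illustration only.

```python
class IdInventory:
    """Hypothetical registry: one xml:id per entity and one entity per xml:id."""

    # Prefixes described in the text: "t" for titles, "y" for years.
    # Other entity types carry no prefix in this sketch.
    PREFIXES = {"title": "t", "date": "y"}

    def __init__(self):
        self._by_id = {}      # xml:id -> (entity_type, canonical_name)
        self._by_entity = {}  # (entity_type, canonical_name) -> xml:id

    def register(self, entity_type, canonical_name, base_id):
        """Return the xml:id for an entity, creating it on first use."""
        key = (entity_type, canonical_name)
        if key in self._by_entity:
            # The entity is already known: reuse its ID instead of minting a new one.
            return self._by_entity[key]
        xml_id = self.PREFIXES.get(entity_type, "") + base_id
        if xml_id in self._by_id:
            # The ID is already taken by a different entity (homonymy).
            raise ValueError(f"{xml_id!r} is already assigned to {self._by_id[xml_id]}")
        self._by_id[xml_id] = key
        self._by_entity[key] = xml_id
        return xml_id
```

Registering the year 1984 and the title Nineteen Eighty-Four with the same base `"1984"` yields `y1984` and `t1984` respectively, while an attempt to give `t1984` to a different book raises an error that the labeler has to resolve.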

Overlapping problem

Although designing an entity-relationship model is a challenging relational and multifocal task, the major challenge of our project proved to be the annotation for training data, for it had to meet certain conditions and, at the same time, it had to produce accurate and flexible representations of our data without missing any information. Taking into account the parameters and constraints posed by the RE training technique that was used, in order for a relation to be considered a valid training sample, it had to consist of only two objects of different entity types that must have been detected within no more than three consecutive sentences. As a consequence the direct annotation of the textual space that contains a relation by marking up its boundaries was not an option.

Therefore, in numerous cases, relations emerged entangled and highly complex. In

these cases, it was quite common for two or more relations to overlap each other or

to consist of more than two entities. Relations of more than two entities could of

course be divided into smaller pairs of entities that comply with the rule of two

components. This solution, however, can intensify the overlapping problem, because

the relations’ components would have been even more entangled to each other.

For instance, if we had a passage stating multiple author names, titles and dates,

we should split it by pairs of two, where we had to restate the relation for every

written version of a name. However those bipolar

fragments of texts

not only overlap each other, but they are more difficult to parse and group into

semantic relations. The types of overlapping relations can be presented in two

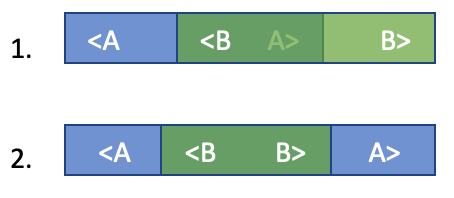

different schematic representations. As shown in the figure below the second

relation may either start inside the previous one and end after it (case 1), or it

may nest in it perfectly (case 2).

Figure 1: Kinds of overlapping relations

Although the second kind of the above relations may seem a well-formed XML structure, it is, like the first one, perceived as an overlapping case and both are equally problematic. In the first case we cannot get a valid XML tagging, while in the second case one relation dominates the other in a parent-child hierarchy. It is also a matter of data quality, as both kinds contain a lot of noise because a tagged relation includes not only the related named entities but also their context, which may contain entities that are not involved in the relationship.
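The two overlapping cases can be told apart mechanically once each relation is reduced to the character span it would occupy if it were tagged inline. The sketch below assumes spans given as (start, end) offsets with an exclusive end; the function name and the labels it returns are illustrative only.

```python
def classify_spans(first, second):
    """Classify two (start, end) character spans, end exclusive.

    Returns "disjoint", "crossing" (case 1: the second relation starts inside
    the first and ends after it) or "nested" (case 2: one relation lies
    entirely inside the other).
    """
    # Order by start offset; on equal starts put the longer span first,
    # so that the second span can only be nested in or cross the first.
    (s1, e1), (s2, e2) = sorted([first, second], key=lambda span: (span[0], -span[1]))
    if s2 >= e1:
        return "disjoint"
    if e2 <= e1:
        return "nested"
    return "crossing"
```

Only the "crossing" case is impossible to express with inline XML elements, but, as argued above, the "nested" case is just as unsuitable for training because it imposes a hierarchy between two independent relations.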

Proposed methodology

Our encoding technique addressed the overlapping issues by using the standoff XML mechanism in a simple yet flexible way that allowed us to record more than 1055 relations in a corpus of 2,846 pages. The mechanism of standoff annotations proved to be quite appropriate, since it allows multi-hierarchical structures to be encoded in XML. In fact, the multiple levels of information are captured in different levels of annotations that are linked together. In the standoff representation, the concurrent structures may be kept separated in different XML files that are linked together, or they may be stored in a single XML file as separate sections. Among these options, we have chosen the second one, because keeping all the information stored in a single file is more flexible and more in line with the document-process logic of the SaaS we were aiming at. Since every XML file will contain a single book, it’s much more convenient to gather all the information extracted in a single XML file. As a result, these XML files, which are TEI-conformant documents, include not only the encoded content of the book (<text>), but also the relations extracted (<standoff>), as well as all the metadata about its bibliographic description and its digitization (<teiHeader>). The core structure of the produced XML file is illustrated in the figure below.

<TEI>
  <teiHeader>
    <!-- electronic resource description -->
  </teiHeader>
  <text>
    <!-- OCRed content of the document -->
    <front></front>
    <body></body>
    <back></back>
  </text>
  <standoff>
    <!-- encoding relations -->
  </standoff>
</TEI>

But what exactly does the standoff section include and how are the relations tagged in there since all the content of the digitized book is included in the <text> section? No content was transcribed in the standoff section. In fact, the relations were not actually tagged, but they have been declared as links inside the <standoff> container. Every link is an empty tag which establishes a binary relation between two different entities by targeting their IDs.

The ID assignment mechanism

The entities that had been observed to participate at least once in a relationship had been marked up and assigned with a single unique ID. Each ID was called on every occurrence of its signified thus bonding together all the different written versions of a single named entity. This ID pointing mechanism allowed us to generate inventories of recorded entities (persons, titles, locations) that enabled us to supervise the ID assignment, as well as to collect the variety of tokens referred to the extracted entities, and to provide NER with a training dataset. For example, the name of the poet Jean Moréas has been recorded in 8 different versions written in two languages (Γιάγκου Παπαδιαμαντοπούλου, Παπαδιαμαντόπουλον, Jean Moréas, Παπαδιαμαντοπούλου, Παπαδιαμαντόπουλος, Ἰωάννην Παπαδιαμαντόπουλον, Ζὰν Μωρεὰς, Μωρεάς).

<p>Εἰδικῶς εἰς τὰ ποιήματα τοῦ <persName type="real" xml:id="MoréasJ">Γιάγκου

Παπαδιαμαντοπούλου</persName> διεκρίνετο ἐνωρὶς πνοὴ γενναιοτέρας

πρωτοτυπίας, τάσις τις, ἀκόμη δειλὴ καὶ ἀμφίβολος νεωτερισμοῦ καὶ ποιά τις

ἐπιμέλεια περὶ τὴν τόνωσιν καὶ τὴν σμίλευσιν τοῦ στίχου. Βεβαίως εἰς τὸν ἔφηβον

<persName type="real" ref="#MoréasJ">Παπαδιαμαντόπουλον</persName>, τὸν

στιχουργὸν τῶν νεανικῶν ποιημάτων καὶ εἰς τὸν κατόπι νεκρὸν ψάλτην τῶν <title

xml:id="tTrigoneskeEchidne">«Τρυγόνων καὶ Ἐχιδνῶν»</title> δὲν διαφαίνεται

ἀκόμη ὁ <persName type="real" ref="#MoréasJ">Jean Moréas</persName>, ὁ ὥριμος

ποιητής, ὅστις ἠδυνήθη ἔπειτα νὰ διανοίξῃ νέους φωτεινοὺς ὁρίζοντας εἰς τέχνην

τόσον ἤδη προηγμένην καὶ νὰ προσθέσῃ νέας δάφνας εἰς τοὺς πλουσίους καὶ

ἀειθαλεῖς δαφνῶνάς της· ἀλλὰ προσπάθειά τις σφρίγους ζωογόνου σπαργᾷ ἔκτοτε

ἐντὸς τῶν ἑλληνικῶν στίχων του.</p>

<p>Διὰ νὰ λάβουν δὲ οἱ ἀκροαταί μου σαφεστέραν περὶ αὐτῶν ἰδέαν καὶ διὰ νὰ μορφώσουν

μᾶλλον συγκεκριμένην γνώμην, ἂς μοῦ ἐπιτραπῇ νὰ ἀναγνώσω τινὰς ἐξ αὐτῶν.</p>

<p>Τοὺς στίχους τούτους παραλαμβάνω ἔκ τινων ποιημάτων του δημοσιευθένττων εἰς μίαν

Ἀνθολογίαν, ἥτις ὑπὸ τὸν τίτλον <title xml:id="tParnassosΑ">«Παρνασσὸς»</title>

ἐξεδόθη ἐν ἔτει <date xml:id="y1874">1874</date> τῇ πρωτοβουλίᾳ καὶ ἐπιστασίᾳ

αὐτοῦ τοῦ Παπαδιαμαντοπούλου ἐνταῦθα, περιλαβοῦσαν δὲ μετὰ τῶν κυριωτέρων

ποιημάτων τῶν συγχρόνων ἐπιφανῶν ποιητῶν, καί τινων μάλιστα ἀνεκδότων, νομίζω, ἢ

δυσευρέτων ἔργων αὐτῶν, καὶ ποιητικὰ δοκίμια τῆς ἰδιαιτέρας ἡμῶν φιλικῆς ὁμάδος,

ἥτις τοιουτο<pb n="41"/><fw type="pageNum" place="top">—41—</fw>τρόπως ἐλάμβανεν

ἀφ’ ἑαυτῆς τὸ εἰσιτήριον διὰ τὴν ἀθανασίαν.</p>

<p>Ἰδοὺ ἕν ποίημα τοῦ <persName type="real" ref="#MoréasJ"

>Παπαδιαμαντοπούλου</persName>, ἐπιγραφόμενον <title xml:id="tProstinaidona"

>«Πρὸς τὴν ἀηδόνα»</title>·</p>

The standoff linking technique

When two named entities of a different type were observed to form a relation, at least once, within no more than three consecutive sentences, that relationship was recorded as a link between their IDs. Therefore, every relation was encoded as a single <link>, which, as illustrated in the figure below, is an empty element that bears all the information about the relation in attribute-value pairs. The @type attribute indicates the thematic category of the relation, while the @target attribute links the related entities by declaring their IDs. The standoff section, therefore, contains just empty links that represent the relations discovered inside the whole book starting from the title page. From the moment a relation has been identified and annotated in the standoff section, the link created is enough to represent any other occurrence of the same relation, since its unique IDs were recalled every time the entity was observed to participate in a relation.

<link type="artAuthor" target="#MoréasJ #tTrigoneskeEchidne"/>

<link type="pubDate" target="#tParnassosΑ #y1874"/>

<link type="artAuthor" target="#MoréasJ #tProstinaidona"/>

<link type="artAuthor" target="#MoréasJ #tOximon"/>

<link type="artAuthor" target="#Goethe #tWilhelmMeister"/>

<link type="artHero" target="#tWilhelmMeister #Mignon"/>

<link type="artAuthor" target="#Goethe #tFaust"/>

<link type="artAuthor" target="#XenosK #tFylla"/>

<link type="artAuthor" target="#VasileiadēsS #tEpeapteroenta"/>

<link type="artAuthor" target="#KabouroglouI #tPatrisneotis"/>

<link type="artAuthor" target="#PapouliasCha #tDakria"/>

<link type="artAuthor" target="#ManitakisN #tParthenon"/>

<link type="artAuthor" target="#tParthenon #PolitēsN"/>

<link type="workAt" target="#Parnassos #Rhoidēs"/>

<link type="artAuthor" target="#Rhoidēs #tPeritissygchellinikispoiiseos"/>

<link type="artAuthor" target="#Rhoidēs #tPeritisenElladikritikis"/>

<link type="artAuthor" target="#MoréasJ #tOligaiselidesRoidouVlachouEridos"/>

<link type="pubDate" target="#tOligaiselidesRoidouVlachouEridos #y1878"/>

<link type="artAuthor" target="#MoréasJ #tPapillion"/>

<link type="artAuthor" target="#MoréasJ #tSyrtes"/>

<link type="artAuthor" target="#MoréasJ #tStances"/>

<link type="artAuthor" target="#MoréasJ #tCantilènes"/>

<link type="artAuthor" target="#MoréasJ #tPélerinPassioné"/>

<link type="artAuthor" target="#MoréasJ #tIfigeneiaMor"/>

<link type="artAuthor" target="#DoyleAC #tFiveorangepips"/>

<link type="artAuthor" target="#DoyleAC #tNavaltreaty"/>

<link type="artAuthor" target="#WellsHG #tPeiratetisthalassis"/>

<link type="artAuthor" target="#WellsHG #tintheabyss"/>

<link type="artAuthor" target="#DoyleAC #tSilverblaze"/>

The binary links that represent the discovered relationships are components of a group-of-links element (<linkGrp>) contained inside the standoff section, but they have not been grouped any further because after evaluation we decided that they didn’t need to be further classified. This simple standoff linking mechanism proved to be effective in meeting the needs and the objectives of the annotation campaign. However, the available tagset allows more detailed descriptions and classification of the relations. But since there wasn’t further grouping, the <linkGrp> and <link> tags could easily be replaced by the <listRelation> and <relation> tags without any changes. In addition, it should be mentioned that the @target attribute could be assigned more than two values, thus linking more than two entities and allowing larger and more complex relations to be captured.
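The links described in this section can be read back with very little code. The sketch below uses Python's standard `xml.etree.ElementTree` module instead of BeautifulSoup so that it has no external dependencies; the sample fragment reuses three links from the listing above, and the function names are hypothetical. Because it splits @target on whitespace, it also accepts links that point to more than two entities.

```python
import xml.etree.ElementTree as ET

# A small standoff fragment; real files wrap this in a full TEI document.
SAMPLE = """
<standoff>
  <linkGrp>
    <link type="artAuthor" target="#Goethe #tWilhelmMeister"/>
    <link type="artHero" target="#tWilhelmMeister #Mignon"/>
    <link type="pubDate" target="#tOligaiselidesRoidouVlachouEridos #y1878"/>
  </linkGrp>
</standoff>
"""


def local_name(tag):
    """Strip an optional '{namespace}' prefix from an element tag."""
    return tag.rsplit("}", 1)[-1]


def read_links(xml_text):
    """Return a list of (relation_type, [entity ids]) tuples, one per <link>."""
    root = ET.fromstring(xml_text.strip())
    relations = []
    for element in root.iter():
        if local_name(element.tag) != "link":
            continue
        # Each pointer in @target has the form "#id"; drop the leading "#".
        ids = [pointer.lstrip("#") for pointer in element.get("target", "").split()]
        relations.append((element.get("type"), ids))
    return relations
```

Matching on the local element name is a deliberate simplification: it lets the same function work whether or not the document declares the TEI namespace.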

The Generated Dataset

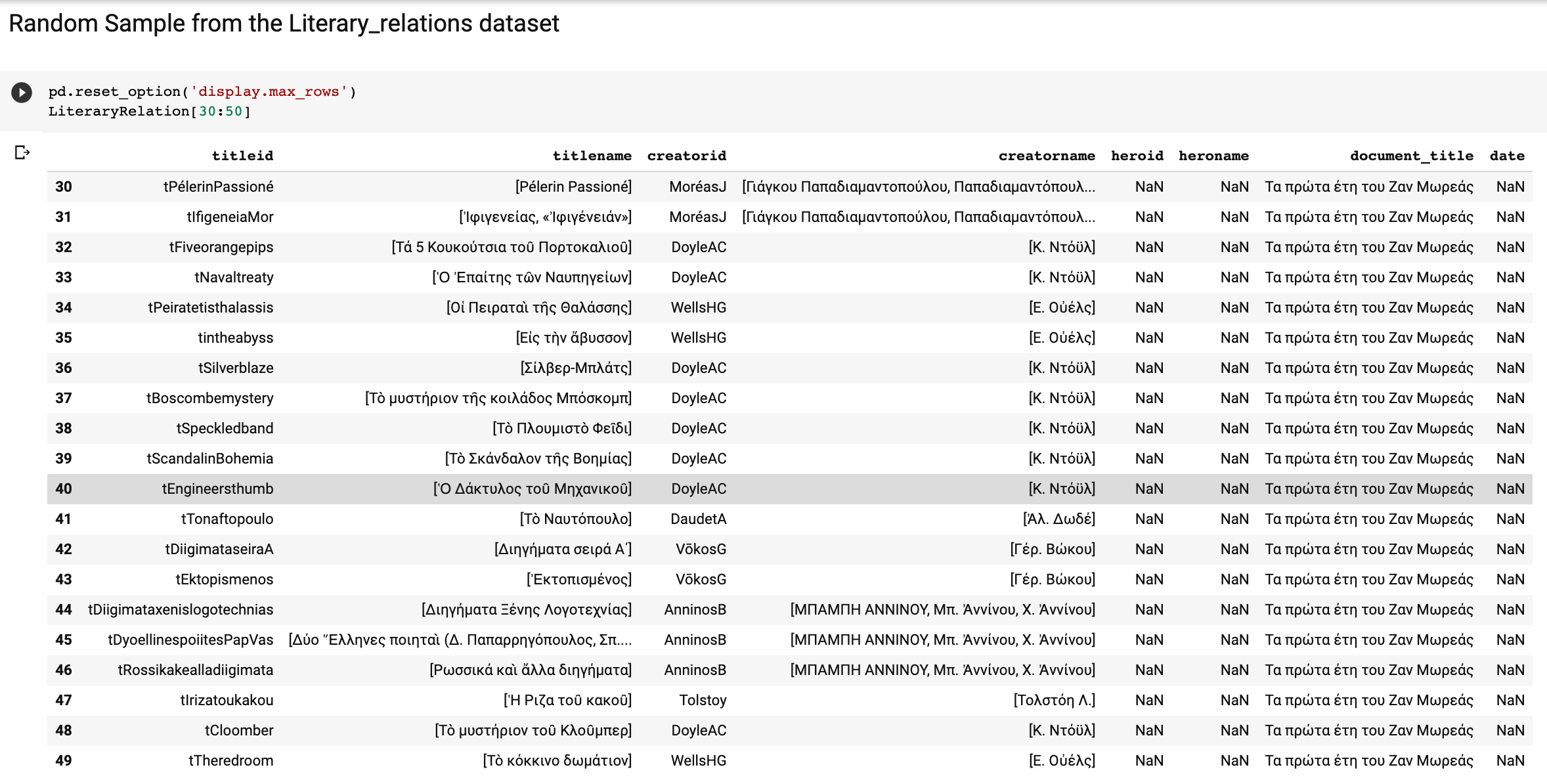

The encoded XML/TEI documents were parsed both with Python 3.7 and XSLT. Python proved to be more efficient, because our objective was to extract and process relationship-related data and not to convert the XML documents to other formats. Besides, Python enables coders to combine libraries that allow easier and more flexible data processing and visualization. In our case we used the BeautifulSoup library in order to parse the XML files and then used the Pandas library in order to manipulate and further exploit the extracted data. A data frame was created for each of the two thematic categories we defined and the information collected was organized in 5 columns. For example, as illustrated in the figure below, which depicts a random sample of the artworks_relations frame, the encoded relations were organized in columns such as title_id, titles_name (in listed tokens), creators_id, creator_name (in listed tokens), hero_id, hero_names (in listed tokens), the document_title and date. The conversion of the encoded information into frames per thematic category allowed us to reshape the data through further groupings and categorisations. In total, 1055 relationships were recorded, of which 908 were artwork-related and 147 referred to Greek and European organizations and institutions.
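The grouping of binary links into one record per artwork can be sketched without any external library. The example below is an illustration of the idea only, not the project's script: it uses plain dictionaries instead of Pandas, it covers only the ID columns (title_id, creators_id, hero_id, date), and the helper names as well as the "t" prefix test are assumptions made for this sketch.

```python
from collections import defaultdict

# (relation type, first id, second id), as read from the standoff links.
LINKS = [
    ("artAuthor", "Goethe", "tWilhelmMeister"),
    ("artHero", "tWilhelmMeister", "Mignon"),
    ("artAuthor", "Goethe", "tFaust"),
    ("artAuthor", "MoréasJ", "tOligaiselidesRoidouVlachouEridos"),
    ("pubDate", "tOligaiselidesRoidouVlachouEridos", "y1878"),
]

# Which output column each relation type feeds.
COLUMN_FOR_TYPE = {"artAuthor": "creators_id", "artHero": "hero_id", "pubDate": "date"}


def is_title(entity_id):
    # Titles carry the "t" prefix. This heuristic assumes that no other ID
    # starts with a lowercase "t", which holds for the examples used here.
    return entity_id.startswith("t")


def artworks_rows(links):
    """Group artwork links into one row (a dict) per title_id."""
    rows = defaultdict(lambda: {"creators_id": [], "hero_id": [], "date": []})
    for relation_type, first, second in links:
        column = COLUMN_FOR_TYPE.get(relation_type)
        if column is None:
            continue  # e.g. institution relations belong to the other frame
        # The title may be the first or the second target of the link.
        title, other = (first, second) if is_title(first) else (second, first)
        if column == "date" and other.startswith("y"):
            other = other[1:]  # "y1878" -> "1878"
        rows[title][column].append(other)
    return [{"title_id": title, **columns} for title, columns in rows.items()]
```

A list of dictionaries of this shape can be passed directly to `pandas.DataFrame(...)` when further reshaping or grouping is needed.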

Figure 2: A random fragment of the dataset

The 1055 human labeled relations served as the knowledge database for distant supervision, which revealed in total 1553 instances of the already encoded relations in the same corpus, and which formed the final training dataset.[9]
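The general idea of distant supervision, namely using already known relations to locate further instances of them in the text, can be sketched as follows. This is a simplified illustration under stated assumptions and not the pipeline used in the project: sentences are assumed to be already reduced to the sets of entity IDs they mention, the three-sentence window is taken from the constraints discussed earlier, and the function name is hypothetical.

```python
def find_instances(sentences, known_relations, window=3):
    """Locate co-occurrences of known relations within a sentence window.

    sentences: list of sets, each holding the entity IDs mentioned in one sentence.
    known_relations: iterable of (relation_type, id_a, id_b) tuples taken from
        the manual annotation.
    Returns a list of (relation, first_sentence, last_sentence) tuples, one for
    every pair of mentions that lies within `window` consecutive sentences.
    """
    instances = []
    for relation in known_relations:
        _, id_a, id_b = relation
        for i, ids_in_sentence in enumerate(sentences):
            if id_a not in ids_in_sentence:
                continue
            # Look at most window - 1 sentences backwards and forwards.
            start = max(0, i - window + 1)
            stop = min(len(sentences), i + window)
            for j in range(start, stop):
                if id_b in sentences[j]:
                    instances.append((relation, min(i, j), max(i, j)))
    return instances
```

Every returned tuple identifies a passage of at most three sentences that could serve as a training instance for the corresponding relation type.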

Conclusions

By encoding relations as links between entities we succeeded in producing a more realistic and accurate representation of named entities’ relations, because the relationship was represented as a linkage between IDs of named entities. In this case, there was no reason to transfer and transcribe content in the standoff section. The empty links targeting binary values worked perfectly as pointers, and since the standoff annotation technique was combined with the ID linking mechanism we also easily networked every entity with all its available written versions. The ID linking mechanism allowed us to match an entity with all its written instances not just in a single document but throughout the entire corpus of texts. This means that from the moment a relation had been annotated in the standoff section, this one annotation was enough to represent any other occurrence of the same relation. The valuable outcome of the proposed methodology was not just the generated list of the 1055 relations, but the inventory of entity tokens that enhanced our data. The extracted information was captured in two-dimensional frames in a way that allowed further analysis and knowledge extraction through data reshaping and grouping. For instance, we could observe the relations grouped by title or by author or even by year, merging together the entries that had the same title_id or the same creators_id or the same date.

Even though the proposed methodology of combined techniques served the goals of the ECARLE project perfectly, it still leaves room for improvement. The standoff annotations of relations could have been more detailed and further organized, they could include additional information about the ontology models, or they could even be linked with completely external hierarchies of data. Converting the annotated information to other formats (RDF, CSV etc.) is easy and less important, because several tools exist for this job. The real challenge, on the other hand, lies in capturing, processing and reshaping semantic information in a meaningful way, as well as in enriching our data with domain-specific ontologies.

In addition to the benefits for analysis and visualization, the relations enhanced by the interlinked entities formed a training dataset that was as complete as possible, in order to supply both the NER and the ER model with training and evaluation data. At the same time, the annotation methodology, as well as the generated dataset containing titles of artworks, names of creators and heroes, and dates, contributes to the automatic identification of artwork references, which remains of the highest importance. Needless to say, the proposed methodology and the generated dataset are available for reuse, dissemination and optimization, as they aim to contribute to the discovery and interconnection of semantic relationships in literary content.

Acknowledgements

We wish to acknowledge and thank our colleagues, namely Maria Georgoula, Valando Landrou, Markia Liapi, Thalia Miliou and Marianna Silivrili, who worked on the implementation of the method and the encoding of the selected texts.

We also acknowledge the support of this work by the project "ECARLE (Exploitation of Cultural Assets with computer-assisted Recognition, Labeling and meta-data Enrichment - cultural reserve Utilization using assisted identification, analysis, labeling and evidence enrichment)" which has been integrated with project code T1DGK- 05580 in the framework of the Action "RESEARCH - CREATE - INNOVATE", which is co-financed by National (EPANEK-NSRF 2014-2020) and Community resources (European Union, European Regional Development Fund).

References

[Putra 2019] Putra, Hadi Syah, Rahmad Mahendra and Fariz Darari. BudayaKB: Extraction of Cultural Heritage Entities from Heterogeneous Formats. In Proceedings of the 9th International Conference on Web Intelligence, Mining and Semantics (WIMS2019). Association for Computing Machinery, New York, NY, USA, Article 6, (2019). doi:https://doi.org/10.1145/3326467.3326487.

[Bleeker 2020] Bleeker, Elli, Bram Buitendijk and Ronald Haentjens Dekker. Marking up microrevisions with major implications: Non-linear text in TAG. Presented at Balisage: The Markup Conference 2020, Washington, DC, July 27 - 31, 2020. In Proceedings of Balisage: The Markup Conference 2020. Balisage Series on Markup Technologies, vol. 25 (2020). doi:https://doi.org/10.4242/BalisageVol25.Bleeker01.

[Haentjens 2018] Haentjens Dekker, Ronald, Elli Bleeker, Bram Buitendijk, Astrid Kulsdom and David J. Birnbaum. TAGML: A markup language of many dimensions. Presented at Balisage: The Markup Conference 2018, Washington, DC, July 31 - August 3, 2018. In Proceedings of Balisage: The Markup Conference 2018. Balisage Series on Markup Technologies, vol. 21 (2018). doi:https://doi.org/10.4242/BalisageVol21.HaentjensDekker01.

[Kruengkrai 2012] Kruengkrai, Canasai, Virach Sornlertlamvanich, Watchira Buranasing and Thatsanee Charoenporn. Semantic Relation Extraction from a Cultural Database. In Proceedings of the 3rd Workshop on South and Southeast Asian Natural Language Processing (SANLP), Association for Computational Linguistics, Mumbai (2012), pp. 15-24. https://www.aclweb.org/anthology/W12-5002.pdf.

[Marrero 2013] Marrero, Mónica, Julián Urbano, Sonia Sánchez-Cuadrado, Jorge Morato and Juan Miguel Gómez-Berbís. Named Entity Recognition: Fallacies, Challenges and Opportunities. Computer Standards & Interfaces vol. 35, no. 5 (2013) 482–89. https://julian-urbano.info/publications/003-named-entity-recognition-fallacies-challenges-opportunities.html. doi:https://doi.org/10.1016/j.csi.2012.09.004.

[Grover 2006] Grover, Claire, Michael Matthews and Richard Tobin. Tools to address the interdependence between tokenisation and standoff annotation. In Proceedings of the 5th Workshop on NLP and XML (NLPXML-2006), Trento (2006), pp. 19-26. https://www.aclweb.org/anthology/W06-2703.pdf. doi:https://doi.org/10.3115/1621034.1621038.

[Barrio 2014] Barrio, Pablo, Gonçalo Simoes, Helena Galhardas and Luis Gravano. REEL: A relation extraction learning framework. In Proceedings of the 14th ACM/IEEE-CS Joint Conference on Digital Libraries, London (2014), pp. 455-45. http://www.cs.columbia.edu/~gravano/Papers/2014/jcdl2014.pdf.

[Desmond 2016] Desmond, Allan Schmidt. Using standoff properties for marking-up historical documents in the humanities. it - Information Technology, vol. 58, no. 2 (2016) pp. 63-69. doi:https://doi.org/10.1515/itit-2015-0030.

[Kuhn 2010] Kuhn, Werner. Modeling vs encoding for the Semantic Web. Semantic Web 1 (2010) 1-5. https://www.researchgate.net/publication/220575533_Modeling_vs_encoding_for_the_Semantic_Web. doi:https://doi.org/10.3233/SW-2010-0012.

[Stührenberg 2014] Stührenberg, Maik. Extending standoff annotation. In Proceedings of 9th International Conference on Language Resources and Evaluation (LREC’14), Reykjavik (2014), pp. 169-174. https://www.aclweb.org/anthology/L14-1274/.

[Pose 2014] Pose, Javier, Patrice Lopez and Laurent Romary. A Generic Formalism for Encoding Stand-off annotations in TEI. HAL (2014). https://hal.inria.fr/hal-01061548.

[Spanidi 2019] Spadini, Elena, and Magdalena Turska. XML-TEI Stand-off Markup: One Step Beyond. Digital Philology: A Journal of Medieval Cultures vol. 8 (2019) pp. 225-239. doi:https://doi.org/10.1353/dph.2019.0025.

[Viglianti 2019] Viglianti, Raffaele. Why TEI Stand-off Markup Authoring Needs Simplification. Journal of the Text Encoding Initiative vol. 10 (2019). https://journals.openedition.org/jtei/1838. doi:https://doi.org/10.4000/jtei.1838.

[Cayless 2019] Cayless, Hugh. Implementing TEI Standoff Annotation in the browser. Presented at Balisage: The Markup Conference 2019, Washington, DC, July 30 - August 2, 2019. In Proceedings of Balisage: The Markup Conference 2019. Balisage Series on Markup Technologies, vol. 23 (2019). doi:https://doi.org/10.4242/BalisageVol23.Cayless01.

[Beshero-Bondar 2018] Beshero-Bondar, Elisa E., and Raffaele Viglianti. Stand-off Bridges in the Frankenstein Variorum Project: Interchange and Interoperability within TEI Markup Ecosystems. Presented at Balisage: The Markup Conference 2018, Washington, DC, July 31 - August 3, 2018. In Proceedings of Balisage: The Markup Conference 2018. Balisage Series on Markup Technologies, vol. 21 (2018). doi:https://doi.org/10.4242/BalisageVol21.Beshero-Bondar01.

[Ciotti 2016] Ciotti, Fabio, and Francesca Tomasi. Formal Ontologies, Linked Data, and TEI Semantics. Journal of the Text Encoding Initiative vol. 9 (2016). https://journals.openedition.org/jtei/1480. doi:https://doi.org/10.4000/jtei.1480.

[Banski 2020] Banski, Piotr. Why TEI Stand-off Annotation Doesn’t Quite Work and Why You Might Want to Use It Nevertheless. Presented at Balisage: The Markup Conference 2010, Montréal, Canada, August 3 - 6, 2010. In Proceedings of Balisage: The Markup Conference 2010. Balisage Series on Markup Technologies, vol. 5 (2010). doi:https://doi.org/10.4242/BalisageVol5.Banski01.

[Piez 2015] Piez, Wendell. TEI in LMNL: Implications for Modeling. Journal of the Text Encoding Initiative vol. 8 (2015). https://journals.openedition.org/jtei/1337. doi:https://doi.org/10.4000/jtei.1337.

[van Hooland et. al 2015] van Hooland, Seth, Max De Wilde, Ruben Verborgh, Thomas Steiner and Rik Van de Walle. Exploring Entity Recognition and Disambiguation for Cultural Heritage Collections. Digital Scholarship in the Humanities vol. 30, no 2 (2015) pp. 262-279. doi:https://doi.org/10.1093/llc/fqt067.

[Tzogka et. al 2021] Tzogka, Christina, Fotini Koidaki, Stavros Doropoulos, Ioannis Papastergiou, Efthymios Agrafiotis, Katerina Tiktopoulou and Stavros Vologiannidis. OCR Workflow: Facing Printed Texts of Ancient, Medieval and Modern Greek Literature. QURATOR 2021 – Conference on Digital Curation Technologies. http://ceur-ws.org/Vol-2836/qurator2021_paper_8.pdf.

[1] Exploitation of Cultural Assets with computer-assisted Recognition, Labeling and meta-data Enrichment https://ecarle.web.auth.gr/en/

[2] Different types of datasets were used for the training of the OCR and the Document Layout Recognition systems (Tzogka et. al 2021).

[3] The Encoding-Semantic-Relations-in-Literature Github repository contains all the materials related to this paper, such as the generated dataset, a complete guide, and samples. Visit the repository at https://github.com/coidacis/Encoding-Semantic-Relations-in-Literature.git.

[4] LMNL is one of the first non-hierarchical markup metalanguages where elements are captured as ranges that may overlap each other.

[5] For TAGML, visit https://huygensing.github.io/TAG/.

[6] Due to the limited tools available for editing and parsing LMNL/TAGML, and due to the rarity of its use, we preferred XML, without excluding the possibility of future conversion between the two markup formats.

[7] See Cayless 2019 or records from lingSIG 2019 and TEI-C elections 2020

[8] See, for example, the Goodreads list of single-character titles: https://www.goodreads.com/list/show/101840.Books_With_A_Single_Letter_Of_The_Alphabet_In_The_Title

[9] For more information about the ML training process, see Christou, D., and Tsoumakas, G. (2021). Extracting semantic relationships in Greek literary texts. Manuscript submitted for publication.