Introduction

XProc is a language for defining pipelines. Pipelines are ubiquitous in our lives. They operate all around us, all the time, at every level. There are multienzyme complexes in your cells that function as pipelines strictly controlling metabolic processes [Pröschel et al., 2015]. Modern CPU architectures, like the one you probably have in your phone, run pipelines of instructions: literal pipelines implemented in silicon. The global delivery supply chain network that powers modern industry is a massively complicated, massively pipelined process. And we’ve said nothing of the literal pipelines of oil and water and gas that we rely upon daily. These pipelines are analogies, some stronger than others, for what XProc does.

Anecdotally, one of the strengths of Unix (specifically of the Unix command line interface) is that it offers a broad collection of “small, sharp tools” that can easily be combined. Small in the sense that they accomplish a single, focussed task. Sharp in the sense that they do that task efficiently, with a minimum of fuss.

Learning to think about problems in terms of small, sharp tools is incredibly valuable. For the benefit of readers who aren’t familiar with the Unix command line, let’s move our analogy out into the real world. A pair of scissors is a prototypical small, sharp tool; the antithesis of a Rube Goldberg machine. Other examples that we might classify as small, sharp tools are string, tape, and paper clips. Each one does a single, particular thing (tying, sticking, clipping) and does it well. They’re also adaptable. String can be used to tie many things; scissors can cut many things; tape and paper clips likewise.

When we compose tools together, we’re forming pipelines.

What is a pipeline language?

A pipeline language provides a set of tools and a declarative language for describing how those tools should be composed.

In this context, we mean software tools. In particular, as markup users, we mean tools that parse, validate, transform, perform XInclude, rename elements, add attributes, etc.

You write ad hoc pipelines with these tools every day: shell scripts, Windows batch files, Makefiles, Ant build scripts, Gradle build scripts, or any one of a dozen other possibilities (in a large system, more likely several of them).

Looking slightly farther afield from the core markup language technologies, we also want to get data from APIs, extract information from .docx files, update bug tracking systems, construct EPUB files and publish PDF documents.

Integrating that broader set of tools into ad hoc pipelines only increases the complexity of those scripts and makes it harder to understand what they do.

What is XProc?

XProc is an (extensible) set of small, sharp tools for creating and transforming markup and other documents, and a declarative XML vocabulary for describing pipelines composed in this way.

XProc 3.0 is actively being developed by a community group. There are:

- Four principal editors: Achim Berndzen, Gerrit Imsieke, Erik Siegel, and Norman Walsh.
- Several specifications: a core language spec currently in “last call”, a standard step library expected to go into “last call” this year, and several specifications for optional steps and additional vocabularies.
- Two independent implementations tracking the specifications: MorganaXProc by Achim Berndzen and XML Calabash by Norman Walsh.
- A public organization at GitHub where you are encouraged to comment on the specifications.
- A public xproc-dev mailing list that you are encouraged to join.
- Public workshops held several times a year, often co-located with other markup events.

In addition, Erik Siegel has written a complete programming guide to XProc which will be published by XML Press as soon as the editors stop changing things!

The narrative structure of the rest of this paper is designed to give you a complete overview of XProc. It contains several examples and describes all of the major structures in XProc. It doesn’t attempt to cover every nuance of programming with pipelines. Please feel free to ask questions in one of the fora above, or talk to any of the editors.

What about XProc 1.0?

XProc 1.0 became a W3C Recommendation in 2010. It has been used very successfully by many users, but has not seen anything that could reasonably be described as widespread adoption. There are several reasons for this. Although most pipelines in the real world need to interact with at least some non-XML data, the XProc 1.0 language is extremely XML-centric. The language is also verbose, with few syntactic shortcuts and a number of complex features that hinder casual adoption.[1] In addition, XML Calabash, the implementation introduced to most users interested in learning XProc 1.0, provides very little assistance to inexperienced users and quite terse error messages.

If you have never used XProc 1.0: good. You may begin your journey into XML pipelines with XProc 3.0 and never have to wrestle with the inconveniences of XProc 1.0. If you have used XProc 1.0 successfully, the community group believes that you will be delighted by the improvements in XProc 3.0. If you have attempted to use XProc 1.0 and been stymied by it, please attempt to set aside the prejudices you may feel towards XProc and journey into a new world of XML pipelines.

What about XProc 2.0?

There isn’t one. This is something of a running joke. As the working groups involved in the development of XPath, XSLT, XQuery, and the family of related specifications worked their way towards a second major release, they had a problem. When that work started, XQuery 1.0 had been published along with XSLT 2.0. The ongoing work was very much a product of cooperation between the working groups. The next release of XSLT couldn’t be 2.0, obviously, but having XQuery 2.0 and XSLT 3.0 seemed only likely to introduce confusion for users. The decision was made that XQuery would skip 2.0 entirely and the group would meet at 3.0.

In the XProc realm, there had been some work on a 2.0 version; it is possible to find drafts labeled 2.0 on the internet, even though that work was abandoned at the W3C before advancing very far. It seemed that the simplest thing to do, given that we were building on the 3.x versions of the underlying XML specifications, was to fall in line and jump directly to 3.0 as well.

Pipeline concepts

We believe it will be easier to understand the examples which follow if we invest a little time laying some conceptual groundwork.

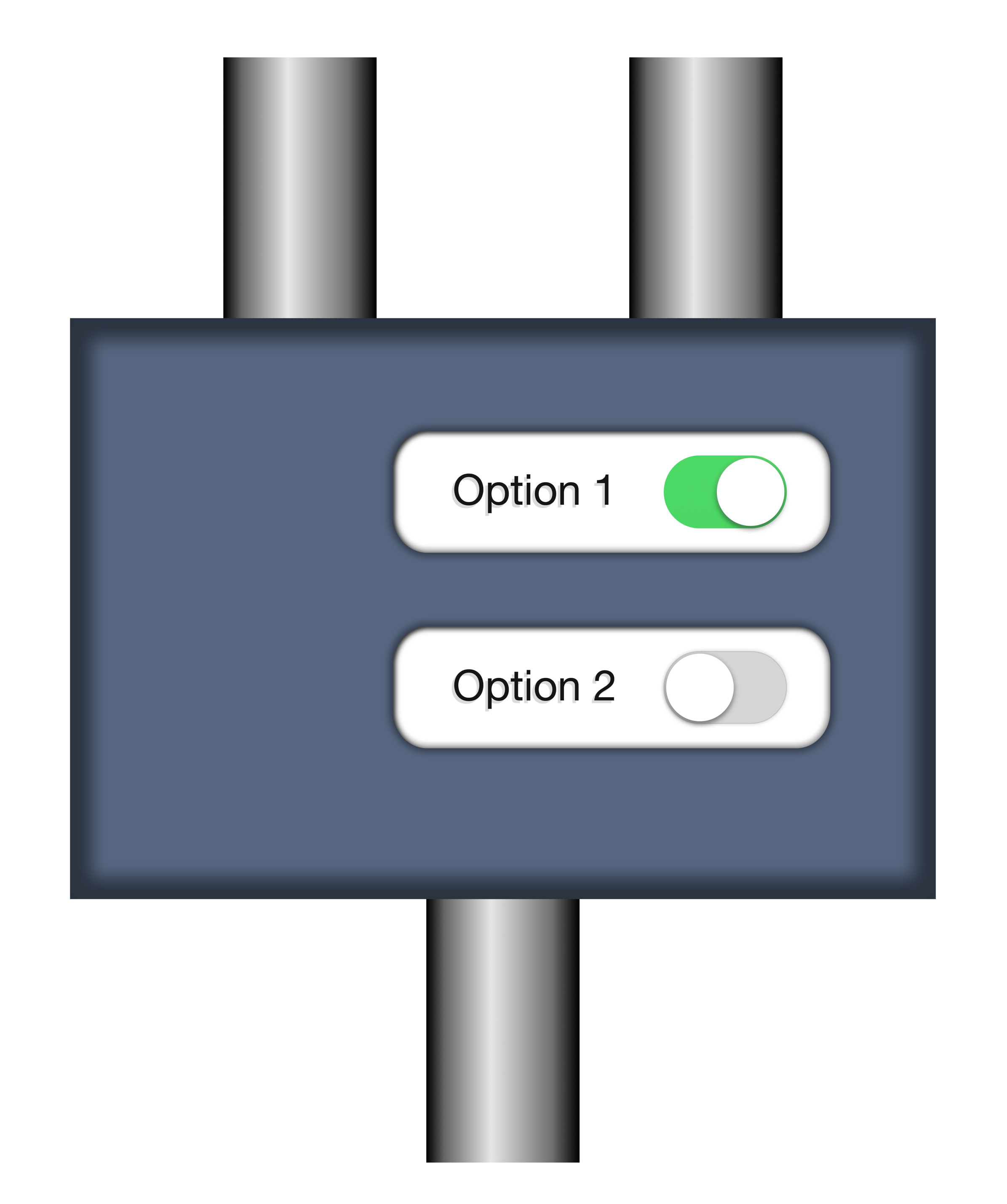

Steps and ports

The central concept in XProc pipelines is the step. Steps are the tools from which pipelines are composed. You can think of a step as a kind of box. It has holes in the top where you can pour in source documents, it has holes in the bottom out of which result documents will flow, and it may have some switches on the side that you can toggle to control the behavior of the box. In XProc, the holes are called ports and the switches are called options.

The simplest possible step is the p:identity step. It has one input port, one output port, and no options. You pour documents in the top, they come out the bottom. It is every bit as simple as it sounds, although not quite as pointless!
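To make the box concrete, here is a minimal sketch of a complete pipeline whose only step is p:identity. It uses nothing beyond the standard step library. The pipeline’s own ports are declared with p:input and p:output (discussed in more detail below), and the default connections carry a document from the pipeline’s input, through the step, to the pipeline’s output.

```xml
<!-- A minimal sketch: copy the single input document to the output unchanged -->
<p:declare-step xmlns:p="http://www.w3.org/ns/xproc" version="3.0">
  <!-- The pipeline's own primary input and output ports -->
  <p:input port="source"/>
  <p:output port="result"/>
  <!-- p:identity reads from the pipeline's source port by default;
       its result becomes the pipeline's result -->
  <p:identity/>
</p:declare-step>
```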

A slightly more complex step is the p:xinclude step, which performs XInclude processing. Like the identity step, it has a single input port and a single output port. If you put an XML document in the source port, it will be transformed according to the rules of the XInclude specification and the transformed document will flow out of the result port.

The XInclude specification mandates two user options: one to control how xml:base attributes are propagated and another to control xml:lang attributes (both of these are called “fixups” by the specification). These options are exposed directly on the “side” of the XInclude box.

Here’s an example of using the XInclude step in an XProc pipeline:

<p:xinclude name="expanded-docs"/>

As this example shows, steps can also have names. We’ll come back to those names later in the section “Documents from another step”. The names aren’t required. You can name every step if you want, or only the steps where you need the names to connect to them.

Here’s an example of using p:xinclude with xml:base fixup explicitly disabled:

<p:xinclude fixup-xml-base="false"/>

We’ll look at options more closely in the section “Step options”.
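As a sketch of both fixups side by side, assuming the option names fixup-xml-base and fixup-xml-lang used by the standard p:xinclude step, a pipeline that expands includes while disabling one fixup and enabling the other might read:

```xml
<p:declare-step xmlns:p="http://www.w3.org/ns/xproc" version="3.0">
  <p:input port="source"/>
  <p:output port="result"/>
  <!-- Named so that later steps could refer to it;
       both fixup options take boolean values -->
  <p:xinclude name="expanded-docs"
              fixup-xml-base="false"
              fixup-xml-lang="true"/>
</p:declare-step>
```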

An often-used step that is a bit more interesting is the XSLT step. Before we present the “XSLT box” we need to cover ports in a little more detail.

- Ports are always named and the names are always unique on any given step. In the case of XSLT, we’ll have a port called “source”, for providing the step with documents we want to transform, and a port called “stylesheet”, where we give the step our XSLT stylesheet.
- Ports can be defined so that they accept (or produce) either a single document or a sequence of documents (zero or more). It’s an error to pour two documents into a port that only accepts a single document. It’s an error if a step defined to produce a single document on an output port doesn’t produce exactly one document on that port.
- Any kind of document can flow through a pipeline: XML documents, HTML documents, JSON documents, JPG images, PDF files, ZIP files, etc. (We’ll come on to describing how you get documents into pipelines in a bit.) Some steps, like the identity step (or the p:count step, which just counts the documents that flow through it), don’t care about what kinds of documents they receive. Most steps do care. It only makes sense to send XML documents to the XInclude step, for example. Ports can specify what content types they accept (or produce). It is an error to send any other kind of document through that port.
- Finally, at most one input port and at most one output port can be designated as “primary”. That doesn’t really have anything to do with the semantics of the step; it has to do with how steps are connected together. You can think of the primary ports as having little magnets so they snap together automatically when you put two steps next to each other.

With those concepts in hand, we’re ready to look at the input ports of the XSLT step. In XProc, they’re defined like this:

<p:input port="source" content-types="any" sequence="true" primary="true"/>
<p:input port="stylesheet" content-types="xml"/>

Those declarations say that the port named “source” accepts a sequence of any kind of document and is the primary input port for the step. The “stylesheet” port accepts only a single XML document.

The output ports are defined like this:

<p:output port="result" primary="true" sequence="true" content-types="any"/>
<p:output port="secondary" sequence="true" content-types="any"/>

In other words, the port named “result” is the primary output port and it can produce a sequence of anything. The “secondary” output port can also produce a sequence of anything.

If you’re familiar with XSLT, the result port is where the main result document appears (the one you didn’t identify with xsl:result-document, or the one with an xsl:result-document that doesn’t specify a URI). Any other result documents produced appear on the “secondary” port.
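For example, if a stylesheet writes extra documents with xsl:result-document, a later step can read them from the “secondary” port. The sketch below assumes a hypothetical stylesheet, split.xsl, and simply counts the extra documents. It uses p:pipe, which is described in the section “Documents from another step”.

```xml
<!-- Fragment: these steps would sit inside a p:declare-step -->
<p:xslt name="split">
  <!-- split.xsl is hypothetical; assume it uses xsl:result-document -->
  <p:with-input port="stylesheet" href="split.xsl"/>
</p:xslt>

<!-- Read the extra result documents rather than the main result -->
<p:count>
  <p:with-input port="source">
    <p:pipe step="split" port="secondary"/>
  </p:with-input>
</p:count>
```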

This is a good time to point out that steps in XProc do not typically write to disk. If you’re used to running XSLT from the command line or from within an editor, your mental model may be that XSLT reads files from disk, does some transformations, and writes the results back to disk. This is not the case in XProc. In XProc, everything flows through the pipeline. There’s a step, p:store, that will write to disk, but otherwise, all your documents are ephemeral.
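A minimal sketch of that model, with a hypothetical stylesheet mystyle.xsl and a hypothetical output location output.html: the transformation result only reaches the disk because the pipeline ends with an explicit p:store.

```xml
<p:declare-step xmlns:p="http://www.w3.org/ns/xproc" version="3.0">
  <p:input port="source"/>
  <!-- The XSLT result stays in memory and flows to the next step -->
  <p:xslt>
    <p:with-input port="stylesheet" href="mystyle.xsl"/>
  </p:xslt>
  <!-- Only this step writes anything to disk -->
  <p:store href="output.html"/>
</p:declare-step>
```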

Step options

The p:xslt step also has a number of options. These correspond to the processor options “initial mode”, “named template”, and “output base URI”. Like the options on the XInclude step, the options are defined by the XSLT specification itself:

<p:option name="initial-mode" as="xs:QName?"/>
<p:option name="template-name" as="xs:QName?"/>
<p:option name="output-base-uri" as="xs:anyURI?"/>

As you can see, options have a name and may define their type. They may also define a default value or assert that they are required, though none of these options do either. When your pipeline is running, values will be computed for these options and passed to the step.

Unlike ports, through which documents flow, options can be any XPath 3.1 Data Model [XDM] item.

The p:xslt step also has a version option, so that you can assert, for example, that your stylesheet needs an XSLT 26.2 processor and there’s no point even trying to run the step if the XProc implementation can’t provide one.

Finally, there’s an option called parameters that takes a map. This is how you pass stylesheet parameters to the step. Here’s a complete syntax summary for the p:xslt step:

<p:declare-step type="p:xslt">
  <p:input port="source" content-types="any" sequence="true" primary="true"/>
  <p:input port="stylesheet" content-types="xml"/>
  <p:output port="result" primary="true" sequence="true" content-types="any"/>
  <p:output port="secondary" sequence="true" content-types="any"/>
  <p:option name="initial-mode" as="xs:QName?"/>
  <p:option name="template-name" as="xs:QName?"/>
  <p:option name="output-base-uri" as="xs:anyURI?"/>
  <p:option name="version" as="xs:string?"/>
  <p:option name="parameters" as="map(xs:QName,item()*)?"/>
</p:declare-step>

You can use XSLT as many times as you like in your pipeline, with different inputs and different option values, but every instance of the p:xslt step will fit this “signature”.
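For instance, the template-name option lets a stylesheet start from a named template instead of a source document. The sketch below assumes a hypothetical generate.xsl that defines a template named main. It uses p:empty (see the section on “empty” documents) so that nothing is connected to the source port.

```xml
<!-- Fragment: start the transformation at a named template -->
<p:xslt template-name="main" version="3.0">
  <!-- Explicitly connect nothing to the primary input -->
  <p:with-input port="source">
    <p:empty/>
  </p:with-input>
  <!-- generate.xsl is hypothetical; it must define a template named "main" -->
  <p:with-input port="stylesheet" href="generate.xsl"/>
</p:xslt>
```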

Imagine that you have a stylesheet, tohtml.xsl, that transforms XML into HTML. It has a single stylesheet parameter, css, that allows the user to specify what CSS stylesheet link should be inserted into the output. Here’s how you might use that in an XProc pipeline:

<p:xslt parameters="map { 'css': 'basic.css' }">
  <p:with-input port="stylesheet" href="tohtml.xsl"/>
</p:xslt>

Option values can also be computed dynamically with expressions as we’ll see in the section “XPath expressions”.

In the declaration of a step (the definition of its signature), the allowed inputs and outputs are identified with p:input and p:output. When a step is used, the p:with-input element makes a connection to one of the ports on the step. In the example above, the pipeline author is connecting the stylesheet port to the document tohtml.xsl. The source port, the primary port, is being connected automatically in this example.
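As a sketch of computing the parameters dynamically, assume a hypothetical pipeline option named css, defaulting to basic.css, on the enclosing pipeline. As in the example above, the parameters attribute is read as an XPath map expression, so the option’s value can be used in place of a literal:

```xml
<p:declare-step xmlns:p="http://www.w3.org/ns/xproc" version="3.0">
  <p:input port="source"/>
  <p:output port="result"/>
  <!-- Hypothetical pipeline option; a caller may override the default -->
  <p:option name="css" select="'basic.css'"/>
  <!-- The map is an XPath expression, so $css is in scope here -->
  <p:xslt parameters="map { 'css': $css }">
    <p:with-input port="stylesheet" href="tohtml.xsl"/>
  </p:xslt>
</p:declare-step>
```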

An obvious analogy for connecting up steps is to think of them as tanks with ports on the top and bottom, the connections between them as hoses, and the documents like water. You link all the steps with hoses and then pour water in the top of your pipeline; magic happens and the results pour out the bottom.

It’s a good analogy, but don’t hold onto it too tightly. It breaks down in a couple of ways. First, you can attach any number of “hoses” to the output of a step. Want to connect the output of the validator to ten different XSLT steps? No problem. Second, you never have to think about the “output ends” of the pipes. Each input port identifies where it gets documents. If you say that the p:xslt step gets its input from the result of the validator, you’ve said implicitly that the output of the validator is connected to the XSLT step. You can’t say that explicitly. The outputs are all implicitly connected according to how the inputs are defined.

By the way, if you don’t connect anything to a particular output port, that’s ok. The processor will automatically stick a bucket under there for you and take care of it.
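A sketch of one output feeding two consumers, assuming hypothetical files schema.xsd, tohtml.xsl, and tofo.xsl. The validator here is p:validate-with-xml-schema, which belongs to an optional step library, so an implementation is not guaranteed to provide it. The first p:xslt is connected implicitly; the second names its source with p:pipe (see the section “Documents from another step”).

```xml
<!-- Fragment: steps inside a p:declare-step -->
<p:validate-with-xml-schema name="validate">
  <p:with-input port="schema" href="schema.xsd"/>
</p:validate-with-xml-schema>

<!-- Adjacent step: its source snaps to the validator's primary output -->
<p:xslt name="to-html">
  <p:with-input port="stylesheet" href="tohtml.xsl"/>
</p:xslt>

<!-- A second consumer of the same validator output -->
<p:xslt name="to-fo">
  <p:with-input port="source">
    <p:pipe step="validate" port="result"/>
  </p:with-input>
  <p:with-input port="stylesheet" href="tofo.xsl"/>
</p:xslt>
```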

Documents

As stated earlier, any kind of document can flow through an XProc pipeline, but where do documents come from? There are four possible answers to that question: from a URI, from another step, from “inline”, or “from nowhere” (a way of saying explicitly that nothing goes to a particular port).

Documents from URIs

The p:document element reads from a URI:

<p:document href="mydocument.xml"/>

The URI value can be an expression, in which case it may be useful to assert what kind of documents are acceptable:

<p:document href="{$userinput}.json" content-type="application/json"/>

We saw the content-type attribute earlier in the discussion of ports. Generally, you can specify a list of MIME Media Types there, but you can also use shortcuts: “xml”, “html”, “text”, or “json”. In fact, the example above uses application/json merely as an example; using “json” would be simpler.

If the (computed) URI is relative, it will be made absolute with respect to the base URI of the p:document element on which it appears.

As you saw in the p:with-input example in section “Step options”, there is a shortcut for the simple case where you want to read a single document into a port. In that case, a relative URI will be made absolute with respect to the base URI of the p:with-input element.
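Because a port may accept a sequence, a single connection can list several p:document elements. In this sketch the file names are hypothetical; each relative URI is resolved against the base URI of its own p:document element (typically the pipeline file), and the p:count step that follows receives both documents through the default connection.

```xml
<!-- Fragment: load two documents into one sequence, then count them -->
<p:identity name="chapters">
  <p:with-input>
    <p:document href="chapter1.xml"/>
    <p:document href="chapter2.xml"/>
  </p:with-input>
</p:identity>

<!-- p:count's source accepts a sequence, so it receives both documents -->
<p:count/>
```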

Documents from another step

The “magnetic” property of primary ports means that adjacent steps will automatically snap together; in many cases these implicit connections are all that’s necessary. But they only work for steps that are next to each other, so you will still sometimes have to add a pipe to connect two steps together.

The p:pipe element constructs an explicit connection between two steps. The pipe has two attributes: step, which gives the name of the step you’re connecting to; and port, which gives the name of the port you’re reading. There are sensible defaults: for example, if you omit the port, the primary output port is assumed.

Here’s a pipe that connects back to the first XInclude example:

<p:pipe step="expanded-docs"/>

It would be perfectly fine to add port="result" to that pipe, but it’s not necessary.
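A sketch of why the name matters: below, a p:count step sits between the XInclude step and a p:xslt step, so without an explicit connection the XSLT step would receive the count. The pipe reaches back past it to the XInclude result. The stylesheet is the imagined tohtml.xsl from the section “Step options”.

```xml
<!-- Fragment: steps inside a p:declare-step -->
<p:xinclude name="expanded-docs"/>

<!-- Without a pipe, this step's output would feed the next step -->
<p:count/>

<p:xslt>
  <!-- Reach back to the XInclude result instead of the count -->
  <p:with-input port="source">
    <p:pipe step="expanded-docs"/>
  </p:with-input>
  <p:with-input port="stylesheet" href="tohtml.xsl"/>
</p:xslt>
```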

Inline documents

You can just type the documents inline if you want. This is one common use of the p:identity step:

<p:identity name="config">
  <p:with-input>
    <p:inline content-type="application/json" expand-text="false">
{
  "config": {
    "uri": "http://example.com/",
    "port": 8080,
    "oauth": true
  }
}
    </p:inline>
  </p:with-input>
</p:identity>

Now any step in the pipeline can read from the “config” step to get the configuration data. The p:inline element is required here because the content isn’t XML, so the content type must be specified. If the inline data were a single XML document, p:inline could be omitted:

<p:identity name="state-capitols">
  <p:with-input>
    <states>
      <alabama abbrev="AL">Montgomery</alabama>
      <alaska abbrev="AK">Juneau</alaska>
      <!-- ... -->
      <wisconsin abbrev="WI">Madison</wisconsin>
      <wyoming abbrev="WY">Cheyenne</wyoming>
    </states>
  </p:with-input>
</p:identity>

I’ve also elided the port name (port="source"). This is fine because the p:identity step only has one input port (and, technically, because it’s the primary input port).

This inline data needn’t always be in an identity step; you can put it directly into the input port on any step. There are additional attributes on p:inline that allow you to inline encoded binary data, if you wish.
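As an illustration, assuming the encoding attribute on p:inline with the value base64, the following sketch inlines a few bytes (here, the ASCII text “Hello, world!”) as an opaque binary document. The step name is hypothetical.

```xml
<!-- Fragment: inline binary data supplied as base64 -->
<p:identity name="blob">
  <p:with-input>
    <!-- Decodes to the 13 bytes of "Hello, world!" -->
    <p:inline content-type="application/octet-stream"
              encoding="base64">SGVsbG8sIHdvcmxkIQ==</p:inline>
  </p:with-input>
</p:identity>
```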

“Empty” documents

Sometimes it’s useful to say explicitly that no documents should appear on a particular port. This is necessary if you want to defeat the default connection mechanisms that would ordinarily apply.

The p:empty connection serves this purpose:

<p:count>
  <p:with-input>
    <p:empty/>
  </p:with-input>
</p:count>

Irrespective of the context in which this appears, no documents will be sent to the count step and it will invariably return 0.

XPath expressions

XProc uses XPath as its expression language. Expressions appear most commonly in attribute and text value templates and in the expressions that initialize options and variables.

Variables

It is sometimes useful to calculate a value in an XProc pipeline and then use that value in subsequent expressions. There are both practical and pedagogical reasons to do this. A variable has a name, an optional type, and an expression that initializes it:

<p:variable name="pi" select="355 div 113"/>

Variables are lexically scoped and can appear anywhere in a pipeline. The set of “in scope” variables can be referenced in XPath expressions. The variable declaration may identify what document should be used as the context item.
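Here is a sketch of a variable whose context item comes from a step’s output. It assumes the somewhere.xhtml document used later in this paper and a hypothetical summary element; the variable counts the h1 elements, and a value template (described next) reports the total.

```xml
<p:declare-step xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:xs="http://www.w3.org/2001/XMLSchema"
                xmlns:xhtml="http://www.w3.org/1999/xhtml"
                version="3.0">
  <p:output port="result"/>

  <p:identity name="load">
    <p:with-input href="somewhere.xhtml"/>
  </p:identity>

  <!-- The pipe makes the loaded document the context item for select -->
  <p:variable name="chapters" as="xs:integer"
              select="count(//xhtml:h1)">
    <p:pipe step="load" port="result"/>
  </p:variable>

  <!-- A text value template inserts the variable's value -->
  <p:identity>
    <p:with-input>
      <summary>{$chapters} chapters</summary>
    </p:with-input>
  </p:identity>
</p:declare-step>
```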

Value templates

When an option is passed to a step, its value can be initialized with an attribute value template:

<p:xinclude fixup-xml-base="{$dofixup}"/>

Value templates can be used in inline content:

<p:identity name="constants">
  <p:with-input>
    <constants>
      <e>2.71828183</e>
      <pi>{$pi}</pi>
    </constants>
  </p:with-input>
</p:identity>

Unlike text value templates in XSLT, text value templates in XProc can insert nodes into the document.

Long form options

The most convenient way to specify options on a step is usually to specify them as attributes, as we’ve seen in the preceding sections.

There is also a p:with-option element to specify them more explicitly. This is necessary if you want to use the output from another, distant, step as the context for the option value. Assume, for example, that your pipeline already has an option named “state” (or that you’ve already computed the value in some preceding variable named “state”). You could initialize the “city” option on some step to a state capital using this element:

<p:with-option name="city"
               select="/states/*[@abbrev = $state]">
  <p:pipe step="state-capitols"/>
</p:with-option>

The p:pipe here ensures that the context document for the city expression is the state capitals document we introduced earlier, even if it isn’t the automatic connection to the step. In practice, this is fairly uncommon.

Atomic and compound steps

All of the steps we’ve looked at so far are “atomic steps”: they have inputs, outputs, and options, but they have no internal structure. They are effectively “black boxes”. The p:xslt step does XSLT, the p:identity step copies its input blindly, and the p:xinclude step performs XInclude processing. Aside from any options exposed, you have no control over the behavior of the step.

XProc also has a small vocabulary of “compound steps” (see the section “Compound steps”). These steps are “white boxes”. The steps explicitly wrap around an internal “subpipeline” that defines some of their behavior. Whereas two p:xslt steps always do the same thing, two p:for-each steps can do very different things.

Pipelines are graphs

Steps can be connected together in arbitrary ways. Many steps can read from the same output port and any given step can combine the outputs from many different steps into one input port. In this way, a pipeline is a graph. A key constraint is that the graph must be acyclic. A step can never read its own output, no matter how indirectly. Only M. C. Escher can make water flow uphill! Once a document has passed through a step, the only direction it can go is down.

One subtlety: when a variable is defined, it may have a context item that is the output of a step. If it does, subsequent references to that variable count as “connections” to that output port when considering whether or not the pipeline contains any loops.

Hands on: building some pipelines

As we’ve seen, steps are the basic building blocks in XProc 3.0. A large library of standard steps comes with every conformant implementation:

- There are 50+ atomic step types in the standard library. These atomic steps are the smallest tools in your pipeline, doing things such as XSLT transformations, validation with Schematron, calling an HTTP web service, or adding an attribute to element nodes in a document.
- The XProc 3.0 specification defines additional, optional step libraries with about twenty steps. They’re optional in the sense that a conformant implementation is not required to implement them, though most probably will. Optional step libraries include steps for file handling, interacting with the operating system, and producing paged media, among others.
- In addition to the large library of atomic steps, XProc 3.0 also defines five compound steps containing subpipelines. These subpipelines can themselves be composed of atomic or compound steps. Compound steps are used for control flow, looping, and catching exceptions, for example. We’ll look at them more closely in the section “Compound steps”.
- Implementations may also ship with additional steps defined either by the implementor or by some community process. The set of available atomic steps might even be user-extensible; implementations might allow users to program their own atomic steps.

The anatomy of a step

In XProc, documents flow between steps: One or more documents flow into a step; some work characteristic for that step is performed; and one or more documents flow out of the step, usually to another step. XProc 3.0 has five document types:

- An XML document is an instance of a document in the XPath Data Model (XDM). These do not necessarily have to be well-formed XML documents; any XDM document instance will do. (XSLT can produce instances that contain multiple top-level elements, for example, or that contain only text nodes.)
- An HTML document is essentially the same as an XML document. What’s different is that documents with an HTML media type will be parsed with an HTML parser (rather than an XML parser, so they do not have to be well-formed XML when they are loaded). If an HTML document is serialized, by default the HTML serializer will be used.
- A text document is a document without any markup. In the XDM, it is represented by a document containing a single text node.
- A JSON document is one that contains a map, an array, or atomic values. These are represented in the XDM as maps, arrays, and atomic values. Any valid JSON document can be loaded; it is also possible to create maps and arrays that contain data types not available in JSON (for example, xs:dateTime values). These will flow through the pipeline just fine, and will be converted back to JSON strings at serialization time (if they’re ever serialized).
- Finally, there are other documents: anything else. This includes binary images, ZIP documents (such as an EPUB), or a PDF rendered from a DocBook source. Implementations have some latitude in how they process arbitrary data.

These documents flow through the input and output ports of steps. Steps can have an arbitrary number of input and output ports corresponding to their requirements. The p:xslt step, as we’ve seen, has two input ports and two output ports. Some steps may have no input ports at all, only output ports (think of a step that loads a document from disk); others may have input ports but no output ports. (It’s conceivable to have a step with no ports of any kind, but it’s not obvious what purpose it would serve in the pipeline.)

We’re going to use two steps in our example pipeline, p:add-attribute and p:store. Here’s the signature for p:add-attribute:

<p:declare-step type="p:add-attribute">
  <p:input port="source" content-types="xml html"/>
  <p:output port="result" content-types="xml html"/>
  <p:option name="match" as="xs:string" select="'/*'"/>
  <p:option name="attribute-name" required="true" as="xs:QName"/>
  <p:option name="attribute-value" required="true" as="xs:string"/>
</p:declare-step>

As you might guess from its name, the p:add-attribute step adds attributes to elements in a document. The document that arrives on the source port is decorated with attributes and the resulting document flows out of the result port.

What attributes are added? The attribute-name and attribute-value options define the attribute name and its value. The match option contains a “selection pattern”, a concept borrowed from XSLT 3.0 (in XSLT 2.0, it was called a “match pattern”), to identify which elements in the source document to change.

The signature for p:store may be a little more surprising:

<p:declare-step type="p:store">
  <p:input port="source" content-types="any"/>
  <p:output port="result" content-types="any" primary="true"/>
  <p:output port="result-uri" content-types="application/xml"/>
  <p:option name="href" required="true" as="xs:anyURI"/>
  <p:option name="serialization" as="map(xs:QName,item()*)?"/>
</p:declare-step>

It takes the document that appears on its source port and stores it in the location identified by the href option. (It’s implementation-defined whether any URI schemes besides “file:” are supported.) The serialization option allows you to specify how XML and HTML documents should be serialized (with or without indentation, for example, or using XHTML-style empty tags).

The p:store step has two output ports. What appears on the result port is the same document that appeared on the source port. What appears on the result-uri port is a document that contains the absolute URI where the document was written.

This might not be intuitive at first glance, but it is a convenience for pipeline authors. Think of debugging a pipeline: if you want to inspect some intermediate results, just add a p:store in your pipeline and you’re done. The result-uri output is useful, for example, if you need to send the location where a PDF was stored to some downstream process. Either port’s output might be useful in some workflows, but you’re also free to ignore one (or both!) of them.
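A sketch of that debugging pattern, with hypothetical file names: because p:store passes its input through on its primary result port, it can be dropped between two steps without changing what the next step receives. The serialization map asks for indented output; it assumes, as with the parameters map on p:xslt, that a string key is accepted where a QName key is declared.

```xml
<!-- Fragment: steps inside a p:declare-step -->
<p:xinclude/>

<!-- Snapshot the intermediate result; the same document continues downstream -->
<p:store href="debug/after-xinclude.xml"
         serialization="map { 'indent': true() }"/>

<!-- Reads p:store's primary "result" port, i.e. the unchanged document -->
<p:xslt>
  <p:with-input port="stylesheet" href="tohtml.xsl"/>
</p:xslt>
```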

Our first XProc pipeline

With that preamble out of the way, let’s try to put the concepts we’ve learned into a usable pipeline. It’s going to be a simple, contrived pipeline, but a whole and usable one nevertheless.

Suppose we have an XHTML document with URI “somewhere.xhtml”. For some reason we need to change this document to add an attribute named class with value “header” to all h1 elements. Assume we want to save the changed document at “somewhere_new.xhtml”.

Our source document might look like this:

<html xmlns="http://www.w3.org/1999/xhtml">
  <body>
    <h1>Chapter 1</h1>
    <p>Text of chapter.</p>
    <h1>Chapter 2</h1>
    <p>Some more text.</p>
    <!-- ... -->
    <h1>Chapter 99</h1>
    <p>Text of the final chapter.</p>
  </body>
</html>

Doing this by hand would be both boring and error-prone. So this is an extremely simple, but typical, use case for an XProc pipeline.

Given what we already know about XProc, we can sketch out what is required: it’s a p:add-attribute step and a p:store step, where the output port result of the former is connected to input port source of the latter.

Here’s what our “add attributes” step might look like using the long-form options:

<p:add-attribute name="attribute-adder">
  <p:with-option name="attribute-name" select="'class'"/>
  <p:with-option name="attribute-value" select="'header'"/>
  <p:with-option name="match" select="'xhtml:h1'"/>
</p:add-attribute>

Note that the value of a select attribute in XProc is an XPath expression, just like it is in XSLT. If you don’t “quote” string values twice, you’ll get strange results. If, for example, you left out the single quotes around “class”, you’d be asking the processor to find an element named class in the context document and use its string value as the value for the attribute-name option. That’s not likely to go well.

In any event, we’re more likely to use the convenient shortcut forms in practice, so let’s switch to those:

<p:add-attribute name="attribute-adder"
                 match="xhtml:h1"
                 attribute-name="class"
                 attribute-value="header"/>

Much better, except it doesn’t have any input. You might think that would mean you wouldn’t get any output, but if you glance back at the signature for p:add-attribute, you’ll see that the source port does not allow a sequence (i.e., it requires exactly one input; not zero, and not more than one). If you don’t provide any input, you’ll get an error.

Providing a way for a step to receive input is called “binding the port” in XProc. To mark a port binding for a step, XProc 3.0 uses a p:with-input element where the port attribute is used to name the port which is to be bound. Inside this element the actual binding takes place.

We saw p:document before; it’s what we need here;

but we also saw that you can use the href trick to read

a single document. Let’s just use that:

<p:add-attribute name="attribute-adder"
                 match="xhtml:h1"
                 attribute-name="class"
                 attribute-value="header">
  <p:with-input href="somewhere.xhtml"/>
</p:add-attribute>
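For comparison, here is a sketch of the same binding written in long form, naming the port explicitly and using p:document. It should behave the same as the href shortcut, since source is the primary input port of p:add-attribute.

```xml
<p:add-attribute name="attribute-adder"
                 match="xhtml:h1"
                 attribute-name="class"
                 attribute-value="header">
  <!-- Explicit port name and an explicit p:document binding -->
  <p:with-input port="source">
    <p:document href="somewhere.xhtml"/>
  </p:with-input>
</p:add-attribute>
```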

Our other step is p:store, and we already know everything we need to write that:

<p:store href="somewhere_new.html"/>

Now we only need to work out how to connect the result output from p:add-attribute to the source port on p:store. We can do that with a p:pipe:

<p:store href="somewhere_new.html">
  <p:with-input>
    <p:pipe step="attribute-adder" port="result"/>
  </p:with-input>
</p:store>

Our first pipeline is almost complete. We have written the two steps required to do the task, we have set the steps’ options to the required values, and we have bound the input ports of the two steps. Two things are left: we need to give our steps a common root element (every XProc pipeline has to be a well-formed XML document) and we have to bind the namespace prefixes we’ve used. The root element of every pipeline in XProc 3.0 has to be a p:declare-step; it has a version attribute that must be set to “3.0”.

So our final pipeline looks like this:

<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:xhtml="http://www.w3.org/1999/xhtml">
  <p:add-attribute name="attribute-adder"
                   match="xhtml:h1"
                   attribute-name="class"
                   attribute-value="header">
    <p:with-input href="somewhere.xhtml"/>
  </p:add-attribute>
  <p:store href="somewhere_new.html">
    <p:with-input>
      <p:pipe step="attribute-adder" port="result"/>
    </p:with-input>
  </p:store>
</p:declare-step>

We can simplify this further. When two steps appear adjacent to each other in a pipeline, the default connection (the “magnetics”) will connect the primary output port of the first step to the primary input port of the second. That’s exactly the situation we have here, so we can remove the explicit pipe binding.

<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:xhtml="http://www.w3.org/1999/xhtml">
  <p:add-attribute name="attribute-adder"
                   match="xhtml:h1"
                   attribute-name="class"
                   attribute-value="header">
    <p:with-input href="somewhere.xhtml"/>
  </p:add-attribute>
  <p:store href="somewhere_new.html"/>
</p:declare-step>
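Assuming the input is the sample document shown at the beginning of this section, the stored result should look roughly like this. The exact serialization (whitespace, whether an XML declaration is written, and so on) depends on the processor and the serialization settings.

```xml
<html xmlns="http://www.w3.org/1999/xhtml">
  <body>
    <!-- Every h1 now carries class="header" -->
    <h1 class="header">Chapter 1</h1>
    <p>Text of chapter.</p>
    <h1 class="header">Chapter 2</h1>
    <p>Some more text.</p>
    <!-- ... -->
    <h1 class="header">Chapter 99</h1>
    <p>Text of the final chapter.</p>
  </body>
</html>
```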

Changing the pipeline

Given our first pipeline, let’s consider how we might adapt it over time. Suppose our task becomes a little more complicated: not only should we add the class attribute to the elements, but we should also mark the header nesting by adding an attribute level with value “1”.

All we have to do is add another p:add-attribute step between our two existing steps.

<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:xhtml="http://www.w3.org/1999/xhtml">
  <p:add-attribute name="attribute-adder"
                   match="xhtml:h1"
                   attribute-name="class"
                   attribute-value="header">
    <p:with-input href="somewhere.xhtml"/>
  </p:add-attribute>
  <!-- No explicit input: reads the result of attribute-adder -->
  <p:add-attribute name="level-adder"
                   match="xhtml:h1"
                   attribute-name="level"
                   attribute-value="1"/>
  <p:store href="somewhere_new.html"/>
</p:declare-step>

This example demonstrates the convenience of the default bindings. If we’d left in our explicit pipe binding to “attribute-adder”, the stored document would have been unaffected by the new step we added.
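Here is a sketch of the mistake just described. Because p:store still reads explicitly from attribute-adder, the output of level-adder is never read by anything, so the level attributes never reach the stored document.

```xml
<p:add-attribute name="level-adder"
                 match="xhtml:h1"
                 attribute-name="level"
                 attribute-value="1"/>
<!-- Explicit binding to attribute-adder: this bypasses level-adder -->
<p:store href="somewhere_new.html">
  <p:with-input>
    <p:pipe step="attribute-adder" port="result"/>
  </p:with-input>
</p:store>
```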

In practice, everything in an XProc pipeline is about the connections between steps. Inserting new steps usually also involves fixing up the connections. Forgetting this can lead to surprising results.

It may also have occurred to you by now that, if you make all of the connections explicit (which you are entirely free to do), then the order of the steps in your pipeline document is basically irrelevant. For the sake of the poor soul (very possibly yourself) who has to modify your pipeline in six months, don’t take advantage of this fact.

A good rule of thumb is to represent linear flows in your pipeline with linear sequences of steps in your pipeline document. Branching, merging, and nested pipelines always introduce some amount of complexity; see the section “Irreducible complexity”.

Compound steps

In addition to a large vocabulary of atomic steps (steps like p:xinclude and p:xslt that do not contain other steps), XProc 3.0 defines several “compound” steps that let you control the flow of documents.

Writing pipeline steps

As we saw above, p:declare-step lets you write your own pipeline steps. Once written, you can call them directly or embed them in other pipelines.
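As a sketch of what that can look like, the header-marking logic from earlier can be wrapped up as a reusable step. The step type ex:mark-headers, its namespace URI, the option name class, and the file name mark-headers.xpl are all hypothetical names chosen for illustration. The second pipeline imports the step and calls it like any other step, using an option shortcut.

```xml
<!-- mark-headers.xpl (hypothetical file name) -->
<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:ex="http://example.com/ns/steps"
                xmlns:xhtml="http://www.w3.org/1999/xhtml"
                type="ex:mark-headers">
  <p:input port="source"/>
  <p:output port="result"/>
  <!-- Value for the class attribute; defaults to 'header' -->
  <p:option name="class" select="'header'"/>
  <p:add-attribute match="xhtml:h1"
                   attribute-name="class"
                   attribute-value="{$class}"/>
</p:declare-step>

<!-- A separate pipeline that imports and calls the step -->
<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:ex="http://example.com/ns/steps">
  <p:import href="mark-headers.xpl"/>
  <ex:mark-headers class="title">
    <p:with-input href="somewhere.xhtml"/>
  </ex:mark-headers>
  <p:store href="somewhere_new.html"/>
</p:declare-step>
```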

Loops with for-each

The p:for-each step lets you apply a series of steps (a subpipeline) to each of the input documents you provide to it.

The p:directory-list step returns a directory listing. The p:load step has an href option; it loads the document identified by that URI.

We can combine these steps with p:for-each to process all of the documents in a directory:

<p:directory-list path="." include-filter="\.xml$"/>
<p:for-each select="//c:file">
  <p:load href="{resolve-uri(@name, base-uri(.))}"/>
  <p:xslt>
    <p:with-input port="stylesheet" href="tohtml.xsl"/>
  </p:xslt>
</p:for-each>

Here we get a list of all the files in the current directory whose names end in “.xml”, load each one, and run XSLT over it. The resulting sequence of transformed HTML documents appears on the output port of the p:for-each.
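The input to p:for-each doesn’t have to come from a directory listing. As a sketch, the loop below iterates over two explicitly listed documents and stores each transformed result under a numbered name built from p:iteration-position(), which returns 1 for the first document. The file names chapter1.xml, chapter2.xml, and the out/ directory are hypothetical.

```xml
<p:for-each>
  <!-- Hypothetical input documents -->
  <p:with-input>
    <p:document href="chapter1.xml"/>
    <p:document href="chapter2.xml"/>
  </p:with-input>
  <p:xslt>
    <p:with-input port="stylesheet" href="tohtml.xsl"/>
  </p:xslt>
  <!-- Writes out/chapter-1.html, out/chapter-2.html, ... -->
  <p:store href="{'out/chapter-' || p:iteration-position() || '.html'}"/>
</p:for-each>
```

In XProc 3.0, p:store also passes its input through on its result port, so the p:for-each still emits the transformed documents.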

Conditionals

There are two conditional steps: a general p:choose and a syntactic shortcut, p:if, for the simple case of a single condition.

p:choose

Looking back at the p:for-each example, suppose some of the documents in the directory are already XHTML. We don’t want to process them with our tohtml.xsl stylesheet because they’re already HTML, but we do want to process the other documents. We can use p:choose to achieve this:

<p:directory-list path="." include-filter="\.xml$"/>
<p:for-each select="//c:file">
  <p:load href="{resolve-uri(@name, base-uri(.))}"/>
  <p:choose>
    <p:when test="/h:html">
      <p:identity/>
    </p:when>
    <p:otherwise>
      <p:xslt>
        <p:with-input port="stylesheet" href="tohtml.xsl"/>
      </p:xslt>
    </p:otherwise>
  </p:choose>
</p:for-each>

The p:choose step will evaluate the test condition on each p:when and run only the first one that matches. In this case, if the root element of the document loaded is h:html, then we pass it through the identity step. Otherwise, we pass it through XSLT. The output of the p:choose step is the output of the single branch that gets run.
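The snippets above assume that the h and c prefixes are bound. Here is a sketch of a self-contained step with the bindings in place and a second p:when, to show that the tests are tried in order and only the first true one runs. The DocBook branch and the docbook-to-html.xsl stylesheet are hypothetical additions for illustration.

```xml
<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:h="http://www.w3.org/1999/xhtml"
                xmlns:db="http://docbook.org/ns/docbook">
  <p:input port="source"/>
  <p:output port="result"/>
  <p:choose>
    <!-- Tried first: documents that are already XHTML pass through -->
    <p:when test="/h:html">
      <p:identity/>
    </p:when>
    <!-- Only reached if the previous test was false -->
    <p:when test="/db:book or /db:article">
      <p:xslt>
        <p:with-input port="stylesheet" href="docbook-to-html.xsl"/>
      </p:xslt>
    </p:when>
    <!-- Runs only if no p:when matched -->
    <p:otherwise>
      <p:xslt>
        <p:with-input port="stylesheet" href="tohtml.xsl"/>
      </p:xslt>
    </p:otherwise>
  </p:choose>
</p:declare-step>
```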

p:if

It is very common in pipelines to have conditionals where you want to perform some step if an expression is true, and pass the document through unchanged if it isn’t.

That’s what the preceding p:choose example does, in fact. The p:if step can be used to simplify this case. It has a single test expression. If the expression is true, its subpipeline is evaluated; otherwise, it passes its source through unchanged.

The preceding pipeline can be simplified with p:if:

<p:directory-list path="." include-filter="\.xml$"/>
<p:for-each select="//c:file">
  <p:load href="{resolve-uri(@name, base-uri(.))}"/>
  <p:if test="not(/h:html)">
    <p:xslt>
      <p:with-input port="stylesheet" href="tohtml.xsl"/>
    </p:xslt>
  </p:if>
</p:for-each>

The semantics are exactly the same. If the document element is not h:html, it will be transformed; otherwise, it will pass through unchanged.

Exception handling

Many pipelines just assume that nothing will go wrong; often nothing does. But on the occasions when a step fails, that failure “cascades up” through the pipeline, and if nothing “catches” it, the whole pipeline will crash.

Sometimes, having the whole pipeline crash is not appropriate. We can write defensive pipelines by adding try/catch elements around the steps that we know might fail (and for which there is some useful corrective action). That’s what p:try is for:

<p:try>
  <ex:do-something/>
  <p:catch code="err:XC0053">
    <ex:recover-from-validation-error/>
  </p:catch>
  <p:catch>
    <ex:recover-from-other-errors/>
  </p:catch>
</p:try>

This pipeline will run ex:do-something. If that succeeds, its output is the result of the p:try. If it fails, p:try will choose a “catch” pipeline to deal with the error.

If the error thrown is err:XC0053, a validation error (unfortunately, you just have to look up the error codes), the ex:recover-from-validation-error pipeline will be run. If it succeeds, that’s the result of the p:try. (If it fails, the whole p:try fails, and we had better hope there’s another one higher up!) If the error thrown isn’t a validation error, then ex:recover-from-other-errors will run.

In no case will more than one catch branch run.
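A catch branch can also inspect what went wrong: each p:catch has an error port that carries a c:errors document describing the failure. As a sketch, reusing the hypothetical ex:do-something step from above, this catch simply writes that error document to a file; the name error-report.xml is also hypothetical.

```xml
<p:try>
  <ex:do-something/>
  <p:catch name="failure">
    <!-- Read the c:errors document from this catch's error port -->
    <p:store href="error-report.xml">
      <p:with-input>
        <p:pipe step="failure" port="error"/>
      </p:with-input>
    </p:store>
  </p:catch>
</p:try>
```

Since p:store passes its input through, the error document also becomes the output of the p:try when this branch runs.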

Viewports

The p:viewport step is a looping step, like p:for-each. The difference is that where p:for-each loops over a set of documents, p:viewport loops over parts of a single document.

Suppose there’s some processing that you want to perform on specific sections of a document. Let’s say you want to transform all sections that are marked as “final” in some way.

Because sections can be nested arbitrarily, there’s no straightforward way to “pull apart” the document so that you can run p:for-each over it. Instead, you need to use p:viewport:

<p:viewport match="section[@status='final']">
  <p:xslt>
    <p:with-input port="stylesheet" href="final-sections.xsl"/>
  </p:xslt>
</p:viewport>

This step will take each section marked as “final” out of the input document and transform it with final-sections.xsl. It will then stitch the results of that transformation back into the original document exactly where the sections appeared initially.

All of the other content in the document will be left untouched.
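The body of a viewport is an ordinary subpipeline, so it can contain more than one step. As a sketch, this variation first strips comments from each final section and then runs the same stylesheet. Each matched section arrives in the subpipeline as a document of its own. Note also that a viewport does not look inside an element it has already matched, so a final section nested within another final section is processed only as part of its ancestor.

```xml
<p:viewport match="section[@status='final']">
  <!-- Applies only within the matched section -->
  <p:delete match="comment()"/>
  <p:xslt>
    <p:with-input port="stylesheet" href="final-sections.xsl"/>
  </p:xslt>
</p:viewport>
```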

Groups

The p:group element does nothing. Like div in HTML, it’s a free-form wrapper that allows authors to group steps together. This may make the pipelines easier to edit and it provides a way for authors to limit the scope of variables and steps.

You’ll probably never use it.
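If you do reach for it, scoping is the most likely reason. In this sketch, the hypothetical variable out-dir is visible only to the steps inside the group; the group is assumed to receive its input document from whatever step precedes it.

```xml
<p:group>
  <!-- $out-dir is in scope only inside this group -->
  <p:variable name="out-dir" select="'build/'"/>
  <p:store href="{$out-dir}chapter.html"/>
</p:group>
```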

Libraries

The pipeline steps you write can be grouped together into libraries for convenience. This allows whole libraries of related steps to be imported at once.
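A minimal sketch of a library: a p:library element containing several p:declare-step elements, each with a type, imported with a single p:import. The file name steps.xpl, the namespace URI, and the step types ex:mark-headers and ex:mark-levels are hypothetical.

```xml
<!-- steps.xpl (hypothetical file name) -->
<p:library version="3.0"
           xmlns:p="http://www.w3.org/ns/xproc"
           xmlns:ex="http://example.com/ns/steps"
           xmlns:xhtml="http://www.w3.org/1999/xhtml">
  <p:declare-step type="ex:mark-headers">
    <p:input port="source"/>
    <p:output port="result"/>
    <p:add-attribute match="xhtml:h1" attribute-name="class" attribute-value="header"/>
  </p:declare-step>

  <p:declare-step type="ex:mark-levels">
    <p:input port="source"/>
    <p:output port="result"/>
    <p:add-attribute match="xhtml:h1" attribute-name="level" attribute-value="1"/>
  </p:declare-step>
</p:library>

<!-- In a pipeline (with the ex prefix bound), one import makes both steps available -->
<p:import href="steps.xpl"/>
<ex:mark-headers/>
<ex:mark-levels/>
```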

Loose ends

The authors wish to address a few more topics, without cluttering the flow of the preceding narrative.

Document properties

All documents flowing through an XProc pipeline have an associated collection of document properties. The document properties are name/value pairs that may be retrieved by expressions in the pipeline language and set by steps. There are standard properties for the base URI, media type, and serialization properties. Authors are free to take advantage of document properties to associate metadata with documents as they flow through the pipeline.
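As a sketch of custom metadata, the step below attaches a property with p:set-properties and reads it back later with the p:document-property() function. The ex:status property and its namespace are hypothetical; property names are QNames, so the xs and ex prefixes are bound explicitly.

```xml
<p:declare-step version="3.0"
                xmlns:p="http://www.w3.org/ns/xproc"
                xmlns:xs="http://www.w3.org/2001/XMLSchema"
                xmlns:ex="http://example.com/ns/meta">
  <p:input port="source"/>
  <p:output port="result"/>

  <!-- Attach a hypothetical ex:status property to the document -->
  <p:set-properties>
    <p:with-option name="properties"
                   select="map { xs:QName('ex:status') : 'draft' }"/>
  </p:set-properties>

  <!-- A later step reads the property back -->
  <p:if test="p:document-property(., xs:QName('ex:status')) = 'draft'">
    <p:add-attribute match="/*" attribute-name="data-status" attribute-value="draft"/>
  </p:if>
</p:declare-step>
```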

One natural question to ask is, when is metadata preserved? It seems pretty clear that the properties associated with a document should survive if the document passes through a p:identity step. Conversely, it seems likely that the output from a DocBook-to-HTML transformation is in no practical sense “the same document” that went in, and preserving document properties is as likely to be an error as not.

Step authors should describe how their pipelines affect the properties of the documents flowing through them.

Irreducible complexity

The syntax of XProc 3.0 is, we believe, a marked improvement over the XProc 1.0 syntax. While much of it is still familiar, some awkward concepts have been removed and a large number of authoring shortcuts have been added. Unfortunately, at the end of the day, complex pipelines are still, quite obviously, complex. XProc is, fundamentally, a tree-based language describing a graph-shaped problem. Until such time as someone invents a useful, graph-shaped syntax, we may be stuck with a certain amount of irreducible complexity.

Why not just use XSLT?

XSLT is a fabulous tool. It appears in almost every XProc pipeline written to process XML. It is very definitely a sharp tool, but it is by no means “small” anymore. The XSLT 3.0 specification runs to more than 1,000 pages; printed in a similar way, the XProc 3.0 specification doesn’t (yet) break 100 pages.

That is absolutely not a criticism of XSLT. But there is value in breaking problems down into simpler parts. Developing, testing, and debugging six small stylesheets is much easier than performing any of those tasks on a single stylesheet that performs all six functions. Combining processing into a single stylesheet also introduces whole classes of errors that simply don’t occur in small, separate stylesheets.

If XSLT will do the job, by all means, use it. But we think there is a role for declarative pipelines that is complementary to XSLT.

References

[Proschel2015] “Engineering of Metabolic Pathways by Artificial Enzyme Channels”. Frontiers in Bioengineering and Biotechnology. Pröschel M, Detsch R, Boccaccini AR, and Sonnewald U. 2015. doi:10.3389/fbioe.2015.00168.

[XDM] XQuery and XPath Data Model 3.1. Norman Walsh, John Snelson, and Andrew Coleman, editors. W3C Recommendation. 21 March 2017. http://www.w3.org/TR/xpath-datamodel-31/

[XInclude] XML Inclusions (XInclude) Version 1.0 (Second Edition). Jonathan Marsh, David Orchard, and Daniel Veillard, editors. W3C Recommendation. 15 November 2006. http://www.w3.org/TR/xinclude/

[XProc30] XProc 3.0: An XML Pipeline Language. Norman Walsh, Achim Berndzen, Gerrit Imsieke and Erik Siegel, editors. http://spec.xproc.org/

[XSLT30] XSL Transformations (XSLT) Version 3.0. Michael Kay, editor. W3C Recommendation. 8 June 2017. http://www.w3.org/TR/xslt-30/

[1] In its defense, it was designed a decade ago. For several years early on the Working Group believed that they might finish XProc 1.0 before XPath 2.0 was finished. At least one working group member had in mind developing an implementation on top of an XPath 1.0 system.