Introduction

The nice thing about standards is that you have so many to choose from. -- Andrew Tanenbaum (Tanenbaum, 2002)

The eXtensible Markup Language (XML) is not only a standard in its own right but also serves as something of a meta-standard, a standard which supports the creation and development of other standards. Jon Bosak, in discussing XML, argued that by providing a "standardized format for data and presentation," it would "eventually force vendors to support the standardized approach, just as user demand for access to the Internet forced vendors to support the Web," Bosak, 1998. XML would thus pressure software vendors to converge on single, standardized markup languages for various purposes. This would in turn bring about a number of benefits, including vastly improved interoperability and freedom for users from dependence on a particular vendor.

To a certain extent, these hopes for XML have been borne out. We can point to a number of cases where a single XML-based markup language has emerged for common use throughout a community. Applications of XML such as MathML and the Chemical Markup Language have provided useful data exchange formats for the sciences, and languages such as Encoded Archival Description (EAD) and the Text Encoding Initiative (TEI) Guidelines have become accepted international standards in their fields.

However, it is relatively easy to point to examples where a single common markup language within an application domain has not emerged. In some cases, this might be ascribed to a combination of market factors and vendor intransigence; no one really expects Microsoft to suddenly abandon the Office Open XML document format, any more than they expect the Apache Software Foundation to abandon the OpenDocument format. Similarly, the legal and marketing battles over rights expression languages have not resulted in any single XML rights expression language dominating that application area. Conflict between the MPEG Licensing Authority and those who would prefer a common rights expression language unencumbered by patent claims has instead left the Open Digital Rights Language and the MPEG-21 rights expression language both in widespread use.

Failure to achieve standardization is a relatively well-studied phenomenon, and the political and economic factors that may derail efforts to reach industry consensus on standards have been examined in a variety of commercial fields. In this paper, I will examine a somewhat less-studied phenomenon: failure to achieve standardization in non-commercial XML language development, in particular the development and application of structural metadata languages within the digital library community over the past two decades. By examining standardization failures where economic concerns are not a primary motivator for avoiding standardization, we may be able to obtain a broader theoretical understanding of standardization processes and perhaps forestall future failures of standardization and the costs such failures impose.

A Brief History of Structural Metadata in Digital Libraries

While it has been a much-discussed topic in the digital library community, structural metadata has not been clearly defined. One of the earliest definitions can be found in Lagoze, Lynch & Daniel, Jr., 1996:

structural data - This is data defining the logical components of complex or compound objects and how to access those components. A simple example is a table of contents for a textual document. A more complex example is the definition of the different source files, subroutines, data definitions in a software suite.

This article separately defined a form of what it called "linkage or relationship data," noting that "Content objects frequently have multiple complex relationships to other objects. Some examples are the relationship of a set of journal articles to the containing journal, the relationship of a translation to the work in its original language, the relationship of a subsequent edition to the original work, or the relationships among the components of a multimedia work (including synchronization information between images and a soundtrack, for example)." Arms, Blanchi & Overly, 1997, building on this definition, called structural metadata "metadata that describes the types, versions, relationships and other characteristics of digital materials," emphasizing the need to simultaneously define discrete sets of digital information and associate those sets with each other, as well as with other forms of metadata which describe those sets or individual items within them. Others have taken a more functional perspective on structural metadata, with the Making of America II (MOA II) project's white paper defining structural metadata as "those metadata that are relevant to the presentation of a digital object to the user. Structural metadata describe the object in terms of navigation and use. The user navigates an object to explore the relationship of a subobject to other subobjects. Use refers to the format or formats of the objects available for use rather than formats stored." Hurley et al., 1999.

While a functional conception of structural metadata is a useful perspective to have, the Making of America II definition is focused on a very limited set of functions relevant to a digital library user: navigation of a complex digital object, and access to that object and its components. This ignores related functional needs of digital library system managers, such as migrating components of a digital object based on their format, or enforcing access control. If we look at the range of functions within a digital library for both end users (navigation and access) and managers (quality assurance, preservation, access control, storage management, metadata management), we can see that structural metadata fundamentally serves two functions: identification and linking (what Lagoze, Lynch & Daniel referred to as "structural data" and "linkage data"). Structural metadata enables the identification of stored digital objects, whether they be composed of multiple files, single files or bitstreams within a file, and the establishment of links of various kinds between identified objects.

Structural metadata so defined has a lengthy history, and not merely within the digital library community. At a fundamental level, the idea of identifying something as a discrete entity and indicating its relationship to other entities is inherent to the concept of markup languages; tagging any segment of text involves identifying it as a separate and unique entity for some purpose, and indicating at least implicitly the tagged text's relationship to other marked entities around it. In the digital library community, however, the term 'structural metadata' has tended to be reserved for markup languages which can impose a structure on a range of different types of objects, and are not specific to one class of texts or objects. A markup language such as the Association of American Publishers DTD ANSI/NISO, 1988 provides structure, but only for one particular class of documents (scientific journal publications). Something like the HyTime specification ISO/IEC, 1992, which provides a more generalized architecture for addressing and hyperlinking, is much closer to the spirit of what the digital library community thinks of as structural metadata.

If a single standard were to be pointed to as the progenitor of later digital library structural metadata efforts, it would probably be the Text Encoding Initiative DTD. While TEI was designed to serve within a fairly specific application area ("data interchange within humanities research," ACH/ACL/ALLC, 1994, Section 1.3), the wide variety of texts subject to analysis in the humanities meant that the TEI DTD had to provide some relatively abstract structural elements in order to support markup of text features beyond those enumerated by more specific tags within its markup language. The default text structure for the TEI DTD thus includes the neutrally-named, recursive structural element <div> (for division) to indicate a structural component within a text, with a "type" attribute to indicate the nature of the specific division where the tag is applied. The TEI DTD also includes a number of elements intended to support hyperlinks between textual components within a document or to elements in other documents. As the pre-eminent standard for much of the early work in SGML within academia, TEI had a tremendous influence on later developments, particularly within the digital library community.
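To make the recursive <div> mechanism concrete, the following Python sketch parses a small fragment modeled loosely on TEI's default text structure and derives a table of contents from the nested divisions. The fragment is invented for illustration, omits TEI namespaces and nearly all of TEI's actual element set, and uses only the standard library's xml.etree.ElementTree; it is not a TEI-conformant processor.

```python
import xml.etree.ElementTree as ET

# A simplified, namespace-free fragment in the spirit of TEI's default text
# structure. Real TEI documents use many more elements and attributes.
SAMPLE = """
<text>
  <body>
    <div type="volume" n="1">
      <head>Volume One</head>
      <div type="chapter" n="1"><head>Departure</head><p>...</p></div>
      <div type="chapter" n="2"><head>Arrival</head><p>...</p></div>
    </div>
    <div type="appendix"><head>Letters</head></div>
  </body>
</text>
"""

def table_of_contents(elem, depth=0):
    """Recursively walk nested <div> elements, yielding (depth, type, heading)."""
    for div in elem.findall("div"):  # direct <div> children only
        head = div.find("head")
        title = head.text if head is not None else ""
        yield depth, div.get("type", ""), title
        # The same element nests to any depth, so one function handles every level.
        yield from table_of_contents(div, depth + 1)

def build_toc(xml_text):
    root = ET.fromstring(xml_text.strip())
    return list(table_of_contents(root.find("body")))

if __name__ == "__main__":
    for depth, kind, title in build_toc(SAMPLE):
        print("  " * depth + f"{kind}: {title}")
```

Because the division's meaning lives in its type attribute rather than in its element name, the same traversal works unchanged whether the divisions are volumes, chapters, diary entries or ledger pages, which is the abstraction later structural metadata efforts borrowed.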

That influence had a direct impact on the Making of America II project's efforts to create a DTD for encoding digital library objects. If you examine the grant proposal for the Making of America II project to the National Endowment for the Humanities Lyman & Hurley, 1998, you will find that the proposal, in discussing the development of structural metadata specifications for the project, explicitly mentions TEI: "An important research objective for this project is to work with the participating institutions, NDLF sponsors, other research libraries and national organizations to...investigate encoding schemes for structural metadata, such as SGML/TEI, table-based models, etc." The proposal also states that an "SGML DTD specialist will be hired to develop structural metadata proposals. The incumbent will coordinate this work with similar work being done elsewhere, for example at Michigan, through TEI, etc." As the author of this paper was the "SGML DTD specialist" in question, I can safely assert that our coordination efforts in this regard might best be characterized as wholesale copying of the TEI's default text structure mechanisms, not only because they provided a fairly abstract mechanism easily applied to the range of materials being digitized in the Making of America II project, but because employing a structure as similar to TEI as possible was seen as likely to ease conversion of structural metadata already in TEI format into the MOA II document format, and vice versa.

The structural mechanisms taken from TEI and used in the MOA II format were carried forward when the Digital Library Federation decided to revise and extend the MOA II DTD to try to advance it from 'interesting research result' into something that might serve as a potential standard for the digital library community. This new schema, named the Metadata Encoding & Transmission Standard (METS), made several changes to linking elements from the original MOA II DTD to try to better align the new schema with the XLink standard which was released during METS' initial development. However, the fundamental structural elements based on TEI's <div> text structure were left unchanged.
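The same pattern survives in METS, where nested mets:div elements in a mets:structMap point, through the FILEID attribute of mets:fptr, to mets:file entries whose mets:FLocat elements give file locations. The Python sketch below, using only xml.etree.ElementTree, shows how identification and linking combine in that arrangement. The element names, attribute names and namespace URIs follow the METS schema, but the document itself, its IDs and its file paths are invented, and it is a minimal fragment rather than a schema-valid METS file.

```python
import xml.etree.ElementTree as ET

# METS and XLink namespace URIs; the object, IDs and paths below are invented.
NS = {"mets": "http://www.loc.gov/METS/", "xlink": "http://www.w3.org/1999/xlink"}
HREF = "{http://www.w3.org/1999/xlink}href"

SAMPLE = """<mets:mets xmlns:mets="http://www.loc.gov/METS/"
           xmlns:xlink="http://www.w3.org/1999/xlink">
  <mets:fileSec>
    <mets:fileGrp USE="master">
      <mets:file ID="IMG001"><mets:FLocat LOCTYPE="URL" xlink:href="images/0001.tif"/></mets:file>
      <mets:file ID="IMG002"><mets:FLocat LOCTYPE="URL" xlink:href="images/0002.tif"/></mets:file>
    </mets:fileGrp>
  </mets:fileSec>
  <mets:structMap>
    <mets:div TYPE="diary" LABEL="Diary, 1862">
      <mets:div TYPE="page" LABEL="Page 1"><mets:fptr FILEID="IMG001"/></mets:div>
      <mets:div TYPE="page" LABEL="Page 2"><mets:fptr FILEID="IMG002"/></mets:div>
    </mets:div>
  </mets:structMap>
</mets:mets>"""

def file_locations(root):
    """Identification: map each mets:file ID to the href of its FLocat."""
    locations = {}
    for f in root.iterfind(".//mets:file", NS):
        flocat = f.find("mets:FLocat", NS)
        if flocat is not None:
            locations[f.get("ID")] = flocat.get(HREF)
    return locations

def resolve(div, locations, path=()):
    """Linking: walk nested mets:div elements and follow each fptr FILEID."""
    path = path + (div.get("LABEL", ""),)
    for fptr in div.findall("mets:fptr", NS):
        yield " / ".join(path), locations.get(fptr.get("FILEID"))
    for child in div.findall("mets:div", NS):
        yield from resolve(child, locations, path)

def structure_to_files(xml_text):
    root = ET.fromstring(xml_text)
    top = root.find("mets:structMap/mets:div", NS)
    return list(resolve(top, file_locations(root)))

if __name__ == "__main__":
    for label, href in structure_to_files(SAMPLE):
        print(label, "->", href)
```

Keeping the hierarchy (structMap) separate from the file inventory (fileSec) is what lets a single stored file participate in more than one structural view of the same object.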

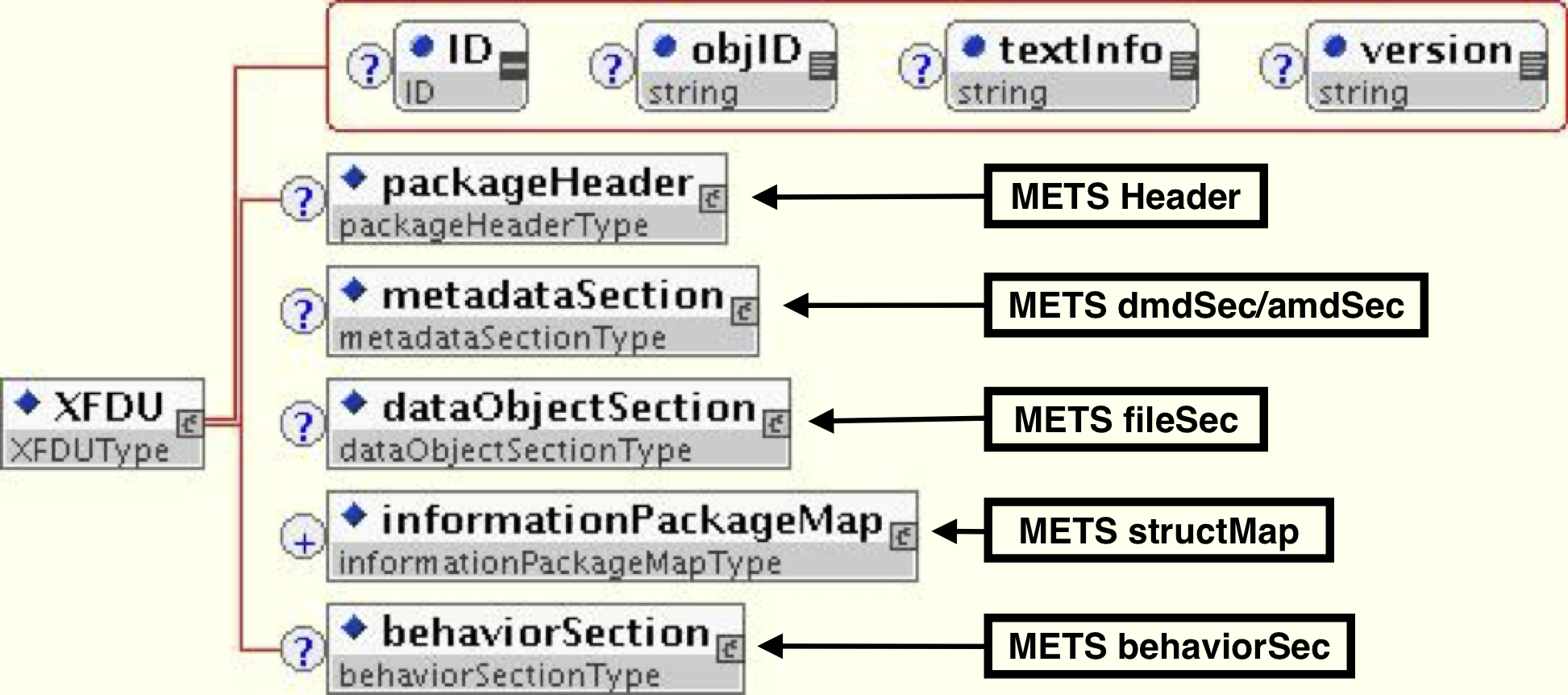

At the same time that the initial development efforts on METS were reaching their conclusion, the Consultative Committee for Space Data Systems released a document which would prove to have a tremendous influence on the digital preservation and digital library communities, the Reference Model for an Open Archival Information System (OAIS) CCSDS, 2002. A significant part of this standard, which defined an abstract model for the operation of archives, was an information model which identified the full set of information necessary to ensure the long-term preservation of data (particularly digital data) and the relationships that those different types of information had to each other. The OAIS Reference Model has become a foundational standard for much of the work on digital preservation within the digital library community, but for practical purposes in the work of digital libraries and scientific data archives, it lacked an essential component. While it defined an abstract information model for digital preservation, it did not provide a reference implementation of that model which could be used for packaging data for preservation purposes. The CCSDS addressed this omission in their later standard, XML Formatted Data Unit (XFDU) Structure and Construction Rules CCSDS, 2004.

The XFDU standard's XML implementation was based heavily on the METS standard. In fact, in the preliminary 2004 White Book version of XFDU, the XML schema for XFDU includes several sections taken directly from the METS schema and imports a schema to support XLink that resides in the METS web area on the Library of Congress website. Figure 1 shows the structure outlined for an XFDU package alongside its METS equivalents.

Figure 1: XFDU Root Structure with METS Equivalents

All of the standards discussed to date share something other than a formalization through the XML Schema language and a fondness for defining abstract, hierarchical structures via a recursively defined element. They all avoided trying to conform to the Resource Description Framework (RDF), in either its abstract model or its more concrete serialization syntaxes. To a great extent this reflects the realities of working with digital library materials. RDF demands the use of URIs for identification of resources, and most digital library operations simply don't want to engage in the effort to coin URIs (and arrange for dereferencing of URIs) for every thumbnail/web derivative/master image they create in digitizing a 300-page manuscript, or to develop and manage an ontology of terms to use for predicates in RDF expressions. However, a desire to include digital library materials within the Linked Open Data world requires a way of presenting digital library materials in a manner compliant with RDF's data model and syntax. The Open Archives Initiative (OAI) has attempted to fulfill this need through the development of the Open Archives Initiative Object Reuse and Exchange (OAI-ORE) specifications, which define both an abstract data model OAI, 2008a and serialization syntaxes compliant with a variety of languages, including RDF/XML OAI, 2008b.
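A rough count shows the scale of the URI-coining burden. The Python sketch below assumes, purely for illustration, a hypothetical base URI, one URI per page, and three derivatives (master, web and thumbnail) per page image; it only mints strings and says nothing about the harder problem of keeping each URI dereferenceable over time.

```python
# Hypothetical base URI and derivative names, chosen only for illustration.
BASE = "http://example.org/objects/ms123"
DERIVATIVES = ("master", "web", "thumbnail")

def mint_uris(page_count, derivatives=DERIVATIVES, base=BASE):
    """Coin one URI for the object, one per page, and one per page derivative."""
    uris = [base]
    for page in range(1, page_count + 1):
        page_uri = f"{base}/page/{page:04d}"
        uris.append(page_uri)
        uris.extend(f"{page_uri}/{d}" for d in derivatives)
    return uris

if __name__ == "__main__":
    print(len(mint_uris(300)))
```

Under these assumptions a 300-page manuscript needs 1 + 300 + 300 x 3 = 1,201 identifiers, each of which an RDF-based approach would expect the institution to manage and keep resolvable.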

On its face, the OAI-ORE specifications do not appear to share other structural metadata standards' love of hierarchical structures. OAI-ORE defines mechanisms that enable someone to define an aggregation of web resources and allow them to be addressed via a single URI, and to do so via another web resource, the OAI-ORE resource map, which provides a machine-readable definition of the aggregation and its contents (along with metadata about the resource map and contents) in a manner conformant with RDF. However, as noted, OAI-ORE enables the aggregation of web resources and allows them to be referenced via a single URI, and such an aggregation is obviously itself a web resource. An OAI-ORE aggregation can therefore recursively include other OAI-ORE aggregations, which may include other OAI-ORE aggregations, etc. Recursive aggregation of OAI-ORE aggregations has been shown to be a successful strategy for packaging digital library materials in a manner which enables RDF-compliant metadata for those aggregations to be made available along with the digital materials themselves McDonough, 2010.
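The recursion can be illustrated with a small graph. The Python sketch below represents RDF triples as plain tuples rather than using an RDF library, and expands nested aggregations into the leaf resources they ultimately contain. The ore:describes, ore:aggregates and ore:Aggregation terms come from the OAI-ORE vocabulary, but the example.org URIs are hypothetical, and for brevity all triples sit in one set, whereas in practice each aggregation would normally be described by its own resource map.

```python
# OAI-ORE vocabulary terms; every example.org URI is hypothetical.
ORE = "http://www.openarchives.org/ore/terms/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
DESCRIBES, AGGREGATES, AGGREGATION = ORE + "describes", ORE + "aggregates", ORE + "Aggregation"
EX = "http://example.org/"

# (subject, predicate, object) triples; a real system would use an RDF library.
TRIPLES = {
    (EX + "rem/book", DESCRIBES, EX + "agg/book"),
    (EX + "agg/book", RDF_TYPE, AGGREGATION),
    (EX + "agg/book", AGGREGATES, EX + "agg/chapter1"),
    (EX + "agg/book", AGGREGATES, EX + "agg/chapter2"),
    (EX + "agg/chapter1", RDF_TYPE, AGGREGATION),
    (EX + "agg/chapter1", AGGREGATES, EX + "img/p1.tif"),
    (EX + "agg/chapter1", AGGREGATES, EX + "img/p2.tif"),
    (EX + "agg/chapter2", RDF_TYPE, AGGREGATION),
    (EX + "agg/chapter2", AGGREGATES, EX + "img/p3.tif"),
}

def objects(graph, subject, predicate):
    """Return the sorted objects of all triples matching subject and predicate."""
    return sorted(o for s, p, o in graph if s == subject and p == predicate)

def leaf_resources(graph, aggregation, seen=None):
    """Recursively expand nested aggregations into the resources they contain."""
    seen = set() if seen is None else seen
    if aggregation in seen:  # avoid infinite recursion if the graph has a cycle
        return []
    seen.add(aggregation)
    leaves = []
    for member in objects(graph, aggregation, AGGREGATES):
        if (member, RDF_TYPE, AGGREGATION) in graph:
            leaves.extend(leaf_resources(graph, member, seen))  # nested aggregation
        else:
            leaves.append(member)  # ordinary web resource
    return leaves

if __name__ == "__main__":
    (book,) = objects(TRIPLES, EX + "rem/book", DESCRIBES)
    for uri in leaf_resources(TRIPLES, book):
        print(uri)
```

Nothing in the graph model itself requires a tree, which is why the sketch guards against cycles; the hierarchy emerges only because each aggregation is itself a web resource that another aggregation can aggregate.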

The utility of a hierarchical structure which can be mapped on to separately stored digital content is surely of no surprise to the markup language community. What perhaps should surprise and concern us is this: each of the above standards was created with the involvement of a large number of individuals and organizations. The initial development of METS involved 29 individuals from 13 institutions, and similarly large numbers have been involved in the creation of XFDU and OAI-ORE (a somewhat larger number of organizations, in fact); the number of people and organizations involved in developing the TEI Guidelines is larger still. There is a case to be made that all of the efforts mentioned above which followed the creation of the TEI Guidelines were, in fact, reinventing the wheel (and a wheel into which much thought and planning had been placed), and if so, that represents a substantial waste of intellectual energy and other resources. If this is the case, some consideration should be given to why academic XML developers are reinventing wheels, so that, if possible, we can avoid such wasted effort in the future.

Necessity is the Mother of Reinvention

Academic digital library operations obviously do not occur within a vacuum. They are embedded within a variety of sometimes overlapping and mutually constitutive institutional frameworks, including the library or libraries in which they are developed, the university in which a library exists, governmental entities which may set legal and administrative requirements for operations, and any number of external public and private funding agencies. If we assume that digital libraries' motivations for reinvention are something other than simply Poe's imp of the perverse, a logical place to begin an inquiry into those motivations is to examine the relationships which exist between this wide variety of actors and what incentives and disincentives they establish for pursuing particular technological courses.

A critical, if often undiscussed, aspect of digital library work in the academy is its reliance on grant funding for a significant portion of its activities. Academic libraries have rarely found themselves with an excess of funding for their services, and the development of digital library services and collections over the past two decades, as a brand new set of activities for libraries, required either reallocating funding from other existing library activities or obtaining new funding streams to support digital library work. Since the National Science Foundation's Digital Library Initiative (1994-1998), libraries have relied on grant funding from public agencies such as the National Science Foundation, the Library of Congress and the National Endowment for the Humanities, along with private foundations such as the Andrew W. Mellon Foundation, the Alfred P. Sloan Foundation and the Knight Foundation, to enable the creation of new services and collections.

The Making of America II project involved funding from two separate sources for different phases. The first planning phase of the project was supported by funding from the Digital Library Federation; the second testbed phase was supported by funding from the National Endowment for the Humanities. The planning phase was scoped to focus on four activities:

Identifying the classes of archival digital objects that will be investigated in the MOA II Testbed Project....

Drafting initial practices to create digital image surrogates for the classes of archival digital objects selected for this project....

Creating a structural metadata "working definition" for each digital archival class included in the project....

Creating an administrative metadata "working definition" for digital images to be used in the MOA II Testbed project. UC Berkeley Library, 1997

The structural metadata working definitions were to be based on an analysis of behaviors to be enabled for the classes of digital archival objects in question, not necessarily innate features of the objects themselves: "Behavior, metadata and technical experts will collaborate to determine the exact metadata elements that are required to implement the behaviors defined for each class of digital archival object." A functional approach to defining structural metadata for digital archival objects will obviously depend heavily on the classes of objects examined, and in the case of MOA II those were 1. continuous-tone photographs; 2. photograph albums; 3. diaries, journals and letterpress books; 4. ledgers; and 5. correspondence Hurley et al., 1999, p. 11. As the MOA II report notes, the discussions of structural metadata within the project were influenced by TEI, but also by work occurring at the Library of Congress for its National Digital Library Program Library of Congress, 1997.

With this background, we can see several factors in play leading to a decision to pursue creation of a new structural metadata standard rather than employ a pre-existing one. First, digital library programs in this period needed to aggressively pursue grant funding to remain viable, and grant funding for development projects is not likely to be available for simply using pre-existing tools, standards and software. There is thus a strong financial motivation to design projects which will result in new 'product,' a concrete outcome that a grant agency can use to determine whether the results of its funding have been successful or not. A related factor is prestige and reputation. Libraries are more likely to receive further grant funding if they can point to prior examples of their having successfully created new systems and standards on previous grant-funded projects. Developing a new structural metadata language, particularly one designed to try to serve as a standard for the larger community, not only provides an original concrete work product that is more likely to be funded, but if successful, also positions the library to obtain further grant funding. Financial incentives for grant-driven work in digital libraries have thus traditionally favored developing new systems rather than building upon existing ones.

Political considerations also entered into the MOA II project's decision to pursue a new structural metadata language rather than use an existing one such as the TEI Guidelines. There had obviously been prior work in the field of structural metadata before the MOA II project commenced, and the various grant and planning documents for MOA II specifically mention TEI, the Library of Congress NDLP efforts, as well as the original Making of America project undertaken by the University of Michigan and Cornell University, the University of Michigan's structural metadata work on JSTOR, and the U.C. Berkeley E-Bind project which sought to develop a standard for making digital archival materials available online. The MOA II project was developed in an environment in which there were already several emergent potential standards for structural metadata, and the project was conceived of as an explicitly political one, which would endeavor to foster harmonization and standardization among those in the digital library community and in particular those participating in the Digital Library Federation efforts:

To create a national digital library, it will be necessary to define: a) community standards for the creation and use of digital library materials and; b) a national software architecture that allows digital materials to be shared easily over the network. It is possible to pursue both these goals concurrently.

The National Digital Library Federation, a program of the Council on Library and Information Resources, was created to help promote opportunities and address problems inherent in the creation of digital libraries. Five of its sponsors are joining in the present proposal to begin to address these two issues. They will work together with other NDLF sponsors, the Research Libraries Group (RLG), the Corporation for National Research Initiatives (CNRI), OCLC, five Council on Library and Information Resources/American Council of Learned Societies (CLIR/ACLS) Taskforces to Define Research Requirements of Formats of Information, The Library of Congress and others to develop best practices that will be required to implement a national digital library system. Lyman & Hurley, 1998

For the Making of America II project, then, the choice to reinvent an existing wheel was due to a mixture of financial, status and political considerations. Under that set of incentives, a choice to produce a new potential standard which significantly replicated pre-existing work made more strategic sense than adopting an existing one. The situation with respect to the METS initiative and its modifications to the MOA II DTD represented a rather different set of incentives and motivations, however.

The MOA II DTD was, with respect to its purpose as a grant project, successful, and also served its purpose as a boundary object reasonably well given the structure of the grant project which produced it. But it did suffer from several failings which prevented it from serving as an effective standard for wider digital library work. The first failing was the result of the MOA II project's focus on a limited range of archival classes, none of which included time-based media. Digital libraries interested in audio/visual works found the structural aspects of the MOA II DTD extremely weak, leaving them unable to distinguish adequately between distinct byte streams or time-based segments within a media file. Another failing which emerged in discussions among Digital Library Federation members regarding the MOA II DTD was that in certain respects it failed in its mission as a boundary object by being overly prescriptive in the non-structural metadata elements associated with digital library objects. Many DLF members, including the Library of Congress, the University of California and Harvard, felt that they needed more flexibility with respect to non-structural metadata for the DTD to completely serve local needs. In short, the MOA II DTD was seen as lacking the interpretive flexibility necessary to simultaneously enable collaboration while allowing users of the DTD to 'agree to disagree' on some aspects of metadata practice McDonough, Myrick & Stedfeld, 2001. Despite these failings, within two years of its initial release, the MOA II DTD had seen a relatively high degree of use among the DLF membership, including its use in several production projects at various libraries.
This led to a fairly common situation for any form of information technology seeing long-term use; there was a strong desire for change among the user base of the MOA II DTD and an expressed desire for a successor markup language to be created, but a sufficiently large user base for the current DTD to result in a certain degree of path dependency and a desire to avoid radical change.

The desire for a successor format did lead to a discussion among DLF members as to whether it might be better to consider an existing alternative schema to replace the MOA II DTD, with SMIL and the MPEG-7 formats being considered for their support for various forms of media. Members also discussed whether a successor format should keep using basic XML, employing the newly released XML Schema language from the W3C, or change over to using the RDF data model and the RDF schema language. In these discussions, path dependency played a major role, with organizations that had already invested in a significant amount of infrastructure to support basic XML not wishing to migrate to use of RDF, and those who had already begun developing infrastructure based on the MOA II DTD not wishing to abandon that work in favor of an entirely new standard such as SMIL or MPEG-7.

The outcome of these various discussions was that a new successor format to the MOA II DTD should be created, but that successor, METS, should to the extent possible be compatible with existing MOA II-conformant documents, so that new features (e.g., support for addressing time-based media) could be added without sacrificing support for older MOA II documents. The demand for greater 'interpretive flexibility' with respect to descriptive and administrative metadata was met by making these sections optional, and eliminating the use of specific metadata elements for administrative metadata within the METS schema. With these changes, older MOA II documents could, with very minor and easily automated modifications, be made to conform to the new METS schema, while new METS documents could take advantage of greater capabilities for imposing external structure on time-based media. Given these changes, it can be debated whether METS constitutes a reinvention of the MOA II wheel or merely the addition of a shiny hubcap, but importantly, DLF members felt that it had to be developed and promulgated as a new and separate standard, so that control over the successor format could be placed in the hands of a more diverse and representative set of players than the five institutions that participated in the Making of America II project. So, in addition to the user demands for flexibility, there were user demands for accountability which required that a successor format be established. Thus, the true reinvention for METS was not so much the technological changes as the social and political apparatus that was developed to support its development and maintenance.

At the same time that the METS initiative of the DLF was working on its first iteration of the METS XML Schema, the Consultative Committee for Space Data Systems chartered a new working group on Information Packaging and Registries (IPR) to develop recommendations on data packaging standards that were better suited to the Internet and that employed XML, the clear emerging standard for data description languages at that time. Like other efforts in the structural metadata space, the IPR working group examined other existing standards efforts, but ultimately decided against them:

CCSDS prefers to adopt or adapt an existing standard rather than start from scratch to meet identified requirements. So after the development of scenarios and requirements, the IPR WG evaluated existing technologies and alternative solutions prior to any XFDU development. The efforts studied were METS developed under a Digital Library Federation initiative, Open Office XML File Format developed by SUN and other members of the Open Office Consortium, the MPEG-21 efforts in ISO, and the IMS Content Packaging Standard developed by the IMS Global Learning Consortium. There was significant discussion on adopting the METS standard but the focus on digital libraries datatypes and the lack of a clear mapping from the METS metadata to the OAIS RM led to the decision to use the flexible data/metadata linkage from METS but to implement an independent XFDU mechanism. Sawyer et al., 2006, p. 3

While MOA II, METS and XFDU share a common lineage that goes back to the TEI Guidelines, the OAI-ORE standard does not. Funded by the Andrew W. Mellon Foundation, the OAI-ORE work within the Open Archives Initiative (OAI) was a logical progression of OAI's efforts to develop web interoperability standards, with the prior OAI Protocol for Metadata Harvesting establishing a mechanism for distributed repositories to share metadata about content that they hold, and OAI-ORE moving past dissemination of metadata to dissemination of actual content. A significant part of this new effort would be the definition of a data model and serialization syntax for exchange of what OAI-ORE refers to as compound digital objects, digital content that can be constituted by multiple types of content with varying network locations and differing relationships between the object's components OAI, 2007. At that level, the OAI-ORE work group recognized its similarity to a number of prior efforts to develop packaging syntaxes, including METS and the MPEG-21 DIDL standard Van de Sompel & Lagoze, 2006. However, there were two fundamental contextual differences which led to the OAI-ORE effort following a very different track than previous packaging standard efforts within the digital library community.

The first was intended use context. Prior packaging standards had been developed as technologies that would primarily be employed in 'the back of the house'; they were intended to allow digital librarians to describe and manage digital library materials internally, and while it was hoped they might provide an interoperability syntax as well, early implementations of standards like METS were generally seen only by technical services, IT and digital library staff in libraries. METS documents were not seen by the public or exposed to the open web; instead, they would be transformed via XSLT or other technologies into a web-presentable interface to digital content. MOA II and METS were specifically not designed for a solely web context, and in fact, design decisions on such issues as indicating a path to content files assumed that content might not be available through a web interface. OAI-ORE, on the other hand, was designed specifically to provide a packaging syntax for compound digital objects for use in a web environment, in a manner that complied with the W3C's web architecture.

The use context thus ended up determining a second, technological context: the Web. As a number of white papers and presentations by the OAI-ORE initiative make clear, this is a somewhat problematic technological context for what the OAI-ORE group wished to accomplish, as the standard web architecture promulgated by the World Wide Web Consortium is built on the notion that a URI identifies a resource, where a resource is both an abstraction and a singleton, a "time varying conceptual mapping to a set of entities or values that are equivalent" World Wide Web Consortium, 2002. What OAI-ORE required was a way of identifying an aggregation of resources whose individual members are not all mapped from a single URI. The solution to OAI-ORE's dilemma was to draw upon the idea of a named graph (and the RDF data model) and declare the existence of a new type of web resource, an aggregation, which would 1. have a URI assigned to it; 2. have a resource map document, with its own distinct URI, identifying the components of the aggregation; and 3. have that resource map URI be easily and automatically discoverable given the URI for the aggregation. Unlike the other standards efforts delineated above, the technological framing for OAI-ORE's work was the W3C's Resource Description Framework and graph theory, not the XML schemas used by other structural metadata standards, and in fact OAI-ORE is rather ecumenical about serialization syntax, allowing resource maps to be expressed in "Atom XML, RDF/XML, RDFa, n3, turtle and other RDF serialization formats" Open Archives Initiative, 2008c.
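To make the aggregation/resource map split more concrete, the following Python sketch builds the core statements of an OAI-ORE Resource Map and writes them out as N-Triples, a line-based RDF syntax that is a subset of Turtle. The ore: terms used (ResourceMap, Aggregation, describes, isDescribedBy, aggregates) come from the ORE vocabulary; everything else is an assumption for illustration: the example.org URIs, the helper names, and the use of a hash URI (the Resource Map URI plus a hypothetical "#aggregation" fragment) as one possible way of making the Resource Map discoverable from the Aggregation URI. The sketch also omits the metadata about the Resource Map itself (creator, modification date and so on) that a production Resource Map would typically carry; the ORE Abstract Data Model should be consulted for what is actually required.

```python
"""Minimal sketch of an OAI-ORE Resource Map serialized as N-Triples.

Assumptions (illustrative only): the example.org URIs, the helper names,
and the hash-URI convention (ReM URI + "#aggregation") used so that the
Resource Map can be discovered from the Aggregation URI.
"""

RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
ORE = "http://www.openarchives.org/ore/terms/"


def aggregation_uri_for(rem_uri: str) -> str:
    """Hypothetical convention: mint the Aggregation URI by adding a fragment
    to the Resource Map URI, keeping the two URIs distinct."""
    return rem_uri + "#aggregation"


def resource_map_for(agg_uri: str) -> str:
    """Recover the Resource Map URI from a hash-style Aggregation URI."""
    return agg_uri.split("#", 1)[0]


def resource_map_triples(rem_uri, aggregated):
    """Return (subject, predicate, object) triples describing one Aggregation."""
    agg_uri = aggregation_uri_for(rem_uri)
    triples = [
        (rem_uri, RDF_TYPE, ORE + "ResourceMap"),
        (rem_uri, ORE + "describes", agg_uri),
        (agg_uri, RDF_TYPE, ORE + "Aggregation"),
        (agg_uri, ORE + "isDescribedBy", rem_uri),
    ]
    # Each component keeps its own URI and network location; the Aggregation
    # only points at it.
    for resource in aggregated:
        triples.append((agg_uri, ORE + "aggregates", resource))
    return triples


def to_ntriples(triples):
    """Serialize URI-only triples as N-Triples, one statement per line."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples) + "\n"


if __name__ == "__main__":
    rem = "http://example.org/objects/book1/rem"  # hypothetical URI
    pages = [  # hypothetical components on two different hosts
        "http://example.org/images/book1/page1.tiff",
        "http://mirror.example.net/book1/page2.jpg",
    ]
    print(to_ntriples(resource_map_triples(rem, pages)))
```

The components here deliberately live on two different hosts: nothing requires them to share a URI, and only the Aggregation, a new resource with its own URI, ties them together through a separately addressable Resource Map.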

OAI-ORE's decision to implement a new structural metadata standard was thus ultimately driven by the Open Archives Initiative's desire to make content available within a web context. Pursuing that goal meant assuming certain use cases and not others, and required aligning OAI-ORE's technological design with the larger web architecture paradigm established by the W3C. Under those constraints, adopting any of the major pre-existing structural metadata standards would have been an impossibility. The initial choice of use cases established a technological frame that required creation of a new standard.

Why Standards Sometimes Aren't

For a theoretical school predicated upon the notion that technological determinism is a fatally flawed approach to the study of technology, sociotechnical systems case studies can often seem a bit pre-determined in their outcome. Whether examining disastrous failures of technology, such as the Challenger explosion, or technological success stories, such as the rise of the Moog synthesizer, in-depth examination of the complex interactions surrounding a technological success or failure can make the trajectory of a particular technology seem inevitable. If one reads through the details on the interactions between NASA's various centers and the contractor responsible for the Shuttle's boosters (Morton Thiokol) and the motivations driving each, one can be left with the strong impression that the only surprising aspect of the Challenger's explosion was that such an event took so long to occur Presidential Commission, 1986. Similarly, while Trevor Pinch goes out of his way to show the multiple technological trajectories the analog synthesizer might have followed after its initial development by pioneers such as Don Buchla and Robert Moog, his discussion of the influence of path dependence on the synthesizer's development makes the outcome seem very close to pre-determined Pinch, 2001.

While the above discussion of the history of structural metadata standards within the digital library community might seem to be a further contribution in the field of technological predestination, that is not the intent. While sociotechnical influences may make certain technological trajectories more likely than others in particular circumstances, the above cases could have gone differently. The MOA II project could have decided that an application profile of the TEI Guidelines would adequately support its needs for structural metadata; OAI-ORE might have decided that it needed to support aggregation outside the context of the Web. What is important about these cases is that they each demonstrate what an actor-network theorist might label a failure of enrollment for an existing standard; faced with pre-existing standards with which they might have aligned their efforts, those responsible for MOA II, METS, XFDU, and OAI-ORE all chose to pursue an independent course. These outcomes were not predestined, but a variety of sociotechnical factors did influence the outcome in each case. If we have a better understanding of what those factors are and their influence on processes of standardization, people responsible for standardization efforts within the community may be able to make more informed decisions about whether a pre-existing standard might be worth adopting, or whether a new standard effort is worth the time and investment it will require. Given that it has been known for some time that failures of standardization can have real costs both for those who might employ those standards and for the communities they might serve Katz & Shapiro, 1985, and that repeated efforts to achieve standardization within a particular technological space carry their own costs in terms of time and labor invested, more insight into our community's reasons for reinventing the wheel seems potentially valuable.

Sociotechnical theory in general, and Actor-Network theory in particular, has characterized processes of standardization in technology as ones of enrollment, translation and alignment, in which an emerging potential area of agreement becomes stabilized as those actors (human and non-human) already involved in a technology seek to enroll other actors in the project of that technology through a process of translation. If that process succeeds, enrolled actors find their social worlds coming into alignment; they share a mutual understanding and language for that technology and its role and purpose, to a degree where the technology becomes invisible, an assumed part of everyone's shared landscape, a black box that does not need examination or consideration (Callon, 1991, Law, 1999, Law & Mol, 2002).

This sounds simple enough, but the reality is anything but. As Star, Bowker & Neumann, 2003 point out, these processes involve negotiations across a variety of communities of practice with different pre-existing needs, views, constraints and external connections, and Hanseth et al., 2006, in their examination of electronic patient record systems, make clear that processes of translation can suffer from complexity effects, and that efforts to enroll others in a technological project can, in the right circumstances, produce the opposite effect, something they refer to as reflexive standardization. When we consider cases of standardization in the digital library community, we need to remember that it is in many ways not a particularly well-defined community, that its participants engage with a large number of further communities of practice (academic, commercial and governmental), and that they are all already engaged in complex social and institutional networks which influence both their ability to translate others into a standardization program and their susceptibility to being translated themselves.

Looking at the cases discussed above, the problems inherent in establishing a standard within a complex, overlapping set of social networks become clear. The case of the Making of America II involves the digital library community (and the larger library and university communities in which it is enmeshed) along with the complex social networks which exist between universities and grant agencies. The goals of the grant project participants were relatively clear; they wanted to promote greater interoperability among digital libraries by standardizing how digital objects are encoded. While that goal aligned with those of the grant-making agencies involved, and opened up the possibility of collaboration between the members of the grant project and the DLF and NEH, obtaining funding required the grant participants to align themselves with the grant agencies' larger goals in order to be enrolled as actors in the grant funding network. And the grant agencies in this case were interested in funding research (as opposed to development). Research funding agencies have an inherent slant towards neophilia; they exist to support the creation of new knowledge and practices Godin & Lane, 2012. Given this, achieving alignment among all the participating communities favored an outcome in which a brand-new standard emerged, rather than one in which existing knowledge practices and standards were applied.

Similar complexities with respect to negotiating standardization across multiple community boundaries exist if we look at the case of OAI-ORE. While OAI-ORE was also seeking to promote standardization around the definition and exchange of digital objects within the digital library community, that was only one of a variety of communities involved in the discussions regarding OAI-ORE. The meetings of the OAI-ORE group included representation from digital libraries and repositories, but also from the publishing industry (Ingenta and the Nature Publishing Group) and major software companies (Microsoft and Google) OAI, 2007. The OAI-ORE effort also wished to ensure that it was aligned with other standards efforts, specifically including the Resource Description Framework and the W3C's web architecture standards more generally. This diverse set of players, with pre-existing sociotechnical networks and agendas, complicated efforts to achieve mutual alignment on a standard at a variety of levels, up to and including the choice of a syntax for serialization (academics within the community were more fond of RDF/XML and RDFa, while the participants from the software industry had existing infrastructure built on the Atom syndication format and hence preferred that). Cultivating alignment among the participants, given a need for ease of implementation and conformance with the RDF data model and W3C web architecture, as well as the differing use cases and technology preferences of academic digital repositories and commercial players, was difficult enough. Trying to enroll such a diverse set of players while also building on pre-existing standards within the digital library community would have been an insurmountable obstacle.

Given the tremendous difficulties inherent in negotiating among diverse actors enmeshed in a multitude of networks, the strategies a standards effort deploys to enroll actors in its project are obviously critical, and we see a variety of strategies playing out in the case of structural metadata standards. For the Making of America II project, strategies to promote enrollment included enlisting the support of a significant independent player within the digital library community, the Digital Library Federation, as a funder, a strategic step which had implications for further strategic decisions with respect to technological development. MOA II also employed a strategy of pursuing technological novelty to persuasive effect. This allowed the project to distance its own efforts from a community that might be seen as tangential to the digital library community, removing the TEI and digital humanities communities from the set of communities the project sought to immediately enroll, and allowing MOA II to present its work to the digital library community as an entirely de novo effort within the structural metadata space.

The METS effort pursued an entirely different set of strategies in an effort to enroll members of the digital library community in its sociotechnical project. One of its primary strategies was to establish a maintenance mechanism which was answerable to that community. This assurance that the community would have a voice in the future direction of the standard (and thus some ability to align it with their own interests) made METS more attractive as a potential standard. This effect was enhanced by modifications made to the MOA II DTD in producing METS, which increased its interpretive flexibility for members of the digital library community. A schema like METS, with significant flexibility for local interpretation and implementation, means that actors do not really have to modify their processes or tools much to align their efforts with METS, making the cost of enrollment in the METS project low.

XFDU has been similar to MOA II in some of its strategic decisions, choosing to distance its efforts from those being pursued by related but tangential communities as a way to increase enrollment among the principal communities of practice with which it is concerned. However, unlike METS, which pursued interpretive flexibility as a mechanism for easing enrollment of actors, XFDU chose the opposite strategy, developing a standard whose terminology and structures are tightly bound to the preexisting language and standards of the Consultative Committee for Space Data Systems with respect to archiving. A standard less open to interpretation might discourage some users who occupy a liminal position in the space science data community, but could encourage those at the core of the community, as it aligns well with the existing sociotechnical systems and languages that the community already employs.

Standardization efforts adopt their strategies to enroll further actors in their projects based on a variety of factors, including the networks of associations which already exist for potential enrollees in a sociotechnical project, as well as the networks in which standards developers are already enmeshed. What lessons might those interested in markup languages and standards take from the digital library community's efforts with respect to structural metadata?

An important lesson for grant agencies which fund standards efforts is that the fundamental nature of research grant funding, as it exists today in the academy, tends to favor reinventing wheels over using the one already in hand. Grant funding is typically awarded based on a project's likelihood of generating new knowledge and practices. As long as that is true, standards efforts which rely upon external grant funding are unlikely to try to further develop pre-existing work to make it more useful. There is a lesson here for potential standards developers as well; relying on external funding means aligning yourself in some ways with the sociotechnical project of your funding agency, so careful consideration of what that agency's goals (explicit and implicit) are, and whether they in fact align with your efforts, is critical. It may be that some standards projects would be better off avoiding the use of grant funding.

Another lesson for potential standards efforts is that, everything else being equal, the larger and more complex the network of actors you are seeking to enroll, the greater the difficulty in aligning all of those actors successfully with a project. MOA II and the XFDU effort intentionally sought to limit the size of the network they were trying to influence in order to simplify the process of enrolling actors in their work, while METS and OAI-ORE both attempted to be more inclusive, which affected their ability to enroll actors. In the case of METS, achieving enrollment meant modifying the schema to better support local customization, but that flexibility comes at a cost in terms of ease of interoperability. Additionally, the interpretive flexibility added to METS to enable enrollment of actors in the digital library community ultimately ended up working against METS' ability to enroll other communities, such as the space science data community. OAI-ORE needed to support multiple serialization syntaxes to enroll its initial participants, a result which complicated implementation, and given that the Atom serialization is currently deprecated, this may not have been as successful in the long-term enrollment of commercial actors as hoped.

Another important sociotechnical lesson for those seeking to create standards is that, in surveying the networks of actors potentially implicated in their efforts, they should pay attention to non-human actors as well as human ones, and, in the case of pre-existing standards as non-human actors, to those standards' trajectories. METS provides an interesting case to consider. While it was widely adopted,[1] there is reason to doubt that its adoption was related to its original purpose and intent. Tests of METS as a format for interoperability (DiLauro et al., 2005, Nelson et al., 2005, Abrams et al., 2005, Anderson et al., 2005) showed questionable performance at best; METS' interpretive flexibility allows a freedom of implementation that, bluntly, is the enemy of interoperability. How, then, are we to explain METS' wide adoption if it fundamentally fails as an exchange syntax, one of its primary design goals?

I would argue that while the digital library community needs structural metadata, it has lacked any significant need to share structural metadata. Digital library software does require the ability to identify the components of a complex digital object and their relationships, and METS fulfills that role well for a variety of software implementations, but that is purely a local system need. To a great extent, digital libraries' need (or desire) to exchange content seems to have been exaggerated, and when such a need exists, it often appears to be met through other, simpler technologies. The Afghanistan Digital Library (http://afghanistandl.nyu.edu/index.html) at NYU, for example, while it uses METS as a mechanism for encoding digitized books, allows books to be exported from the digital library as PDF files. Many repositories collecting electronic theses and dissertations harvest them by using the OAI-PMH protocol to gather metadata (including the URIs for content) and then web harvesting the content files directly. It may be that in the case of structural metadata for digital libraries, the question should not be "why do we keep reinventing the wheel?" but "why do we keep inventing wheels when the community wants a pulley?" Examining the trajectories of existing standards may be invaluable to those trying to determine whether to launch a new one, and to what purpose.
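The metadata-then-files harvesting pattern described here can be sketched in a few lines of Python using only the standard library. The sketch assumes a response to an OAI-PMH ListRecords request with metadataPrefix=oai_dc, and it assumes, as a repository-specific convention rather than anything the protocol guarantees, that links to content files appear as http(s) values of dc:identifier. The sample record, its identifiers and its URL are hypothetical, the sample response is trimmed (a real one also carries responseDate and request elements), and the HTTP request, resumption-token paging and the subsequent download of the files are all left out.

```python
import xml.etree.ElementTree as ET

# Namespaces defined by OAI-PMH 2.0 and the oai_dc metadata format.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "oai_dc": "http://www.openarchives.org/OAI/2.0/oai_dc/",
    "dc": "http://purl.org/dc/elements/1.1/",
}

# Hypothetical, trimmed ListRecords response containing one record.
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:example.edu:etd-1</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>A Hypothetical Thesis</dc:title>
          <dc:identifier>https://repository.example.edu/etd/1/thesis.pdf</dc:identifier>
          <dc:identifier>etd-0001</dc:identifier>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>"""


def content_urls(list_records_xml: str) -> dict:
    """Map each OAI record identifier to the http(s) URLs found in its
    dc:identifier fields, i.e. candidate content files for a web harvest.
    Treating dc:identifier as a content link is an assumed convention."""
    root = ET.fromstring(list_records_xml)
    found = {}
    for record in root.iterfind("oai:ListRecords/oai:record", NS):
        oai_id = record.findtext("oai:header/oai:identifier", namespaces=NS)
        urls = [
            el.text.strip()
            for el in record.iterfind("oai:metadata/oai_dc:dc/dc:identifier", NS)
            if el.text and el.text.strip().startswith(("http://", "https://"))
        ]
        found[oai_id] = urls
    return found


if __name__ == "__main__":
    print(content_urls(SAMPLE))
```

Run against the sample, content_urls returns {'oai:example.edu:etd-1': ['https://repository.example.edu/etd/1/thesis.pdf']}, skipping the non-URL identifier. No structural metadata in the METS or OAI-ORE sense is involved at any point: a flat list of file locations is all this kind of harvester consumes.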

Fundamentally, standards are agreements involving a wide variety of actors intended to facilitate interactions among those actors. They evolve in a sociotechnical landscape of actors, human and non-human, already enmeshed in a complex web of relationships of varying kinds. The job of a standards developer is ultimately not simply authoring a technical document, but charting a path for all of the relevant actors to reconfigure their network of arrangements to include a new, non-human actor, the standard. Successful creation of a standard involves engineering the social as well as the technical, and failure to account for both will result in a standard which is not a standard at all.

References

[Abrams et al., 2005] Abrams, S., Chapman, S., Flecker, D., Kriegsman, S., Marinus, J., McGath, G. & Wendler, R. (Dec. 2005). Harvard's Perspective on the Archive Ingest and Handling Test. D-Lib Magazine 11(12). doi:https://doi.org/10.1045/december2005-abrams. Retrieved from: http://www.dlib.org/dlib/december05/abrams/12abrams.html

[ANSI/NISO, 1988] American National Standards Institute & The National Information Standards Organization (Dec. 1, 1988). Electronic Manuscript Preparation and Markup: American National Standard for Electronic Manuscript Preparation and Markup. ANSI/NISO Z39.59-1988. New Brunswick, NJ: Published for the National Information Standards Organization by Transaction Publishers.

[Anderson et al., 2005] Anderson, R., Frost, H., Hoebelheinrich, N., Johnson, K. (Dec. 2005). The AIHT at Stanford University; Automated Preservation Assessment of Heterogeneous Digital Collections. D-Lib Magazine 11(12). doi:https://doi.org/10.1045/december2005-johnson. Retrieved from: http://www.dlib.org/dlib/december05/johnson/12johnson.html

[Arms, 2012] Arms, W. Y. (Aug. 2012). The 1990s: The Formative Years of Digital Libraries. Library Hi Tech 30(4). doi:https://doi.org/10.1108/07378831211285068. Retrieved from: https://www.cs.cornell.edu/wya/papers/LibHiTech-2012.pdf

[Arms, Blanchi & Overly, 1997] Arms, W. Y., Blanchi, C. & Overly, E. A. (Feb. 1997). "An Architecture for Information in Digital Libraries." D-Lib Magazine. Retrieved from https://web.archive.org/web/20011201112301/http://www.dlib.org:80/dlib/february97/cnri/02arms1.html

[ACH/ACL/ALLC, 1994] The Association for Computers and the Humanities (ACH), The Association for Computational Linguistics (ACL) & The Association for Literary and Linguistic Computing (ALLC) (1994). Guidelines for Electronic Text Encoding and Interchange (P3). Chicago: Text Encoding Initiative. Retrieved from http://www.tei-c.org/Vault/GL/P3/index.htm

[Bosak, 1998] Bosak, J. (October, 1998). Media-Independent Publishing: Four Myths about XML. Computer 31(10). IEEE Computer Society. doi:https://doi.org/10.1109/2.722303. Retrieved from http://ieeexplore.ieee.org/iel4/2/15590/00722303.pdf

[Callon, 1991] Callon, Michel (1991). Techno-Economic Networks and Irreversibility. A Sociology of Monsters: Essays on Power, Technology and Domination, J. Law (ed.) Routledge, London, pp. 132-161.

[CCSDS, 2002] Consultative Committee for Space Data Systems (January 2002). Reference Model for an Open Archival Information System (OAIS). CCSDS 650.0-B-1. Blue Book. Washington, DC: CCSDS Secretariat, National Aeronautics and Space Administration.

[CCSDS, 2004] Consultative Committee for Space Data Systems (Sept. 15, 2004). XML Formatted Data Unit (XFDU) Structure and Construction Rules. White Book. Washington, DC: CCSDS Secretariat, National Aeronautics and Space Administration. Retrieved from https://cwe.ccsds.org/moims/docs/Work%20Completed%20(Closed%20WGs)/Information%20Packaging%20and%20Registries/Draft%20Documents/XML%20Formatted%20Data%20Unit%20(XFDU)/XML%20Formatted%20Data%20Unit%20(XFDU)-Structure%20and%20Construction%20Rules.doc

[DiLauro et al., 2005] DiLauro, T., Patton, M., Reynolds, D. & Choudhury, G. S. (Dec. 2005). The Archive Ingest and Handling Test: The Johns Hopkins University Report. D-Lib Magazine 11(12). doi:https://doi.org/10.1045/december2005-choudhury. Retrieved from: http://www.dlib.org/dlib/december05/choudhury/12choudhury.html

[Godin & Lane, 2012] Godin, B. & Lane, J. P. (2012). A century of talks on research: what happened to development and production? International Journal on Transitions and Innovation Systems 2(1), pp. 5 - 13. doi:https://doi.org/10.1504/IJTIS.2012.046953. Retrieved from: https://www.researchgate.net/profile/Joseph_Lane3/publication/264816655_A_century_of_talks_on_research_what_happened_to_development_and_production/links/5425b33f0cf26120b7ae9ba6.pdf

[Hanseth et al., 2006] Hanseth, O., Jacucci, E., Grisot, M. & Aanestad, M. (Aug. 2006). Reflexive Standardization: Side effects and complexity in Standard Making. MIS Quarterly 30, special issue on standard making, pp. 563-581. doi:https://doi.org/10.2307/25148773. Retrieved from: http://www.jstor.org/stable/25148773

[Hurley et al., 1999] Hurley, B., Price-Wilkin, J., Proffitt, M. & Besser, H. (Dec. 1999). The Making of America II Testbed Project: A Digital Library Service Model. Washington, DC: Digital Library Federation and the Council on Library and Information Resources. Retrieved from https://www.clir.org/wp-content/uploads/sites/6/pub87.pdf

[ISO/IEC 1992] International Organization for Standardization (ISO) & International Electrotechnical Commission (IEC) (1992). Information Technology -- Hypermedia/Time-based Structuring Language (HyTime). ISO/IEC 10744:1992. Geneva: ISO/IEC.

[Katz & Shapiro, 1985] Katz, M. L. & Shapiro, C. (June, 1985). Network Externalities, Competition and Compatibility. The American Economic Review 75(3), pp. 424-440. Retrieved from: http://www.jstor.org/stable/1814809

[Lagoze, Lynch & Daniel, Jr., 1996] Lagoze, C., Lynch, C. A., and Daniel Jr., R. (July 12, 1996). The Warwick Framework: A Container Architecture for Aggregating Sets of Metadata. Cornell Computer Science Technical Report TR96-1593. Retrieved from https://ecommons.cornell.edu/handle/1813/7248

[Law, 1999] Law, John (1999). Traduction/Trahison: Notes on ANT. Center for Science Studies, Lancaster University. Retrieved from: http://www.lancaster.ac.uk/fass/resources/sociology-online-papers/papers/law-traduction-trahison.pdf

[Law & Mol, 2002] Law, John & Mol, Annemarie (2002). Complexities: Social Studies of Knowledge Practices. Durham, NC: Duke University Press.

[Library of Congress, 1997] Library of Congress (1997). Structural Metadata Dictionary for LC Digitized Material. Washington, DC: Author. Retrieved from: https://memory.loc.gov/ammem/techdocs/repository/structmeta.html

[Lyman & Hurley, 1998] Lyman, Peter & Hurley, Bernard J. (1998). The Making of America II Testbed Project. Berkeley, CA: University of California. Retrieved from https://web.archive.org/web/20000829033352/http://sunsite.berkeley.edu:80/MOA2/moaproposal.html

[McDonough, 2010] McDonough, Jerome P. (2010). Packaging Videogames for Long-Term Preservation: Integrating FRBR and the OAIS Reference Model. Journal of the American Society for Information Science & Technology 62(1): pp. 171-184. doi:https://doi.org/10.1002/asi.21412

[McDonough, Myrick & Stedfeld, 2001] McDonough, Jerome P., Myrick, Leslie & Stedfeld, Eric (2001). Report on the Making of America II DTD Digital Library Federation Workshop.

[Nelson et al., 2005] Nelson, M. L., Bollen, J., Manepalli, G. & Haq, R. (Dec. 2005). Archive Ingest and Handling Test: The Old Dominion University Approach. D-Lib Magazine 11(12). doi:https://doi.org/10.1045/december2005-nelson. Retrieved from: http://www.dlib.org/dlib/december05/nelson/12nelson.html

[OAI, 2007] Open Archives Initiative (2007). Open Archives Initiative - Object Reuse and Exchange Report on the Technical Committee Meeting, January 11, 12 2007. Carl Lagoze & Herbert Van de Sompel (Eds.). Retrieved from: https://web.archive.org/web/20070709215139/http://www.openarchives.org:80/ore/documents/OAI-ORE-TC-Meeting-200701.pdf

[OAI, 2008a] Open Archives Initiative (2008a). ORE Specification - Abstract Data Model. Carl Lagoze, Herbert Van de Sompel, Pete Johnston, Michael Nelson, Robert Sanderson & Simeon Warner (Eds.). Retrieved from: https://www.openarchives.org/ore/1.0/datamodel

[OAI, 2008b] Open Archives Initiative (2008b). ORE User Guide - Resource Map Implementation in RDF/XML. Carl Lagoze, Herbert Van de Sompel, Pete Johnston, Michael Nelson, Robert Sanderson & Simeon Warner (Eds.). Retrieved from: https://www.openarchives.org/ore/1.0/rdfxml

[Open Archives Initiative, 2008c] Open Archives Initiative (2008c). ORE User Guide - Primer. Carl Lagoze, Herbert Van de Sompel, Pete Johnston, Michael Nelson, Robert Sanderson & Simeon Warner (Eds.). Retrieved from: https://www.openarchives.org/ore/1.0/primer

[Pinch, 2001] Pinch, Trevor (2001). "Why You Go to A Music Store to Buy a Synthesizer: Path Dependence and the Social Construction of Technology." Path Dependence and Creation. Raghu Garud and Peter Karnøe (Eds.). Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

[Presidential Commission, 1986] Presidential Commission on the Space Shuttle Challenger Accident (June 6, 1986). Report to the President By the Presidential Commission on the Space Shuttle Challenger Accident. Washington, DC: National Aeronautics and Space Administration. Retrieved from: https://spaceflight.nasa.gov/outreach/SignificantIncidents/assets/rogers_commission_report.pdf

[Sawyer et al., 2006] Sawyer, D., Reich, L., Garrett, J. & Nikhinson, S. (March 2006). NASA Report to NARA on OAIS Based Federated Registry/Repository Research: May 2005 - January 2006. Retrieved from: https://www.archives.gov/files/applied-research/papers/oasis.pdf

[Star, 2010] Star, S. L. (2010). This is Not a Boundary Object: Reflections on the Origin of a Concept. Science, Technology & Human Values 35(5), pp. 601-612. doi:https://doi.org/10.1177/0162243910377624

[Star, Bowker & Neumann, 2003] Star, S. L., Bowker, G. C. & Neumann, L. J. (2003). Transparency beyond the individual level of scale: Convergence between information artifacts and communities of practice. Digital Library Use: Social Practice in Design and Evaluation. Ann Peterson Bishop, Nancy A. Van House & Barbara P. Buttenfield (Eds). Cambridge, MA: The MIT Press.

[Tanenbaum, 2002] Tanenbaum, A. (2003). Computer Networks. 4th Edition. Upper Saddle River, NJ: Prentice Hall PTR.

[UC Berkeley Library, 1997] The UC Berkeley Library (1997). The Making of America II Planning Phase Proposal. Retrieved from: https://web.archive.org/web/19980424235800/http://sunsite.berkeley.edu:80/MOA2/moaplan.html

[Van de Sompel & Lagoze, 2006] Van de Sompel, Herbert & Lagoze, Carl (2006). The OAI Object Re-Use & Exchange (ORE) Initiative. CNI Task Force Meeting, Washington DC, December 4, 2006. Retrieved from: https://www.openarchives.org/ore/documents/ORE-CNI-2006.pdf

[World Wide Web Consortium, 2002] World Wide Web Consortium (2002). What does a URI Identify? Norman Walsh & Stuart Williams (Eds.). Retrieved from: https://www.w3.org/2001/tag/doc/identify

[1] The University of Illinois OAI-PMH Data Provider Registry (http://gita.grainger.uiuc.edu/registry/) lists METS as the sixth most widely implemented metadata format in OAI-PMH repositories, following Dublin Core, two varieties of the MARC bibliographic standard, the RFC 1807 bibliographic format, and the NCBI/NLM journal publishing schema.