Markup is a modern solution to an old problem: how to make what we know storable, searchable, and retrievable. Markup allows us to enrich our content so that we, and others, have access to its structure. The extra layers of meaning added by markup not only enable us to say more about the whats, hows, and whys of our content, they also allow others to find meaning we may not have known was there. Kruschwitz2005 describes using HTML tags to determine the terms used to index documents: if a term appears in at least two different markup contexts (e.g. headings, titles, anchors, meta tags), it is important enough to index. The author of the HTML almost certainly did not have this use in mind when marking up their content. Nonetheless, the information is there to be used.

The genesis for this paper is in threads of conversation that have enlivened markup conferences for years (Balisage, in particular, but also XML Prague, XML Amsterdam, XML London, and most recently, and especially, Markup UK 2018). They have occurred since the beginning of markup conferences; probably longer. This is not an explicilty technical paper because these are not explicitly technical conversations. Many of you have participated in these same conversations and may already imagine that you know where this discussion is going: declarative vs. imperative markup, XML vs HTML, XML vs JSON, JavaScript vs the world. You’re right, of course; those discussions are necessary. But the adversarial position is not where we want to start.

Instead, we want to begin with the proximate and specific event which is the origin of this paper by these authors: Tommie Usdin’s excellent Markup UK keynote Shared Tag Sets as Social Constructs.

Markup culture is time-saving, energy saving, and application enabling.

— B. Tommie Usdin (2018)

One of the defining features of markup is that it is readable by human beings. Readability doesn’t immediately and magically make every marked up document understandable (even if we sometimes call XML “self-describing” or speak of “intuitive markup”), but it gives us a place to stand, a place from which we might be able to understand.

In the days before Microsoft Word laid waste to the office document market, there were many word processors: literally dozens. They all had proprietary, binary file formats that made the content of your documents utterly opaque. This vendor lock-in worked to the advantage of vendors, or was perceived to: once you had a big enough commitment to SomeProprietaryTool, you were going to stick with it. Of course, programmers and capitalism being what they are, there were programs that could convert between formats: they did so poorly, with low fidelity in most cases, and only between specific versions of each piece of software. (Writing such software today would no doubt be a violation of the Digital Millenium Copyright Act in the United States.)

Not only has markup diminished the extent to which lock-in is possible, it has also given us an ecosystem of tools and users and vendors that work explicitly to make high fidelity transformations between formats not only possible but (relatively) easy.

All of this is prelude to, groundwork for, the most important takeaway from Usdin’s paper:

Markup is culture and community.

— B. Tommie Usdin (2018)

We understand commuinity to be a feeling of fellowship with others, as a result of sharing common attitudes, interests, and goals. Human beings are social creatures. Sharing is a key benefit for everyone in a community. Sharing allows us to leverage the experiences, the triumphs and the failures of others. It fosters trust and cooperation. Declarative markup encourages sharing. It’s easy to see and touch and change. You can scribble it on a napkin if you have a great idea in the pub! Closed, binary formats discourage or even outright prevent sharing.

Culture forms around tag sets, our use cases shape our tag sets, and our tag sets shape our culture:

-

DocBook & DITA: a culture of authoring.

-

JATS & BITS: a culture of interchange and archving.

-

TEI: a culture of content analysis and annotation.

Some tag sets are about authoring, some about interchange and archiving, some about analysis and annotation. Shared wisdom shapes our markup vocabularies.

We, collectively, get a huge benefit from sharing. The specifications and recommendations and tools that we use can assure that what we produce is technically correct, within the very narrow lines of “correct” laid out in those documents and tools, but what we share isn’t codified explicitly in all those places. An ordinary person, working alone, isn’t likely to produce something the existing community would recognize. But Usdin points out that just as sharing shapes our markup vocabularies, our markup vocabularies shape what we share. Markup vocabularies shape our notion of wisdom.

Usdin’s example is overlap, which she describes with the metaphor of the "tree-shaped scars" that are etched into the minds of XML veterans. These scars prevent us from being able to consider overlapping structures. Whatever our technology, language, or software of choice, we end up with scars that channel the paths of what we produce. In proposing to create a new community around declarative markup, part of our aim is to ask about the types of scars that are caused by generic markup. We would like to argue, in fact, that generic markup may be broad enough that the paths it etches are as close to being universal paths for managing text as it is possible to find. To put it another way: rather than being a specific way of thinking, maybe markup is a state of mind.

Descriptive markup is not just the best approach of the competing markup systems; it is the best imaginable approach.

— James H. Coombs et al. (1987)

We tend to think of markup in terms of technology, and of technologies: XML, RDF, JSON, HTML, and so on. But what if we instead think of these technologies as instantiations of a generalized tendency that has shaped how we approach texts and the data they contain, and been shaped by those texts in turn? And what if we broaden our perspective, and think about markup as something that needn’t require so much as a single logic circuit or microchip?

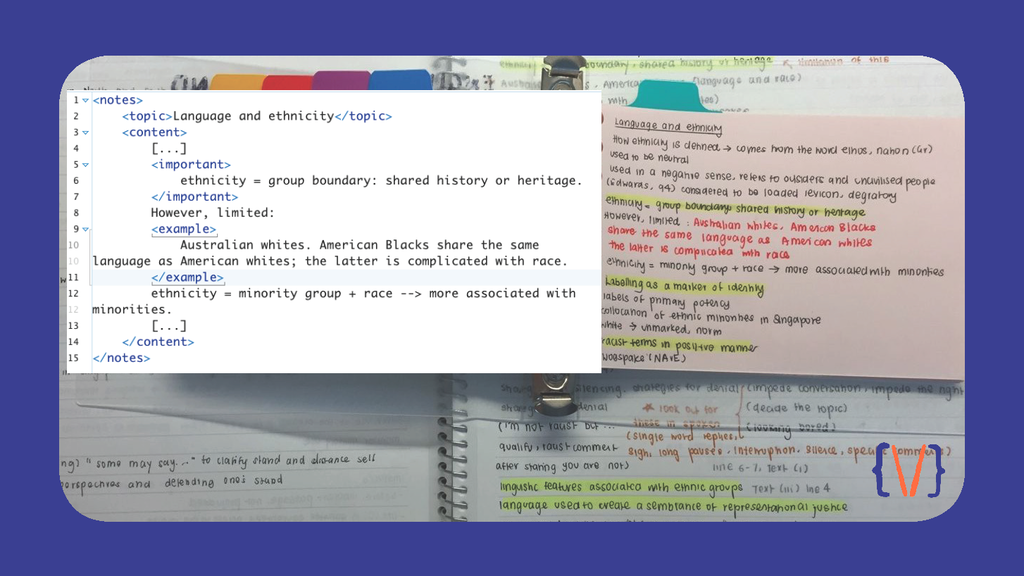

Why do highlighter pens come in packs of many colours? Take a

brief tour of the #studygrammer hashtag on

Instagram, and you will find some beautiful uses of highlighters to

categorize information in textbooks and study journals: the colours

are used to indicate themes, study topics, and types of knowledge

(facts vs. analyses, for example), as well as marking days and dates, or types of

activity. Highlighters also mark out titles,

different levels of headings, quotations, and technical terms. In brief, highlighters

are used to add metadata

to the text on the page.

The highlighting on the right is not so different from the highlighting on the left:

Figure 1: Markup and highlighting

Of course there are differences—you can’t use XSLT on the first one, for one thing—but the general impulse behind these approaches to the text is the same. This is what we mean by markup as a state of mind.

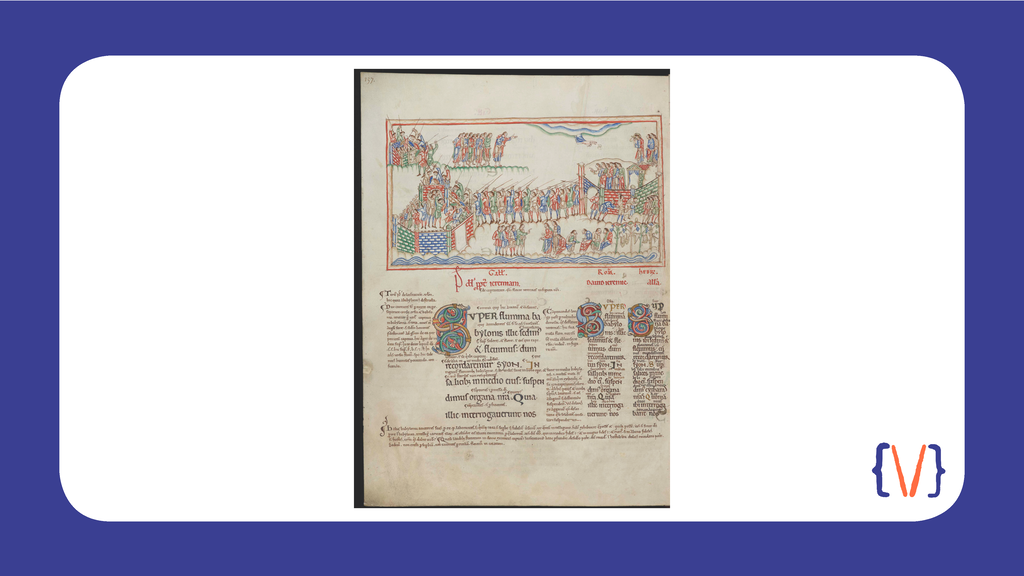

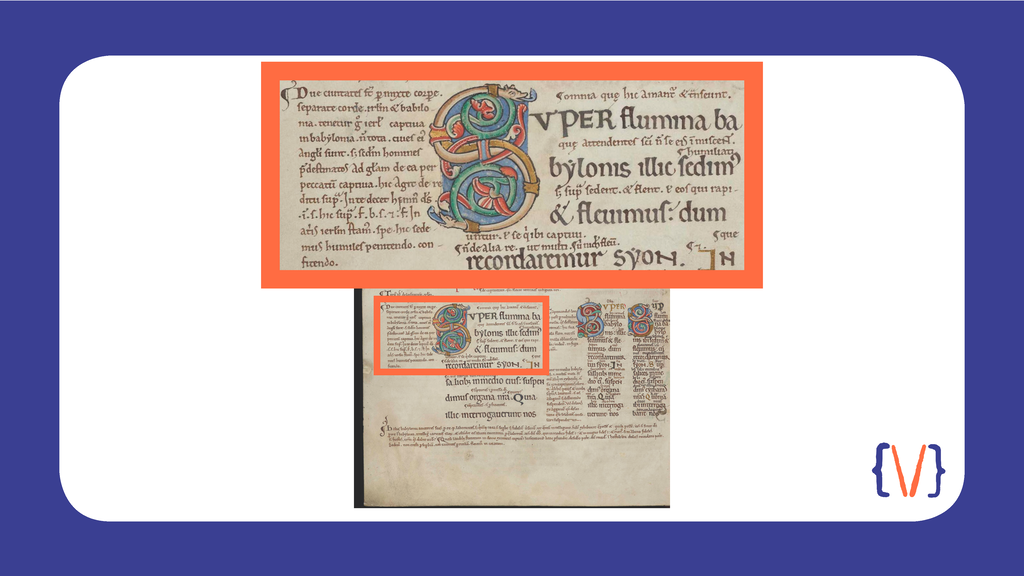

Lest we think this approach to text is a modern one, let’s look at something older: perhaps the most beautiful manuscript to come out of Anglo-Saxon England. This is the Eadwine Psalter, produced in Canterbury in the 12th century. It’s named after the monk Eadwine, who apparently coordinated the ten or more scribes and illuminators responsible for the work as a whole.

Here’s a representative page, showing the psalm which in modern English translation begins "By the waters of Babylon".

Figure 2: Eadwine Psalter: folio 243v

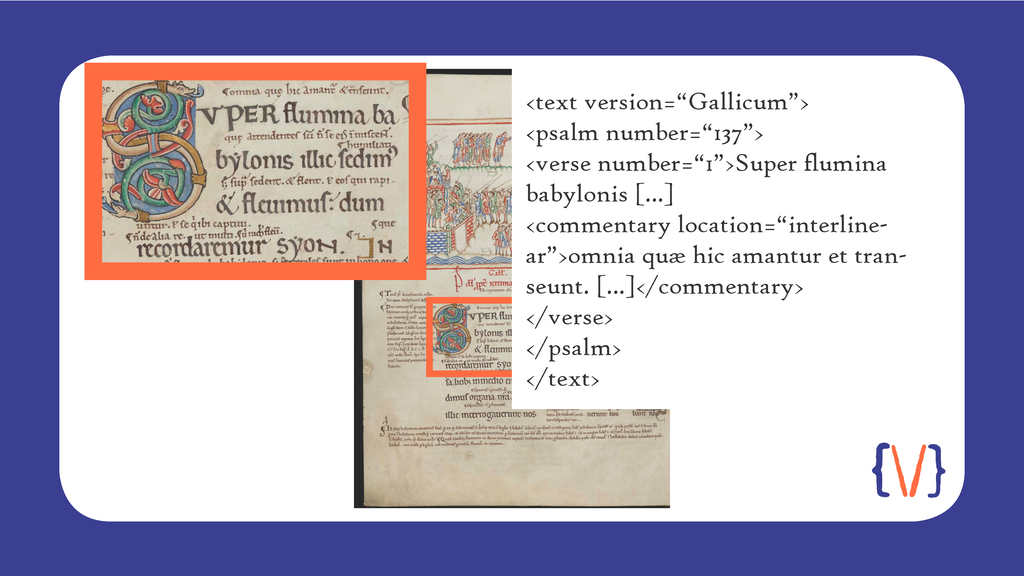

The red headings indicate three different Latin versions of the Psalms, from three different sources known as the Gallican, the Roman, and the Hebrew. The Gallican, as the most popular version in the Western Church at the time, has the largest script size. It also has expository notes in Latin in the margins and at the foot of the page.

Figure 3: Three sources

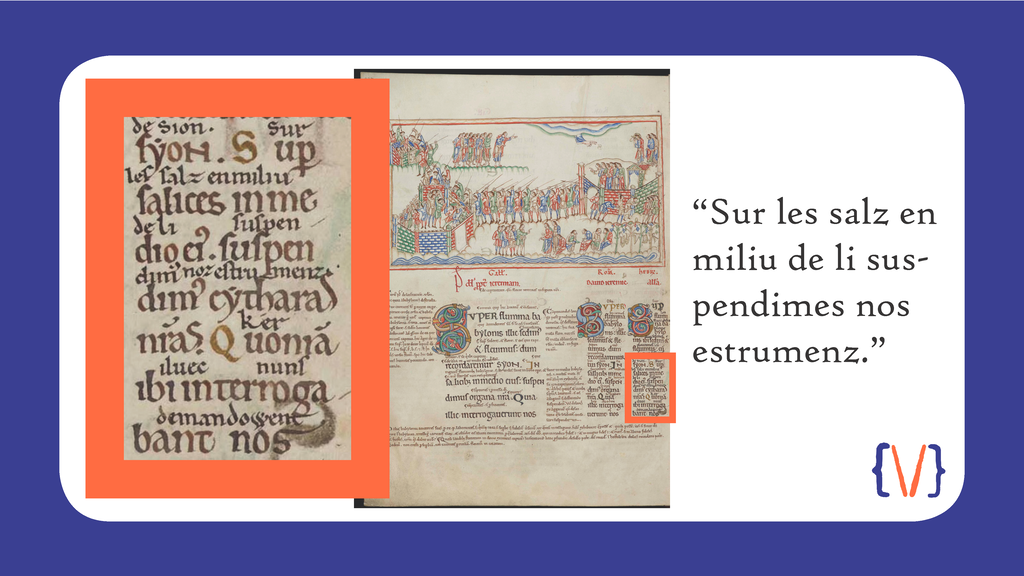

The Roman version features on interlinear translation into Old English, while the Hebrew features a translation into Norman French.

Figure 4: Norman French interlinear gloss

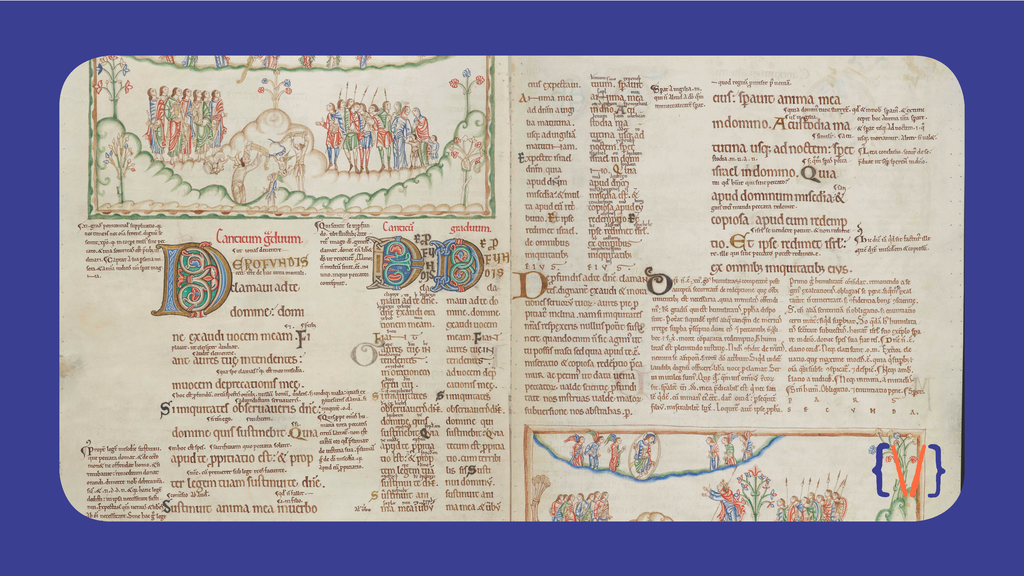

Where are the features we might call metadata or markup on this page? Each psalm begins with an illustration, and a richly illuminated capital initial for all three Latin versions. This clearly demarcates the individual texts within the wider work. There are paragraph symbols and larger capitals in the marginal commentary. Different scripts are used for the various types of text. The psalm texts are in a script palaeographers might describe as an English vernacular minuscule, as is the Latin commentary, while the translations use a pointed insular script. So the scripts are used to separate Latin from the two vernacular languages.

Figure 5: Page detail

Notice also the differences in text size and colour. Headings are in red (this practice is the root of the term rubric, from the Latin for “red chalk”). Expository notes are significantly smaller than the text. This is a practical decision, in order to fit in enough text, but it also indicates the hierarchy of the words: word of God; human commentary.

Figure 6: Eadwine Psalter: folios 235v-236r

In a sense, the richness of the presentation is also a kind of

metadata. Part of the aim with this approach to sacred text was to

highlight its importance and spiritual resonance. We could think of

this beautiful presentation style as a way of rendering markup such as

text type = sacred.

Of course, Eadwine and his contemporaries didn’t have an XML document or a Relax NG schema to guide their choice of presentational features. But the consistency and care taken in creating texts whose underlying conceptual structure is so clear to the reader seems very similar to the mindset of today’s “scribes” marking up content declaratively. Eadwine’s Psalter simply conflates the steps of marking up text according to its different functions and meanings, and deciding what that markup means for the text’s look and feel.

If markup is a state of mind, a common approach to adding information to our own or another’s text, then markup needn’t be difficult to sell to people who are put off by angle brackets and doctypes and programming languages. That matters, because we are advocates for markup, and specifically for declarative markup, as a beneficial approach to data in terms of capture, storage, and presentation. Despite the growth of fields such as digital humanities and corpus linguistics, markup remains niche knowledge in many academic disciplines that could benefit from its functionality. The digital humanities person is too often a lone warrior, thought of by their fellow faculty as a cross between a web designer and tech support. And a recent introduction to corpus linguistics recommended that, as a first step, one throw away the angle brackets and metadata from pre-compiled corpora, because they just get in the way.

Part of the mission of the Markup Declaration—both as a document and as a movement—is to provide outreach; to bring a principled approach to declarative markup to those who are already using it, and to introduce the idea of markup to those who should be using it. Central to this will be the notion of generalized markup as a state of mind, encouraging experimentation with markup based on the principles of the Declaration.

Structuring information by means of markup implies a conceptual process as well as a technical one.

— Daniela Goecke et al. (2010)

The markup community isn’t just about languages and parsers and technical specifications. Those things, and the people who create them, are essential, of course. But markup is equally about theory and text and information. Data stored opaquely in applications lock you into those applications: whether they are desktop applications or “cloud” applications implemented in Javascript running in the web browser. There are many people whose work is all about theory and text and information who are still locked in to proprietary software because that’s what they’ve always known. They don’t know they need markup. We want to tell them.

Markup frees us from the tyranny of vendor lock-in to opaque, binary formats. It gave us the power to encode information in ways that we could leverage to power our own applications. Some of those applications became serious tools that allowed us to do even more (some became unserious tools that also allow us to do even more).

This virtuous circle continues today. We begin with texts and ideas, from a medieval book of psalms to modern legal documents and everything in between. From there we use declarative markup to explore those ideas, building new tools and applications. Some of these tools and applications are useful to small communities, some are useful to large communities. Many are still being invented and reported on at conferences like this one. Each of these applications leads to new texts or new ideas, beginning the cycle again.

It’s important to remember that markup has had some huge successes. The fact that the most significant word processors in the world store all of their data in XML is remarkable and of almost incalculable value. Nevertheless, it still stings a bit that publishing documents on the web, arguably the single thing XML was most specifically designed to support and absolutely best at, has been relegated to a single markup vocabulary that isn’t exactly XML. The W3C has recently closed down the XML Activity, signaling, not an end of interest in XML, but certainly a reduction in the priority of XML among the communities that can afford to belong to the W3C.

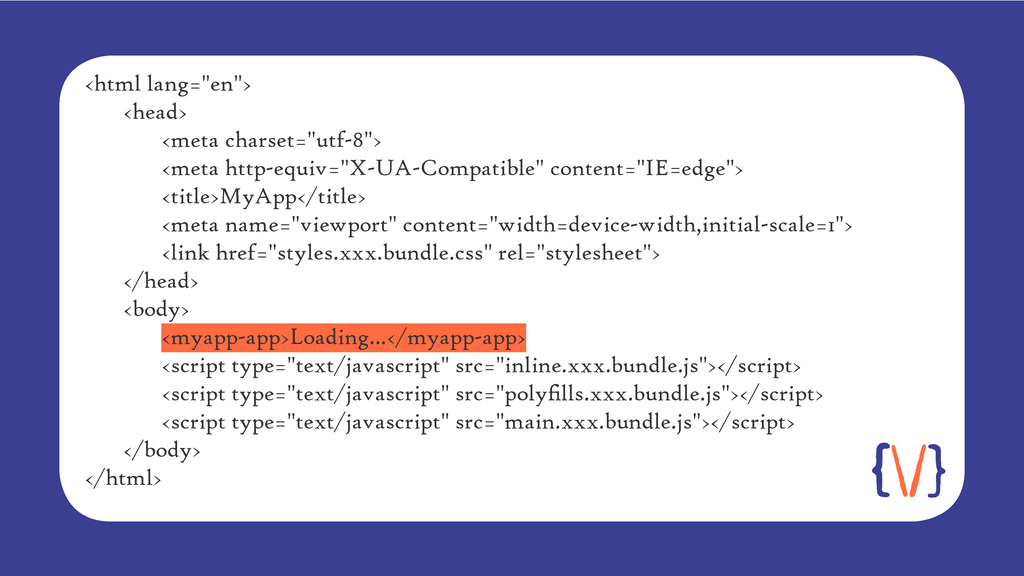

We, as a community, must recognize that we share some of the blame for this. At its peak, working groups were formed to make XML do absolutely anything and everything. It’s easy to look back and think maybe some of those ideas weren’t really playing to XML’s strongest suit, or were perhaps a tad over-engineered. We also allowed, or worse encouraged, the conversations with other communities to become adversarial. Support for XML waned. Erosion of support for XML has unfortunately led to (or at least has happened coincidentally with) an apparent erosion in support for declarative markup more generally. Where XForms provides a clear separation between model and instance, the web favors a random scattering of form elements and reams of imperative code to process it in a fairly ad hoc manner:

-

Markup escaped in strings (

"<p id=\"x\"><i>something</p>") -

Magic names (“

$ref”, “x-para”) -

etc.

JSON is a perfectly reasonable declarative format, ideally

suited to a wide range of applications. Unfortunately, the rush to

store everything that travels across the web in JSON (in part because

it fits into programming language shaped scars) has pushed actual

content down into strings of escaped markup and magic tokens barely

less opaque than binary formats. JavaScript frameworks, in an effort

to impose JavScript shaped scars on the web, have taken over web

applications to the point where any actual markup appears to be little

more than an accident of design in the domain-specific languages that

JavaScript interpolates. Many modern “web pages” serve up little more

than a naked <html> element and a pointer to

absolutely astonishing amounts of JavaScript.

Figure 7: MyApp

But there are good reasons to be optimistic. Markup conferences continue to flourish and provide supportive environments for learning and development. Academics in digital humanities and corpus linguistics, archivists, librarians, and others are showing us daily that markup adds inestimable value to our cultural heritage. The web community is full of voices that champion clean, readable HTML documents styled with CSS. The CSS community has responded by actively developing features that make that design possible.

Figure 8: CSS Zen Garden

All communities are intimidating to outsiders, technical communities particularly so. Our vision for the Markup Declaration is to shape a dedicated declarative-markup community: a community that is visibly open and deliberately welcoming; a community that seeks out newcomers who have markup-shaped data that they’re squashing into proprietary-software-shaped holes; a community that helps people take their first steps into markup, as enthusiastically as it helps those with thirty years of experience and a knotty technical problem to discuss.

And since communities are people, Markup Declaration is you, if you want it to be.

Figure 9: Come to the mark(up) side...

References

[Coombs1987] Coombs, James H., Allen H. Renear, and Steven J. DeRose.

Markup systems and the future of scholarly text processing

.

Communications of the ACM. Volume 30. Issue 11. November, 1987. doi:https://doi.org/10.1145/32206.32209.

[Goecke2010] Goecke, Daniela, Harald Lüngen, Dieter Metzing, Maik Stührenberg, and Andreas Witt.

Different views on markup

in Andreas Witt and Dieter Metzing (eds.), Linguistic modeling of information and markup languages. Springer. 2010. doi:https://doi.org/10.1007/978-90-481-3331-4_1.

[Kruschwitz2005] Kruschwitz, Udo. Intelligent document retrieval: exploiting markup structure. Springer. 2005. doi:https://doi.org/10.1007/1-4020-3768-6.