Introduction and Pre-History

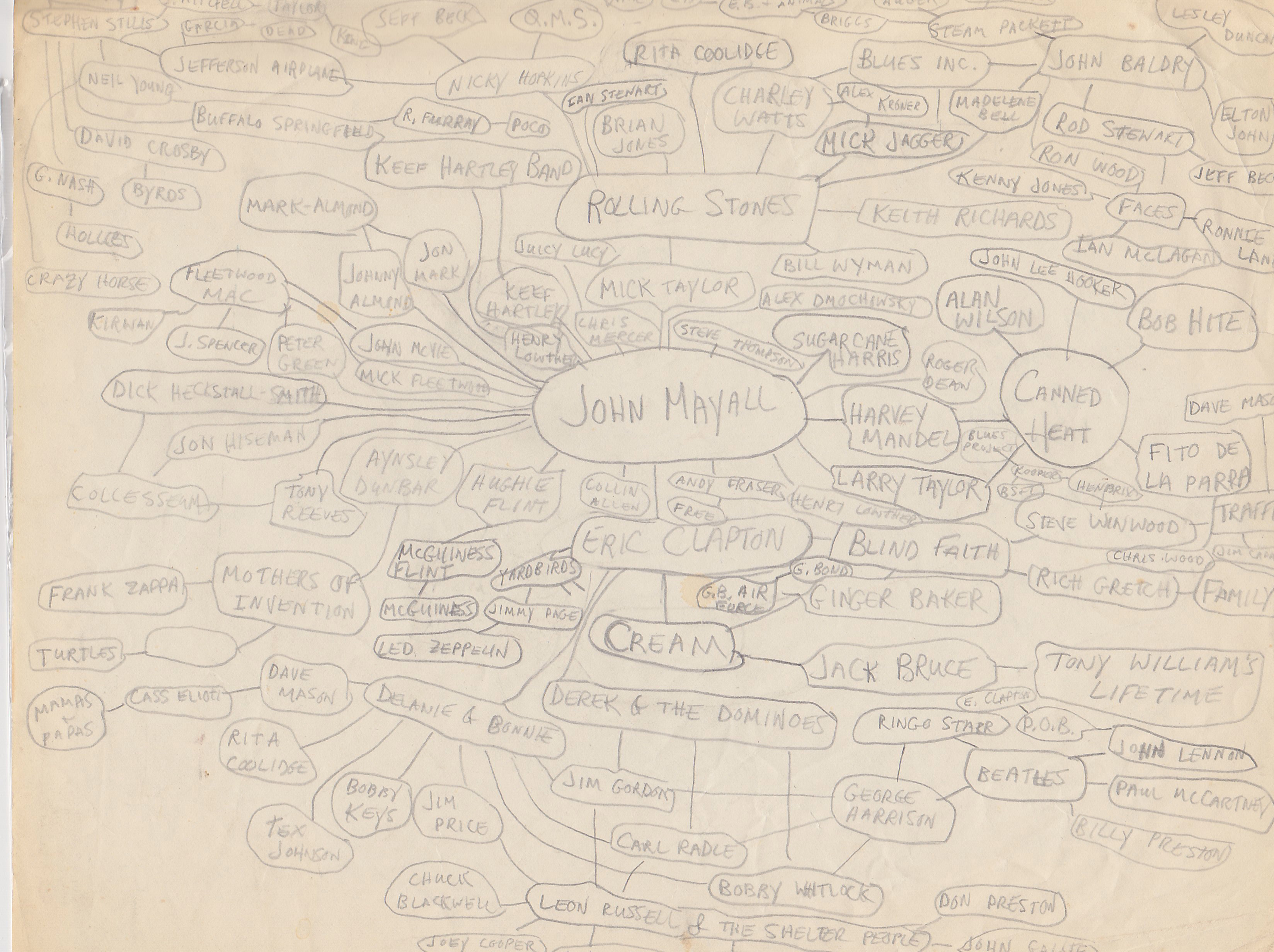

A long, long time ago in a galaxy much like our own, one of the authors, Ken Sall, was fascinated by the number of musicians who had played with John Mayall. [1] Armed only with paper, pencil and lots of record album jackets, he drew a diagram with Mayall at the center and arcs pointing to musicians who played with him, such as Eric Clapton, Peter Green, Mick Taylor, etc. Then he drew arcs from these musicians to others they had played with. Soon he reached the limit of the paper. Since this was prior to the emergence of the PC, there was no option to scroll or easily copy to a larger drawing area. But Ken held onto this labor of trivia for nearly 40 years. (See Figure 1.) Meanwhile, Pete Frame developed far more detailed and visually appealing rock family trees [Frame 1983], some of which appeared in various publications such as Rolling Stone magazine and the Encyclopedia of Rock.

Nearly everyone is familiar with the Six Degrees of Kevin Bacon trivia game [Bacon]. The Bacon number comes from a game in which actors are rated by their distance from the actor Kevin Bacon. Actors who appeared in a movie with Kevin Bacon are given a Bacon number of 1, and actors who have not appeared with Bacon himself but have appeared with an actor whose Bacon number is 1 are given a Bacon number of 2. Each additional degree of separation increases the Bacon number by 1. The object is to connect any movie star to Mr. Bacon in no more than six hops, where a hop means that two people appeared in the same movie or commercial. This is a popular special case of the more general Six Degrees of Separation [Six Degrees], also known as the Human Web. The idea is that any two people on the planet can be connected by no more than six hops. According to Wikipedia, "Mathematicians use an analogous notion of collaboration distance".

Figure 1: John Mayall Connections

Dozens of musicians are within a few degrees of separation from John Mayall (circa 1972).

Problem Statement

Using semantic technology, we can certainly improve upon hand copying of data from record album jackets. If we can refer to a recording artist with all the associations to other musicians and to the albums they appeared on together, we can produce more complete graphs than what is shown in Figure 1. As avid fans of blues and rock music, we wondered if we could construct SPARQL queries to examine properties and relationships between performers in order to answer global questions such as "Who has had the greatest impact on rock music?" Our primary focus was Eric Clapton, a musical artist with a decades-spanning career who has both enjoyed a very successful solo career and performed in several world-renowned bands. Then, using Drupal and SVG to visualize the results, we could traverse the musician graphs in a straightforward manner.

This paper explores the use of DBpedia and MusicBrainz data sources using OpenLink Virtuoso Universal Server with a Drupal frontend. Much attention is given to the challenges we encountered, especially with respect to community-entered open data sources and the strategies we employed to overcome the challenges. One such challenge we encountered was that there were several properties by which an artist could be connected to another and the semantics were not well-defined, as discussed later.

We should be able to draw inferences from the data. According to Dean Allemang and Jim Hendler:

In the context of the Semantic Web, inferencing simply means that given some stated information, we can determine other related information that we can also consider as if it had been stated.

For example, ultimately we plan to use RDF and SPARQL to address questions such as these:

- Which recording artist has directly played with the most musicians?
- Which recording artist has the most connections within six degrees?
- Which musician has been a session man for the greatest number of artists?
- Which recording artist was most active during a particular decade?
- Among all artists of a particular genre, who has played with the most other musicians?
- Which rock artist's extended graph has the most other artists in 2 degrees? 3 degrees? 4 degrees?
- Who has appeared on the most albums?
- If we weight results by the length of time a band stays together, how does that impact other queries?
- Can we distinguish between legitimate releases and unofficial releases?
- What additional inferences can be made when multiple graphs are queried?
- Can results be corroborated by comparing them to ground truths (e.g., those documented in Joel Whitburn's Billboard books)?
- Which musician-related properties are reversible (inverse makes sense)?
- How can we differentiate between a musician's playing in a band, being associated with other musicians, starring together in a live show, and others collaborating with the musician?
- Does the total number of songs or albums released correlate with other measures of success?
- Who created the most songs?
- Which song has been recorded the most times by any artists? ("Yesterday" and "White Christmas" are typically cited.)
- Is there a predominant record label in the music world?
- Which solo artist has had the longest career?
- Which band has been together (in some form) the longest time?
- What is the average age of a musician when he/she first joined a band?
- For bands with changing membership, can we conclude which configuration lasted the longest?
- What is the "Eric Clapton number" (a la Kevin Bacon number) for various musicians?
- Can we use our own knowledge of Eric Clapton to clarify some of the semantics behind some of the RDF data we encounter?
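Several of the questions above (direct collaborators, connections within N degrees, the "Eric Clapton number") reduce to hop counts in an undirected "played with" graph. The following is a minimal sketch of that idea using breadth-first search. It is not the method used in this paper, which relies on SPARQL; the function names and the tiny hand-made link list are assumptions for illustration only and are not taken from DBpedia or MusicBrainz.

```python
from collections import defaultdict, deque

# Hypothetical, hand-made "played with" links for illustration only.
LINKS = [
    ("Eric Clapton", "John Mayall"),
    ("John Mayall", "Peter Green"),
    ("John Mayall", "Mick Taylor"),
    ("Eric Clapton", "Steve Winwood"),
    ("Eric Clapton", "Ginger Baker"),
    ("Eric Clapton", "Ric Grech"),
    ("Steve Winwood", "Ginger Baker"),
]


def build_graph(links):
    """Turn a list of pairs into a symmetric adjacency map."""
    graph = defaultdict(set)
    for a, b in links:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def separation_numbers(graph, center, max_hops=6):
    """Breadth-first search: map each artist within max_hops to its hop count."""
    distances = {center: 0}
    queue = deque([center])
    while queue:
        artist = queue.popleft()
        if distances[artist] == max_hops:
            continue  # do not expand beyond the hop limit
        for other in graph.get(artist, ()):
            if other not in distances:
                distances[other] = distances[artist] + 1
                queue.append(other)
    return distances


def direct_collaborators(graph, artist):
    """Number of musicians the artist has directly played with."""
    return len(graph.get(artist, ()))


PLAYED_WITH = build_graph(LINKS)
clapton_numbers = separation_numbers(PLAYED_WITH, "Eric Clapton")
```

With this sample, `clapton_numbers["Peter Green"]` is 2 because the only path runs through John Mayall. Counting the entries of `separation_numbers(graph, artist, max_hops=n)` gives the size of an artist's extended graph within n degrees.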

Our notion of a musical artist's activity and impact can be explained in general terms. We consider activity to be correlated with the number of recordings produced. Another factor that we chose not to consider is an artist's concert performances unless they resulted in a commercially available recording. An artist's impact is more subjective. The greater the number of musicians that play with a performer (the greater the number of associations), the greater the potential impact of the performer, provided that the performer is not simply a session man. Another measure that would have proved extremely helpful in determining both activity and impact in a more quantitative manner would be the use of Billboard chart data. Unfortunately, use of Billboard data is not royalty free.[2]

Furthermore, our working definitions of activity and impact are primarily based on commercial recordings of an artist. We acknowledge that some artists tend to perform numerous live concerts and yet have produced relatively few commercial recordings; many of these concerts may be available on bootleg recordings. The argument could easily be made that the number of concerts and/or number of bootleg recordings are correlated with activity and/or impact. We have chosen not to consider these factors at this time.

Data Sources

Fortunately, in 2011 there is a tremendous wealth of information about musicians freely available on the Web in structured markup, especially triples, that lends itself to SPARQL queries. For example, Wikipedia tells us that Eric Clapton [Clapton] is associated with: Dire Straits, The Yardbirds, John Mayall & the Bluesbreakers, Powerhouse, Cream, Free Creek, The Dirty Mac, Blind Faith, J.J. Cale, The Plastic Ono Band, Delaney, Bonnie & Friends, Derek and the Dominos, and T.D.F. By comparison, Ringo Starr [Starr] is associated with The Beatles, Rory Storm and the Hurricanes, Ringo Starr & His All-Starr Band, and Plastic Ono Band. On the surface, Ringo's direct associations are fewer, but Ringo Starr & His All-Starr Band has had 11 different lineups (to date) with a total of 42 unique musicians, most of whom have a number of associations as well [Ringo Starr & His All-Starr Band].

The various data sources we leveraged are discussed in the following subsections.

Wikipedia and Infoboxes

The primary (although indirect) source for our RDF data was Wikipedia, which is a major source of detailed information about musicians, among many other things. To understand both the kinds of properties available and their open community origins, some details about Wikipedia are in order.

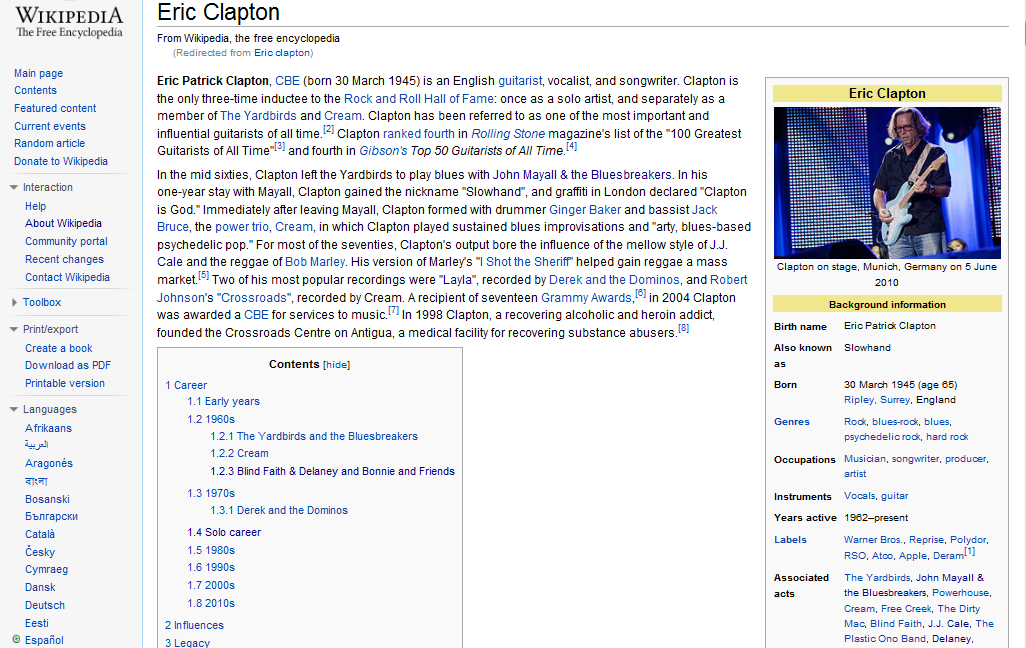

As illustrated by the excerpt from Clapton's Wikipedia page below, the main page for a musical artist contains an abstract at the top, a contents navigation box below the abstract with a variable number of section links pointing to the main content of the page, and a so-called infobox in the upper right. In addition to a main page, most musical artists with more than a handful of albums or singles have a separate discography page with varying amounts of detail and organization regarding studio albums, live albums, compilations, singles, etc. The discography is linked to the musician's main page and vice versa.

Figure 2: Eric Clapton's Wikipedia Page (excerpt with Infobox on right)

Wikipedia. "Eric Clapton -- Wikipedia, The Free Encyclopedia". 2011.

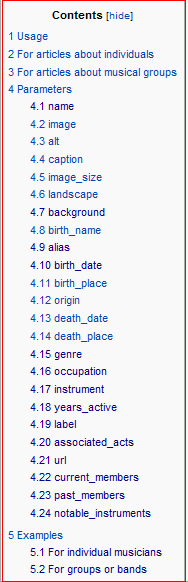

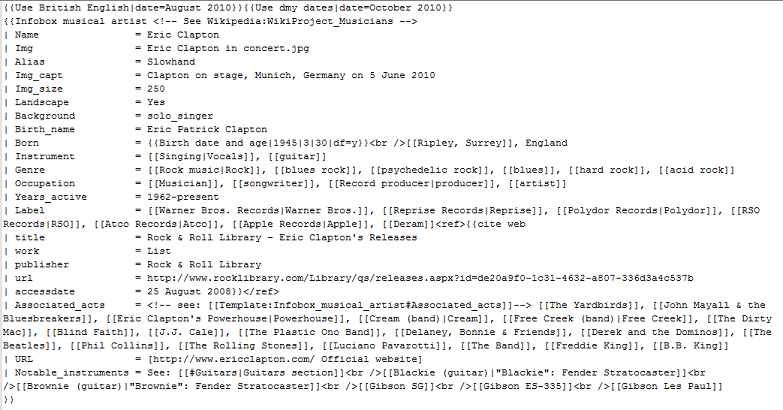

An infobox is a fixed-format table designed to be added to the top right-hand corner of Wikipedia articles to consistently and concisely present a summary of some common aspects that the articles of the same category (e.g., musical artist) share, as well as to improve navigation to other interrelated articles (e.g., music genres). Infoboxes are an instance of MediaWiki's template feature; there are numerous infobox templates arranged by broad categories such as arts and culture infobox templates, which is further divided into 10 subcategories including templates for film, fictional characters, and music. There are over 50 templates in the subcategory music infobox templates, of which the most relevant to our work is the template for the infobox of musical artists. (See the right side of Table 1 below.) This infobox template is used by Wikipedia authors to create infoboxes such as Clapton's, shown on the left side of the table. The correspondence between the template and the resultant infobox is apparent when viewed side by side. Most of the properties that we used in our queries (e.g., name, genres, associated acts, etc.) are based on the contents of the infobox, with the notable exception of albums (from the discography page).

Table 1

Clapton's Infobox (left) and Generic Musical Artist Infobox Template (right) [cited 03 Apr 2011]


The wiki source markup of the infobox is shown in Figure 3. For a much more complete explanation of the Wikipedia extraction process employed by DBpedia, including a discussion of the design and development of infobox templates, see Auer and Lehmann 2007 and Auer et al 2007.

Figure 3: Wiki Source Markup for Clapton Infobox. Compare markup to rendering shown in Table 1.

DBpedia

One of the two primary datasets we used was DBpedia 3.6 en (English), based on Wikipedia dumps from October/November 2010. DBpedia [DBpedia Dataset] is a community effort to provide sophisticated query access to the structured content of Wikipedia, thereby allowing a small group of researchers and developers to enhance Wikipedia by linking to additional datasets. The DBpedia 3.6 release announcement [DBpedia Release] describes the content in detail:

"The new DBpedia dataset describes more than 3.5 million things, of which 1.67 million are classified in a consistent ontology, including 364,000 persons, 462,000 places, 99,000 music albums, 54,000 films, 16,500 video games, 148,000 organizations, 148,000 species and 5,200 diseases. The DBpedia dataset features labels and abstracts for 3.5 million things in up to 97 different languages; 1,850,000 links to images and 5,900,000 links to external web pages; 6,500,000 external links into other RDF datasets, and 632,000 Wikipedia categories. The dataset consists of 672 million pieces of information (RDF triples) out of which 286 million were extracted from the English edition of Wikipedia and 386 million were extracted from other language editions and links to external datasets."

The DBpedia Ontology [DBpedia Ontology] is a cross-domain ontology created from the most commonly used infoboxes within Wikipedia. The ontology currently covers over 272 classes with 1,300 different properties and 1.667 million resources, 364,000 of which are Persons. DBpedia predicate IRIs [3] begin with either http://dbpedia.org/ontology or http://dbpedia.org/property; entities are designated by http://dbpedia.org/resource.
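Because the same local name can occur under more than one of these prefixes, it can help to split an IRI into its namespace and local name before comparing predicates. The sketch below assumes only the three prefixes named above; the function name and the labels "ontology", "property", "resource" and "other" are our own illustrative choices.

```python
# The three DBpedia namespace prefixes named in the text.
NAMESPACES = {
    "http://dbpedia.org/ontology/": "ontology",
    "http://dbpedia.org/property/": "property",
    "http://dbpedia.org/resource/": "resource",
}


def split_dbpedia_iri(iri):
    """Return (namespace label, local name); anything else is labeled 'other'."""
    for prefix, label in NAMESPACES.items():
        if iri.startswith(prefix):
            return label, iri[len(prefix):]
    return "other", iri


kind, name = split_dbpedia_iri("http://dbpedia.org/ontology/associatedBand")
```

Grouping predicates by local name in this way makes it easy to spot pairs that differ only in namespace.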

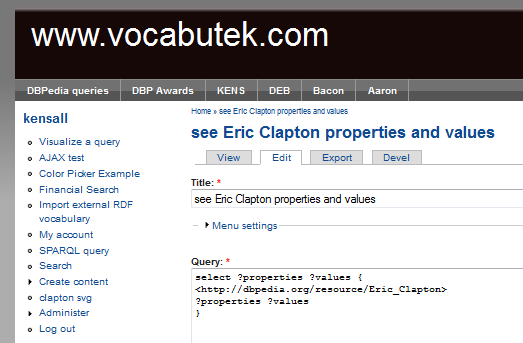

We initially used a simple SPARQL query [SPARQL 1.0] to determine which properties are relevant to a musician, as shown in the following two listings. Some musicians had fewer than the 23 properties shown; others had more. Note that the resource IRI designating the musician is a straightforward rendering of the artist's name in English with underscores replacing spaces: http://dbpedia.org/resource/Eric_Clapton.

Figure 4: Display Properties Defined for Eric Clapton (as object)

SELECT DISTINCT ?predicate WHERE {

?s ?predicate <http://dbpedia.org/resource/Eric_Clapton>.

} ORDER BY ?predicate

Clapton's DBpedia Properties - Version 1 (23 Predicates)

http://dbpedia.org/ontology/artist

http://dbpedia.org/ontology/associatedBand

http://dbpedia.org/ontology/associatedMusicalArtist

http://dbpedia.org/ontology/composer

http://dbpedia.org/ontology/musicComposer

http://dbpedia.org/ontology/musicalArtist

http://dbpedia.org/ontology/musicalBand

http://dbpedia.org/ontology/partner

http://dbpedia.org/ontology/producer

http://dbpedia.org/ontology/spouse

http://dbpedia.org/ontology/starring

http://dbpedia.org/ontology/wikiPageDisambiguates

http://dbpedia.org/ontology/writer

http://dbpedia.org/property/associatedActs

http://dbpedia.org/property/before

http://dbpedia.org/property/currentMembers

http://dbpedia.org/property/music

http://dbpedia.org/property/pastMembers

http://dbpedia.org/property/producer

http://dbpedia.org/property/spouse

http://dbpedia.org/property/starring

http://dbpedia.org/property/writer

http://www.w3.org/2002/07/owl#sameAs
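The predicate query above can be parameterized by artist name. The sketch below builds the resource IRI (underscores for spaces) and then a SPARQL Protocol GET URL; it only constructs strings and does not contact any server. The endpoint address and the `format` parameter are assumptions (the latter is a convention of Virtuoso endpoints rather than part of the SPARQL Protocol), and the percent-encoding of parentheses is inferred from IRIs such as Madonna_%28entertainer%29 elsewhere in this paper.

```python
from urllib.parse import quote, urlencode

RESOURCE_BASE = "http://dbpedia.org/resource/"
ENDPOINT = "http://dbpedia.org/sparql"  # assumption: any SPARQL endpoint URL


def resource_iri(artist_name):
    """Render an English artist name as a DBpedia resource IRI."""
    return RESOURCE_BASE + quote(artist_name.replace(" ", "_"), safe="")


def predicates_query(iri):
    """SPARQL text listing the predicates that point at the given resource."""
    return (
        "SELECT DISTINCT ?predicate WHERE {\n"
        f"  ?s ?predicate <{iri}> .\n"
        "} ORDER BY ?predicate"
    )


def sparql_get_url(endpoint, query, result_format="application/sparql-results+xml"):
    """Build a GET URL; 'query' is the standard SPARQL Protocol parameter."""
    return endpoint + "?" + urlencode({"query": query, "format": result_format})


url = sparql_get_url(ENDPOINT, predicates_query(resource_iri("Eric Clapton")))
```

The same helpers can be reused for any other artist by changing the name passed to `resource_iri`.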

Wikipedia-like categories can also be specified in queries. The following query returns the short list of musical artists who have three distinctions: Grammy Award winners, Rock and Roll Hall of Fame inductees, and MTV Video Music Awards winners.

SELECT ?allAwards

{

?allAwards <http://purl.org/dc/terms/subject>

<http://dbpedia.org/resource/Category:Grammy_Award_winners>.

?allAwards <http://purl.org/dc/terms/subject>

<http://dbpedia.org/resource/Category:Rock_and_Roll_Hall_of_Fame_inductees>.

?allAwards <http://purl.org/dc/terms/subject>

<http://dbpedia.org/resource/Category:MTV_Video_Music_Awards_winners>

} ORDER BY ?allAwards

The thrice-honored, distinguished musical artists are: [4]

http://dbpedia.org/resource/Aerosmith

http://dbpedia.org/resource/Bruce_Springsteen

http://dbpedia.org/resource/Elton_John

http://dbpedia.org/resource/Eric_Clapton

http://dbpedia.org/resource/Johnny_Cash

http://dbpedia.org/resource/Madonna_%28entertainer%29

http://dbpedia.org/resource/Metallica

http://dbpedia.org/resource/Michael_Jackson

http://dbpedia.org/resource/R.E.M.

http://dbpedia.org/resource/The_Beatles

http://dbpedia.org/resource/The_Rolling_Stones

http://dbpedia.org/resource/U2

http://dbpedia.org/resource/Van_Halen
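The three-category query above follows a regular pattern: one triple pattern per category, all sharing a single variable. As a hedged illustration, the sketch below generates such a query for any list of category names. The function and variable names are hypothetical, and the category names are assumed to map to IRIs by replacing spaces with underscores.

```python
DCT_SUBJECT = "http://purl.org/dc/terms/subject"
CATEGORY_BASE = "http://dbpedia.org/resource/Category:"


def category_intersection_query(categories, variable="artist"):
    """Build a SPARQL query for resources that belong to every listed category."""
    if not categories:
        raise ValueError("at least one category is required")
    patterns = [
        f"  ?{variable} <{DCT_SUBJECT}> <{CATEGORY_BASE}{name.replace(' ', '_')}> ."
        for name in categories
    ]
    return (
        f"SELECT ?{variable} WHERE {{\n"
        + "\n".join(patterns)
        + f"\n}} ORDER BY ?{variable}"
    )


query = category_intersection_query([
    "Grammy Award winners",
    "Rock and Roll Hall of Fame inductees",
    "MTV Video Music Awards winners",
])
```

Adding or removing a category name narrows or widens the result set without hand-editing the query text.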

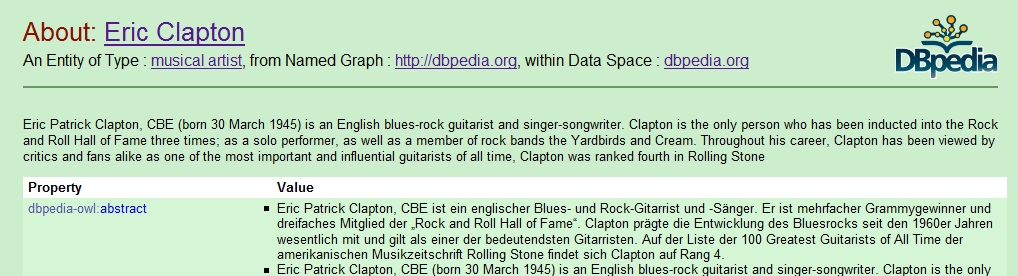

The full list of predicates relating to MusicalArtist is quite large. The figure below is a partial view of the DBpedia "About: Eric Clapton" page. The IRI http://dbpedia.org/resource/Eric_Clapton forwards to this page.

Figure 6: Dereferencing the Eric Clapton DBpedia IRI (excerpt)

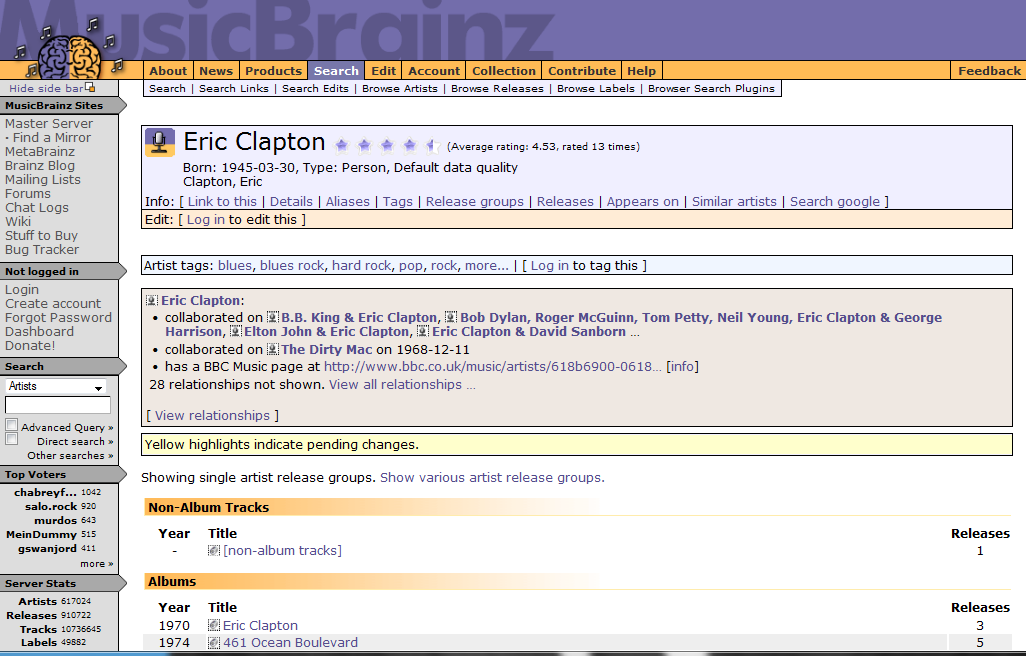

MusicBrainz

MusicBrainz [MusicBrainz] is another comprehensive public database for musician information; it contains detailed data about artists, release groups, releases, tracks, record labels and many relationships between them. As described in the database overview, MusicBrainz defines artist attributes including the artist's name, aliases, GUID, annotation, type (individual or group), and begin and end dates (birth/death of an individual or formation/disbanding for a group). A release group is a logical grouping of variant releases (deluxe/limited editions, reissues/remasters, international variations, box sets, etc.). One example is the release group describing several variants of Clapton's 461 Ocean Boulevard album. Each release group is identified by type (album, single, EP, compilation, soundtrack, live, etc.), as well as by artist, title, and ID. An individual release has the same properties as a release group as well as status (official, bootleg, etc.), Amazon ASIN, annotation, language, release event (date, country, format, label, etc.) and more. Each track has properties such as duration (in milliseconds) and PUID (the MusicIP acoustic fingerprint identifier for the track) as well as artist, title, and ID. Record labels are also described with a number of detailed attributes.

Every MusicBrainz ID (MBID) is a permanent GUID. There is a direct relationship between an artist's MBID, an IRI that identifies the artist, and a web page that collects information about the artist. For example:

- Eric Clapton's MBID is 618b6900-0618-4f1e-b835-bccb17f84294
- Eric Clapton's IRI is http://musicbrainz.org/mm-2.1/artist/618b6900-0618-4f1e-b835-bccb17f84294 (points to basic RDF metadata)
- His MusicBrainz page is http://musicbrainz.org/artist/618b6900-0618-4f1e-b835-bccb17f84294.html
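The relationship between an MBID, the artist IRI and the artist page can be expressed as simple string templates. The following sketch assumes the two URL patterns shown in the list above apply to any artist MBID; the function name is hypothetical, and the `uuid` module is used only to reject malformed identifiers.

```python
import uuid


def musicbrainz_artist_links(mbid):
    """Derive the RDF IRI and web page URL for an artist from its MBID."""
    canonical = str(uuid.UUID(mbid))  # raises ValueError if not a valid GUID
    return {
        "mbid": canonical,
        "rdf_iri": f"http://musicbrainz.org/mm-2.1/artist/{canonical}",
        "web_page": f"http://musicbrainz.org/artist/{canonical}.html",
    }


clapton = musicbrainz_artist_links("618b6900-0618-4f1e-b835-bccb17f84294")
```

Here `clapton["rdf_iri"]` and `clapton["web_page"]` reproduce the two addresses listed above for Eric Clapton.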

Below is a screenshot of the Eric Clapton MusicBrainz page. MusicBrainz offers a tremendous amount of detail about releases, as exemplified by the page for Clapton's classic blues album From the Cradle.

Figure 7: Eric Clapton's MusicBrainz Main Page

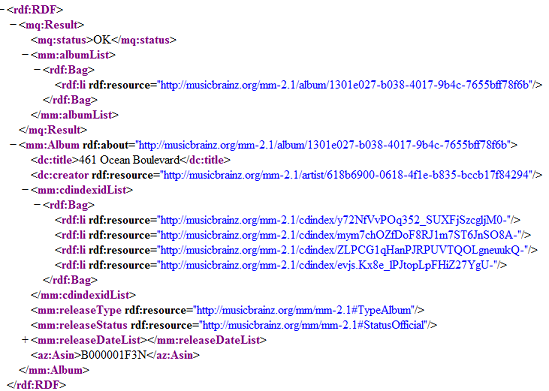

MusicBrainz also provides a web service interface to its extensive database. Requests may be English-like, such as http://musicbrainz.org/ws/1/artist/?type=xml&name=Eric+Clapton, or may contain MBIDs, such as http://musicbrainz.org/mm-2.1/album/1301e027-b038-4017-9b4c-7655bff78f6b for the "461 Ocean Boulevard" album, shown below. (Release dates of 1974 and three from 1996 are collapsed in the screenshot.)

Figure 8: Web Service Result - Query for 461 Ocean Boulevard

MusicBrainz maintains numerous database statistics, a small sampling of which appear below. It is interesting to note that in the three months since the draft version of this paper, although the number of Recordings increased by approximately 37,000, the Artist count dropped by roughly 8,000. This seems to suggest the dynamic nature of the data both in terms of quantity and in how recordings are categorized. (For example, artists may have appeared under variant name spellings.) We could not find a definition for the new term Works in the terminology page or other MusicBrainz documentation.

Table II

Selected MusicBrainz Statistics [accessed 10 April 2011; updated 26 June 2011]

| Statistic | Count |
|---|---|
| Artists | 612,428 |
| Release Groups | 787,918 |
| Releases | 952,743 |
| Disc IDs | 460,876 |
| Recordings [tracks] | 10,307,311 |
| Labels | 52,156 |
| Works | 276,864 |
| Relationships [links] | 3,160,096 |

The MusicBrainz data quality page states that one of the goals is "Establish a method to determine the quality of an artist and the releases that belong to that artist. This provides consumers of MusicBrainz a clue about the relative quality rating of the data in the database." The page explains the connection between the quality number, voting periods, and strictness regarding edits.

To accomplish these goals, this feature will allow editors to indicate the quality for a given artist. An artist can be of unknown, low, medium or high data quality. The data quality indicator determines what level of effort is required to change the artist information or to add/remove albums from an artist. An artist with unknown or medium quality will roughly require the amount of effort that MusicBrainz currently requires to edit the database. An artist with low data quality will make it easier to add/remove albums or to change the artist information (name, sortname, aliases). And an artist with high data quality will require more effort to add/remove albums or the change the artist information. The data quality concept also applies to releases in the same manner. Changing a release with low data quality will be easier than changing a release with high data quality.

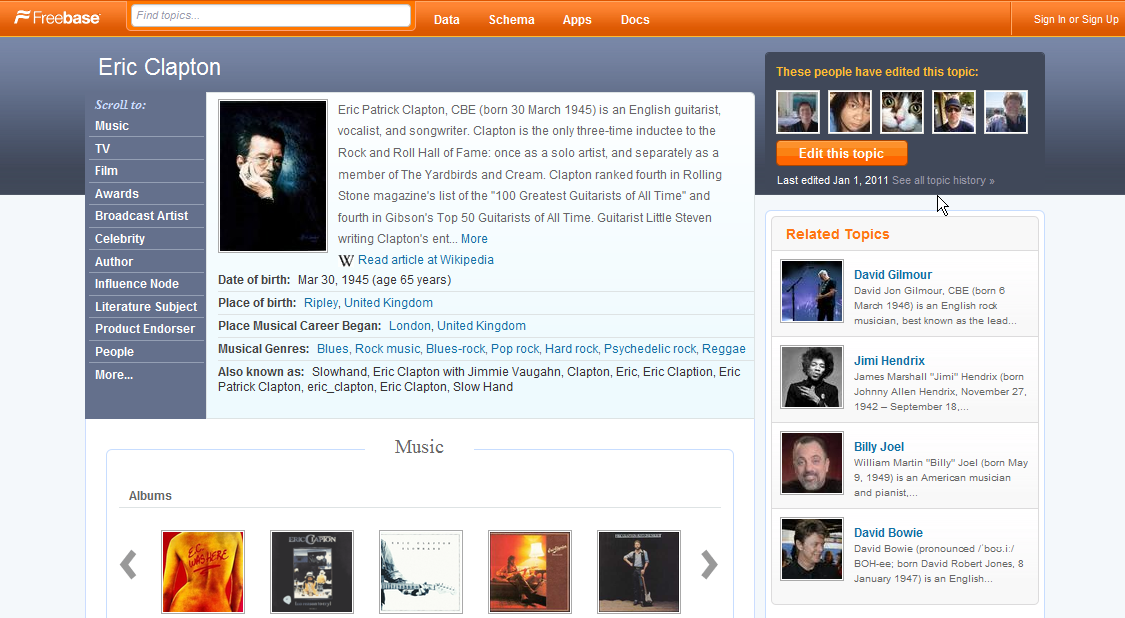

Freebase

Initially we considered using music data from Freebase [Freebase], an open, community-based, Creative Commons licensed repository of structured data describing millions of entities (i.e., person, place, thing) which are connected as a graph. Freebase IDs can be used to uniquely identify entities from any web-reachable source. At the time of this writing, the 1.22 GB (uncompressed) music segment of Freebase contained 9 million topics and 33 million facts. The music category contains classical, opera, and many other genres in addition to blues and rock music. Data is formatted as approximately 50 separate Tab Separated Values files with filenames such as group_member.tsv, group_membership.tsv, musical_group.tsv, guitarist.tsv, album.tsv, artist.tsv, release.tsv, and track.tsv (the largest file at roughly 671 MB). A total of over 151,000 groups are listed in musical_group.tsv. [5]

Freebase data can be used in several ways. MQL (Metaweb Query Language) [MQL] is a query API analogous to SPARQL for RDF. MQL uses JSON objects as queries via standard HTTP requests and responses. For example, the IRI below will return all the genres associated with Eric Clapton (line breaks added for readability).

http://api.freebase.com/api/service/mqlread?query={%20%22query%22:

{%20%22active_start%22:null,%20%22genre%22:[],%20%22name%22:%22Eric%20Clapton%22,

%20%22type%22:%22/music/artist%22%20}%20}

This request produces the following result, listing all the genres associated with Eric Clapton:

Figure 9: Freebase Genre Results for Eric Clapton

{

"code": "/api/status/ok",

"result": {

"active_start": null,

"genre": [

"Blues",

"Rock music",

"Blues-rock",

"Pop rock",

"Hard rock",

"Psychedelic rock",

"Reggae"

],

"name": "Eric Clapton",

"type": "/music/artist"

},

"status": "200 OK",

"transaction_id": "cache;cache03.p01.sjc1:8101;2011-01-26T22:45:13Z;0054"

}
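The request IRI and the response handling shown above can be sketched in a few lines. This example only builds the URL and parses a response of the shape shown in Figure 9; it does not contact the service. The function names are hypothetical, and the abbreviated sample response is a shortened copy of the figure.

```python
import json
from urllib.parse import quote

MQLREAD = "http://api.freebase.com/api/service/mqlread"  # address as given in the text


def mql_read_url(query):
    """Wrap an MQL query in the {"query": ...} envelope and URL-encode it."""
    envelope = json.dumps({"query": query})
    return MQLREAD + "?query=" + quote(envelope)


def genres_from_response(text):
    """Extract the genre list from an mqlread JSON response."""
    payload = json.loads(text)
    if payload.get("code") != "/api/status/ok":
        raise ValueError("MQL request failed: " + str(payload.get("code")))
    return payload["result"]["genre"]


# None becomes JSON null and [] stays an empty list, matching the query in the IRI above.
clapton_query = {
    "active_start": None,
    "genre": [],
    "name": "Eric Clapton",
    "type": "/music/artist",
}
url = mql_read_url(clapton_query)

# Abbreviated copy of the response in Figure 9.
SAMPLE_RESPONSE = (
    '{"code": "/api/status/ok", "result": {"active_start": null, '
    '"genre": ["Blues", "Rock music", "Blues-rock"], '
    '"name": "Eric Clapton", "type": "/music/artist"}, "status": "200 OK"}'
)
genres = genres_from_response(SAMPLE_RESPONSE)
```

Building the query as a dictionary and serializing it avoids hand-escaping the quotation marks and braces that appear as %22 and %20 in the IRI.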

For any given group, band members are non-sequential within the group_membership.tsv file. The relevant lines for the members of Blind Faith are collected in the table below.

Table III

Members of Blind Faith - from Freebase

| id | member | group | (role) | start | end |
|---|---|---|---|---|---|
| /m/01tfhrr | Ginger Baker | Blind Faith | | 1968 | 1969-10 |
| /m/01tfhry | Steve Winwood | Blind Faith | | 1968 | 1969-10 |
| /m/01wvwr8 | Ric Grech | Blind Faith | | 1968 | 1969-10 |
| /m/01tfhrk | Eric Clapton | Blind Faith | | 1968 | 1969-10 |

The group_membership.tsv file contains dozens of entries for Clapton, one line for each band or other association in which he participated. Each entry (line) is identified by a different ID. For example:

/m/01t73cp Eric Clapton Derek and the Dominos

Eric Clapton's main Freebase page is shown below. The IRI format for Clapton as a topic is http://www.freebase.com/view/en/eric_clapton.

Figure 10: Eric Clapton's Freebase Page

Ultimately we elected not to use Freebase as a data source because we, like others, were unable to locate the complete Freebase dataset rendered as RDF and determined that the conversion process would be non-trivial. In fact, we found mailing list and forum messages from others describing the same problem. Initially we had downloaded the dataset in its native format and considered converting it into RDF. This would have been possible, although many of the lines contained a fourth element consisting of a number of values concatenated together; it was unclear how that could be cleanly converted into a named graph. After the rough draft of our paper was submitted, we discovered that RDF data is available manually by following a link labeled "RDF" near the bottom of each Freebase page. It appears that this RDF is rendered at execution time, since there is a slight delay in the display of the RDF. For example, follow the RDF link on the Eric Clapton page. Had we discovered this sooner, we might have attempted to obtain the Freebase RDF for Rock and Roll stars using some automated process.

Methodology

In this section, we discuss our frontend and backend development platform, a few term definitions, and details concerning the Graphviz visualization which is still under development as of this July writing.

Development Environment

The front end to our SPARQL queries was a guest virtual machine running under Sun VirtualBox 3.1.6_OSE on a Dell Inspiron with an Intel Core 2 Quad CPU Q9400 running at 2.66GHz. The guest operating system was the 10.10 server release of Ubuntu Linux. The database machine used 2 Intel Xeon X5650 CPUs overclocked to 3.12GHz with 48 gigabytes of RAM. From the perspective of the 10.10 Ubuntu Desktop operating system, the 2 hexcore processors are regarded as 24 processors (149867.81 BogoMIPS).

Our RDF store was the open source (freely available) version of OpenLink Virtuoso Universal Server [Virtuoso Universal Server] (Version 06.01.3127) running in "Full Mode". To maximize use of available RAM, we made one change to the virtuoso.ini file: "NumberOfBuffers = 7000000". On the frontend, our queries were facilitated by Drupal 6.18 with the modules listed in the Drupal Modules appendix. It was necessary to configure PHP to allow Drupal more memory, so "memory_limit = 512M" was added to /etc/php5/apache2/php.ini.

Drupal 7.0 became available during 2011, but we elected not to migrate to that version. Although Drupal 7.0 does have substantially better support for RDF data than earlier versions, that is likely to affect only people publishing information that was authored or stored inside Drupal. Our use of Drupal was for collaborative development and storage of queries, as well as for the visualization capabilities.

Working Definitions of Key Properties

The definition list below presents our current thinking about several key concepts that are covered in detail in later sections; some refer to OpenCyc for the Semantic Web.

| Term | Working definition |
|---|---|
| | a person who is either a musician who plays one or more instruments, or is a singer, or is a music composer; similar (but not identical) to |
| | both the subject and object of this predicate are musicians; similar to |
| | this is mapped either to associatedBand (for subjects who are individual artists) or associatedMusicalArtist (for bands) |
| | OpenCyc: An element of Band_MusicGroup is a (small or large) group of musicians who play non-Classical music together on either a regular or intermittent schedule. |
| | a categorization of music into types that can be distinguished from other types of music; can be applied to a musical artist, a band, an album, or individual songs. |

RDF Visualization Coding

To better understand our result sets, we implemented a form of visualization as a custom Drupal module written in PHP. The front end was a simple HTML form with JQuery and AJAX for displaying the information. Main processing was handled by the PHP module, set up to intercept a post to a particular URL. The PHP module first accesses the POST data and extracts the input search term, which becomes the subject of the following SPARQL query:

SELECT DISTINCT ?predicate ?object

WHERE {<" . $subject . "> ?predicate ?object }

ORDER BY ?predicate

where in our initial implementation $subject is replaced by the musician subject requested by the user. XML output is specified by the request as the desired return format.

The next step was to convert the XML results output from the query to the DOT language, the Graphviz input format. Graphviz [Graphviz] is open source graph visualization software that converts descriptions of graphs in a simple text language either to diagrams in various formats (e.g., images, SVG, PDF, Postscript) or for display in an interactive graph browser.

Using the SimpleXMLElement class (from the PHP library), the code loops through the <binding> elements in the results of the SPARQL query, accessing the “name” attribute of each one. If the name attribute is ‘predicate’ (from the ?predicate variable in our SPARQL query), the predicate is obtained from the <uri> subelement. If the name attribute is ‘object’ (from the ?object variable in the query), the value is obtained from the <uri> subelement or the <literal> subelement, depending on which is present in the XML output of the query. If the <uri> subelement is found, we use the uri target for display, but use the complete uri for the link, for use in future queries that the user can select.
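The binding loop described above was written in PHP with SimpleXMLElement. As an illustration only, the sketch below performs the same kind of walk in Python over the SPARQL Query Results XML Format. Two assumptions are ours: "uri target" is read as the text after the last "/" or "#" of the IRI, and the sample XML is a made-up two-row result rather than actual output from our store.

```python
import re
import xml.etree.ElementTree as ET

# Namespace of the W3C SPARQL Query Results XML Format.
SPARQL_NS = {"sr": "http://www.w3.org/2005/sparql-results#"}


def local_name(iri):
    """Text after the last '/' or '#' (our reading of "uri target")."""
    return re.split(r"[/#]", iri)[-1]


def read_bindings(xml_text):
    """Return one dict per <result>, keyed by the binding's name attribute."""
    rows = []
    root = ET.fromstring(xml_text)
    for result in root.findall("sr:results/sr:result", SPARQL_NS):
        row = {}
        for binding in result.findall("sr:binding", SPARQL_NS):
            name = binding.get("name")
            uri = binding.find("sr:uri", SPARQL_NS)
            literal = binding.find("sr:literal", SPARQL_NS)
            if uri is not None:
                # Short form for display, complete IRI kept for follow-up queries.
                row[name] = {"display": local_name(uri.text), "link": uri.text}
            elif literal is not None:
                row[name] = {"display": literal.text or "", "link": None}
        rows.append(row)
    return rows


# Made-up sample in the standard results format.
SAMPLE = """<sparql xmlns="http://www.w3.org/2005/sparql-results#">
  <head><variable name="predicate"/><variable name="object"/></head>
  <results>
    <result>
      <binding name="predicate"><uri>http://dbpedia.org/ontology/genre</uri></binding>
      <binding name="object"><uri>http://dbpedia.org/resource/Blues</uri></binding>
    </result>
    <result>
      <binding name="predicate"><uri>http://xmlns.com/foaf/0.1/name</uri></binding>
      <binding name="object"><literal>Eric Clapton</literal></binding>
    </result>
  </results>
</sparql>"""

rows = read_bindings(SAMPLE)
```

Keeping both the display form and the complete IRI for each value mirrors the design described above, where the short name is shown and the full IRI seeds the next query.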

The Graphviz text input file is constructed incrementally by adding Graphviz format input statements for each <binding> element. Since Graphviz defines links and colors in a separate section of the file, the XML <binding> elements are searched a second time to create the Graphviz [label] entries. (To improve performance, we may do this in a single loop populating two sections of the Graphviz input at once.) A static set of predefined colors is defined in the module. As new predicates (e.g., artist, associatedMusicalArtist, associatedBand, musicComposer, producer, writer, etc.) are encountered, new colors are assigned by taking the next one from the list and associating it with the new predicate. Use of an associative array allows predicates of the same type to be assigned the same color. The Graphviz input string is completed when all the <binding> elements have been processed and a header and footer are added.
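The color assignment and incremental construction of the Graphviz input can be sketched as follows. This is a single-pass Python illustration, not the two-pass PHP module described above. The palette, the function names and the "producer" edge in the usage example are hypothetical; a dictionary plays the role of the associative array.

```python
# Hypothetical static palette; the module's actual color list is not given in the text.
PALETTE = ["red", "blue", "darkgreen", "orange", "purple"]


def dot_quote(text):
    """Quote a DOT identifier, escaping embedded double quotes."""
    return '"' + text.replace('"', '\\"') + '"'


def build_dot(subject, edges):
    """Build a DOT digraph from (predicate, target) pairs.

    Returns the DOT text and the predicate-to-color map that was assigned.
    """
    colors = {}
    statements = []
    for predicate, target in edges:
        if predicate not in colors:
            # Take the next unused color; wrap around if the palette runs out.
            colors[predicate] = PALETTE[len(colors) % len(PALETTE)]
        statements.append(
            f"{dot_quote(subject)}->{dot_quote(target)}"
            f"[ label = {dot_quote(predicate)}, color = {colors[predicate]} ]"
        )
    # Header and footer are added once all edges have been processed.
    return "\n".join(["digraph G {", "rankdir=LR", *statements, "}"]), colors


dot_text, color_map = build_dot(
    "http://dbpedia.org/resource/Eric_Clapton",
    [("artist", "Slowhand"), ("producer", "Backless"), ("artist", "Backless")],
)
```

Because the color is looked up by predicate name, both "artist" edges in the usage example share one color while the "producer" edge receives the next color in the palette.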

The SVG data is created by sending the Graphviz input string to the graphviz_filter_process method available by means of a Graphviz filter module installed on the Drupal site. The SVG description is serialized by the filter module. At this point the return information is structured using the Drupal function drupal_json to create JSON formatted data. The SVG data and the XML data are each added as named elements of the JSON data and returned to the caller.

To use this module, an HTML page with a short embedded JQuery script invokes the module and displays the results. The HTML defines an input text field, a search button and two <div> elements which initially contain no content. They are given the IDs outputGraph and outputTable so content can be associated later using JavaScript (JQuery). The user interface is a simple text field into which the user types the name of the musician subject and then presses a search button, which runs a JQuery function that uses AJAX to post the input field to the module previously described. The AJAX method waits for the data to be returned from the module in JSON format. When the JSON data arrives, the returned SVG data and XML data are assigned to the <div> elements with the correct IDs.

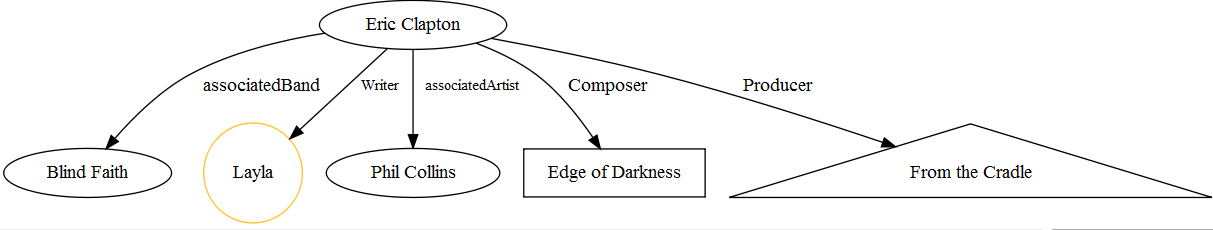

The figure below shows one of the Graphviz digraphs.

Sample Graphviz SVG Digraph (line breaks added for readability)

digraph G {

/*

* @title = CLAPTON

* @formats = svg

*/

rankdir=LR

"http://dbpedia.org/resource/Eric_Clapton"->"Unplugged_(Eric_Clapton_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Just_One_Night_(album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Behind_the_Sun_(Eric_Clapton_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Journeyman_(album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Back_Home_(Eric_Clapton_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Reptile_(album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Live_in_Japan_(George_Harrison_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"August_(album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Steppin'_Out_(Eric_Clapton_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Edge_of_Darkness_(soundtrack)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Backless"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"461_Ocean_Boulevard"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"No_Reason_to_Cry"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Slowhand"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"Pilgrim_(Eric_Clapton_album)"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"From_the_Cradle"[ label = "artist" ]

"http://dbpedia.org/resource/Eric_Clapton"->"One_More_Car,_One_More_Ride] </span> </div>

[log] => []

[revision_timestamp] => [1300136539]

[format] => [4]

[name] => [wendy]

[picture] => []

[data] => [a:1:{s:13:"form_build_id";s:37:"form-18d527e00db0361bf4cea1b231176078";}]

[rdf] => array ( )

[path] => [content/claptonsvg]

);

$node->112

$node = (

[nid] => [112]

[type] => [graph]

[language] => []

[uid] => [3]

[status] => [1]

[created] => [1300117673]

[changed] => [1300136539]

[comment] => [0]

[promote] => [1]

[moderate] => [0]

[sticky] => [0]

[tnid] => [0]

[translate] => [0]

[vid] => [112]

[revision_uid] => [3]

[title] => [claptonSvg]

[body] => [<div class="graphviz graphviz-svg">

<object type="image/svg+xml"

data="http://www.vocabutek.com/sites/default/files/graphviz/ca80a36e3d121526b2b93ec1f1e076a3.svg">

<embed type="image/svg+xml"

src="http://www.vocabutek.com/sites/default/files/graphviz/ca80a36e3d121526b2b93ec1f1e076a3.svg"

pluginspage="http://www.adobe.com/svg/viewer/install/" />

</object>

</div>

]

Interface Screenshots

A sampling of our interface on the Vocabutek web site is presented in the following figures. As of this writing, the Graphviz visualization is expected to undergo changes. Figure 12 illustrates how we entered queries in the Drupal interface for testing.

Figure 12: Query Entry

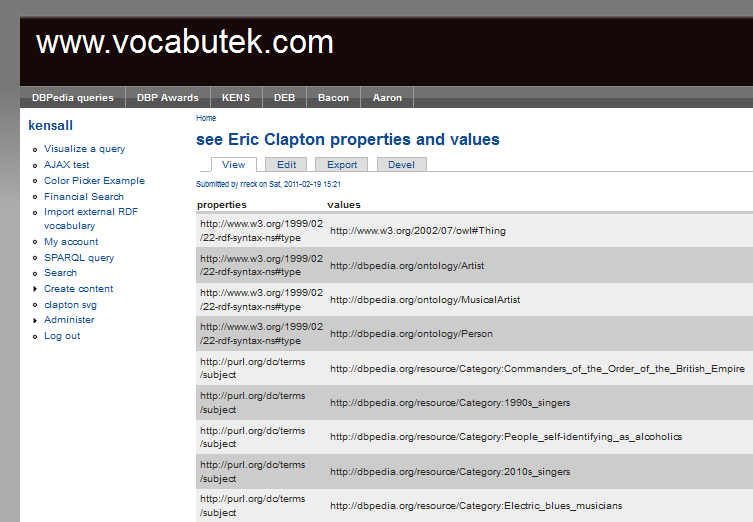

Figure 13 displays the result of executing the query shown in Figure 12.

Figure 13: Query Result

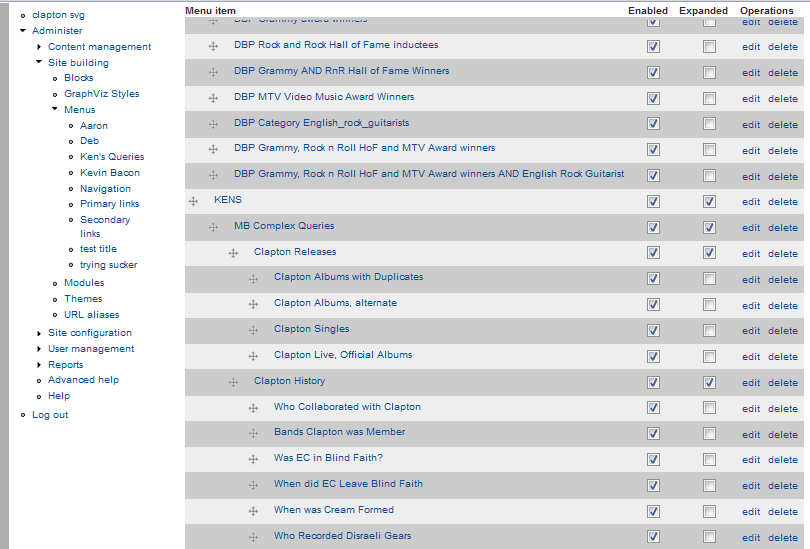

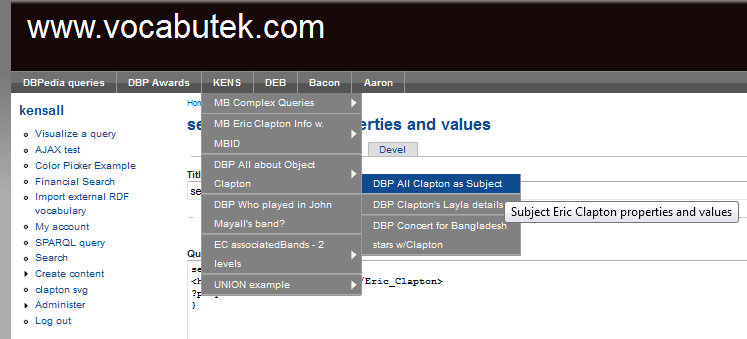

We used the Drupal administration content management to organize queries into cascading menus, as shown in Figure 14.

Figure 14: Query Administration - Menu Organization

The result of the query organization is shown in Figure 15.

Figure 15: Cascading Menus

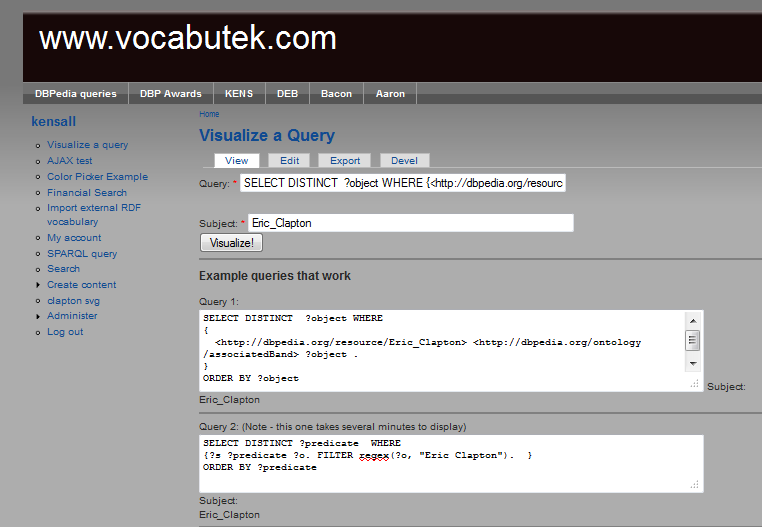

Figure 16 illustrates the interface for running a query to send to Graphviz for rendering in SVG. Pre-tested queries can be copy/pasted into a single string (topmost text field) or new queries can be entered. The subject defaults to Eric Clapton but any musician can be entered.

Figure 16: Visualize a Query - Copy/Paste or Enter

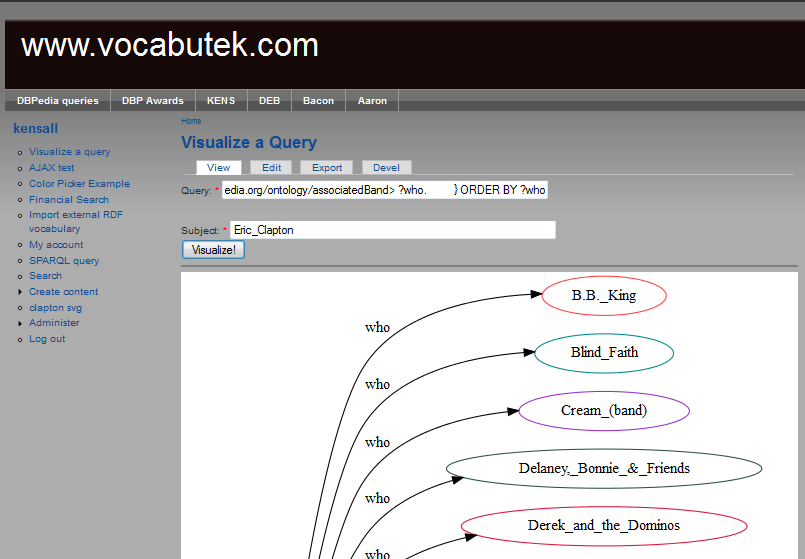

Finally, Figure 17 depicts part of the SVG resulting from a query, as rendered by Graphviz. (This is one aspect we plan to improve over time.)

Figure 17: Graphviz AssociatedBand SVG

Challenges and Results

Our results were hampered by various anomalies and complications discovered in the data sources, especially in terms of semantics. In this section, we present some of the problems we encountered and either how they were solved or how they might be approached in the future.

Properties of a Musical Artist

We next consider a different view of Clapton predicates. The query below returns properties with an object value that contains the string "Eric Clapton".

Figure 18: Find Predicates for Which Clapton is (all or part of) the Object

SELECT DISTINCT ?predicate WHERE {

?s ?predicate ?o.

FILTER regex(?o, "Eric Clapton").

} ORDER BY ?predicate

Compare the result below to that presented in figure Clapton's DBpedia Properties - Version 1.

Clapton's DBpedia Properties - Version 2: Clapton's DBpedia Properties - Version 2 (44 Predicates)

http://dbpedia.org/ontology/abstract

http://dbpedia.org/ontology/alias

http://dbpedia.org/property/after

http://dbpedia.org/property/album

http://dbpedia.org/property/alias

http://dbpedia.org/property/allWriting

http://dbpedia.org/property/altArtist

http://dbpedia.org/property/artist

http://dbpedia.org/property/associatedActs

http://dbpedia.org/property/aux

http://dbpedia.org/property/before

http://dbpedia.org/property/caption

http://dbpedia.org/property/chronology

http://dbpedia.org/property/composer

http://dbpedia.org/property/cover

http://dbpedia.org/property/description

http://dbpedia.org/property/extra

http://dbpedia.org/property/founders

http://dbpedia.org/property/fromAlbum

http://dbpedia.org/property/img

http://dbpedia.org/property/imgCapt

http://dbpedia.org/property/label

http://dbpedia.org/property/lastAlbum

http://dbpedia.org/property/lyrics

http://dbpedia.org/property/music

http://dbpedia.org/property/musicalguests

http://dbpedia.org/property/name

http://dbpedia.org/property/namedAfter

http://dbpedia.org/property/nextAlbum

http://dbpedia.org/property/note

http://dbpedia.org/property/notes

http://dbpedia.org/property/partner

http://dbpedia.org/property/pastMembers

http://dbpedia.org/property/producer

http://dbpedia.org/property/recordedBy

http://dbpedia.org/property/shortDescription

http://dbpedia.org/property/starring

http://dbpedia.org/property/text

http://dbpedia.org/property/thisAlbum

http://dbpedia.org/property/title

http://dbpedia.org/property/writer

http://purl.org/dc/elements/1.1/title

http://www.w3.org/2000/01/rdf-schema#label

http://xmlns.com/foaf/0.1/name

When we subsequently connected the DBpedia and MusicBrainz data sources as discussed in Bridging Data Sources below, we obtained a third view of Clapton's properties, shown in figure Clapton's Properties Combined.

Along Comes the Association

The paramount requirement in determining an Eric Clapton Number is the ability to unambiguously identify those musicians with whom he directly worked. Originally we thought this would be rather straightforward. Properties that seemed relevant to making that determination included:

- http://dbpedia.org/property/associatedActs

- http://dbpedia.org/ontology/associatedBand

- http://dbpedia.org/ontology/associatedMusicalArtist

- http://dbpedia.org/property/starring

- http://dbpedia.org/property/musicalguests

The musicalguests property was eliminated because it relates only to variety shows such as "The Late Show". The starring property also proved to be unreliable, since multiple musicians might all have been considered stars of a performance without having played together. One case in point is The Concert for Bangladesh; a query returned Klaus Voorman, Billy Preston, Bob Dylan, Eric Clapton, George Harrison, Leon Russell, Ravi Shankar, and Ringo Starr. While most of these performers did share the stage at one time during the concert, Ravi Shankar did not perform with the others. Furthermore, members of the band Badfinger did play with most of the others, but they were not returned by the query, although we note that Badfinger is cited in the abstract of the Wikipedia article in the list of stars of the supergroup. On the other hand, if we look at the infobox on the concert's Wikipedia page, Badfinger is not listed as one of the stars.

We considered the associatedActs property since that is the term used in the infobox. As seen in Table 1, the infobox on Clapton's Wikipedia page lists these associated acts:

"The Yardbirds, John Mayall & the Bluesbreakers, Powerhouse, Cream, Free Creek, The Dirty Mac, Blind Faith, J.J. Cale, The Plastic Ono Band, Delaney, Bonnie & Friends, Derek and the Dominos".

Our initial attempt to retrieve these artists was a little surprising. Our query was:

SELECT DISTINCT ?who

{ <http://dbpedia.org/resource/Eric_Clapton> <http://dbpedia.org/property/associatedActs> ?who.

} ORDER BY ?who

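The result, shown below, was one long string [6], the contents of which represented a superset of the associated acts on the Wikipedia page. The additional associated acts (underlined in the original figure) are Dire Straits, George Harrison, Freddie King, Phil Collins, T.D.F., Jeff Beck, Paul McCartney, Steve Winwood, B.B. King, The Beatles, and The Band.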

"The Yardbirds, John Mayall & the Bluesbreakers, Powerhouse, Cream, Free Creek, Dire Straits,

George Harrison, The Dirty Mac, Blind Faith, Freddie King, Phil Collins,

J.J. Cale, The Plastic Ono Band, Delaney, Bonnie & Friends, Derek and the Dominos,

T.D.F., Jeff Beck, Paul McCartney, Steve Winwood, B.B. King, The Beatles, The Band"@en
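Because the result is a single literal rather than a list of resources, comparing it with the infobox takes some care: splitting on commas alone would break "Delaney, Bonnie & Friends" in two. The following Python sketch, which is not part of the original system, removes the known infobox names first and then splits what remains. The function name is an assumption made for illustration.

```python
# Acts listed in the infobox on Clapton's Wikipedia page (from the text above).
INFOBOX_ACTS = [
    "The Yardbirds", "John Mayall & the Bluesbreakers", "Powerhouse", "Cream",
    "Free Creek", "The Dirty Mac", "Blind Faith", "J.J. Cale",
    "The Plastic Ono Band", "Delaney, Bonnie & Friends", "Derek and the Dominos",
]

# The single string returned by the associatedActs query (language tag dropped).
QUERY_RESULT = (
    "The Yardbirds, John Mayall & the Bluesbreakers, Powerhouse, Cream, "
    "Free Creek, Dire Straits, George Harrison, The Dirty Mac, Blind Faith, "
    "Freddie King, Phil Collins, J.J. Cale, The Plastic Ono Band, "
    "Delaney, Bonnie & Friends, Derek and the Dominos, T.D.F., Jeff Beck, "
    "Paul McCartney, Steve Winwood, B.B. King, The Beatles, The Band"
)


def additional_acts(result, known_acts):
    """Return the acts in `result` that are not among `known_acts`."""
    remaining = result
    # Remove longer names first so a short name never clips a longer one.
    for act in sorted(known_acts, key=len, reverse=True):
        remaining = remaining.replace(act, "")
    # Whatever is left between commas is an act the infobox does not list.
    return [part.strip() for part in remaining.split(",") if part.strip()]


EXTRA = additional_acts(QUERY_RESULT, INFOBOX_ACTS)
```

This approach only works when the reference list is already known; an unknown act whose name contains a comma would still be split incorrectly.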

It is unclear how there could be this disparity since the DBpedia data is derived from Wikipedia. Although several of the additional performers played with Clapton, they were not in bands together (as far as we could determine). Furthermore, several of the bands listed on the original Wikipedia page are arguably not bands in the general sense, since they existed only briefly and for a specific purpose. The Dirty Mac were a supergroup consisting of Eric Clapton, John Lennon, Keith Richards and Mitch Mitchell that came together for The Rolling Stones' TV special. Free Creek was a band composed of many musical artists, including Eric Clapton, Jeff Beck, and Keith Emerson, assembled for one super-session album. Powerhouse recorded only a few songs, only three of which were released on a compilation album. These "bands" are certainly not on a par with the others in the infobox since they were never intended to exist beyond their stated purpose.

Perhaps our interpretation of the associatedActs property was incorrect? Further examination of the DBpedia and Wikipedia documentation pointed us to the template for the infobox of musical artists, shown on the right side of Table 1 in the earlier Wikipedia section. The template description of associated_acts from Wikipedia follows. (Numbers have been added for ease of reference and the text has been reformatted.)

This field is for professional relationships with other musicians or bands

that are significant and notable to this artist's career.

This field can include, for example, any of the following:

a1) For individuals: groups of which he or she has been a member

a2) Other acts with which this act has collaborated on multiple occasions,

or on an album, or toured with as a single collaboration act playing together

a3) Groups which have spun off from this group

a4) A group from which this group has spun off

Separate multiple entries with commas.

The following uses of this field should be avoided:

b1) Association of groups with members' solo careers

b2) Groups with only one member in common

b3) Association of producers, managers, etc. (who are themselves acts)

with other acts (unless the act essentially belongs to the producer,

as in the case of a studio orchestra formed by and working exclusively

with a producer)

b4) One-time collaboration for a single, or on a single song

b5) Groups that are merely similar

Based on (a1), (a2) and (b4), it would seem that Powerhouse and arguably The Dirty Mac and Free Creek should be eliminated from the list of groups of which Clapton was a member. [7]

Further investigation brought us to the numerous DBpedia Infobox Mappings and specifically to the ontology mapping for the Infobox for Musical Artists. The (infobox) template property Associated_acts maps to two ontology properties, associatedBand and associatedMusicalArtist, meaning that the infobox property can refer to either a group (cases (a3) and (a4) above) or to an individual (cases (a1) and (a2)).

Therefore we turned our attention to queries involving the ontology properties

associatedBand and associatedMusicalArtist. The following query

asks for the bands in which Clapton was a member.

SELECT DISTINCT ?who WHERE

{<http://dbpedia.org/resource/Eric_Clapton> <http://dbpedia.org/ontology/associatedBand> ?who.

} ORDER BY ?who

The results are shown below. Note that the results are nearly identical to the string superset shown earlier. (The only exception is X-sample and a few band name variations.)

The same results are obtained if the predicate is replaced by http://dbpedia.org/ontology/associatedMusicalArtist.

http://dbpedia.org/resource/B.B._King

http://dbpedia.org/resource/Blind_Faith

http://dbpedia.org/resource/Cream_%28band%29

http://dbpedia.org/resource/Delaney,_Bonnie_&_Friends

http://dbpedia.org/resource/Derek_and_the_Dominos

http://dbpedia.org/resource/Dire_Straits

http://dbpedia.org/resource/Eric_Clapton%27s_Powerhouse

http://dbpedia.org/resource/Freddie_King

http://dbpedia.org/resource/Free_Creek_%28band%29

http://dbpedia.org/resource/George_Harrison

http://dbpedia.org/resource/J.J._Cale

http://dbpedia.org/resource/Jeff_Beck

http://dbpedia.org/resource/John_Mayall_&_the_Bluesbreakers

http://dbpedia.org/resource/Paul_McCartney

http://dbpedia.org/resource/Phil_Collins

http://dbpedia.org/resource/Steve_Winwood

http://dbpedia.org/resource/The_Band

http://dbpedia.org/resource/The_Beatles

http://dbpedia.org/resource/The_Dirty_Mac

http://dbpedia.org/resource/The_Plastic_Ono_Band

http://dbpedia.org/resource/The_Yardbirds

http://dbpedia.org/resource/X-sample

When we reverse the order of the subject and object, the results can be interpreted as musicians who have played on Clapton's albums. The query:

SELECT DISTINCT ?who WHERE

{?who <http://dbpedia.org/ontology/associatedBand> <http://dbpedia.org/resource/Eric_Clapton>.

} ORDER BY ?who

yields these results:

http://dbpedia.org/resource/Aashish_Khan

http://dbpedia.org/resource/Alan_Clark_%28keyboardist%29

http://dbpedia.org/resource/Albert_Lee

http://dbpedia.org/resource/Andy_Fairweather_Low

http://dbpedia.org/resource/B.B._King

http://dbpedia.org/resource/Billy_Preston

http://dbpedia.org/resource/Bobby_Keys

http://dbpedia.org/resource/Chris_Stainton

http://dbpedia.org/resource/Chuck_Leavell

http://dbpedia.org/resource/Dave_Carlock

http://dbpedia.org/resource/Dave_Mason

http://dbpedia.org/resource/Doyle_Bramhall_II

http://dbpedia.org/resource/Freddie_King

http://dbpedia.org/resource/Ian_Wallace_%28drummer%29

http://dbpedia.org/resource/Jamie_Oldaker

http://dbpedia.org/resource/Jeff_Beck

http://dbpedia.org/resource/Jesse_Ed_Davis

http://dbpedia.org/resource/Jim_Gordon_%28musician%29

http://dbpedia.org/resource/Leon_Russell

http://dbpedia.org/resource/Mac_and_Katie_Kissoon

http://dbpedia.org/resource/Marc_Benno

http://dbpedia.org/resource/Marcella_Detroit

http://dbpedia.org/resource/Nathan_East

http://dbpedia.org/resource/Otis_Spann

http://dbpedia.org/resource/P._P._Arnold

http://dbpedia.org/resource/Phil_Collins

http://dbpedia.org/resource/Phil_Palmer

http://dbpedia.org/resource/Pino_Palladino

http://dbpedia.org/resource/Plastic_Ono_Band

http://dbpedia.org/resource/Ray_Cooper

http://dbpedia.org/resource/Reverend_Zen

http://dbpedia.org/resource/Richard_Cole

http://dbpedia.org/resource/Rita_Coolidge

http://dbpedia.org/resource/Rob_Fraboni

http://dbpedia.org/resource/Sheryl_Crow

http://dbpedia.org/resource/Steve_Ferrone

http://dbpedia.org/resource/Steve_Jordan_%28musician%29

http://dbpedia.org/resource/Stevie_Ray_Vaughan

http://dbpedia.org/resource/The_Shaun_Murphy_Band

http://dbpedia.org/resource/Yvonne_Elliman

The same results are obtained if the predicate is replaced by http://dbpedia.org/ontology/associatedMusicalArtist.
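Once direct associations have been identified, an Eric Clapton Number is a shortest-path distance in the resulting graph. The Python sketch below shows one way to compute it with a breadth-first search, assuming that two musicians are directly linked when they appear in the same group. The memberships of The Dirty Mac and Free Creek come from the discussion above; "Hypothetical Band" and "Musician X" are invented solely to show a second hop.

```python
from collections import deque

BANDS = {
    "The Dirty Mac": ["Eric Clapton", "John Lennon", "Keith Richards", "Mitch Mitchell"],
    "Free Creek": ["Eric Clapton", "Jeff Beck", "Keith Emerson"],
    # Invented entry, present only to illustrate a distance of 2.
    "Hypothetical Band": ["Keith Emerson", "Musician X"],
}


def clapton_numbers(bands, root="Eric Clapton"):
    """Map each reachable musician to the hop count from `root`."""
    # Build an undirected graph: band-mates are neighbours of one another.
    neighbours = {}
    for members in bands.values():
        for member in members:
            neighbours.setdefault(member, set()).update(
                other for other in members if other != member
            )
    # Breadth-first search visits musicians in order of increasing distance.
    distance = {root: 0}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for nxt in neighbours.get(current, ()):
            if nxt not in distance:
                distance[nxt] = distance[current] + 1
                queue.append(nxt)
    return distance


NUMBERS = clapton_numbers(BANDS)
```

The quality of the numbers depends entirely on which associations are admitted as edges, which is why the ambiguity of the association properties matters.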

How are associatedBand, associatedMusicalArtist, and associatedActs related? We believe the relationship varies across performers depending upon how individual collaborators interpreted the terms. Consider the following similar queries and their very different results, including many performers who are unfamiliar to all of the present authors.

Figure 20: Comparison of associatedBand, associatedActs and associatedMusicalArtist

SELECT ?artist (count (?who) as ?count) {

?artist <http://dbpedia.org/ontology/associatedBand> ?who.

} ORDER BY DESC (?count) LIMIT 10

http://dbpedia.org/resource/Stan_Levey 80

http://dbpedia.org/resource/Frank_Fenter 46

http://dbpedia.org/resource/Gary_Kellgren 42

http://dbpedia.org/resource/Norman_Granz 42

http://dbpedia.org/resource/Tim_&_Bob 39

http://dbpedia.org/resource/Frankie_Banali 38

http://dbpedia.org/resource/Neil_Cooper_%28ROIR%29 37

http://dbpedia.org/resource/Ian_Wallace_%28drummer%29 34

http://dbpedia.org/resource/Tha_Dogg_Pound 34

http://dbpedia.org/resource/DonGuralEsko 31

------------------------------------------------------------------------------------

SELECT ?artist (count (?who) as ?count) {

?artist <http://dbpedia.org/property/associatedActs> ?who.

} ORDER BY DESC (?count) LIMIT 10

http://dbpedia.org/resource/Gary_Kellgren 42

http://dbpedia.org/resource/Johnny_Goudie 36

http://dbpedia.org/resource/Even_Steven_Levee 33

http://dbpedia.org/resource/Shelter_%28band%29 29

http://dbpedia.org/resource/Emmylou_Harris 29

http://dbpedia.org/resource/Conny_Plank 27

http://dbpedia.org/resource/Exit-13 27

http://dbpedia.org/resource/K.Will 27

http://dbpedia.org/resource/Warren_Zevon 27

http://dbpedia.org/resource/Damian_LeGassick 25

------------------------------------------------------------------------------------

SELECT ?person (count(?person) as ?count) {

?artist <http://dbpedia.org/ontology/associatedMusicalArtist> ?person.

} ORDER BY DESC (?count) LIMIT 10

http://dbpedia.org/resource/Snoop_Dogg 81

http://dbpedia.org/resource/Ozzy_Osbourne 63

http://dbpedia.org/resource/Wu-Tang_Clan 56

http://dbpedia.org/resource/Dr._Dre 52

http://dbpedia.org/resource/Guns_N%27_Roses 52

http://dbpedia.org/resource/Lil_Wayne 51

http://dbpedia.org/resource/Morning_Musume 50

http://dbpedia.org/resource/Jay-Z 50

http://dbpedia.org/resource/Bob_Dylan 48

http://dbpedia.org/resource/Miles_Davis 48
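When reading these three result sets, note that the first two queries count per subject (?artist), while the third counts per object (?person). The small Python sketch below illustrates the difference using a handful of associatedBand pairs taken from the Clapton result lists earlier in this section; it is an illustration of the two counting directions, not a reproduction of the queries.

```python
from collections import Counter

# (subject, object) pairs for an association property, from the lists above.
PAIRS = [
    ("Eric_Clapton", "B.B._King"),
    ("Eric_Clapton", "Phil_Collins"),
    ("Eric_Clapton", "Jeff_Beck"),
    ("B.B._King", "Eric_Clapton"),
    ("Phil_Collins", "Eric_Clapton"),
]

# Counting by subject: how many associations each artist declares.
declared = Counter(subject for subject, _ in PAIRS)

# Counting by object: how many other artists point at each artist.
cited = Counter(obj for _, obj in PAIRS)
```

Here Eric_Clapton declares three associations but is cited by only two, so the two rankings need not agree.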

Bridging the Data Sources

In order to address some of the more difficult questions or compare results between data sources, we needed a way to combine the RDF datasets, or at least to connect musical artist identifiers across DBpedia and MusicBrainz.

We employed the OpenLink Virtuoso Universal Server Virtuoso Universal Server as an RDF store. While there are other very good RDF stores such as 4store, the Virtuoso Universal Server [8] is compelling because it supports reasoning. Backward-chaining reasoning is the ability to derive new information from existing information at run time. This contrasts with forward-chaining reasoning, in which derived information is expressed explicitly. An example of forward-chaining reasoning would be creating and adding triples that represent new information inherent in what the datastore already contains. [9]

There are two major costs to leveraging backward-chaining reasoning. The first and foremost is that derived information is created each time it is needed, so the same information is quite likely to be derived repeatedly at run time. The offsetting advantage is that the derived information takes up no space in the database. The second cost, specific to Virtuoso, is that backward-chaining reasoning requires the use of specific predicates, none of which were available in the native datasets with which we worked.
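The trade-off between the two strategies can be seen in a toy triple store. The Python sketch below is a deliberate simplification and says nothing about how Virtuoso implements reasoning: it handles a single alias step only, the prefixed names are shorthand, and mb:track1 is a made-up resource.

```python
SAME_AS = "owl:sameAs"

TRIPLES = {
    ("mb:618b6900", SAME_AS, "dbr:Eric_Clapton"),
    ("dbr:Slowhand", "dbo:artist", "dbr:Eric_Clapton"),
    ("mb:track1", "ar:performer", "mb:618b6900"),  # hypothetical resource
}


def forward_chain(triples):
    """Forward chaining: store every derived statement explicitly."""
    aliases = [(s, o) for s, p, o in triples if p == SAME_AS]
    derived = set(triples)
    for a, b in aliases:
        for s, p, o in triples:
            if p == SAME_AS:
                continue
            # Copy statements about one alias onto the other.
            if o == a:
                derived.add((s, p, b))
            if o == b:
                derived.add((s, p, a))
    return derived


def predicates_backward(triples, target):
    """Backward chaining: resolve aliases each time the question is asked."""
    names = {target}
    for s, p, o in triples:
        if p == SAME_AS and target in (s, o):
            names.update((s, o))
    return sorted({p for s, p, o in triples if o in names and p != SAME_AS})


MATERIALISED = forward_chain(TRIPLES)
ANSWER = predicates_backward(TRIPLES, "dbr:Eric_Clapton")
```

Forward chaining grows the store from three triples to five, whereas the backward-chaining function leaves the store untouched and repeats the alias lookup on every call.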

Given that the IRI for Eric Clapton was different in DBpedia and MusicBrainz, we used forward chaining to express the relatedness between these two IRIs, thereby facilitating backward-chaining reasoning in queries. We used the SPARQL INSERT directive to create new statements that made the assertions explicit, as a forward-chaining reasoning strategy. Specifically, the assertions we added leveraged owl:sameAs [10], one of the predicates that Virtuoso uses to enable reasoning. The following query was used to create an assertion stating that a DBpedia subject IRI of type http://dbpedia.org/ontology/MusicalArtist corresponds to a MusicBrainz subject IRI of type http://musicbrainz.org/mm/mm-2.1#Artist whenever the DBpedia rdf-schema#label matches the MusicBrainz dc:title exactly.

SPARQL INSERT IN GRAPH <inference/sameAs>

{?mbiri <http://www.w3.org/2002/07/owl#sameAs> ?s}

WHERE {

?s <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/MusicalArtist>.

?s <http://www.w3.org/2000/01/rdf-schema#label> ?dbpedianame.

?mbiri <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://musicbrainz.org/mm/mm-2.1#Artist>.

?mbiri <http://purl.org/dc/elements/1.1/title> ?mbname.

FILTER (str(?mbname) = str (?dbpedianame)) };

This query resulted in the addition of 16,029 owl:sameAs assertions to our datastore and took 109,692,665 msec (30.47 hours) to complete.
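The matching rule in the INSERT query is an exact string comparison between a DBpedia label and a MusicBrainz title. The Python sketch below mimics that rule on a few rows to show what it does and does not link; it is an illustration only and makes no claim about how Virtuoso evaluates the query. The Cream rows, including the identifier mb:cream-placeholder, are assumed values chosen to show a near miss.

```python
from collections import defaultdict

DBPEDIA_LABELS = [
    ("http://dbpedia.org/resource/Eric_Clapton", "Eric Clapton"),
    ("http://dbpedia.org/resource/Cream_%28band%29", "Cream (band)"),  # assumed label
]

MUSICBRAINZ_TITLES = [
    ("http://musicbrainz.org/mm-2.1/artist/618b6900-0618-4f1e-b835-bccb17f84294",
     "Eric Clapton"),
    ("mb:cream-placeholder", "Cream"),  # placeholder identifier and title
]


def same_as_pairs(dbpedia_labels, musicbrainz_titles):
    """Pair IRIs whose names match exactly, as the FILTER in the query does."""
    # Index DBpedia IRIs by label so each title needs a single lookup.
    by_label = defaultdict(list)
    for iri, label in dbpedia_labels:
        by_label[label].append(iri)
    return [
        (mb_iri, db_iri)
        for mb_iri, title in musicbrainz_titles
        for db_iri in by_label.get(title, [])
    ]


PAIRS = same_as_pairs(DBPEDIA_LABELS, MUSICBRAINZ_TITLES)

# The reported run time, converted from milliseconds to hours.
HOURS = round(109_692_665 / 1000 / 3600, 2)
```

Exact matching links the two Eric Clapton IRIs but would miss any artist whose label carries a disambiguating suffix, so the 16,029 assertions are best read as a lower bound on the true overlap.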

Since neither these assertions nor the DBpedia and MusicBrainz assertions were in

the same NAMED GRAPH, it was necessary to use another unique

capability of the Virtuoso Universal Server called GRAPH GROUPs.

This enabled us to refer to multiple NAMED GRAPHs as if they were one. First we

created a GRAPH GROUP with the following command:

DB.DBA.RDF_GRAPH_GROUP_CREATE ('http://group.dbpedia.inference','1');

Then we placed the previously created owl:sameAs assertions, as well as

the NAMED GRAPHs containing DBpedia assertions and the MusicBrainz assertions

into the GRAPH GROUP:

DB.DBA.RDF_GRAPH_GROUP_INS('http://group.dbpedia.inference' , 'inference/sameAs');

DB.DBA.RDF_GRAPH_GROUP_INS('http://group.dbpedia.inference' , 'http://mytest.com');

DB.DBA.RDF_GRAPH_GROUP_INS('http://group.dbpedia.inference' , 'http://musicbrainz.com');

To demonstrate that the owl:sameAs assertions are indeed connecting the two datasets, consider the following query, which determines the properties that connect other resources to Eric Clapton. Compare this to the figure Clapton's DBpedia Properties - Version 1.

DEFINE input:same-as "yes"

SELECT DISTINCT ?predicate

FROM <http://group.dbpedia.inference> WHERE

{

?s ?predicate <http://dbpedia.org/resource/Eric_Clapton>.

} ORDER BY ?predicate

The result of this query is 30 properties, which is 7 more than the result when querying only DBpedia; 6 of the additional properties come from MusicBrainz. Compare this result to the previous query results shown in figures Clapton's DBpedia Properties - Version 1 and Clapton's DBpedia Properties - Version 2.

Clapton's Properties Combined: Clapton's Properties in Combined Datastore (DBpedia and MusicBrainz) (30 predicates)

http://dbpedia.org/ontology/artist

http://dbpedia.org/ontology/associatedBand

http://dbpedia.org/ontology/associatedMusicalArtist

http://dbpedia.org/ontology/composer

http://dbpedia.org/ontology/musicComposer

http://dbpedia.org/ontology/musicalArtist

http://dbpedia.org/ontology/musicalBand

http://dbpedia.org/ontology/partner

http://dbpedia.org/ontology/producer

http://dbpedia.org/ontology/spouse

http://dbpedia.org/ontology/starring

http://dbpedia.org/ontology/wikiPageDisambiguates

http://dbpedia.org/ontology/writer

http://dbpedia.org/property/associatedActs

http://dbpedia.org/property/before

http://dbpedia.org/property/currentMembers

http://dbpedia.org/property/music

http://dbpedia.org/property/pastMembers

http://dbpedia.org/property/producer

http://dbpedia.org/property/spouse

http://dbpedia.org/property/starring

http://dbpedia.org/property/writer

http://musicbrainz.org/ar/ar-1.0#composer

http://musicbrainz.org/ar/ar-1.0#instrument

http://musicbrainz.org/ar/ar-1.0#performer

http://musicbrainz.org/ar/ar-1.0#producer

http://musicbrainz.org/ar/ar-1.0#toArtist

http://musicbrainz.org/ar/ar-1.0#vocal

http://purl.org/dc/elements/1.1/creator

http://www.w3.org/2002/07/owl#sameAs

Any query that benefits from using a single IRI to refer to the same artist in both datasets becomes available by adding DEFINE input:same-as "yes" before the SELECT clause. The same 30 results can be obtained with the UNION query below. However, that query requires knowledge of the GUID-based MusicBrainz IRI for each musician of interest, whereas the query above takes advantage of the previously established correspondence between the methods of artist identification in DBpedia and MusicBrainz. Therefore, the owl:sameAs approach is clearly the better solution.

SELECT DISTINCT ?predicate WHERE {

{

?s ?predicate <http://dbpedia.org/resource/Eric_Clapton>.

}

UNION

{

?s ?predicate <http://musicbrainz.org/mm-2.1/artist/618b6900-0618-4f1e-b835-bccb17f84294>.

}

} ORDER BY ?predicate

You Say It's Your Birthday?

Given the ability to bridge the two datasets, we can now issue queries that compare data values across the sets. The query below obtains the birthdates for each musical artist in both sources and returns the discrepancies.

DEFINE input:same-as "yes" select ?musician ?DBPdate ?MBdate

{

?s <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/MusicalArtist>.

?s <http://dbpedia.org/ontology/birthDate> ?DBPdate .

?s <http://musicbrainz.org/mm/mm-2.1#beginDate> ?MBdate.

?s <http://www.w3.org/2000/01/rdf-schema#label> ?musician.

FILTER (str(?MBdate) != str (?DBPdate))

}

ORDER BY ?musician

A sampling of the nearly one thousand mismatched birthdates follows. Typically the differences are one day, one month, or one year, but note several major differences. We have yet to independently verify all of the discrepancies, but we note that the birthday of George Harrison is incorrect in MusicBrainz. In fact, MusicBrainz often uses "00-00" dates, indicating that only the year is known. It is probably safe to conjecture that Courtney Love is not 21 and Five For Fighting (singer-songwriter John Ondrasik) is not 14, so these dates more likely indicate when their musical careers started. Wikipedia confirms that 1997 is indeed the first "year active" for Five For Fighting, but Love's initial "year active" is 1982. Clearly the interpretation of MusicBrainz's beginDate property varies across artists.

Our tentative conclusion is that DBpedia is more accurate than MusicBrainz with respect to birthdates.

| musician | DBPdate | MBdate | note |
|---|---|---|---|
| 50 Cent | 1975-07-06 | 1976-07-06 | |
| Andy Partridge | 1953-11-11 | 1953-12-11 | |
| Astrud Gilberto | 1940-03-29 | 1940-03-30 | |
| Blind Willie Johnson | 1897-01-22 | 1902-00-00 | 5 year difference |
| Blind Willie McTell | 1898-05-05 | 1901-05-05 | 3 year difference |
| Carl Perkins | 1932-04-09 | 1928-08-16 | 4 year difference |
| Courtney Love | 1964-07-09 | 1990-00-00 | extremely different! |
| David Lee Roth | 1954-10-10 | 1955-10-10 | |
| Eddie Van Halen | 1955-01-26 | 1956-01-26 | |
| Edgar Winter | 1947-12-28 | 1946-12-28 | |
| Five for Fighting | 1965-01-07 | 1997-00-00 | extremely different! |
| Frankie Valli | 1934-05-03 | 1937-05-03 | 3 year difference |
| George Harrison | 1943-02-25 | 1943-02-24 | |
| Jennifer Lopez | 1969-07-24 | 1970-07-24 | |
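The kinds of mismatch described above (off by a day, a month, or a year, or a year-only MusicBrainz value) can be separated mechanically. The following Python sketch classifies a pair of date strings in the YYYY-MM-DD form shown in the results; the category wording and the function name are our own choices for illustration.

```python
def classify(dbp_date, mb_date):
    """Describe how a DBpedia date and a MusicBrainz date differ."""
    d_year, d_month, d_day = (int(part) for part in dbp_date.split("-"))
    m_year, m_month, m_day = (int(part) for part in mb_date.split("-"))
    # "YYYY-00-00" means MusicBrainz recorded only a year.
    if m_month == 0 and m_day == 0:
        return f"year only in MusicBrainz ({abs(d_year - m_year)} year gap)"
    if (d_year, d_month) == (m_year, m_month):
        return f"{abs(d_day - m_day)} day(s) apart"
    if (d_year, d_day) == (m_year, m_day):
        return f"{abs(d_month - m_month)} month(s) apart"
    if (d_month, d_day) == (m_month, m_day):
        return f"{abs(d_year - m_year)} year(s) apart"
    return "different in several fields"


SAMPLE = {
    "50 Cent": classify("1975-07-06", "1976-07-06"),
    "Andy Partridge": classify("1953-11-11", "1953-12-11"),
    "George Harrison": classify("1943-02-25", "1943-02-24"),
    "Courtney Love": classify("1964-07-09", "1990-00-00"),
    "Carl Perkins": classify("1932-04-09", "1928-08-16"),
}
```

Grouping the mismatches this way separates likely data-entry slips (a single field off by one) from cases such as Courtney Love, where beginDate appears to mean something other than a birthdate.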

Top Record Labels

One of our original questions was "Is there a predominant record label in the music world?" The following query answers that question.

SELECT ?label (count(?label) as ?count)

{

?artist <http://dbpedia.org/ontology/recordLabel> ?label

}

ORDER BY DESC (?count) LIMIT 20

The results are shown below.

http://dbpedia.org/resource/Columbia_Records 5762

http://dbpedia.org/resource/EMI 4215

http://dbpedia.org/resource/Warner_Bros._Records 3518

http://dbpedia.org/resource/Epic_Records 3509

http://dbpedia.org/resource/Atlantic_Records 3502

http://dbpedia.org/resource/Capitol_Records 3264

http://dbpedia.org/resource/Virgin_Records 2926

http://dbpedia.org/resource/RCA_Records 2590

http://dbpedia.org/resource/MCA_Records 1975

http://dbpedia.org/resource/Mercury_Records 1919

http://dbpedia.org/resource/Island_Records 1800

http://dbpedia.org/resource/A&M_Records 1697

http://dbpedia.org/resource/Sony_BMG 1513

http://dbpedia.org/resource/Elektra_Records 1480

http://dbpedia.org/resource/Reprise_Records 1406

http://dbpedia.org/resource/Arista_Records 1382

http://dbpedia.org/resource/Geffen_Records 1372

http://dbpedia.org/resource/Universal_Music_Group 1339

http://dbpedia.org/resource/Universal_Records 1277

http://dbpedia.org/resource/Interscope_Records 1226

Musical Genres

In order to determine which artist is most influential in rock music, we needed to be able to reliably specify the genre of interest. However, formulating queries involving genres is more difficult than it would seem. DBpedia (and presumably Wikipedia) defines 2,887 musical genres of which 330 contain "rock" in their label. Rock-related genres literally run the gamut from A to Z -- Aboriginal_rock to Zulu_rock (really!). To our surprise, the single concept of "rock and roll music" is represented by 18 distinctly different IRIs, as determined by the following query. [11]

SELECT DISTINCT (?genre)

{

?album <http://dbpedia.org/ontology/genre> ?genre.

?album <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/Album>.

FILTER regex (?genre, "[Rr]ock") .

FILTER regex (?genre, "[Rr]oll") .

}

ORDER BY ?genre

The variants of "rock and roll" are:

http://dbpedia.org/resource/British_Rock_and_Roll

http://dbpedia.org/resource/Real_Rock_and_Roll

http://dbpedia.org/resource/Rock%27n%27Roll

http://dbpedia.org/resource/Rock%27n%27roll

http://dbpedia.org/resource/Rock%27n_roll

http://dbpedia.org/resource/Rock_%27N%27_Roll

http://dbpedia.org/resource/Rock_%27n%27_Roll

http://dbpedia.org/resource/Rock_%27n%27_roll

http://dbpedia.org/resource/Rock_%27n_Roll

http://dbpedia.org/resource/Rock_&_Roll

http://dbpedia.org/resource/Rock_&_roll

http://dbpedia.org/resource/Rock_N%27_Roll

http://dbpedia.org/resource/Rock_and_Roll

http://dbpedia.org/resource/Rock_and_Roll_music

http://dbpedia.org/resource/Rock_and_roll

http://dbpedia.org/resource/Rock_n%27_Roll

http://dbpedia.org/resource/Rock_n_Roll

http://dbpedia.org/resource/Spanish_language_rock_and_roll

The first and last results above could be considered outliers since they are narrowings of the generic rock and roll classification.
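One possible way to cope with such variants is to reduce each IRI to a normalized key before comparing genres. The Python sketch below is an assumption about how that could be done and was not part of our system: it decodes percent-escapes, lowercases, treats "&" and a stand-alone "n" as "and", and drops a trailing "music".

```python
import re
from collections import defaultdict
from urllib.parse import unquote

# Local names of the 18 IRIs listed above (common prefix removed).
VARIANTS = [
    "British_Rock_and_Roll", "Real_Rock_and_Roll", "Rock%27n%27Roll",
    "Rock%27n%27roll", "Rock%27n_roll", "Rock_%27N%27_Roll",
    "Rock_%27n%27_Roll", "Rock_%27n%27_roll", "Rock_%27n_Roll",
    "Rock_&_Roll", "Rock_&_roll", "Rock_N%27_Roll", "Rock_and_Roll",
    "Rock_and_Roll_music", "Rock_and_roll", "Rock_n%27_Roll", "Rock_n_Roll",
    "Spanish_language_rock_and_roll",
]

# "&" or an "n" that is not part of a word, with any adjacent apostrophes.
_AND = re.compile(r"[\s']*(?:&|(?<![a-z])n(?![a-z]))[\s']*")


def genre_key(iri):
    """Reduce a genre IRI (or local name) to a normalized comparison key."""
    name = unquote(iri.rsplit("/", 1)[-1]).lower().replace("_", " ")
    name = _AND.sub(" and ", name)
    name = re.sub(r"\s+", " ", name).strip()
    return re.sub(r" music$", "", name)


def group_variants(iris):
    """Group IRIs that share the same normalized key."""
    groups = defaultdict(list)
    for iri in iris:
        groups[genre_key(iri)].append(iri)
    return dict(groups)


GROUPS = group_variants(VARIANTS)
```

With these rules the 15 generic spellings fall under one key, while the British, "Real" and Spanish-language variants remain separate. The rules are tuned to this list and would need review before being applied to all 2,887 genres.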

Although Clapton is identified by only a few genres on Wikipedia, his albums fall into 18 genres, as determined by the query:

SELECT DISTINCT (?genre) WHERE

{

?s <http://dbpedia.org/ontology/artist> <http://dbpedia.org/resource/Eric_Clapton>.

?s <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/Album>.

?s <http://dbpedia.org/ontology/genre> ?genre

}

ORDER BY ?genre

Genres associated with Clapton's albums follow. Note four variants each for the concepts "blues-rock" and "rock and roll".

http://dbpedia.org/resource/Acoustic_blues

http://dbpedia.org/resource/Blues

http://dbpedia.org/resource/Blues-Rock

http://dbpedia.org/resource/Blues-rock

http://dbpedia.org/resource/Blues_Rock

http://dbpedia.org/resource/Blues_rock

http://dbpedia.org/resource/British_Blues

http://dbpedia.org/resource/Electric_blues

http://dbpedia.org/resource/Folk_music

http://dbpedia.org/resource/Jazz

http://dbpedia.org/resource/Orchestral

http://dbpedia.org/resource/Pop_music

http://dbpedia.org/resource/Reggae

http://dbpedia.org/resource/Rock_%28music%29

http://dbpedia.org/resource/Rock_and_Roll

http://dbpedia.org/resource/Rock_and_roll

http://dbpedia.org/resource/Rock_music

http://dbpedia.org/resource/Soul_blues

For any given album, more than one genre may apply. For example, the 1975 album "There's One in Every Crowd" is classified as both reggae and blues-rock. For those wondering which Clapton album could possibly be considered jazz or orchestral, that distinction belongs to the first "Lethal Weapon" soundtrack, which is also designated as blues. However, the genre query above does not capture all genres associated with Clapton. For example, the genre for the "Lethal Weapon 3" soundtrack is simply "soundtrack".

If we wish to display all of Clapton's blues and rock albums, we could use a query such as:

SELECT DISTINCT ?genre ?album
WHERE {
  ?album <http://dbpedia.org/ontology/artist> <http://dbpedia.org/resource/Eric_Clapton> .
  ?album <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/Album> .
  ?album <http://dbpedia.org/ontology/genre> ?genre .
  # str() keeps the test portable: regex() is defined on string literals, not IRIs
  FILTER ( regex(str(?genre), "[Rr]ock") || regex(str(?genre), "[Bb]lues") )
}
ORDER BY ?album

To display all of Clapton's albums and their associated genre(s), we used the following query:

SELECT ?album ?genre
WHERE {
  ?album <http://dbpedia.org/ontology/artist> <http://dbpedia.org/resource/Eric_Clapton> .
  ?album <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/Album> .
  ?album <http://dbpedia.org/ontology/genre> ?genre .
}
ORDER BY ?album

The result, including albums with several genres listed, follows. (The common portion of the IRI, http://dbpedia.org/resource/, has been removed from each resource to make the results more readable.)

album genre

24_Nights Rock_music

24_Nights Blues

461_Ocean_Boulevard Blues-rock

Another_Ticket Blues-rock

August_%28album%29 Rock_music

August_%28album%29 Pop_music

Back_Home_%28Eric_Clapton_album%29 Blues-Rock

Backless Rock_and_roll

Backtrackin%27 Rock_%28music%29

Behind_the_Sun_%28Eric_Clapton_album%29 Rock_music

Behind_the_Sun_%28Eric_Clapton_album%29 Pop_music

Blues_%28Eric_Clapton_album%29 Blues-rock

Clapton_%282010_album%29 Blues-rock

Clapton_Chronicles:_The_Best_of_Eric_Clapton Rock_and_Roll

Crossroads_%28Eric_Clapton_album%29 Blues-rock

Crossroads_2:_Live_in_the_Seventies Blues-rock

E._C._Was_Here Blues-rock

Eric_Clapton%27s_Rainbow_Concert Blues-rock

Eric_Clapton_%28album%29 Rock_and_Roll

From_the_Cradle Blues

From_the_Cradle Electric_blues

From_the_Cradle Soul_blues

From_the_Cradle British_Blues

Guitar_Boogie Rock_and_roll

Guitar_Boogie Blues-rock

Journeyman_%28album%29 Blues-rock

Just_One_Night_%28album%29 Blues-rock

Lethal_Weapon_%28soundtrack%29 Jazz

Lethal_Weapon_%28soundtrack%29 Blues

Lethal_Weapon_%28soundtrack%29 Orchestral

Live_in_Hyde_Park_%28Eric_Clapton_album%29 Rock_music

Live_in_Hyde_Park_%28Eric_Clapton_album%29 Blues

Live_in_Japan_%28George_Harrison_album%29 Rock_and_roll

Me_and_Mr._Johnson Blues

Money_and_Cigarettes Blues-rock

No_Reason_to_Cry Rock_and_roll

One_More_Car,_One_More_Rider Blues-rock

Pilgrim_%28Eric_Clapton_album%29 Rock_music

Pilgrim_%28Eric_Clapton_album%29 Blues

Pilgrim_%28Eric_Clapton_album%29 Pop_music

Reptile_%28album%29 Rock_music

Reptile_%28album%29 Blues

Riding_with_the_King_%28B._B._King_and_Eric_Clapton_album%29 Blues-rock

Riding_with_the_King_%28B._B._King_and_Eric_Clapton_album%29 Blues_rock

Slowhand Rock_music

Steppin%27_Out_%28Eric_Clapton_album%29 Blues-rock

The_Cream_of_Clapton Rock_music

The_Cream_of_Clapton Blues_Rock

The_Cream_of_Eric_Clapton Rock_music

The_History_of_Eric_Clapton Rock_music

The_History_of_Eric_Clapton Blues

There%27s_One_in_Every_Crowd Reggae

There%27s_One_in_Every_Crowd Blues-rock

Time_Pieces:_The_Best_of_Eric_Clapton Blues_Rock

Unplugged_%28Eric_Clapton_album%29 Folk_music

Unplugged_%28Eric_Clapton_album%29 Acoustic_blues

However, if we omit a reference to genre and simply ask for Clapton's albums using the query:

SELECT ?album
WHERE {
  ?album <http://dbpedia.org/ontology/artist> <http://dbpedia.org/resource/Eric_Clapton> .
  ?album <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <http://dbpedia.org/ontology/Album> .
}
ORDER BY ?album

then the results contain seven additional albums, listed below. We have not been able to determine the reason for this disparity, since genre information is present for each of them when we dereference their IRIs on the DBpedia site.

Clapton_%281973_album%29 dbpprop:genre Rock

[compilation from 1973]

Complete_Clapton dbpprop:genre Blues

[compilation from 2007]

Edge_of_Darkness_%28soundtrack%29 dbpprop:genre Soundtracks

[18 minute 1985 soundtrack for British TV series]

Eric_Clapton_at_His_Best dbpprop:genre Rock

[compilation from 1972]

Lethal_Weapon_3_%28soundtrack%29 dbpprop:genre Orchestral, Jazz, and Blues

[Wikipedia soundtrack entry shares page with the movie; 2 infoboxes]

Live_from_Madison_Square_Garden_%28Eric_Clapton_and_Steve_Winwood_album%29 dbpprop:genre Blues/Rock

[note the slash]

Rush_%28soundtrack%29 dbpedia-owl:type dbpedia:Soundtrack

[Wikipedia soundtrack entry shares page with the movie; but no album infobox]
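The comparison between the two result sets amounts to a set difference. A minimal sketch follows, assuming the results of both queries have already been fetched into Python lists; the function name albums_missing_genre is hypothetical, and the data shown is only a small subset of the rows listed above.

```python
def albums_missing_genre(all_albums, album_genre_rows):
    """Return albums present in the album-only result but absent
    from the (album, genre) result, in sorted order."""
    with_genre = {album for album, _genre in album_genre_rows}
    return sorted(set(all_albums) - with_genre)


# Illustrative subset only, taken from the listings above.
all_albums = [
    "Backless",
    "Complete_Clapton",
    "Rush_%28soundtrack%29",
    "Slowhand",
]
album_genre_rows = [
    ("Backless", "Rock_and_roll"),
    ("Slowhand", "Rock_music"),
]

missing = albums_missing_genre(all_albums, album_genre_rows)
print(missing)
```

Running the same comparison over the complete result sets would reproduce the list of additional albums, although it would not by itself explain why they are missing from the genre query.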

A better solution for managing the permutations among the representations of the genre concept "Rock and Roll", as well as its narrowings, would be to again use reasoning. The semantically equivalent variant genres could be represented as rdfs:subProperties of one another, thereby enabling a single genre representation to refer to many. [12] This would require steps analogous to those we followed earlier: create and load assertions, define a graph, define a graph group, and in this case define a rule_set in Virtuoso. Then, using the proper syntax, we could query using that rule_set to include exactly the genres we intend to use in our queries. A less elegant approach would be to enumerate them one by one and construct a UNION of results.
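The enumeration approach can be mechanized. The sketch below generates a query with one UNION branch per genre IRI; it is an illustration under stated assumptions rather than a query we ran. The function name build_union_query is hypothetical, and the four blues-rock IRIs are the variants found among Clapton's albums.

```python
DBO = "http://dbpedia.org/ontology/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def build_union_query(artist_iri, genre_iris):
    """Hypothetical helper: build a SPARQL query selecting the artist's
    albums whose genre is any one of the enumerated genre IRIs."""
    if not genre_iris:
        raise ValueError("at least one genre IRI is required")
    # One group graph pattern per genre variant, joined by UNION.
    branches = "\n  UNION\n".join(
        f"  {{ ?album <{DBO}genre> <{genre}> }}" for genre in genre_iris
    )
    return (
        "SELECT DISTINCT ?album\n"
        "WHERE {\n"
        f"  ?album <{DBO}artist> <{artist_iri}> .\n"
        f"  ?album <{RDF_TYPE}> <{DBO}Album> .\n"
        f"{branches}\n"
        "}\n"
        "ORDER BY ?album"
    )


RESOURCE = "http://dbpedia.org/resource/"
BLUES_ROCK_VARIANTS = [
    RESOURCE + name
    for name in ("Blues-Rock", "Blues-rock", "Blues_Rock", "Blues_rock")
]

query = build_union_query(RESOURCE + "Eric_Clapton", BLUES_ROCK_VARIANTS)
print(query)
```

The list of variants still has to be curated by hand, which is why we regard the reasoning-based approach as the more elegant of the two.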

Limitations and Further Efforts

We recognize several limitations in our work to date:

- data inconsistencies

- need for further RDF visualization work

- need to address more of the original problems

- constructing queries across datasets

Data Inconsistencies

While we would wish the source RDF data could be regarded as ground truth, we realize there are several problems with our sources.

- Errors of omission: Since the original data in Wikipedia is community-entered, it is predictable that certain facts that are true will be missing from the data. Some of these facts may be obscure, while others may be obvious to a subject matter expert for the given topic. For example, what if Eric Clapton were to enter his own data about himself?

- Poor data curation: In some cases, Wikipedia data may have been present and complete, but there might have been problems in the extraction process from Wikipedia to DBpedia.

- Erroneous data: Such problems are due to simple data entry errors, unintentional errors in stating what the data entry person considers facts, or possibly intentional falsehoods or unsubstantiated facts.

- Unclear semantics: The various properties which were of importance to us were generally not defined in the ontology, as discussed in Working Definitions. We struggled to interpret these terms in a consistent manner. It is possible that individuals who care less about the precision of information may have entered relationships without using the correct semantics. We believe that the ambiguity of the musician-related properties was the most significant problem with the reliability of the data and therefore the biggest challenge in testing our hypothesis.

Further RDF Visualization Work

Since AJAX facilitates updating a web page without reloading the entire page, we plan to insert additional links within the SVG graphic to add more interactivity, invoking additional queries. Links could also be added during the module processing. CSS and XSLT could also be used to enhance the XML presentation.

The current visualization graph is quite wide and long, making it difficult to view in a web browser without additional panning and zooming capabilities. Useful visualization of the result set is difficult, but we intend to improve the visualization to facilitate traversing the dataset using hyperlinks. The hyperlinks are there now, but there is currently a problem with the JavaScript POST method.

Further Attempts to Address the Original Problems

We initially formulated nearly two dozen questions [see Problems] that we believed we could use the DBpedia and MusicBrainz RDF datasets to answer. As of this July writing, few of these questions have been answered definitively. The following are the questions that, if properly bounded, we should be able to address in the future.

- Which recording artist has directly played with the most musicians?

- Which recording artist has the most connections within six degrees?

- Which musician has been a session man for the greatest number of artists?

- Which recording artist was most active during a particular decade?

- Among all artists of a particular genre, who has played with the most other musicians?

- Which rock artist's extended graph has the most other artists in 2 degrees? 3 degrees? 4 degrees?

- Who has appeared on the most albums?

- Which musician-related properties are reversible (inverse makes sense)?

- Who created the most songs?

- Which song has been recorded the most times by any artist? ("Yesterday" and "White Christmas" are typically cited.)

- What is the average age of a musician when he/she first joined a band?

Other questions we may potentially be able to address include:

- If we weight results by the length of time a band stays together, how does that impact other queries?

- Does the total number of songs or albums released correlate with other measures of success?

- Which solo artist has had the longest career?

- Which band has been together (in some form) the longest time?

- For bands with changing membership, can we conclude which configuration lasted the longest?

- What is the "Eric Clapton number" (a la Kevin Bacon number) for various musicians?
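Several of these questions, including the "Eric Clapton number", reduce to shortest-path distances in an undirected "played with" graph. A minimal sketch using breadth-first search follows. It assumes the relationships have already been extracted as pairs of names; the function names are hypothetical, the Mayall pairs come from the introduction, and "Musician A" is a placeholder rather than real data.

```python
from collections import deque


def undirected(pairs):
    """Build an adjacency map from (musician, musician) pairs."""
    graph = {}
    for a, b in pairs:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def separation_numbers(played_with, start):
    """Breadth-first search: number of hops from `start` to every
    musician reachable through the played-with graph."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for neighbor in played_with.get(current, ()):
            if neighbor not in distance:
                distance[neighbor] = distance[current] + 1
                queue.append(neighbor)
    return distance


# Toy data: the Mayall pairs are from the introduction;
# "Musician A" is a hypothetical placeholder.
pairs = [
    ("John Mayall", "Eric Clapton"),
    ("John Mayall", "Peter Green"),
    ("John Mayall", "Mick Taylor"),
    ("Peter Green", "Musician A"),
]

clapton_numbers = separation_numbers(undirected(pairs), "Eric Clapton")
for name, hops in sorted(clapton_numbers.items(), key=lambda item: item[1]):
    print(hops, name)
```

In this toy graph John Mayall has a Clapton number of 1, Peter Green and Mick Taylor have 2, and the placeholder musician has 3. Counting the entries of the returned dictionary whose distance is at most n would likewise address the "connections within n degrees" questions, subject to the data quality caveats discussed above.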

Queries Across Datasets

Since we have established a mechanism to refer to a given artist in both datasets using a single IRI, we are prepared to ask queries that span the datasets, including queries that would not be possible without both sources. A few such queries are as follows:

-

Which albums of a given artist occur in one dataset but not the other? Since we are using Virtuoso which does not support the SPARQL 1.1