1. Introduction[1]

In 2018, the Internet Shakespeare Editions (ISE) software and processing pipelines failed. Its

outdated applications, principally Java 7, which had been end-of-life

for

quite some time by then, presented a serious security risk to the host institution.

The

version of Apache Cocoon (2.1.12, released 2014) that served up the ISEʼs webpages

was

incompatible with later versions of Java. Thus one of the oldest and most visited

Shakespeare websites in the world went dark after twenty-three years, at the moment

of

the upgrade to Java 11. The underlying text files remained in a Subversion repository,

however, and were gifted to the University of Victoria in 2019. Linked Early Modern

Drama Online (LEMDO) is now preparing some of these files, in collaboration with the

editors thereof, for republication by the University of Victoria on the LEMDO platform

in a new ISE anthology.

Those files were prepared in a boutique markup language that borrowed

elements from various SGML and XML markup languages—principally the Renaissance

Electronic Texts (RET) tags,[2] COCOA, Dublin Core, TEI, and HTML—and created new elements as needed. IML

never claimed to follow either the SGML specification or the XML specification. In

general, though, the tags used for encoding the primary texts (old-spelling

transcriptions and editorially modernized texts) were roughly SGML. The tags used

for

encoding the apparatus—the annotations and collations—were XML-compliant and could

be validated against a schema. Critical paratexts and born-digital pages were encoded

in

XWiki syntax, although most users of the XWiki platform used the built-in WYSIWYG

interface and never looked at the markdown view. Metadata tagging evolved over the

lifetime of the project; it went from project-specific codes to Dublin Core Metadata

Specification and eventually to something similar to TEI. This diverse collection

of

tags was never given a formal name; the tags were known as ISE tags

in

the Editorial Guidelines. The LEMDO team began calling it IML for convenience, an

acronym for ISE Markup Language

that has since been taken up by former

ISE platform users to talk about their old markup language.[3]

The builders of the LEMDO platform have made a different calculus than the builders

of

the ISE platform had made. LEMDO opts for industry-standard textual encoding; LEMDO

is powered by TEI, the XML markup language of the Text Encoding Initiative (TEI).

Our constrained tagset is fully TEI-compliant and valid against the TEI-all schema.

LEMDO can use the many tools and codebases that work with TEI-XML to produce HTML

and CSS. As founding members of The Endings Project,[4] lead LEMDO developer Martin Holmes and LEMDO Director Janelle Jenstad insisted that

LEMDO produce only static text, serialized as HTML and CSS. With the development of

The Endings Projectʼs Static Search,

[5] by which we preconcordance each anthology site before we release it, we have no need

for any server-side dependencies.

By opting for a boutique SGML-like markup language, the ISE minimized the demand on

editors. However, IML required complex processing, and was, at the end of the day,

entirely dependent on

custom toolchains and dynamic server capabilities. In other words, IML and LEMDOʼs

TEI

are near textbook cases of the tradeoff between SGML and XML described by Norman Walsh

at Balisage 2016: the ISE had reduced the complexity of annotation at

considerable cost in implementation

while LEMDO aims to reduce

implementation cost at the expense of simplicity in annotation

(Walsh 2016). We gain all the affordances of TEI and its capacity

to make complex arguments about texts, but the editors (experts in textual studies

and

early modern drama) and encoders (English and History students) have a steep learning

curve. All the projects that wish to produce an anthology using the LEMDO platform

at UVic—including the new ISE under new leadership—understand this trade-off.

Most of the scholarship on SGML to XML conversion was published in the early 2000s.

Most of the tools and recommendations for converting boutique markup to XML assume

that the original markup was either TEI-SGML (Bauman et al 2004) or SGML-compliant. IMLʼs opportunistic markup hybridity means these protocols and

tools cannot be adopted wholesale. Elisa Beshero-Bondar argues eloquently for improving

and enriching a project by re-encoding it, but also notes that an earlier attempt

to up-transform

the Pennsylvania Electronic Edition (PAEE) of Frankenstein had potentially damaged a cleaner earlier encoding

(Beshero-Bondar 2017). Her article is a salutary reminder to take great care in any process of conversion

to respect the original scholarship. Given the work involved and the potential risks,

we must ask is it worth it to re-encode this project?

In the case of the ISE, even after twenty-three years, the project still offers the

only open-access critical digital editions of Shakespeareʼs plays. With more than

three million site visits per month at last count (in April 2017) and classroom users

around the world, the ISE continues to be an important global resource, whose worth

was proven once again during the pandemic as teachers turned to online resources more

than ever.[6] Furthermore, the project was not finished; only eight of 41 projected digital editions[7] had been completed at the time of the server failure, leaving many editors with no

publication venue for their ongoing work. Finally, the failure of the platform put

us in a different situation than Beshero-Bondar and her collaborators in that the

PAEE still serves up functional pages, whereas the crude staticization of the ISE

site preserved only frozen content without many of the functionalities. We expect

the old ISE site to lose even more functionality over the next ten years because of

its reliance on JQuery libraries that have to be occasionally updated; the most recent

update, in June 2021, altered the way annotations display in editions, for example,

thus changing the user experience. Exigency and urgency meant that we had to build

a new platform for ongoing and new work while converting and remediating old work.

Because the particular conversions and remediations we describe in this paper are

not processes that can be extended to any other conversion process—IML being used

only for early modern printed drama and requiring the custom processes of the now-defunct

ISE software to be processed into anything—we also have to ask is there anything to be learned from a conversion and remediation that is so specific

to one project?

We argue that there are extractable lessons from this prolonged process. The obvious

lesson is that one should not build boutique projects with boutique markup language;

however, because boutique projects continue to fail, the more extensible lessons pertain

to how we might go about saving the intellectual work that went into such legacy projects.

This paper begins by describing IML, which has never before been the subject of critical analysis. Even though the language itself has little value now that it cannot be processed by any currently functional toolchains, analysis of its semantics and intentions may offer a case study both in how a markup language can go awry with the best of intentions and how a markup language can be customized for precise, context-specific research questions. We then describe the conversions we wrote to generate valid TEI from this hybrid language and the challenges we faced in capturing editorial intentions that had been expressed in markup that made different claims than those facilitated by TEI. We articulate our concern about the intensive labour that goes into the largely invisible work of conversion and remediation, especially when students find themselves making intellectually significant editorial contributions. We conclude our paper with some recommendations for late-stage conversion of boutique markup to XML. Our experience of converting a language that was neither XML- nor SGML-compliant may offer some guidance to other scholars and developers trying to save legacy projects encoded in idiosyncratic markup languages.

Our central question is how to do this work in a replicable, principled, efficient,

transferable, and well documented way. It needs to be replicable because some of the

editors who were contributing editions to the old ISE platform are still encoding

the

components of their unfinished editions in IML for us to convert. It needs to be

principled because LEMDO is much more than a conversion project; LEMDO hosts five

anthologies already,[8] two of which never used IML at all and should not be limited to a TEI tagset

that replicates IML. It needs to be efficient because of the sheer scale of this

preservation project; we expect to have converted and remediated more than 1500

documents by the time all the lingering IML files come in. The transferable part of

this

paper is not the mechanics of converting IML to TEI (a skillset we hope will die with

us), but rather the work of thinking through how markup languages make an argument

about

the text. How can we transform the markup language in a way that contributes to the

editorʼs argument without drastically changing it? The classic

validate-transform-validate

pipeline and the subsequent hand

remediation by a trained research assistant are generally not a simple substitution

of

one tag for another; the work is much more like translation between two languages

with

different semantic mechanisms; one has to understand the nuances of both languages

and

the personal idiom of the language user. We are participating in a kind of asynchronous

conversation with the editors, speaking with the dead in a number of cases; so that

future users understand what they said and how we translated it, the work must be

well

documented.

2. IML as Markup Language

Although it never called itself markdown,

IML had similar goals as the

markdown languages that developed from 2004 onward.[9] It was meant to be readable and to require only a plain-text editor. While some tags

were hierarchical, others were non-hierarchical and could cross the boundaries of

dominant tag hierarchy. Sometimes keyboarding was allowed to substitute for encoding.

For incomplete verse lines that were completed by other speakers, editors could use

tabs to indicate the medial and final parts of the initial line fragment. Editors

prepared their editions in a plain-text editor such as

TextWrangler/BBEdit or, more often, in word processing software like Microsoft Word.

Editors typed their content and their tags as they went, with no prompting from a

schema

nor any capacity to validate their markup. Such a practice increases the margin for

error as the markup becomes more complex and less like markdown. Editors dutifully

typed

angle brackets with no real understanding of well-formedness or conformance. The onus

of

producing valid markup was shifted to a senior editor, who spent hours adding closing

tags, turning empty tags into wrappers and vice versa, and finding the logical place

for

misplaced tags. The tidy .txt output had the advantage of being highly readable for

the

editor (see Figure 1); editors could make corrections to the file

without an XML editing program. However, they were rightly frustrated by the requirement

to proofread

the tags in the .txt file returned to them; it is hard to proofread something one

does not fully

understand.

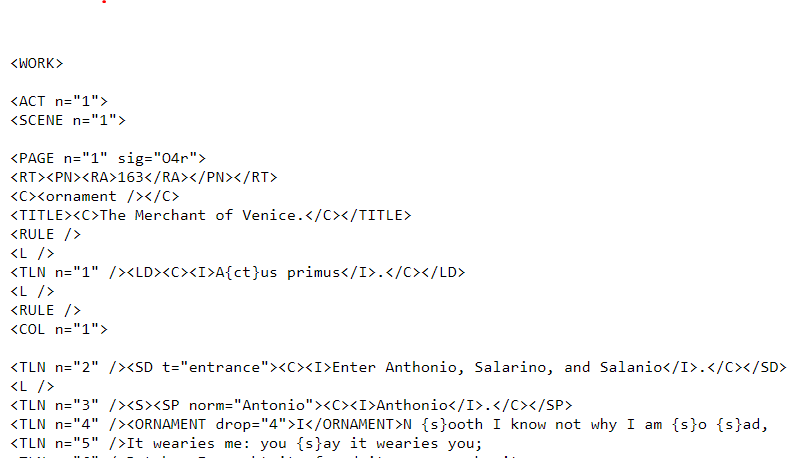

Figure 1

A transcription of the 1623 folio text of The Merchant of Venice, with IML tagging.

IML worked reasonably well for the old-spelling transcriptions, as might be expected

given the origin of IML in the tagset developed by Ian Lancashire for Renaissance Electronic Texts (RET).[10] The markup specification for RET was developed as an alternative to TEI and arose

out of Lancashireʼs belief that SGML and TEI make anachronistic assumptions about text that fly in the face of the

cumulative scholarship of the humanities

(Lancashire 1995). IML had simple elements for capturing features of the typeface and print layout.

IML markup was less successful for the modern critical texts, and even less successful

for the annotations and collations, for which the ISE did eventually create an XML-compliant

tagset and schema (borrowing heavily from TEI). In other words, for the modern text

where editors had to be most argumentative, RET-inspired IML did not have the critical

tagset to capture their arguments fully.

In its final form, IML had at least 87 elements. Additional tags had been created and deprecated over time.[11] These tags could be deployed in eight different contexts, with qualifying attributes and values. Those contexts were:

-

anywhere

-

all primary texts (old spelling and modern)

-

only old-spelling primary texts

-

only modern-spelling primary texts

-

secondary texts

-

all apparatus files (collations and annotations)

-

only annotations

-

only collations

These tags performed various functions and often mixed descriptive and interpretive tagging. To build the conversions, LEMDO had to analyze IML carefully and determine what information it was trying to capture about texts. From LEMDOʼs perspective, IML tags were meant to achieve the following:

-

capture structural features via elements like <TITLE>, <ACT>, <SCENE>, <S> (for speech), <SP> (for speaker), and <SD> (for stage direction).

-

describe the mode of the language via a <MODE> tag (with

@t="verse",@t="prose"), or@t="uncertain") -

identify intertextual borrowings and foreign words via the <SOURCE> and <FOREIGN> elements

-

capture the mise-en-page of the printed book via elements like <PAGE>, <CW> (catchword), and the empty element <RULE/> (ruled line)

-

describe font via elements like <I> (italics), <J> (justified), <SUP> (superscript), and <HW> (hungword, also known more precisely as a turnover or turnunder)

-

expand abbreviations like

yt

tothat

(so that the text could searched) -

mark the beginning of compositorial lines via <TLN> (Through Line Number, a numbering system applied in the twentieth century to the 1623 folio of Shakespeareʼs works)

-

capture the idiosyncracies of typography with special typographic units wrapped in braces thus: {s} for long s, {{s}h} for a ligature sh where the s is a long s, and so on for over 40 common pieces of type in an early modern case

The structural elements were particularly problematic for our conversions of the old-spelling

(OS) texts. For the OS texts, for example, the transcriber/encoder was meant to capture

the typography and mise-en-page in a representational

way, using markup to describe the way the printed page looked. However, the encoder

of the OS texts also performed interpretive work that LEMDO and every other editorial

series (print or digital) defers to a modern-spelling text. Encoders inserted modern

act and scene breaks in the OS text so that the OS text could be linked to the modern

text, even though most early modern plays do not consistently mark act and scene breaks

beyond the first scene or two. Likewise, stage directions are often but not always

italicized in an early modern printed playbook. The transcriber/encoder had to perform

the editorial labour of determing that a string set in italic type was not merely

something to be tagged with <I> but something that required an <SD> tag as well. Original

stage directions are privileged in editions of early modern drama because they offer

us an otherwise inaccessible technical theatrical vocabulary

(Dessen and Thomson 1999) and are thought to capture early staging practices, so it is easy to understand

the temptation to add interpretive tagging at this otherwise descriptive stage. Such

tagging would allow one to generate a database of original stage directions, although

the ISE never did mobilize that tagging. Speech prefixes are also readily identifiable

typographically in an early modern printed playbook, being italicized, followed by

a period, and often idented. Again, however, the encoder of the IML-tagged OS text

went beyond describing the typography and added <SP> tags. Furthermore, the encoder

was required to identify the speaker of each speech, a critical act that requires

interpretation of often ambiguous speech prefixes in the early printed texts, correction

of compositorial error, and critical inference in order to point the <SP> to the right

entity.

The modernized texts used a few of the tags created for old-spelling texts, the structural

divisions in particular, but the aim in tagging these texts was to capture the logical

functions of words and literary divisions. The core tagset for the modern texts was

very small: nine hierarchically nested functionally descriptive tags and one prescriptive

tag: <I> (surrounds words to be italicized

). This latter tag has caused us significant grief in our conversions because we often

cannot tell why the editor wanted to italicize the output. Other non-hierarchical

tags allowed in the modern text did the work of:

-

identifying prose and verse with a <MODE> tag with three possible @type values: verse, prose, or uncertain;

-

including an anchor-like <TLN> tag that connected the modern text to its old-spelling counterpart;

-

marking the beginning of editorial lines with <L> self-closing tags with an @n value and an arabic numeral; and

-

allowing editors to identify props listed in stage directions, implied by the text as being required, or used in their experimental Performance-As-Research (PAR) productions.

IML also borrowed extensively from other markup languages. It used a number of prescriptive

rendering tags from HTML, such as <BLOCKQUOTE>, six levels of headings (<H1> to <H6>),

and others. It originally used some Dublin Core markup for metadata, stored at the

top of the .txt files inside a <META> tag. Late-stage IML included a subset of tags

that were XML-compliant. They are easy to pick out of the list of IML tags because

the element names are lowercase or camelcase (such as <annotations>, <collations>,

<coll>). The apparatus files (annotations and collations) ultimately ended up as XML

files, although the editors generally prepared them in BBEdit or word-processing applications,

typing these XML-compliant IML tags into their file as they did for other components

of the edition. Around 2014, the project moved its metadata into stand-off XML files

and turned the Dublin Core tags into custom TEI-like IML-XML tags to capture metadata,

so that the files could be validated against a RELAX NG schema. Elements included

<iseHeader> (similar to TEIʼs <teiHeader>), <titles> (similar to TEIʼs <titleStmt>) and <resp> (an element similar to TEIʼs <respStmt> but named for TEIʼs @resp attribute. The metadata file also captured dimensions of workflow, with tags like

<editProgress> (with uncontrolled phrases like in progress

and edited

in the text node) and <peerReviewed> (with the controlled phrases false

or true

in the text node). While one could easily argue that moving to XML-conformant tags

was a good move, the addition of IML-XML tags to this mixed markup language removed

editors from their work in two ways:

-

Because editors continued to work mainly in the more SGML-like files, they also lost control over the XML-compliant metadata for their work. It had to be encoded and edited by the ISEʼs own team of RAs. Editors proofed the metadata only when it had been processed and appeared on the beta ISE site.

-

The files were shared with other projects (a dictionary project at Lancaster University, the Penguin Shakespeare project, and others) and are now circulating without attribution or credit to their individual editors in the files themselves.

3. Differences Between IML and TEI

In contrast to the hybridity of IML, TEI began life as an SGML language and transitioned seamlessly and completely to XML compliance.[13] But the main difference between IML and TEI is that IML was designed for a single project, whereas TEI is designed to be used in a range of disciplines that need to mark up texts or text-bearing objects. IML was designed and had value only for one project in one discipline that effectively dealt with one genre in just one of its material manifestations by one author: the early modern printed play by Shakespeare. Even accommodating Shakespeareʼs poems and sonnets, as well as plays by other early modern playwrights—for projects developed by Digital Renaissance Editions and by the Queenʼs Men Editions, which used the IML tagset and published on the ISE platform—was a bit of a stretch for the tagset. It never developed tags at all for manuscript plays (and Shakespeare did have a hand in one play that survives only in manuscript, The Book of Sir Thomas More). There were tagging efficiencies that arose from developing a tagset for such a specific purpose. TEI is not restricted to a single genre, historical period, language, or even field of inquiry; it aims to be open to and accommodating of the various needs of projects within both humanities (languages, literature, classics) and social sciences (linguistics and history). The very capaciousness of TEI can be a drawback in that projects need to constrain and customize the TEI tagset and develop their own project-specific taxonomies. The things one would like to capture about early modern playbooks, even when they persist across hundreds of playbooks, such as the pilcrows that gave way to indentation to mark the beginnings of speeches (Bourne 2020), may still seem idiosyncratic in the larger TEI community and do not merit a TEI element, although one could certainly submit a feature request.

An advantage of having a community of practice is that there are built-in checks that

prevent unsound scholarly practices. The TEI Guidelines evolve with the needs of the community: TEI users can submit feature requests to

the TEI Technical Council, whose members review the request and recommend implementation.

The community is also included in the conversation and people weigh in on TEIʼs Issues

GitHub channel. The TEI community includes many textual critics and editors, with

the consequence that the TEI Guidelines reflect informed best practice in textual studies. The ISE went rogue when it decided

to root primary documents (old-spelling and modern texts) on the <WORK> element. <WORK>

is a highly misleading root element. In textual studies, work

refers to the idea of a literary work that is represented and transmitted by a text or texts

(), text

to the words in a publication of that work, and document

or copy

to a particular material witness. Hamlet is the work—a Platonic ideal manifested in multiple different publications, editions,

adaptations, and performances—but there are three texts of Hamlet: the Q1 text from 1604, the Q2 text from 1605, and the Folio text of 1623. The idea

of transcribing and marking up a work is therefore nonsensical, like drawing design

schematics for Platoʼs concept of a chair. TEI documents, on the other hand, are rooted

on the <TEI> element, which makes a claim only about the markup language rather than the nature

of the file content. The text being encoded is wrapped in a <text> element,[14] which rightly makes a claim only about the nature of the document.

Although both IML and TEI-XML have elements, attributes, and values, there are significant specific differences worth discussing. The first difference between them is syntactical: because IML is specific to one genre, author, and historical moment, it can make first-order claims (via an element) that TEI tends to defer to third-order claims (via the value of the attribute on an element, as set out in a taxonomy). IML is cleaner for the editor in some ways. For example, IMLʼs elements to describe typography and page layout were simple and intuitive. The typeface was captured with simple <BLL> for blackletter or English type,[15] <I> for Italic, and <R> for Roman. Other elements designed to capture typesetting included <LS> (to indicate that letters were spaced out), <J> for justified type, <C> for centered type, and <RA> for right-aligned text. IML borrowed <SUP> and <SUB> from the HTML 3.2 convention. It used the empty <L/> element for blank lines. Because books themselves have overlapping features, none of these elements had to be well-formed in their usage. IML neatly sidestepped the perennial problem of overlapping semantic and compositorial hierarchies. With our move to TEI-XML, encoding these features of the early printed book became significantly more complicated. We have lost the simplicity of these elements, but gained both the ability to validate our encoding in a XML editor and the more powerful analytic capacities of TEI-XML. At the same time, we made a principled decision, in conjunction with the Coordinating Editors of the Digital Renaissance Editions and the New Internet Shakespeare Editions, to stop capturing some of these bibliographic codes. Any researcher seriously interested in typefaces and printing would not turn to a digital edition but would look at extant copies of physical books, we decided. In addition, LEMDO users have access to many digital surrogates of early printed books.[16] Given the shortness of time and the scarcity of funds, we could not replicate every claim made by the IML-encoded texts and decided to jettison features that were intended to facilitate research questions that our project was not best positioned to answer. On this point alone, LEMDO offers less than the original IML files.

Table 1 shows how we translated IMLʼs first-order elements into the values of attributes

Table 1. Where IML says everything between these tags is a scene,

TEI says everything between these tags is a literary division, specifically the particular

type of division that we call a scene.

Likewise, for forme works (the running titles, catchwords, page numbers, and signature

numbers that tend to stay in the forme when the rest of the type is removed to make

way for another set of pages) TEI has the higher-order element <fw> and uses the @type attribute to qualify the <fw> element. LEMDOʼs TEI-XML uses <hi> elements with @rendition attributes to describe the typographical features of a text in those cases where

we decided to retain the arguments made in IML. Overall, IML requires less of the

editor and more of the developer, who has to write scripts that connect <CW> (for

catchword, the word at the bottom of the page that anticipates the first word on the

next page) and <SIG> (for signature number, the number added to the forme by the printing

to help with the assemblage of folded sheets into ordered gatherings) for processing

purposes. TEI requires more of the editor and less of the developer; the trade-off

is that it captures more of the editorʼs implicit thinking about the text, and is

thus more truthful in its claims. In addition, editors and their RAs acquire transferable

skills in TEI, which is useful in a wide variety of contexts (including legislative

and parliamentary proceedings in many countries around the world).

Table 1

IML versus TEI Syntax

| Feature | IML | TEI-XML (LEMDO customization) |

|---|---|---|

| Scene 1 | <SCENE n="1">…</SCENE> | <div type="scene" n="1">…</div> |

| Act 1 | <ACT n="1">… </ACT> | <div type="act" n="1">…</div> |

| Catchword | <CW>catchword</CW> | <fw type="catch">catchword</fw> |

| Signature | <SIG>C2</SIG> | <fw type="sig">C2</fw> |

A second specific way in which IML differs from TEI is that IML relies on a certain

amount of typographical markup (quotation marks, square brackets; see Table 2) and prescriptive formatting that produces a certain screen output. Typographical

markup is ambiguous, however; its multiple potential significations require the user

to determine from context why something is in quotation marks or square brackets.

Square brackets are conventionally used in print editions to signify that material

has been supplied, omitted, or conjectured by the editor. LEMDOʼs TEI customization[17]

does not allow quotation marks or square brackets in the XML files.[18] Table 2 shows that LEMDO requires a <supplied> element around editorial interpolations.[19] The attributes @reason, @resp, and @source allow the markup to record the reason

for supplying material (such as gap-in-inking

), the editor who supplied it, and the authority or source for the emendation (which

may include conjecture

). Instead of wrapping text in quotation marks, LEMDO demands that the editor uses

markup to identify the text as material quoted from a source (<quote>), as a word used literally (<mentioned>), as an article title (<title level="a">), or as a word or phrase from which the speaker or editor wants to establish some

distance (<soCalled>). IML uses an <I> element to prescribe italic output. During the conversion process,

we transformed these <I> to <hi> elements. TEI permits one to identify the function of the italicized text, making

explicit the reason for which a string might be italicized and allows us to process

foreign words, titles, and terms in different ways if style guides change (see Table 3). Depending on how much time we have to put into the remediation, we will replace

the <hi> elements with the more precise tagging that we require of editors creating born-TEI

editions for the LEMDO platform. The <I> tag in IML is just one way in which IML editors

were invited to think more about screen output than about truthful characterization

of the text. Another example is the addition of <L> tags to create white space on

the screen in an effort to suggest something about the structure of the text, relying

on the readerʼs understanding that vertical white space signals a disruption, break,

or shift. In that sense, IML is more concerned with the form and aesthetics of the

text than it is with its semantics.

Table 2

Print Markup versus Semantic Markup

| Supplied material | […] | <supplied reason="…">…</supplied> |

| Quoted material | "…" | <quote>, <mentioned>, <title level="a">, <soCalled> |

Table 3

IML Prescriptive Markup versus TEI Descriptive Markup

| Italics | <I>…</I> | <foreign>, <title level="m">, <term>, <emph> |

A third specific difference lies in the way IML and TEI capture the mode of the language

(Table 4). IML uses unclosed, non-hierarchical <MODE> tags. They function as milestone elements,

signalling that prose (or verse or uncertain mode) start at the point of the tag and

the text remains in that mode until another <MODE> tag signals a change. In the verse

IML mode, editors add <L/> milestone tags to signal the beginning of a new verse line.

TEI has a radically different approach to lineation. It wraps paragraphs in the <p> element and lines in the <l> element (with the optional <lg> to group lines into groups like couplet, quatrain, sonnet, or stanza). For single-line

speeches (e.g., Yes, my lord

or When shall we three meet again?

), editors often did not add a <MODE t="uncertain"> milestone because the screen layout

of lines would not be affected if the prose or verse mode was still in effect. We

cannot determine if they wanted to say that these short lines were in the same mode

as the preceding longer lines, or if they merely omitted the <MODE t="uncertain">

tag because it did not affect the screen layout. In cases where editors did classify

the mode of lines as uncertain, we were able to convert their tagging to TEIʼs <ab>, which avoids the semantic baggage of <p> and <l>. In most cases, we have had to infer the <ab> ourselves from the text of the play and add it in the remediation process. Our current

system does not allow us to say nothing about the mode of the language. Where IML could omit tags, we have to choose between

<p>, <l>, and <ab>.

Table 4

Prose and Verse

| Mode | IML | TEI |

|---|---|---|

| Verse | <MODE t="verse"> <L/>First verse line, <L/>Second verse line. | <l>First verse line,</l>

<l>Second verse line.</l> |

| Prose | <MODE t="prose"> | <p>Words, words, words.</p> |

| Uncertain | <MODE t="uncertain"> | <ab>words, words, words</ab> (can also be used for prose that is not a semantic paragraph)

|

4. Description of the Conversion and Remediation Processes

The entire conversion and remediation cycle consists of two distinct phases: conversion and remediation. Conversion entails a series of programmatic transformations run iteratively by a developer skilled in XSLT but not necessarily knowledgeable about early modern drama. Extensive element-by-element remediation is then done by research assistants who have been trained in TEI and given some knowledge of early modern drama and editorial praxis.

Converting files from IML to TEI is a process that involves a series of Ant application

files and XSL transformation scenarios. Creating the transformation scenarios was

a laborious process that required careful research, design, testing, and implementation.

The first transformations were written in 2015 by Martin Holmes (Humanities Computing

and Media Centre, University of Victoria) as a trial balloon piloted by a small working

group called emODDern.

Although we did not then know that the ISE software would eventually fail, we were

all ISE editors and experienced users of both IML and TEI who wanted to experiment

with re-encoding our own in-progress editions in TEI. We wondered how much of our

own IML tagging could be converted to TEI programmatically. Our intention was to create

a TEI ODD file specifically for early modern drama, a goal that has come to fruition

in LEMDOʼs ODD file.[20] The conversions were rewritten as Ant files in 2018 by Joey Takeda (a grant-funded

developer who has since moved to Simon Fraser University) as soon as we learned in

July that planned institutional server upgrades would cause the ISE software to fail

in December. They have been revised again by Tracey El Hajj, who completed the conversion

of all the IML files that had been ingested into the ISE system.

The conversions are as follows:

-

Build a TEI-XML apparatus file (collations or annotations) from IML-XML. We use TEIʼs double end-point attachment method (TEI Guidelines12.2.2) with anchors on either side of the lemma in the main text and pointers in stand-off apparatus files. The ISE relied on a fragile dynamic string matching system, whereas we use string matching only for the conversion process to help us place the anchors in the right places.

-

Add links to facsimiles. We use

<pb>with an@facsattribute to pull facsimiles into the text. The ISE used a stand-off linking system that pulled text into the facsmiles, which did have the advantage that one could link to a corrected diplomatic transcription from multiple facsimiles although it sometimes produced strange results if the transcription did not match the uncorrected sheets in a particular facsimile. LEMDO does copy-text transcriptions of a single facsimile of a single copy.[21] -

Convert SGML-like IML files (.txt files of old-spelling and modern texts) to TEI-XML.

-

Convert XWiki markdown to TEI markup (a conversion that we have now retired because no one have been able create files in XWiki markdown since the ISE software failed).

We continue to tweak all but the last of these conversion scenarios as new IML files come in. We offered editors on the three legacy projects (ISE, DRE, and QME) three options: finish your work in IML for one-time conversion when you are done (and pay us or someone else to enter your final copyedits and proofing corrections), submit your incomplete IML for conversion and then continue to work in TEI, or learn TEI and begin again. Because some chose the first option, we have a steady trickle of new IML-encoded files coming in, none of which were corrected for processing by the ISE software, which means that we have to do quite a bit of preliminary remediation to produce the valid IML that our conversions expect, including reversing the proleptic use of TEI tags by editors who know a little bit of TEI and want to help us. Idiosyncratic editorial markup habits that we have not yet seen can often be accommodated by a tweak to the conversion process. More often, we write a new regular expression and add it to our library of regular expressions to run at various points in the conversion process.

The Ant conversions are built into our XML project file (.xpr) so that we can run them within Oxygen. Oxygen makes it possible for LEMDO team members who are not advanced programmers to run the conversions. Any of the junior developers can handle this work and some of the humanities RAs can be trained to do it. To convert an IML file, the conversion editor copies the text files from the ISE repository into the relevant directory in the LEMDO repository (an independent Subversion repository that has no relationship to the old ISEʼs Subversion repository, other than the fact that both are hosted at UVic). Each conversion has its own directory so that the conversion and product thereof are contained temporarily in the same directory and relative output file paths are easily editable by the less skilled members of the team.

The first step is to create valid IML. The ISE had a browser-based IML Validator

, written in JAVA by Michael Joyce. The IML Validator was a complex series of diagnostics

rather than formal validation against a schema. With Joyceʼs permission, Joey Takeda

repurposed the code and turned it into a playable tool within Oxygen. It cannot correct

the IML, but it does generate a report on the errors, which we can then correct manually

in the IML file. We run the IML Validator again after correcting the errors, each

time correcting newly revealed errors.[22]

Once we have what we think is valid IML (and it never is fully valid), we convert

IML tags to crude XML tags. This first pass usually reveals errors that the IML Validator

cannot, and we iteratively correct the IML (parsing the error messages from the failed

conversion) and run the transformation scenario until it produces an output file.

We then process these crude tags into LEMDO-compliant TEI tags. Finally, we validate

the output against the LEMDO schema and make any further corrections. Because we know

that we cannot produce fully valid LEMDO TEI by the conversion process, we have a

preliminary file status (@status="IML-TEI") that switches off certain rules in the schema, such as the rules against square

brackets or against elements like <hi> that we need to use in this interim phase of the full conversion-remediation process.

Once the remediators begin their work, they change the file status to @status="IML-TEI_INP" to access the full schema and to use the error messages as a guide to their work.

The process looks different with the various types of files. With some files, it is more efficient to validate the IML before proceeding with the first attempt at conversion; with others, it is easier to work on the conversion and adjust the IML by following the error messages provided in the various phases of the TEI outputs. LEMDO will publish the technical details of this process on its forthcoming website at the following links:

The TEI validation, which is the last step before committing the file to the LEMDO

repository, is recorded in a <change> element in the <revisionDesc>. Once the TEI file is valid against our schema, the conversion editor can then add

the file to the play-specific portfolio (i.e., directory) in the repository. The conversion

editors run a local build that replicates the LEMDO site build process (i.e., runs

the XML file through the processing pipeline that converts the file to an HTML page)

before committing, because there are some issues that might appear only at the HTML

level, as can beset TEI projects that publish HTML pages.

Then the encoder-remediators and remediating editors begin their work. This stage is when the arguments of the editors are captured as fully as possible in TEI. This complex process rapidly shades into editorial labor as students surmise why the original editor wrapped text in quotation marks or square brackets or used <I> elements (Table 2 and Table 3). Remediation is overseen by the senior scholar on the project (Jenstad) and the output checked by leads of the anthology projects as well as individual editors if they are available. The anthology leads will be able to take some responsibility for the remediation process as soon as we have refined and fully documented the remediation processes.[23] Two editors have already hired their own RAs to undertake the remediations under their and my joint supervision; their RAs are remote guest members of the UVic team and have a cohort of virtual peers in the local LEMDO team. LEMDO has its own team of General Textual Editors[24] to aid the project directors[25] in overseeing student work and particularly in helping out if an editor has passed away.

Thus far we have converted all of the files that were originally published on the old ISE platform. All the old-spelling texts, modern texts, collations, annotations, critical paratexts, supplementary materials, bibliographies, character lists, and all associated metadata files for all the editions in the legacy DRE, ISE, and QME anthologies have been converted from the ISE’s boutique markup language into industry-standard TEI-XML. We converted about 1200 IML files to about 1000 XML files. The difference arises from the ingestion of metadata into the headers of files where it should have been all along. We have fully remediated only six editions, with six additional editions underway right now. We continue to look for efficiencies in the process but expect to make some hard decisions about which texts will undergo full remediation. In some cases, such as the very early editions, it may make more intellectual and editorial sense to commission a new born-LEMDO edition from a new editor who is willing to work in TEI.

5. Challenges in the IML-TEI Conversion

As anyone who has translated between any two spoken languages can attest, translation is an art, not a mechanical process. The validation process itself is tedious, and the converter has to go back and forth between the IML and the TEI outputs, making corrections and adjustments to the IML to facilitate a successful conversion to TEI. This work cannot be recognized and credited with the TEI mechanisms that are available to us. The conversion editorʼs process and most of their labor happens before the TEI output is achieved. The conversion editor needs to adjust the IML (and delete the stray TEI tags that editors have added in hopes of being helpful) so that it matches the input our conversion processes are expecting. This process requires iterative and repetitive changes that often apply to the entirety of the IML document. From fixing improperly closed tags, to moving elements around, to adjusting typos and case errors in the element names, these changes have to be done manually, and addressed one by one. Find-and-replace processes or regular expression searches have not been effective in most cases because these errors tend not to follow into patterns. While using the IML Validator is sometimes more efficient, there are some errors that are valid in IML but still interrupt the conversion. In that case, building the file and evaluating errors is the more efficient approach. The back and forth between fixing and attempting to build is time consuming; some conversions take an hour but others can take a full day, depending on the length of the file and the variety of errors therein. However, the more IML files the converter works on, the more familiar they become with the errors, and the more efficient they become in the conversion process.

The examples in Table 1 are easy to convert; although the languages express the concepts differently, there is a 1:1 correlation between IML and TEI in those cases. The <MODE> tags (Table 4) are converted to <l>, <p>, and <ab> tags easily if the <MODE> tags have been correctly deployed in IML; the conversion stops if the editor has added <L> tags in a passage that is not preceded by a <MODE t="verse"> tag or if a passage governed by a <MODE t="verse"> does not have the expected <L> tags.

Our conversion code assumes that the IML tagging was either right or wrong. If it is right (i.e., matches what our conversion processes expect), we convert it. If it is wrong, we correct it and then convert it. But there are other factors to consider.

-

Editors encoded for output rather than input.

-

Sometimes editors confused wrappers and empty or non-closing elements.

-

Sometimes editors used markup in playful and ingenious ways.

The combination of descriptive and prescriptive markup in IML encouraged editors to think about the ultimate screen interface instead of truthful description of the text. For example, the marking of new verse lines with the <L/> tag is causing ongoing trouble for our remediators because ISE editors added extra unnumbered <L/> elements to force the processor to add non-semantic white space; we cannot always determine if the editor forgot to add a number value, forgot the text that was supposed to appear on that line, or if the empty <L/> element is a hacky attempt to control the way the edition looks on the screen.

The <MODE> tag offers examples of the last two factors. Editors tended to use the

<MODE> tag in inconsistent ways: sometimes it was used as a wrapper around passages

of verse or prose; other times it was used as a non-closing element, as if the editor

was saying this is verse until I tell you that itʼs something else.

The ISE toolchain seems to have accommodated both editorial styles (wrappers or unclosed

elements), perhaps because editors used the <MODE> tags in these different ways. Whatever the reason, allowing

it meant that editors could interpret the function of <MODE> in these different ways.

Utterances of less than one line invited what could be editorial lassitude or could be editorial playfulness; the challenge for us as remediators is that we cannot tell the difference between lassitude that needs to be corrected and playfulness that needs to be honored. Early modern verse is highly patterned (ten syllables for predominantly iambic pentameter verse and eight for trochaic tetrameter, just to name the two most common verse patterns). A line of fewer than ten syllables does not present much data for the editor to parse, and often the mode is genuinely unclear. For text whose mode is unclear, IML provided a value: <MODE="uncertain">. But because the ultimate single-line output is entirely unaffected on the screen (itʼs not long enough to wrap even if the mode were prose, except on the smallest mobile devices perhaps), some editors encoding with an eye on the screen interface did not bother changing the mode to uncertain.

ISE editors used IML creatively to make editorial arguments. Again, the <MODE> element offers a compelling example of editorial logic that we cannot easily convert with our processes. In the following example, the editor was trying to use the IML markup as they understood it to say that the scene was in prose with some irruptions of verse, using the <MODE> tag as a wrapper with a child wrapper <MODE> tag:

<MODE t="prose">Words, words, words in prose. <MODE t="verse">A few words in verse.</MODE> <MODE t="prose">More words in prose. </MODE>

<MODE t="prose">Words, words, words in prose.</MODE> <MODE t="verse">A few words in verse.</MODE> <MODE t="prose">More words in prose.</MODE>

<p>Words, words, words in prose.</p><l>A line of verse.</l><p>More words in prose.</p>

The conversion editor may come to know the particular argumentative and markup habits of an individual play editor and may be able to make a good guess about the editorʼs intentions, but some editors (particularly those who, we suspect, worked on their editions sporadically and at long intervals) were highly variable in their deployment of the markup and had no discernable habits. Consistently wrong encoding is easier to correct than inconsistently right encoding. It also became clear to us that many editors did not understand the basic principles of markup and that they were confused by the coexistence of empty elements (the self-closing IML <L> element, for example) with wrapping elements (the <ACT> element, for example). Why did they need to mark the end of an act when they did not need to mark the end of a verse line? Jenstad knows from having worked with many ISE editors that they often thought of opening tags as rhetorical introductions. To explain the concept of opening and closing tags, she sometimes pointed out that an essay needs a conclusion as well as an introduction. Some ISE editors seem to have assumed that the tags were meant for human readers rather than processors; the human reader does not need to be told where an act ends because the beginning of a new act makes it obvious that the previous act is finished. Indeed, it is hard to fault the ISE editorʼs logic in this case, given that SGMLʼs OMITTAG—permitting the omission of some start or end tags—is based on precisely the same logic, namely inference of elements from the presence of other elements.

6. Editorial Consequences

While there are huge advantages to adopting a standard used by thousands of projects

around the world, the shift to TEI has editorial consequences. Encoding is a form

of highly granular editing that captures the editorʼs convictions about the structure

of the text, the mode of the language, who says what to whom, to what a particular

entity refers, and so on. The encoding language itself makes arguments about what

matters in a text, and that language can evolve over time as scholarly interests change.[26] If we accept that encoding is a form of micro-editing, then re-encoding the text

via conversion and remediation inevitably strays into editorial terrain. Because of

the technological complexity of the conversion, we have people not trained as textual

scholars or even as Shakespeareans essentially editing the text by fixing the markup

in order to run the conversions through the classic three-step pipeline: validate, transform, validate

(Cowan 2013). We use the terms conversion editor

and remediation editor

in conscious acknowledgement that these LEMDO team members are engaging, of necessity,

in editorial work, but we are also aware that most team members are not, in fact,

trained editors.

Because the languages are structured differently, IML and TEI markup facilitate different

arguments about the text. Every tag makes a micro claim about the text, but if TEI

does not accommodate a claim by the editor in IML, or if TEI permits new arguments,

we find ourselves starting to make new micro claims at the level of word, line, and

speech. We make corrections to the original encoding in order to make the conversions

work. At this point, the developer (until recently El Hajj) begins to examine the

text in order to correct the IML so that the conversion can proceed. Without being

an editor herself—for it is rare to have mastered XSLT and have editorial training—she

completes and corrects the IML tagging of the original editor. The remediation editors

check citations because the LEMDO requires various canonical numbers, shelfmarks,

control numbers, and digital object identifiers for linked data purposes (captured

in <idno>). We often correct erroneous information in the process, thus starting to function

as copyeditors. We agonize over relineating as we change the IMLʼs <MODE> tags to TEIʼs <l> and <p> tags and nail down what was left intentionally or accidentally ambiguous in IML.

We second-guess what an editor meant by ambiguous typographical markup, such as the

quotation marks that we intially convert to <q> but want to remediate to the more precise TEI markup enabled by the LEMDO schema

(and required of editors new to LEMDO): should it be <quote>, <soCalled>, <term>, or <mentioned>, or should we leave it as <q>? It is a challenge to infer what the editor might have claimed had they had a more

complex markup system at their disposal; a LEMDO text encoded in TEI is a more useful

edition, but there are risks in moving from a simpler argument to a more complex argument,

most notably the risk that our more precise and granular tagging may not be what the

editor would have done. Translation of encoding from IML to TEI necessarily changes

the claims the editor is making. To borrow a mathematical metaphor, if the editor

were trying to express a number, they might have wanted to say 1.7

but could only use whole numbers and therefore rounded up to 2

; if the LEMDO team cannot consult the original editor (and some of those editors

are now beyond the reach of email[27]) and substitutes the more precise 2.2,

we have compounded the original inaccuracy and further misrepresented the editorʼs

thinking. With its lack of precision, IML could be ambiguous; if an utterance was

less than one line long the editor did not have to decide if it were prose or verse,

and sometimes the ambiguity of the IML helpfully reflectly the ambiguity of the mode

of the language. The more precise TEI generated by our conversions and remediations

has different encoding protocols for prose and verse, so we have to make a judgement

call that the original editor did not have to make. The editor may not approve of

or welcome this kind of up-translation,

as the 2017 Balisage conference theme put it (see Beshero-Bondar 2017 especially).

7. Recommendations for Late-Stage Conversion of Boutique Markup to XML

These conversions and remediations have taken nearly three years of effort from a

team consisting of three to five part-time team members. While we also have other

LEMDO-related work beyond conversion and remediation, this effort is still a considerable

and costly percentage of our time. Because many of our legacy editors

continue to work on their editions in .txt files and to type IML tags into those

.txt files, our work—with all of its frustrations, surprises, and challenges—will

continue for some years to come, even as we train up a new generation of editors in

TEI. Given our experience, we offer the following recommendations to other teams who

may be trying to save projects encoded in boutique markup languages.

-

Preserve the old files if you can. If we have misinterpreted the editorʼs intentions, we can consult the original .txt files encoded in IML and the .xml metadata, annotations, and collations files. In addition, as one of the reviewers of this paper helpfully pointed out, versions of markup are like digital palimpsests and are themselves worthy of study. Recognizing their historical value (to digital humanists) and editorial value (to future interpreters of text), we have preserved the IML files in multiple copies. They are still under version control in the old ISE repository, which, unlike the software, has not failed. We have also batch-downloaded the files to several different storage spaces so that we have lots of copies keeping stuff safe (the LOCKSS principle).

-

Maintain the custom values of the original project as much as possible. The taxonomy of values is where a project makes its arguments about what matters to the projectʼs research questions. For us, it was important to keep the original taxonomy of types of stage directions, for example:

entrance | exit | setting | sound | delivery | whoto | action | other | optional | uncertain

are values that made sense for early modern drama. -

Freeze production of content in the old system while developing the conversions and converting the legacy files. We began our trial conversions in 2015 without freezing content because we did not then have administrative control over the ISE as a project and UVic did not yet own the project assets. In our case, the freeze was abruptly imposed on editors in late 2018 by the failure of the ISE server. We could no longer publish any new content using the old processes. We asked continuing editors to pause their work for two years while we built a new platform for editing and encoding early modern plays. Some editors ceased their work and have waited for us to complete our work on the LEMDO platform. Others have continued to work in IML in .txt and .doc or .docx files. Even after converting all the files that were in the old ISE repository, we are still receiving a steady trickle of IML files that need to be converted and remediated for LEMDO. Our solution has been to offer a one-time conversion to the editors working on the legacy projects; once we have converted their IML, they must make all further changes to their work in the TEI files in the LEMDO repository. This boundary has meant that some editors will never learn TEI, preferring to do all of their work in IML and submit their final files to us. There are also historical contractual conditions that mean we cannot require any legacy editor to learn TEI.

-

Follow conversion with remediation. If markup were a mechanical rather than an editorial process, we would not need remediators. However, we have shown in this paper that even programmatic conversion is not a mechanical process and requires iterative tidying, converting, and validating. The remediators continue the work of the conversion editors by retracing the original editorʼs processes. Not only does remediation require aligning a file with a new schema, the remediators in our case also have to respect LEMDO and new ISE project requirements that lie beyond validation.

-

Work with a team of people. In our case, the team consisted of developers who wrote the conversions, junior developers with technical expertise to run the conversions, student research assistants to do the bulk of the remediation, an expert in early modern drama and TEI to oversee the students, and a trio of General Textual Editors help us make sound scholarly decisions when the remediation pathway was not clear. If the editor was alive and willing to help, the original editor became part of the team. In addition, anthology leads can supplement or stand in for the editorʼs voice.

-

Document conversion and remediation processes in both technical terms and non-technical terms. Future developers need technical documentation to replicate and improve the processes. Editors and project users need non-technical documentation to understand how the texts have changed.

-

Part of the documentation should be a data crosswalk. Our data crosswalk takes the form of four-column tables, in which we list the IML tag or ISE typographical markup (such as quotation marks or italics) in the first column, the TEI element in the second, the TEI attributes and values in the third, and notes in the fourth. The table is tidiest where we have been able to make a simple substitution of an IML element for a TEI element. But the messiness of other parts of the table offer a visualization of the increased affordances of TEI. And the blank cells in our table tellingly demonstrate where IML tagging has no equivalent in TEI. We consciously chose not to use IML tags anywhere else in our documentation, and we located this data crosswalk in an appendix at the very end of our documentation, on the grounds that we did not want to introduce any IML tagging into the consciousness of editors learning TEI.[28]

-

Preserve any comments left in the original files, if there are any, converting them to XML comments if they are not already in that form. These comments help us reconstruct metadata, understand the editorʼs intentions, and make us aware of the arguments made by the editors that are not captured in their IML tagging.

-

Provide a mechanism for encoders, conversion editors, and remediators to leave notes about the converted XML. We left XML comments in the new files to explain any challenges in the conversion, any decisions made by the conversion editor, and any outstanding questions. The conversion editor leaves XML comments for the remediating editors to take up. And the remediating editors likewise need to leave comments describing decisions they made and flagging lingering questions. Some of these comments can be deleted when the issues they flag have been resolved. Others may well need to remain in the file indefinitely as part of the complete history of the edition. We also keep records in our project management software about challenges we face and the decisions we make.

-

Finally, do not use valuable intellectual labor merely to maintain a legacy project. Make sure the work is designed to improve the project and facilitate long-term preservation. A remediated legacy project should not be another fragile, time-limited, boutique project that will require further remediation in the future. LEMDO remediations are

Endings-compliant

; that is, our remediated products are static, flat HTML pages with no server side dependencies, no reliance on external libraries (such as JQuery), and no need for a live internet connection (except to access links to resources outside the project). These static pages can be archived at any point and will continue to be available as webpages as long as the founding technologies of the web—HTML and CSS—are processable by machines. We are hedging our bets by archiving various flavors of our XML as well; these files offer another type of accessibility, can be shared, and potentially even reused in new ways.

8. Conclusion

We did not create the ISEʼs toolchains and never used them ourselves, but we know that they were extraordinarily complex in order to process all of these various types of markup in various, overlapping contexts. In the early days of LEMDO (2015), we had hoped to convert the IML tagging to TEI, revise the processing, and continue using the ISEʼs Subversion repository, server, software, and web interface. In 2017, we even paid the former ISE developer to document the ISE software. As things have fallen out, it is likely a blessing in disguise that the software failed when the server was upgraded because we were then completely liberated from every aspect of a boutique project. The developers in the Humanities Computing and Media Centre (Martin Holmes, principally) bought us some time by staticizing the final output of the ISE server before it went dark. Holmes saved 1.43 million HTML files through his staticization process and created a site that looks very much like the old ISE site, posting it to the same URL (https://internetshakespeare.uvic.ca). It is such a good capture of the final server output than most users have no idea the site is not dynamic, although it will gradually degrade over time. We cannot add new material, however. Making minor corrections to existing material is laborious and the dynamic functionalities no longer work, but the static site preserves an image of what was. The new LEMDO platform is now fully built with processing and handling in place for all of our markup, our development server is functional, and our first release of content will happen in Summer 2021. We are gradually introducing editors and their research assistants to LEMDOʼs TEI-XML customization and teaching them to access our centralized repository, edit their work in Oxygen XML editor, and use SVN commands in the terminal to commit their work. The work of conversion and remediation continues, however, with the ongoing challenges and perplexities that we have described, as long as we have legacy editors encoding in IML and sending us their files.

References

[Bauman et al 2004] Bauman, Syd, Alejandro Bia, Lou Burnard, Tomaž Erjavec, Christine Ruotolo, and Susan

Schreibman. Migrating Language Resources from SGML to XML: The Text Encoding Initiative Recommendations.

Proceedings of the Fourth International Conference on Language Resources and Evaluation, 2004. 139–142. http://www.lrec-conf.org/proceedings/lrec2004/pdf/504.pdf

[Beshero-Bondar 2017] Beshero-Bondar, Elisa Eileen. Rebuilding a Digital Frankenstein by 2018: Reflections toward a Theory of Losses and

Gains in Up-Translation.

Presented at Up-Translation and Up-Transformation: Tasks, Challenges, and Solutions,

Washington, DC, July 31, 2017. In Proceedings of Up-Translation and Up-Transformation: Tasks, Challenges, and Solutions. Balisage Series on Markup Technologies, vol. 20 (2017). doi:https://doi.org/10.4242/BalisageVol20.Beshero-Bondar01. https://www.balisage.net/Proceedings/vol20/html/Beshero-Bondar01/BalisageVol20-Beshero-Bondar01.html.

[Bourne 2020] Bourne, Claire M.L. Typographies of Performance in Early Modern England. Oxford University Press, 2020.

[Cowan 2013] Cowan, John. Transforming schemas: Architectural Forms for the 21st Century.

Presented at Balisage: The Markup Conference 2013, Montréal, Canada, August 6–9,

2013. In Proceedings of Balisage: The Markup Conference 2013. Balisage Series on Markup Technologies, vol. 10 (2013). doi:https://doi.org/10.4242/BalisageVol10.Cowan01. https://www.balisage.net/Proceedings/vol10/html/Cowan01/BalisageVol10-Cowan01.html.

[Dessen and Thomson 1999] Dessen, Alan C., and Leslie Thomson. A Dictionary of Stage Directions in English Drama 1580–1642. Cambridge University Press, 1999.

[Galey 2015] Galey, Alan. Encoding as Editing as Reading.

Shakespeare and Textual Studies. Ed. Margaret Jane Kidnie and Sonia Massai. Cambridge University Press, 2015. 196-211.

[Holmes and Takeda 2019] Holmes, Martin, and Joey Takeda. Beyond Validation: Using Programmed Diagnostics to Learn About, Monitor, and Successfully

Complete your DH Project.

Digital Scholarship in the Humanities 34.1 (2019). doi:https://doi.org/10.1093/llc/fqz011. https://academic.oup.com/dsh/article/34/Supplement_1/i100/5381103.

[Lancashire 1995] Lancashire, Ian. Early Books, RET Encoding Guidelines, and the Trouble with SGML.

1995. https://people.ucalgary.ca/~scriptor/papers/lanc.html.

[Pichler and Bruvik 2014] Pichler, Alois, and Tone Merete Bruvik. Digital Critical Editing: Separating Encoding from Presentation.

Digital Critical Editions. Ed Daniel Apollon, Claire Bélisle, and Philippe Régnier. University of Illinois

Press, 2014. 179–199.

[TEI Guidelines] TEI Consortium, eds. TEI P5: Guidelines for Electronic Text Encoding and Interchange. Version 4.2.2. 2021-04-09. TEI Consortium. http://www.tei-c.org/Guidelines/P5/.

[Walsh 2016] Walsh, Norman. Marking up and marking down.

Presented at Balisage: The Markup Conference 2016, Washington, DC, August 2–5, 2016.

In Proceedings of Balisage: The Markup Conference 2016. Balisage Series on Markup Technologies, vol. 17 (2016). doi:https://doi.org/10.4242/BalisageVol17.Walsh01. https://www.balisage.net/Proceedings/vol17/html/Walsh01/BalisageVol17-Walsh01.html

[Williams and Abbott 2009] Williams, William Proctor, and Craig S. Abbott. An Introduction to Bibliographical and Textual Studies. 4th edition. Modern Language Association, 2009.

[1] Jenstad contributed 60% and El Hajj contributed 40% to the work of this paper.

[2] The specification for RET is set out in Lancashire 1995.

[3] The contract programmers—UVic undergraduate and graduate computer science

students—called it SGMLvish,

nodding to Tolkienʼs constructed

language.

[4] The Endings Project is a SSHRC-funded collaboration between three developers (including Holmes), three humanities scholars (including Jenstad), and three librarians at the University of Victoria. The teamʼs goal is to create tools, pipelines, and best practices for creating digital projects that are from their inception ready for long-term deposit in a library. The Endings Project recommends the creation of static sites entirely output in HTML and CSS (regardless of the production mechanisms). See The Endings Projectʼs website (https://endings.uvic.ca/) for more information.

[5] The Static Search

function developed by Martin Holmes and Joey Takeda gives such sites dynamic search

capabilities without any server-side dependencies. The Static Search

codebase is in The Endings Projectʼs GitHub repository

(https://github.com/projectEndings/staticSearch).

[6] UVic no longer runs analytics on the staticized ISE site, but social media posts and anecdotal reports suggested that many Shakespeare instructors rely on the ISE and were recommending it to other teachers and professors during the early and sudden pivot to online teaching and learning.

[7] The 38 canonical plays, Edward III, the narrative poems, and the Sonnets.

[8] LEMDO hosts three legacy anthologies: in addition to the ISE, the Queenʼs Men Editions (QME) and the Digital Renaissance Editions (DRE) projects also used the ISE platform and lost their publishing home when the ISE software failed. All three projects have editors still preparing files in IML. The MoEML Mayoral Shows (MoMS) anthology has only ever used LEMDOʼs TEI customization, as will a new project to edit the plays of John Day.

[9] The critical paratexts and site pages were eventually migrated to an XWiki platform that used XWikiʼs markdown-like custom syntax. We had to convert these files also (using different XSLT conversion processes than the ones we describe in this paper), but we are not discussing them here. XWiki syntax was not IML, but rather a tagging syntax that some editors learned in addition to IML. Those few editors who used XWiki generally used the WYSIWYG interface and treated it as a word processor.

[10] This short-lived series (1994–1998) produced five editions and a dictionary. The editions are still viewable at https://onesearch.library.utoronto.ca/sites/default/files/ret/ret.html. Lancashire developed the dictionary into the much better known and widely used Lexicons of Early Modern English (LEME) project (accessible at https://leme.library.utoronto.ca/).

[11] It is not possible to reconstruct a full history of the language because early files were not under version control and the final form of the documentation was, by its own admission, out of step with actual practice. The last list of IML tags is still accessible on the staticized version of the ISE serverʼs final output at https://internetshakespeare.uvic.ca/Foyer/guidelines/appendixTags/index.html.

[12] To further complicate matters, the ISE had various PostgreSQL databases for user data

and theatrical production metadata, plus repositories of images and digital objects

to which editions could link via what it called the ilink

protocol, which was basically a symlink.

[13] The history of the TEI is described on the TEI-Cʼs website: https://tei-c.org/about/history/#TEI-TEI

[14] LEMDO has chosen not to use the <sourceDoc> content model, on the grounds that our OS texts are semi-diplomatic transcriptions

to which we apply a generic playbook styling. We can override the generic playbook

styling by using document-level CSS or inline CSS, but editors are encouraged to remember

that we also offer digital surrogates of the playbooks.

[15] Forthcoming scholarship by Lori Humphrey Newcomb shows that blackletter

is an anachronistic bibliographical term for a type known universally by early modern

printers as English type.

Had we not retired this tag, we would certainly have had to rename it.

[16] The Shakespeare Census Project gives links to high-resolution images provided by libraries around the world. See https://shakespearecensus.org/.

[17] LEMDOʼs current RELAX NG schema can be found at this address: https://jenkins.hcmc.uvic.ca/job/LEMDO/lastSuccessfulBuild/artifact/products/lemdo-dev/site/xml/sch/lemdo.rng. This link always points to the latest schema, which allows our editors working remotely to have access to the latest rules even if they are not working directly in our Oxygen Project. We also have an extensive diagnostics system (see Holmes and Takeda 2019).

[18] Pichler and Bruvik 2014 articulate the necessity of separating the processes of markup and rendering.

[19] LEMDO takes its editorial direction from DRE, which sets the bar for recording editorial

interventions and conjectures. For LEMDO and DRE, record

means tag in such a way that the intervention can be reversed.

Silently emend

means change but do not tag.

[20] The most active members of the working group were Jennifer Drouin (McGill University), Diane Jakacki (Bucknell University), and Jenstad.

[21] Each copy of an early modern publication is unique because of stop-press corrections and variations in the handpress printing process.

[22] See Joyceʼs code at https://github.com/ubermichael).

[23] LEMDOʼs documentation, currently viewable on a development site (URL available by request), will be published at https:lemdo.uvic.ca/documentation_index.html. The final chapter is entirely about remediation: https://lemdo.uvic.ca/job/learn_remediations.html.

[24] Brett Greatley-Hirsch, James Mardock, and Sarah Neville (who, with Jenstad, are also the Coordinating Editors of DRE).

[25] Janelle Jenstad is the Director of LEMDO and PI on the current SSHRC funding. Mark Kaethler is the Assistant Project Director

[26] In the small but rich body of literature on encoding as editorial praxis, Alan Galey

presents the best argument for how digital text encoding, like the more traditional activities of textual criticism and

editing, leads back to granular engagements with the texts that resist, challenge,

and instruct us

(Galey 2015).

[27] To mangle a passage from Andrew Marvell, The graveʼs a fine and private place,

beyond the email interface.

[28] Our IML-to-TEI crosswalk, the IML Conversion Table

will be published at https://lemdo.uvic.ca/learn_IML-TEI_table