From 10,000 ft

This paper is about converting huge volumes of Rich Text Format (RTF) legal commentary to XML. For those of you in the know, this is one of the most painful things an XML geek will ever experience; it is always about infinite pain and constant regret. RTF is by many seen as a bug, and for good reason.

On the other hand, the project had its upsides. It is sometimes immensely satisfying to run a conversion pipeline of several dozens of steps over 104 RTF titles comprising tens of megabytes each, knowing the process will take hours—sometimes days—and yet end up in valid and well-structured XML. IF that happens.

The Sources

The sources are legal documents, so-called commentary. Much of this text concerns the standard text for legal commentary in England, Halsbury's Laws of England (see id-halsbury), published by LexisNexis, but some of the discussion also includes its sister publication for Scottish lawyers, Stair Memorial Encyclopaedia, also known simply as STAIR.

Halsbury consists of 104 titles

, each divided

into volumes

that in turn consist of several physical files. A

listing of the files in a single title might look like this:

-rw-r--r-- 1 arino 197609 975K Sep 2 2016 09_Children_01(1-92).rtf -rw-r--r-- 1 arino 197609 1.2M Sep 2 2016 09_Children_02(93-168).rtf -rw-r--r-- 1 arino 197609 920K Sep 2 2016 09_Children_03(169-212).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 2 2016 09_Children_04(213-263).rtf -rw-r--r-- 1 arino 197609 985K Sep 9 2016 09_Children_05(264-299).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 9 2016 09_Children_06(300-351).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 2 2016 09_Children_07(352-412).rtf -rw-r--r-- 1 arino 197609 982K Sep 2 2016 09_Children_08(413-464).rtf -rw-r--r-- 1 arino 197609 1.3M Sep 2 2016 09_Children_09(465-520).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 2 2016 09_Children_10(521-571).rtf -rw-r--r-- 1 arino 197609 1.5M Sep 9 2016 09_Children_11(572-634).rtf -rw-r--r-- 1 arino 197609 1.5M Sep 2 2016 09_Children_12(635-704).rtf -rw-r--r-- 1 arino 197609 1015K Sep 2 2016 10_Children_01(705-760).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 2 2016 10_Children_02(761-806).rtf -rw-r--r-- 1 arino 197609 1012K Sep 2 2016 10_Children_03(807-857).rtf -rw-r--r-- 1 arino 197609 1.2M Sep 2 2016 10_Children_04(858-924).rtf -rw-r--r-- 1 arino 197609 953K Sep 2 2016 10_Children_05(925-973).rtf -rw-r--r-- 1 arino 197609 1.5M Sep 2 2016 10_Children_06(974-1043).rtf -rw-r--r-- 1 arino 197609 813K Sep 2 2016 10_Children_07(1044-1089).rtf -rw-r--r-- 1 arino 197609 1.2M Sep 2 2016 10_Children_08(1090-1149).rtf -rw-r--r-- 1 arino 197609 1.1M Sep 2 2016 10_Children_09(1150-1207).rtf -rw-r--r-- 1 arino 197609 1.2M Sep 2 2016 10_Children_10(1208-1243).rtf -rw-r--r-- 1 arino 197609 1.6M Sep 2 2016 10_Children_11(1244-1336).rtf

Here, the initial number is the volume. It is followed by the name of the title, an ordinal number for the physical file, and finally the range of volume paragraphs contained within that particular part. Yes, the filenames follow a very specific format, necessary to keep the titles apart and enable merging together the RTF files when publishing them on paper or online in multiple systems.

Each title covers what is known as a practice area

, divided into

volume paragraphs

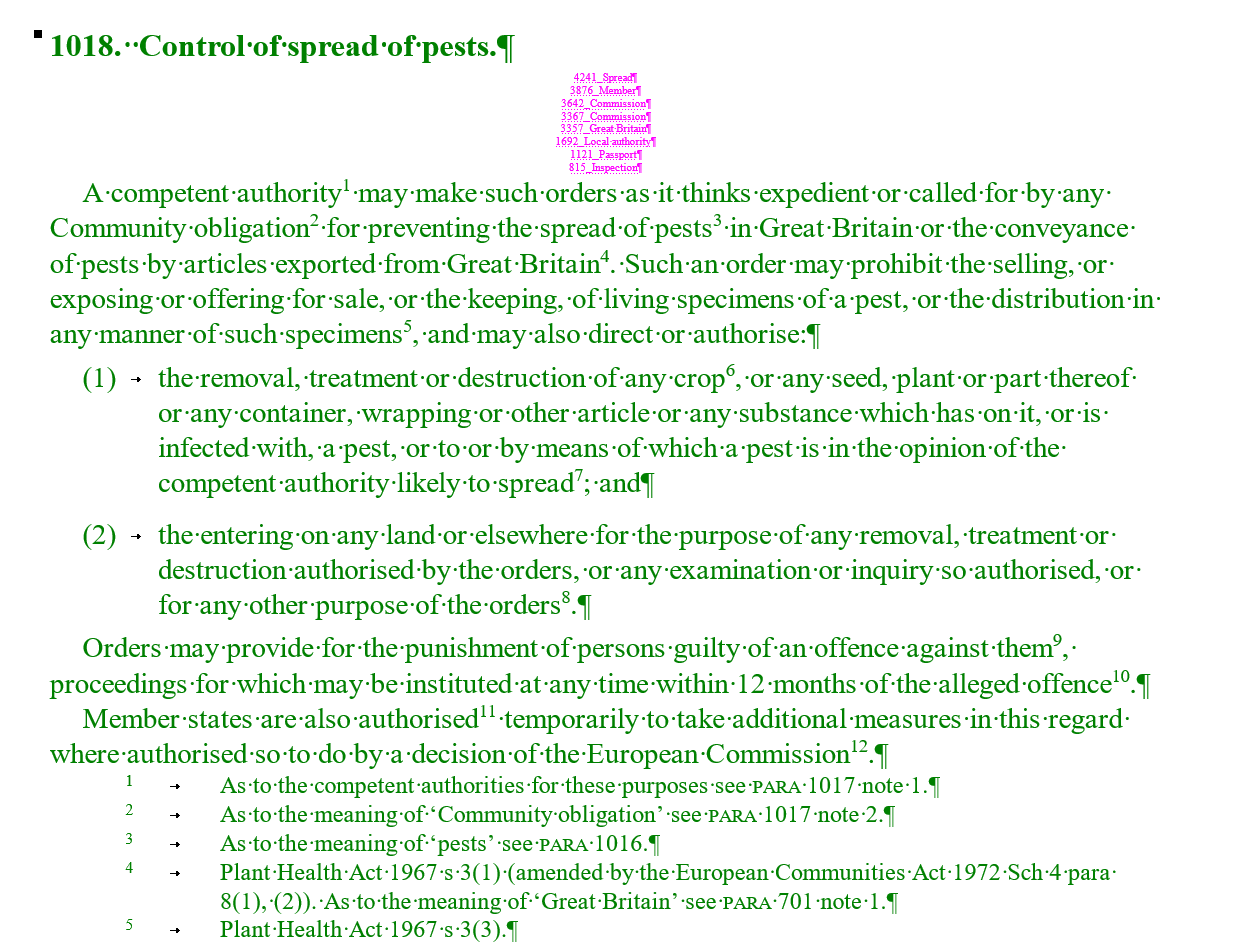

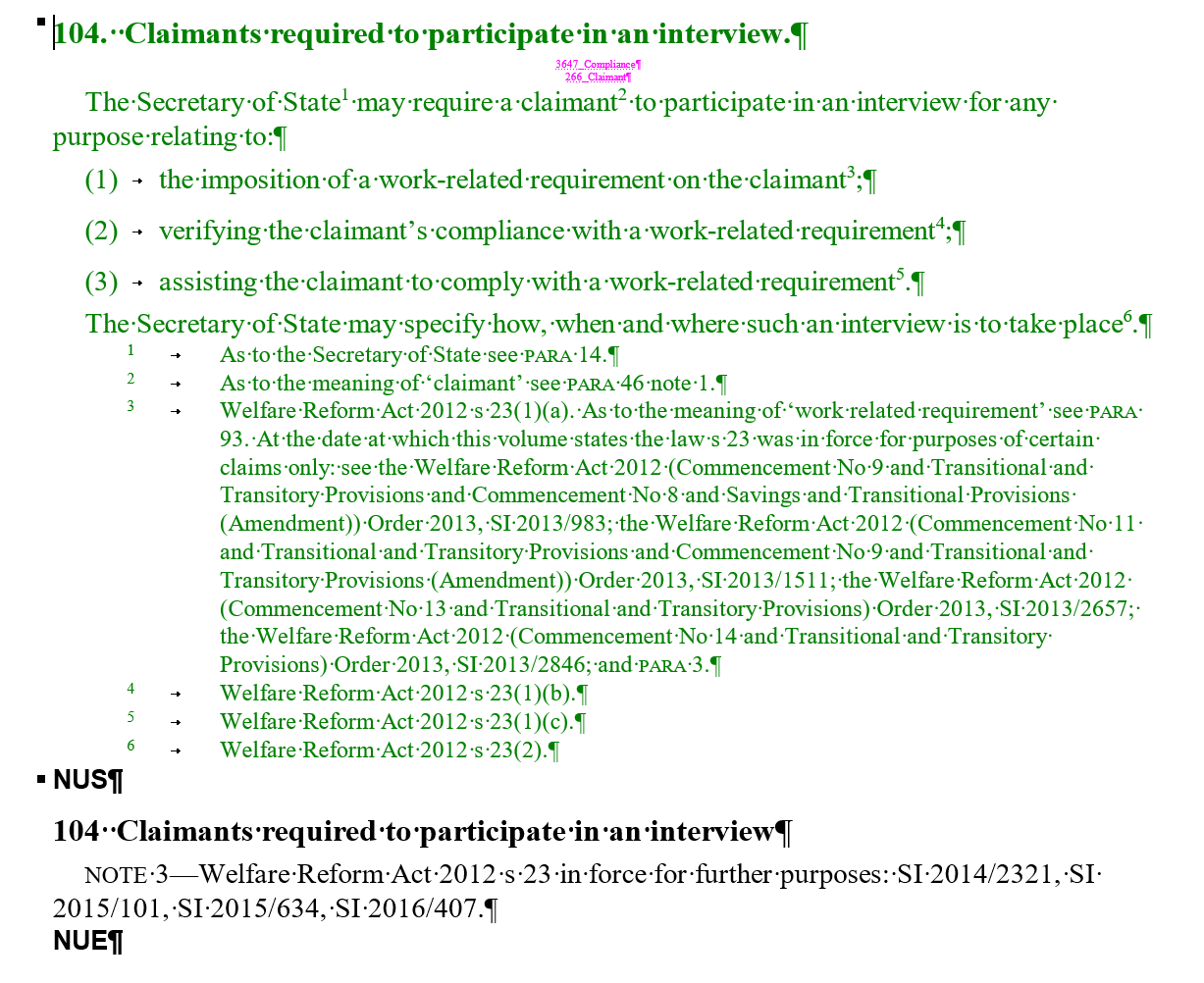

, numbered units much like sections[1], each covering a topic within the area. A topic might look like

this:

Figure 1: A Volume Paragraph

The volparas, as they are usually known, are used by lawyers to assist in their work, ranging from drafting wills and arguing tax law to arguing cases in court. They suggest precedents, highlight legal interpretations and generally offer guidance, and as such, are littered with references to relevant caselaw or legislation, sometimes in footnotes, sometimes inline.

When the legislation changes or when new caselaw emerges—which is often—the

commentary needs to change, too. This is done in several ways over a year: there are

online updates, so called supplements, which are also edited

and published on paper commonly known as looseleafs

[2] or noterups.

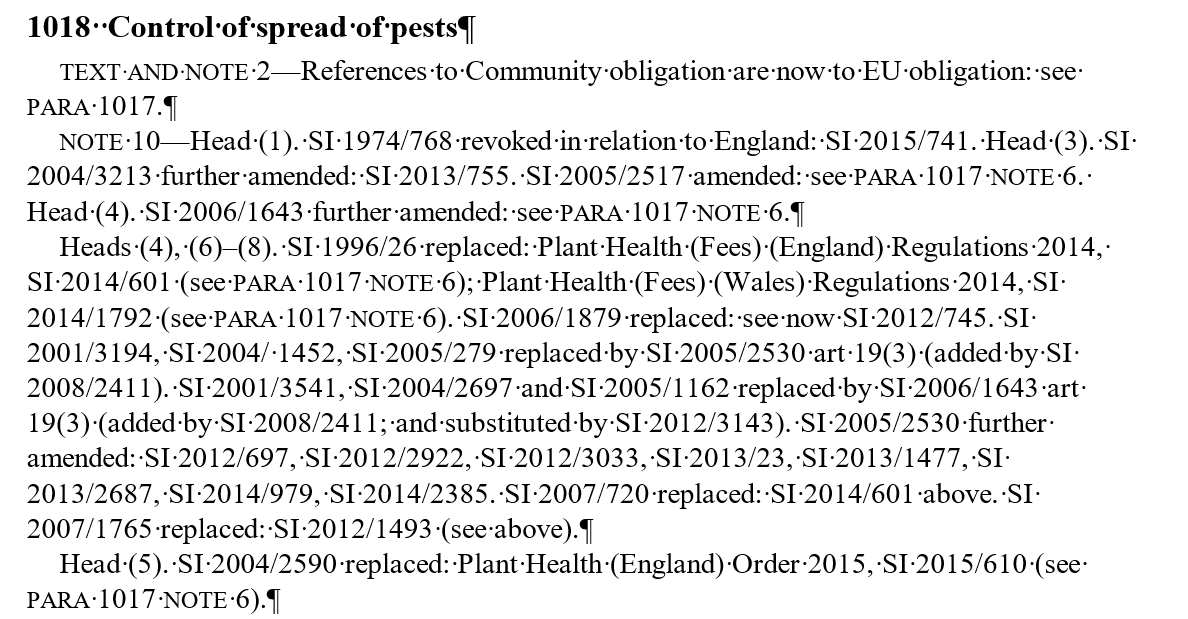

The terminology is more complicated than the actual concept. A volume paragraph that changes gets a supplement, added below the main text body of the para. For example, this supplements the above volume paragraph:

Figure 2: A Supplement Paragraph

The supplements amend the original text, add new references to caselaw and

legislation, and sometimes delete content that is no longer applicable or correct.

Sometimes, the changes are big enough to result in the addition of new volume

paragraphs. These new volume paragraphs inherit the parent vol paras number followed

by a letter, very much in line with the looseleaf

way of thinking.

Called A paras

, they are published online and in the looseleaf

supplements on paper[3]. And once a year, the titles are edited to include the supplemental

information. The A paras are renumbered and made into ordinary

vol

paras, and a new year of new supplements begins.

So?

The commentary titles have been produced from the RTF sources for decades, first to paper and later to paper and several online systems, with increasingly clever—and convoluted, and error-prone—publishing macros, each new requirement resulting in further complications. Somewhere along the line, it was decided to migrate the commentary, along with huge numbers of other documentation, to XML.

Some of the company's content has been authored in XML for years, with new content constantly migrated to XML from various sources. The setup is what I'd label as highly distributed, with no central source or point of origin, just an increasing number of satellite systems. Similarly, there are a number of target publishing systems.

Requirements

LexisNexis, of course, have been publishing from a number of formats for years. XML, therefore, is not in any way new for them. The requirements, then, were surprisingly clear:

-

The target schema is an established, proprietary XML DTD controlled by LexisNexis.

-

The target system is a customisation on top of an established, proprietary CMS, Contenta.

-

As we've seen, the source titles consist of multiple files. The target XML, on the other hand, needs to be one single file per commentary title. There were a number of reasons for this, with perhaps the most important being that the target CMS has a chunking solution of its own, one with sizes and composition that greatly differs from the RTF files[4].

-

As the number of sources is huge and the conversion project was expected to take a significant amount of time and effort, a roundtrip back to RTF was required for the duration of the project[5]. An existing XML to RTF conversion was already in place but is in the process of being extended to handle the new content.

An all-important requirement was a substantial QA on all aspects of the content, from

the obvious is everything there?

[6] to did the upconversion produce the desired semantics?

and

beyond. This implies:

-

A pipelined conversion comprising multiple conversion steps, isolating concerns and so being able to focus on isolated tasks per step.

-

Testing the pipeline, both for individual steps and for making sure that the input matched the output, sometimes dozens of conversion steps later.

-

Validation of the output. DTD validation, obviously, but also Schmatron validation, both for development use and for highlighting possible problems to the subject matter experts.

-

Generated HTML files listing possible issues. Here, footnotes provide a good example as the source RTF markup was sometimes poor, resulting in

orphaned footnotes

, that is, footnotes lackcing a reference or footnote references lacking a target. -

And, of course, manual reviews of a conversion of a representative subset, both by technical and legal experts, frequently aided by the above validation reports.

Pipeline

Thankfully, rather than having to write an RTF parser from scratch, commercial software is available to convert RTF to a structured format better suited for further conversion, namely WordML. LexisNexis have been using Aspose.Words for past conversions, so using it was a given. Aspose was run using Ant macros, with the Ant script also in charge of the pipeline that followed.

The basic idea is this:

-

Convert RTF to WordML.

-

Convert WordML to

flat

XHTML5.Note

As RTF and WordML are both essentially event-based formats where any structure is implied, this is replicated in an XHTML5 consisting of

pelements with an attribute stating the name of theoriginal RTF style. -

Use a number of subsequent upconversion steps to produce a more structured version of the XHTML5, for example by adding nested

sectionelements as implied by the RTF style names that identify headings, and so on. -

With a sufficiently enriched XHTML5, add a number of steps that first convert the XHTML5 to the target XML format and then enrich it, until done.

A recent addition was the realisation that some of the titles contain equations, resulting in several further steps. See section “Equations”.

Pipeline Mechanics

The pipeline consists of a series of XSLT stylesheets, each transforming a

specific subset of the document; one step might convert inline elements while

another wrap list items into list elements. The XSLTs are run by an

XProc script (see id-nicg-xproc-tools) that determines which XSLTs to run and in which

order by reading a manifest file:

<?xml version="1.0" encoding="UTF-8"?>

<?xml-model href="../../../../Content%20Development%20Tools/DEV/DataModelling/Physical/Schemata/RelaxNG/production/pipelines/manifest.rng" type="application/xml" schematypens="http://relaxng.org/ns/structure/1.0"?>

<manifest

xmlns="http://www.corbas.co.uk/ns/transforms/data"

xml:id="migration.p1.p2"

description="migration.p1.p2"

xml:base="."

version="1.0">

<group

xml:id="p12p2.conversion"

description="p12p2.conversion"

xml:base="."

enabled="true">

<item

href="p2_structure.xsl"

description="Do some basic structural stuff"/>

<item

href="p2_orphan-supps.xsl"

description="Handle orphaned supps"/>

<item

href="p2_trintro.xsl"

description="Handle tr:intros"/>

<item

href="p2_volbreaks.xsl"

description="Generate HALS volume break PIs"/>

<item

href="p2_para-grp.xsl"

description="Produce vol paras and supp paras"/>

<item

href="p2_blockpara.xsl"

description="Add display attrs to supp blockparasw.

Add print-only supp blockparas."/>

<item

href="p2_ftnotes.xsl"

description="Move footnotes inline"/>

<item

href="p2_orphan-ftnotes.xsl"

description="Convert orphaned footnotes in supps to

paras starting with the footnote label"/>

<item

href="p2_removecaseinfo.xsl"

description="Remove metadata in case refs"/>

<item

href="p2_xpp-pi.xsl"

description="Generate XPP PIs"/>

<item

href="p2_xref-cleanup.xsl"

description="Removes leading and trailing whitespace from xrefs"/>

<item

href="p2_cleanup.xsl"

description="Clean up the XML, including namespaces"/>

</group>

</manifest>

Each step can also save its output in a debug folder, which is extremely useful when debugging[7]:

-rw-r--r-- 1 arino 197609 6.6M Apr 3 12:05 1-p2_structure.xsl.xml -rw-r--r-- 1 arino 197609 9.2M Apr 3 12:05 2-p2_orphan-supps.xsl.xml -rw-r--r-- 1 arino 197609 8.8M Apr 3 12:05 3-p2_trintro.xsl.xml -rw-r--r-- 1 arino 197609 8.8M Apr 3 12:05 4-p2_volbreaks.xsl.xml -rw-r--r-- 1 arino 197609 8.0M Apr 3 12:05 5-p2_para-grp.xsl.xml -rw-r--r-- 1 arino 197609 8.0M Apr 3 12:05 6-p2_blockpara.xsl.xml -rw-r--r-- 1 arino 197609 7.3M Apr 3 12:05 7-p2_ftnotes.xsl.xml -rw-r--r-- 1 arino 197609 7.3M Apr 3 12:05 8-p2_orphan-ftnotes.xsl.xml -rw-r--r-- 1 arino 197609 7.3M Apr 3 12:05 9-p2_removecaseinfo.xsl.xml -rw-r--r-- 1 arino 197609 7.3M Apr 3 12:05 10-p2_xpp-pi.xsl.xml -rw-r--r-- 1 arino 197609 7.3M Apr 3 12:05 11-p2_xref-cleanup.xsl.xml -rw-r--r-- 1 arino 197609 6.3M Apr 3 12:05 12-p2_cleanup.xsl.xml

The above pipeline is relatively short, as it transforms an intermediate XML format to the target XML format. The main pipeline for converting Halsbury Laws of England RTF to XML (the aforementioned intermediate XML format) currently contains 39 steps.

The XProc is run using a configurable Ant build script[8] that also runs the initial Aspose RTF to WordML conversion, validates the results against the DTD and any Schematrons, and runs the XSpec descriptions testing the pipeline steps, among other things.

The pipeline code, including the XProc and its auxiliary XSLTs and manifest file schema, is based on Nic Gibson's XProc Tools (see id-nicg-xproc-tools) but customised over time to fit the evolving conversion requirements at LexisNexis.

Note on ID Transforms

Any pipeline that wishes to only change a subset of the input will have to carry over anything outside that subset unchanged so a later step can then take care of the unchanged content at an appropriate time. This transform, known as the identity, or ID, transform, will copy over anything not in scope:

<xsl:template

match="node()"

mode="#all">

<xsl:copy copy-namespaces="no">

<xsl:copy-of select="@*"/>

<xsl:apply-templates

select="node()"

mode="#current"/>

</xsl:copy>

</xsl:template>The subset is then processed by defining a mode for anything in scope, with the

document element always starting unmoded

:

<xsl:template match="/">

<xsl:apply-templates select="node()" mode="MY_SUBSET"/>

</xsl:template>Anything outside the subset (mode="MY_SUBSET") is copied over using

the same ID transform, above, while anything in scope uses moded templates:

<xsl:template

match="para"

mode="MY_SUBSET">

<xsl:copy copy-namespaces="no">

<xsl:copy-of select="@*"/>

<xsl:attribute name="needs-review">

<xsl:value-of

select="if (parent::*[@pub='supp'])

then ('yes')

else ('no')"/>

</xsl:attribute>

<xsl:apply-templates

select="node()"

mode="MY_SUBSET"/>

</xsl:copy>

</xsl:template>This simple design pattern, used by every step in the pipeline, makes it very easy to focus on specific tasks, be they to add a single attribute (such as the example above) to handling inline semantics.

The Fun Stuff

From a markup geek point of view, the conversion is actually a fascinating mix of methods and tools, the horrors of the RTF format notwithstanding. This section attempts to highlight some of the more notable ones.

Merging Title Files

The many RTF files comprising the volumes that in turn comprised a single title

needed to be converted and merged (stitched together

) into a single

output XML file. The earlier publishing system had Word macros do this, but running

the macro was error-prone and half manual work; it was unsuitable for an automated

batch conversion of the entire set of commentary titles.

Instead, this approach emerged:

-

Convert all of the individual RTFs to matching raw XHTML files where the actual content were all

panddivelements inside the XHTMLbodyelement. -

Stitch together the files per commentary title[9] by adding together the contents of the XHTML

bodyelements into one big file.Merging together files per title would have been far more difficult without a filename convention used by the editors (also see the listing in section “The Sources”):

09_Children_12(635-704).xml

This was expressed in a regular expression[10] (actually three, owing to how the file stitcher works):

<xsl:param name="base-pattern" select="'[()a-zA-Z0-9_\s%]+'"/> <xsl:param name="numparas-pattern" select="'_[0-9]{2}\([0-9]+[A-Z]*[\-][0-9]+[A-Z]*\)'"/> <xsl:param name="suffix-pattern" select="'\.xml'"/>An XProc pipeline listed all the source XHTML in a folder and any subfolders, called an XSLT that did the actual work. It grouped the files per title, naming each title according to an agreed-upon set of conventions, merged each title contents, saving the merged file in a secondary output, fed back a list of the original files that were then deleted, leaving behind the merged XHTML.

Implicit to Explicit Structure

The raw XHTML produced by the first step from the WordML is a lot like the RTF;

whatever structure there is, is implicit. Every block-level component is actually

a

p element, with the RTF style given in

data-lexisnexis-word-style attributes. Here, for example, is a

level two section heading followed by a volume paragraph with a heading, some

paragraphs and list items:

<p data-lexisnexis-word-style="vol-H2">

<span class="bold">(1) THE BENEFITS</span>

</p>

<p data-lexisnexis-word-style="vol-PH">

<span class="bold">1. The benefits.</span>

</p>

<p data-lexisnexis-word-style="vol-Para">Following a review of the social security benefits

system<sup>1</sup>, the government introduced universal credit, a new single payment for

persons looking for work or on a low income<sup>2</sup>.</p>

<p data-lexisnexis-word-style="vol-Para">Universal credit is being phased in<sup>3</sup> and

will replace income-based jobseeker’s allowance<sup>4</sup>, income-related employment and

support allowance<sup>5</sup>, income support<sup>6</sup>, housing benefit<sup>7</sup>,

child tax credit and working tax credits<sup>8</sup>.</p>

<p data-lexisnexis-word-style="vol-Para">Council tax benefit has been abolished and replaced by

council tax reduction schemes<sup>9</sup>.</p>

<p data-lexisnexis-word-style="vol-Para">In this title, welfare benefits are considered under

the following headings:</p>

<p data-lexisnexis-word-style="vol-L1">(1)<span class="tab"/>entitlement to universal

credit<sup>10</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(2)<span class="tab"/>claimant responsibilities,

including work related requirements<sup>11</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(3)<span class="tab"/>non-contributory benefits,

including carer’s allowance, personal independence payment, disability living allowance,

attendance allowance, guardian’s allowance, child benefit, industrial injuries benefit, the

social fund, state pension credit, age-related payments and income related benefits that are

to be abolished<sup>12</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(4)<span class="tab"/>contributions<sup>13</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(5)<span class="tab"/>contributory benefits, including

jobseeker’s allowance, employment and support allowance, incapacity benefit, state maternity

allowance and bereavement payments<sup>14</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(6)<span class="tab"/>state retirement

pensions<sup>15</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(7)<span class="tab"/>administration<sup>16</sup>;

and</p>

<p data-lexisnexis-word-style="vol-L1">(8)<span class="tab"/>European law<sup>17</sup>.</p>

<p data-lexisnexis-word-style="vol-PH">

<span class="bold">2. Overhaul of benefits.</span>

</p>

<p data-lexisnexis-word-style="vol-Para">In July 2010 the government published its consultation

paper <span class="italic">21</span>st<span class="italic"> Century Welfare</span> setting

out problems of poor work incentives and complexity in the existing benefits and tax credits

systems<sup>1</sup>. The paper considered the following five options for reform: (1)

universal credit<sup>2</sup>; (2) a single unified taper<sup>3</sup>; (3) a single working

age benefit<sup>4</sup>; (4) the Mirrlees model<sup>5</sup>; and (5) a single

benefit/negative income tax model<sup>6</sup>.</p>The implied structure (a level two section containing a volume paragraph that in turn contains a heading, a few paragraphs and a list) is made explicit using a series of steps.

Inline Spans

RTF, as mentioned earlier, is a non-enforceable, event-based, flat format. It

lists things to do with the content in the order in which the instructions appear,

with little regard to any structure, implied or otherwise. The instructions happen

when the author inserts a style, either where the marker is or on a selected range

of text. This can be done as often as desired, of course, and will simply add to

existing RTF style instructions, which means that an instruction such as use

bold

might be applied multiple times on the same, or mostly the same,

content.

The resulting raw XHTML converted from WordML might then look like this (indentatiton added for clarity):

<p data-lexisnexis-word-style="vol-PHa">

<span class="bold">2.<span class="tab"/>O</span>

<span class="bold">pen</span>

<span class="bold">ing</span>

<span class="bold"> a childcare account</span>

</p>Simply mapping and converting this to a target XML format will not result in what

was intended (i.e. <core:para><core:emph>2. Opening a childcare

account</core:emph></core:para>) but instead a huge mess, so

cleanup steps are required before the actual conversion, merging spans, eliminating

nested spans, etc.

With just one intended semantics such as mapping bold to an emphasis tag, the

cleanup can be relatively uncomplicated. When more than one style is present in the

sources, however[11], the raw XHTML is anything but straight-forward. Heading labels (see

section “Labels in Headings, List Items, and Footnotes”),

cross-references and case citations (see section “Cross-references and Citations”) all have

problems in part caused by the inline span elements.

Labels in Headings, List Items, and Footnotes

The span elements cause havoc in headings and any kind of ordered

list, as the heading and list item labels use many different types of numbering in

legal commentary. A volpara sometimes includes half a dozen ordered lists, each of

which must use a different type of label (numbered, lower alpha, upper alpha, lower

roman, ...) so the items can be referenced later without risking confusion.

Here, for example, is a level one list item using small caps alphanumeric:

<p data-lexisnexis-word-style="vol-L1">(<span class="smallcaps">a</span>)<span class="tab"/>the

allowable losses accruing to the transferor are set off against the chargeable gains so accruing

and the transfer is treated as giving rise to a single chargeable gain equal to the aggregate of

the gains less the aggregate of the losses<sup>22</sup>;</p>Note the tab character, mapped to a span[@class='tab'] element in the

XHTML, separating the label from the list contents, but also the parentheses

wrapping the smallcaps

span. The code used to extract the list item contents, determine the

list type used, and extract the labels must take into account a number of

variations.

The source RTF list items all follow the same pattern, a list item label followed by a tab and the item contents:

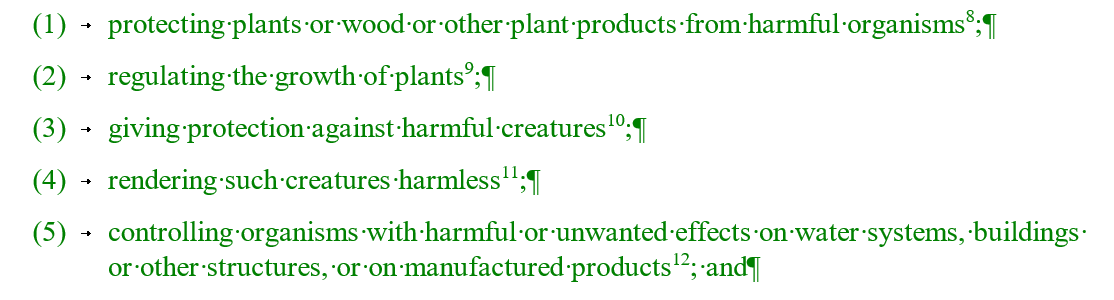

Figure 3: List Items

In the XHTML, the result is this:

<p data-lexisnexis-word-style="vol-L1">(1)<span class="tab"/>protecting plants or wood or other

plant products from harmful organisms<sup>8</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(2)<span class="tab"/>regulating the growth of

plants<sup>9</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(3)<span class="tab"/>giving protection against harmful

creatures<sup>10</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(4)<span class="tab"/>rendering such creatures

harmless<sup>11</sup>;</p>

<p data-lexisnexis-word-style="vol-L1">(5)<span class="tab"/>controlling organisms with harmful

or unwanted effects on water systems, buildings or other structures, or on manufactured

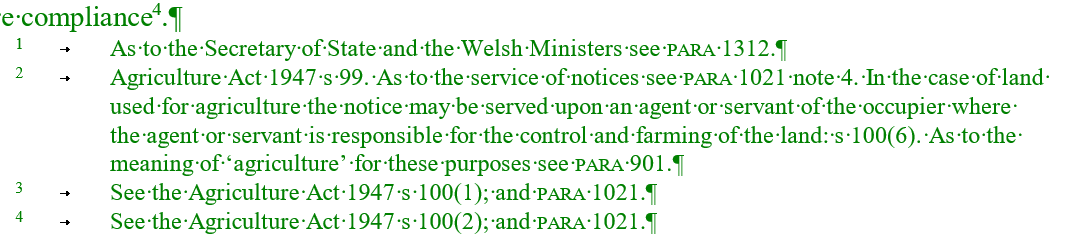

products<sup>12</sup>; and</p>Footnotes use a similar construct, separating the label from the contents with a tab character:

Figure 4: Footnotes

In both cases, the XSLT essentially attempts to determine the type of list by

analysing the content before

span[@class="tab"] to create a list item element with the list type

information placed in @type, and then includes everything

after the span as list item contents:

<?xml version="1.0" encoding="UTF-8"?>

<xsl:when

test="@data-lexisnexis-word-style=('L1', 'vol-L1', 'vol-L1CL', 'vol-L1P', 'sup-L1', 'sup-L1CL')">

<!-- Note that the if test is needed to parse lists where the number and tab are in italics or similar -->

<!-- the span must be non-empty since the editors sometimes use a new list item but then remove the

numbering and leave the tab (span class=tab) to make it look as if it was part of the immediately

preceding list item -->

<xsl:element

name="core:listitem">

<xsl:attribute

name="type">

<xsl:choose>

<xsl:when

test="span[1][@class='smallcaps' and

matches(.,'\(?[a-z]+\)?')]">

<xsl:analyze-string

select="span[1]"

regex="^(\(?[a-z]+\)?)$">

<xsl:matching-substring>

<xsl:choose>

<xsl:when

test="regex-group(1)!=''">upper-alpha</xsl:when>

</xsl:choose>

</xsl:matching-substring>

</xsl:analyze-string>

</xsl:when>

<xsl:otherwise>

<xsl:analyze-string

select="if (node()[1][self::span and .!=''])

then (span[1]/text()[1])

else (text()[1])"

regex="^(\(([0-9]+)\)[\s]?)|

(\(([ivx]+)\)?[\s]?)|

(\(([A-Z]+)\))|

(\(([a-z]+)\))$">

<xsl:matching-substring>

<xsl:choose>

<xsl:when

test="regex-group(1)!=''">number</xsl:when>

<xsl:when

test="regex-group(3)!=''">lower-roman</xsl:when>

<xsl:when

test="regex-group(5)!=''">upper-alpha</xsl:when>

<xsl:when

test="regex-group(7)!=''">lower-alpha</xsl:when>

</xsl:choose>

</xsl:matching-substring>

<xsl:non-matching-substring>

<xsl:value-of

select="'plain'"/>

</xsl:non-matching-substring>

</xsl:analyze-string>

</xsl:otherwise>

</xsl:choose>

</xsl:attribute>

<xsl:element name="core:para">

<xsl:copy-of

select="@*"/>

<!-- This does not remove the numbering of list items where the numbers

are inside spans (for example, in italics); that we handle later -->

<xsl:apply-templates

select="node()[not(following-sibling::span[@class='tab'])]"

mode="KEPLER_STRUCTURE"/>

</xsl:element>

</xsl:element>

</xsl:when>

The xsl:choose handles two cases. The first handles a case where the

list item label was in a small caps RTF style (here translated to

span[@class="smallcaps"] in a previous step), the second deals with

all remaining types of list item labels. The key in both cases is a regular

expression that relies on the original author writing a list item in the same way,

every time[12]. I've added line breaks in the above example to make the regex easier to

read; essentially, the different cases simply replicate the allowed list

types.

The overall quality of the RTF (list and footnote) sources was surprisingly good, but since the labels were manually entered, this would sometimes break the conversion.

Headings are somewhat different. Here is a level four heading:

<p data-lexisnexis-word-style="vol-H4"><span class="smallcaps">c. housing costs</span></p>

There is no tab character separating the label from the heading contents, so we

are relying on whitespace rather than a mapped span element to separate

the label and the heading contents from each other. The basic heading label

recognition mechanism still relies on pattern-matching the label, however. The

difficulties here would usually involve the editor using a bold or smallcaps RTF

style to select the label, but accidentially marking up the space that followed,

necessitating

Note

Here, the contents are in lower case only. The RTF vol-H4

style automatically provided the small caps formatting, so editors would simply

enter the text without bothering to use title caps. This resulted in a

conversion step that, given an input string, would convert that string to

heading caps, leaving prepositions in lower case and

adding all caps to a predefined list of keywords such as UK

or

EU

.

The code to identify list item, footnote, and heading labels evolved over time, recognising most variations in RTF style usage, but nevertheless, some problems were only spotted in the QA that followed (see section “QA”).

Wrapping List Items in Lists

List items in RTF have no structure, of course. They are merely paragraphs with style instructions that make them look like lists by adding a label before the actual contents, separating the two with a tab character as seen in the previous section.

That step does not wrap the list items together, it merely identifies the list

types and constructs list item elements. A later step adds list wrapper elements by

using xsl:for-each-group instructions such as this:

<xsl:template

match="*[core:listitem[core:para/@data-lexisnexis-word-style=('L1','L2','L3',

'vol-L1','vol-L1CL','vol-L2','vol-L3', 'sup-L1', 'sup-L1CL', 'sup-L2',

'sup-L3', 'term-ref', 'vol-FL1', 'vol-FL2')]]"

mode="KEPLER_LISTS"

priority="1">

<xsl:copy copy-namespaces="no">

<xsl:copy-of select="@*"/>

<xsl:for-each-group

select="*"

group-adjacent="boolean(self::core:listitem[core:*/@data-lexisnexis-word-style=('L1','L2','L3',

'vol-L1', 'vol-L1CL', 'vol-L1P', 'vol-Quote', 'vol-L2','vol-L3',

'sup-L1', 'sup-L1CL', 'sup-L2', 'sup-L3', 'term-ref',

'vol-QuoteL1', 'vol-FL1', 'vol-FL2')])">

<xsl:choose>

<xsl:when test="current-grouping-key()">

<xsl:element name="core:list">

<xsl:call-template name="restart-attr"/>

<xsl:attribute name="type" select="@type"/>

<xsl:for-each-group

select="current-group()"

group-adjacent="boolean(self::core:listitem[core:*/@data-lexisnexis-word-style=('L2','L3',

'vol-L1P', 'vol-Quote','vol-L2','vol-L3',

'sup-L2', 'sup-L3','term-ref', 'vol-FL2')])">

<xsl:choose>

<xsl:when test="current-grouping-key()">

<xsl:element name="core:list">

<xsl:attribute

name="type"

select="@type"/>

<xsl:apply-templates

select="current-group()" mode="KEPLER_LISTS"/>

</xsl:element>

</xsl:when>

<xsl:otherwise>

<xsl:apply-templates

select="current-group()"

mode="KEPLER_LISTS"/>

</xsl:otherwise>

</xsl:choose>

</xsl:for-each-group>

</xsl:element>

</xsl:when>

<xsl:otherwise>

<xsl:apply-templates

select="current-group()"

mode="KEPLER_LISTS"/>

</xsl:otherwise>

</xsl:choose>

</xsl:for-each-group>

</xsl:copy>

</xsl:template>Note the many different RTF styles taken into account; these do not all do

different things, they are actually duplicates or near duplicates, the result of the

non-enforceable nature of RTF. Also note the boolean() expression in

@group-adjacent. The expression checks for matching attribute

values in the children of the list items, as these will still

have the style information from the RTFs.

The xsl:for-each-group instruction is frequently used in the pipeline

steps as it is perfect when grouping a flat content model to make any implied

hierarchies in it explicit.

Volume Paragraphs

The volume paragraphs provide another implicit sction grouping. They are essentially a series of block-level elements that always start with a numbered title (see Figure 1). The raw XHTML looks something like this:

<p data-lexisnexis-word-style="vol-PH">

<span class="bold">104. Claimants required to participate in an interview.</span>

</p>

<p data-lexisnexis-word-style="vol-Para">...</p>

<p data-lexisnexis-word-style="vol-L1">...</p>

<p data-lexisnexis-word-style="vol-L1">...</p>

<p data-lexisnexis-word-style="vol-L1">...</p>

<p data-lexisnexis-word-style="vol-Para">....</p>

<p data-lexisnexis-word-style="sup-PH">

<span class="bold">104 </span>

<span class="bold">Claimants required to participate in an interview</span>

</p>

<p data-lexisnexis-word-style="sup-Para">...</p>Using the kind of upconversions outlined above, the result is a reasonably structured sequence of block-level elements:

<core:para edpnum-start="104">

<core:emph typestyle="bf">Claimants required to participate in an interview.</core:emph>

</core:para>

<core:para>...</core:para>

<core:list type="number">

<core:listitem type="number">

<core:para data-lexisnexis-word-style="vol-L1">...</core:para>

</core:listitem>

<core:listitem type="number">

...

</core:listitem>

...

</core:list>

<core:para>...</core:para>With longer volume paragraphs, frequently with supplements added, processing them becomes difficult and unwieldy.

Figure 5: Supplement Added

We added semantics to the DTD to make later publishing and processing easier, wrapping the volume paragraphs and the supplements inside them:

<core:para-grp>

<core:desig value="104">104.</core:desig>

<core:title>Claimants required to participate in an interview.</core:title>

<core:para>...</core:para>

<core:list type="number">

<core:listitem>

<core:para>...</core:para>

</core:listitem>

<core:listitem>

...

</core:listitem>

...

</core:list>

<core:para>...</core:para>

<su:supp pub="supp">

<core:no-title/>

<su:body>

<su:para-grp>

<core:desig value="104">104</core:desig>

<core:title>Claimants required to participate in an interview</core:title>

<core:para>...</core:para>

</su:para-grp>

</su:body>

</su:supp>

</core:para-grp>

This was achieved using a two-stage transform where the first template, matching

volume paragraph headings (para[@edpnum-start] elements) only, would

add content along the following-sibling axis until (but not including)

the next volume paragraph heading[13]:

<!-- Common template for following-sibling axis -->

<xsl:template name="following-sibling-blocks">

<xsl:param name="num"/>

<xsl:apply-templates

select="following-sibling::*[(local-name(.)='para' or

local-name(.)='list' or

local-name(.)='blockquote' or

local-name(.)='figure' or

local-name(.)='comment' or

local-name(.)='legislation' or

local-name(.)='endnotes' or

local-name(.)='supp' or

local-name(.)='generic-hd' or

local-name(.)='q-a' or

local-name(.)='digest-grp' or

local-name(.)='form' or

local-name(.)='address' or

local-name(.)='table' or

local-name(.)='block-wrapper') and

not(@edpnum-start) and

preceding-sibling::core:para[@edpnum-start][1][@edpnum-start=$num]]"

mode="P2_INSIDE_PARA-GRP"/>

</xsl:template>This, of course, created duplicates of every block-level sibling in what

essentially is a top-down transform, so a second pattern was needed to eliminate the

duplicates in a matching child axis template:

<xsl:template

match="core:list|

core:para[not(@edpnum-start)]|

core:blockquote|

table|

core:figure|

lnb-leg:legislation|

fn:endnotes|

form:form|

core:comment|

core:q-a|

core:generic-hd|

lnbdig-case:digest-grp|

su:supp|

su:block-wrapper"

mode="P2_PARA-GRP"

priority="1"/>The supplements were enriched using a similar pattern, including along the

following-sibling axis and deleting the resulting duplicates along

the descendant axis.

Cross-references and Citations

Perhaps the most significant case of upconversion came with cross-references and citations (to statutes, cases, and so on).

Cross-references

A cross-reference in the RTFs would always be manually entered in the RTF sources[14]:

Figure 6: Cross-reference to a Volume Paragraph in the Current Title

The cross-reference here is the keyword para followed by a (volume paragraph) number. The problem here is that the only identifiable omponent was the para (or paras, in case of multiple volume paragraph references) keyword:

As to the meaning of allowable losses see <span class="smallcaps">para</span> 609.

In some cases, the editor had used the small caps style on the number in addition to the keyword, causing additional complications.

The reference might be to a combined list of numbers and ranges of numbers:

<span class="smallcaps">paras</span> 10–21, 51, 72–74

These were handled in a regular expression that would locate the keyword and attempt to match characters in the first following-sibling text node[15]:

<xsl:when

test="matches(following-sibling::text()[1],

'^[\s]*[0-9]+[A-Z]*([–][0-9]+[A-Z]*)?(,\s+[0-9]+[A-Z]*([–][0-9]+[A-Z]*)?)*')">

<xsl:analyze-string

select="following-sibling::text()[1]"

regex="^[\s]*([0-9]+[A-Z]*([–][0-9]+[A-Z]*)?(,\s+[0-9]+[A-Z]*([–][0-9]+[A-Z]*)?)*)(.+)$">

<xsl:matching-substring>

<xsl:text> </xsl:text>

<xsl:for-each

select="tokenize(regex-group(1),',')">

<lnci:paragraph>

<xsl:attribute

name="num"

select="if (matches(.,'–'))

then (normalize-space(substring-before(.,'–')))

else normalize-space(.)"/>

<xsl:if test="matches(.,'–')">

<xsl:attribute name="lastnum">

<xsl:value-of

select="normalize-space(substring-after(.,'–'))"/>

</xsl:attribute>

</xsl:if>

<xsl:value-of

select="normalize-space(.)"/>

</lnci:paragraph>

<xsl:if test="position()!=last()">

<xsl:text>, </xsl:text>

</xsl:if>

</xsl:for-each>

<xsl:value-of select="regex-group(5)"/>

</xsl:matching-substring>

</xsl:analyze-string>

</xsl:when>Note

The xsl:when shown here covers the case where the reference

follows after the keyword.

The regular expression includes letters after the numbers to accommodate

the so-called A paras

.

This needed to be combined with a kill template

for the same

text node but on a descendant axis. In other words, something like this:

<xsl:template

match="node()[self::text() and

preceding-sibling::*[1][self::*:span and @class='smallcaps' and

matches(.,'^para[s]?[\s]*$')]]"

mode="KEPLER_CONSTRUCT-REFS"/>The end result would be something like this (indentation added for readability):

see the Taxation of Chargeable Gains Act 1992 s 21(1); and

<core:emph typestyle="smcaps">para</core:emph>

<lnci:cite type="paragraph-ref">

<lnci:book>

<lnci:bookref>

<lnci:paragraph num="613"/>

</lnci:bookref>

</lnci:book>

<lnci:content>613</lnci:content>

</lnci:cite>. If the reference was given to a list, each list item would be tagged in a

separate lnci:cite element, while a range would instead add a

lastnum attribute to the lnci:cite.

The following-sibling axis to match content, paired with a

descendant axis to delete duplicates is, as we have seen,

frequently used in the pipeline.

In some cases, the cross-reference would point to a volume paragraph in a different title:

Figure 7: Cross-reference to a Different Title

Here, we'd have the target title name styled in an *xtitle RTF style, here in purple, followed by text-only volume number information, the para keyword, and the target volume paragraph number. This was handled much like the above, the difference being an additional step to match the title in a separate step and combine the title with the cross-reference markup in yet another step.

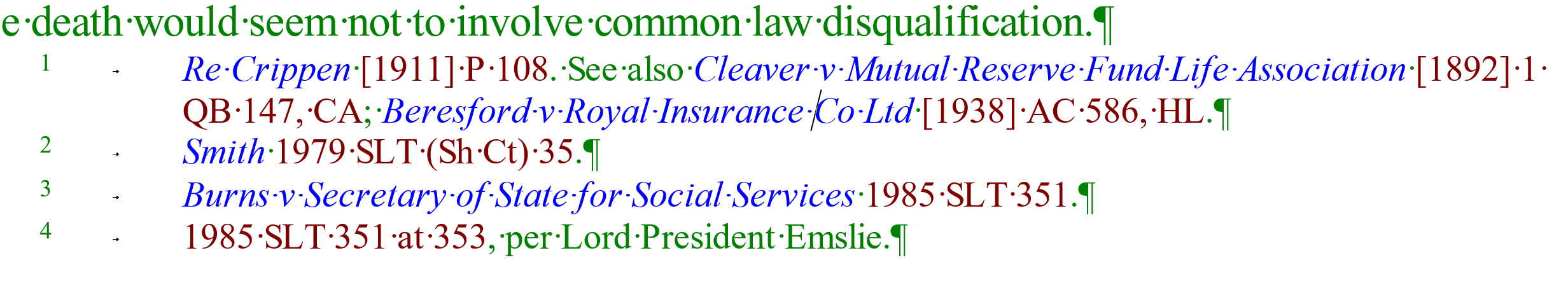

Citations

Halsbury's Laws of England contain huge numbers of citations, but very few of them have any kind of RTF styling and were thus mostly unidentifiable in the conversion. Instead, they will be handled later, when the XML is uploaded into the target CMS, by using a cite pattern-matching tool developed specifically for the purpose.

The sister publication for Scotland, on the other hand, had plenty of case citations, most of which would look like this:

Figure 8: Case Citations

Here, the blue text indicates the case name and uses the *case RTF style, while the brown(-ish) text is the actual formal citation and uses the RTF style *citation. The citation markup we want looks like this:

<lnci:cite>

<lnci:case>

<lnci:caseinfo>

<lnci:casename>

<lnci:text

txt="Bushell v Faith"/>

</lnci:casename>

</lnci:caseinfo>

<lnci:caseref

normcite="[1970] AC 1099[1970]1All ER 53, HL"

spanref="spd93039e7444"/>

</lnci:case>

<lnci:content>

<core:emph typestyle="it">Bushell v Faith</core:emph>

<lnci:span

spanid="spd93039e7444"

statuscode="citation">[1970] AC 1099,

[1970], 1, All ER 53, HL</lnci:span></lnci:content>

</lnci:cite>Essentially, the citation consists of two parts, one formal part where the

machine-readable citation (in the normcite attribute) lives, along

with the case name, and another, referenced by the formal part (the

spanref/spanid is an ID/IDREF pair, in case you

didn't spot it), where the content visible to the end user lives.

My first approach was to convert the casename and citation parts in one step, then merge the two and add the wrapper markup when done in another. Unfortunately, there were several problems:

-

Neither the casename nor the citation was always present. Sometimes, a case would be referred to only by its citation. Sometimes, a previously referred case would be referred to again using only its name.

-

Multiple case citations might occur in a single paragraph, sometimes in a single sentence.

-

Sometimes, there woul be other markup between the casename and its matching citation.

-

As the RTF style application was done manually, there were plenty of edge cases where not all of the name or citation had been selected and marked up. In quite a few, the unmarked text was then selected and marked up separately, resulting in additional

spanelements in the raw XHTML.

This resulted in the citation construction being divided into three separate

steps, beginning with a cleanup to fnd and merge span elements, a

second to handle the casenames and citations, and a third to construct the

wrapper markup with the two citation parts and the ID/IDREF pairs.

This sounds simple enough, but consider the following: In a paragraph containing

multiple citations, how does one know what span belongs to what

citation? How does ne express that in an XSLT template? Here is a relatively

simple one:

<span data-lexisnexis-word-style="case">Secretary of State for Business,

Enterprise and Regulatory Reform v UK Bankruptcy Ltd</span>

<core:emph typestyle="it"> </core:emph>

<span data-lexisnexis-word-style="citation">[2010] CSIH 80</span>,

<span data-lexisnexis-word-style="citation">2011 SC 115</span>,

<span data-lexisnexis-word-style="citation">2010 SCLR 801</span>,

<span data-lexisnexis-word-style="citation">2010 SLT 1242</span>,

<span data-lexisnexis-word-style="citation">[2011] BCC 568</span>.Do all citations belong to the same casename? Only the first? Here is another one (note that it's all in a single sentence):

<span data-lexisnexis-word-style="case">Bushell v Faith</span>

<span data-lexisnexis-word-style="citation">[1970] AC 1099</span>,

<span data-lexisnexis-word-style="citation">[1970] </span>

<span data-lexisnexis-word-style="citation">1 </span>

<span data-lexisnexis-word-style="citation">All ER 53, HL</span>;

<span data-lexisnexis-word-style="case">Cumbrian Newspapers Group Ltd v

Cumberland and Westmorland Newspapers and Printing Co Ltd</span>

<span data-lexisnexis-word-style="citation">[1987] Ch 1</span>,

<span data-lexisnexis-word-style="citation">[1986] 2 All ER 816</span>. Note the fragmentation of spans and the comma and semicolon separators,

respectively. When looking ahead along the following-sibling axis,

how far should we look? Would the semicolon be a good separator? The

comma?

The decision was a combination of asking the editors to update some of the more ambiguous RTF citations and a relatively conservative approach where situations like the above resulted in multiple case citation markup. There was no way to programmatically make sure that a preceding case name is actually part of the same citation.

Symbols

An unexpected problem was with missing characters: en dashes (U+2013) and em

dashes (U+2014) would mysteriously disappear in the conversion. After looking at the

debug output of the early steps, I realised that the characters were actually

symbols, inserted using Insert Symbol in Microsoft Word. In

WordML, the symbols were mapped to w:sym elements, but these were then

discarded.

When looking at the extent of the problem, it turned out that the affected documents were all old, meaning an older version of Microsoft Word and implying that the problem with symbols was fixed in later versions, inserting Unicode caracters rather than (presumably) CP1252 characters. Furthermore, only two symbols were used from the symbol map, the en and em dashes. This fixed the problem:

<xsl:param name="charmap">

<symbols>

<symbol>

<wchar>F02D</wchar>

<ucode>–</ucode>

</symbol>

<symbol>

<wchar>F0BE</wchar>

<ucode>—</ucode>

</symbol>

</symbols>

</xsl:param>

<xsl:template

match="w:sym[@w:char and @w:font='Symbol']">

<xsl:param

name="wchar"

select="@w:char"/>

<xsl:value-of

select="$charmap//symbol[wchar=$wchar]/ucode/text()"/>

</xsl:template>Equations

A very recent issue, two weeks old as I write this, is the fact that a few of the titles contain equations created in Microsoft Equation 3.0. The equations would quietly disappear during our test conversions without me noticing, until one of the editors had the good sense to check. What happened was that Aspose converted the equtions to uuencoded and gzipped Windows Meta Files and embedded them in a binary object elements that were then discarded.

Unfortunately for me, the requirements extended beyond equations as images, which required me to rethink the process. What I'm doing now is this:

-

Add placeholder processing instructions in an early step to mark where to (re-)insert equations later. Finish converting the title to XML.

-

Convert the RTF to LaTeX. It turns out that there are quite a few converters available open source, including some that handle Microsoft Equation 3.0. What I've decided on for now is rtf2latex2e (see id-rtf2latex), as it is very simple to run from an Ant script and provides reasonable-looking TeX, meaning that the equations are handled. The process can also be customised, mapping RTF styles to LaTeX macros so some of the hidden styles I need to identify title metadata are kept intact.

-

Convert the LaTeX to XHTML+MathML. Again, it turns out that there are quite a few options available. I chose a converter called TtM (see id-ttm). It produces some very basic and very ugly XHTML, but the equations are pure presentation MathML.

-

Extract the equations per title, in document order, and reinsert them in the converted XML titles where the PIs are located.

This process is surprisingly uncomplicated and very fast. There are a few niggles as I write this, most to do with the fact that I need to stitch together the XHTML+MathML result files to match the converted XML, but I expect to have completed the work within days.

QA

With a conversion as big as the Halsbury titles migration, quality assurance is vital, both when developing the pipeline steps and after running them. Here are some of the more important QA steps taken:

-

Most of the individual XSLT steps were developed using XSpec tests for unit testing to make sure that the templates did what they were supposed to.

-

We also used XSpec tests to validate the content for key steps in the pipeline. Typically, an XSpec test might perform node counts before and after a certain step, making sure that nothing was being systematically lost.

-

Headings, list items and footnotes were particularly prone to problems, as the initial identification of content as being a labelled content type rather than, say, an ordinary paragraph relied solely on pattern matching (see section “Labels in Headings, List Items, and Footnotes”). A failed list item conversion would usually result in an ordinary paragraph (a

core:paraelement) with a procesing attribute (@data-lexisnexis-word-style) attached, hinting at where the problem occurred and what the nature of the problem was (the contents of the processing attribute giving the name of the original RTF style). -

Obviously, DTD validation was part of the final QA.

-

The resulting XML was also validated against Schematron rules, some of which were intended for developers and others for the subject matter experts going through the converted material. For example, a number of the rules highlighted possible issues with citations and cross-references, due to the many possible problems the pipeline might encounter because of source issues (see section “Cross-references and Citations”).

Other schematron rules provided sanity checks, for example, that heading and list item labels were in sequence and were being extracted correctly.

-

Some conversion steps were particularly error-prone because of the many variations in the sources, so these steps included debug information inside XML comments. These were then used to generate reports for the SME review.

Footnotes, for example, would sometimes have a broken footnote reference due to a missing target or a wrongly applied superscript style or simply the wrong number. All these cases would generate a debug comment that would then be included in an HTML report to the SME review.

XSpec for Pipeline Transformations

XSpec, of course, is a testing framework for single transformations, meaning one XSLT applied to input producing output, not a testing framework for testing a pipeline comprising several XSLTs with multiple inputs and outputs. Our early XSpec scenarios were therefore used for developing the individual steps, not for comparing pipeline input and output, which initially severely limited the usability of the framework in our transformations.

To overcome this limitation, I wrote a series of XSLT transforms and Ant macros to

define a way to use XSpecs on a pipeline. While still not directly comparing

pipeline input and output, the Ant macro, run-xspecs, accepted an XSpec

manifest file (compare this to the XSLT manifest briefly described in section “Pipeline Mechanics”)

that declared on which pipeline steps to apply which XSpec tests and produce

concatenated reports. Here's a short XSpec manifest file:

<?xml version="1.0" encoding="UTF-8"?>

<tests

xmlns="http://www.sgmlguru.org/ns/xproc/steps"

manifest="xslt/manifest-stair-p1-to-p2.xml"

xml:base="file:/c:/Users/nordstax/repos/ca-hsd/stair">

<!-- Use paths relative to /tests/@xml:base for pipeline manifest, XSLT and XSpec -->

<test

xslt="xslt/p2_structure.xsl"

xspec="xspec/p2_structure.xspec"

focus="batch"/>

<test

xslt="xslt/p2_para-grp.xsl"

xspec="xspec/p2_para-grp.xspec"

focus="batch"/>

<test

xslt="xslt/p2_ftnotes.xsl"

xspec="xspec/p2_ftnotes.xspec"

focus="batch"/>

</tests>

The assumption is here that the pipeline produces debug step output (see section “Pipeline Mechanics”) so

the XSpec tests can be applied on the step debug inputs/outputs. The

run-xspecs macro includes a helper XSLT that takes the basic XSpecs

(three of them in the above example) and transforms them into XSpec instances for

each input and output XML file to be tested[16]. The Ant build script then runs each generated XSpec test and generates

XSpec test reports.

The run-xspecs code, while still in development and rather lacking in

any features we don't currently need, works beautifully and has significantly eased

the QA process.

End Notes

Some Notes on Conversion Mechanics

Some notes on the conversion meachanics:

-

The conversions were run by Ant build scripts that ran all of the various tasks, from Aspose RTF to WordML, the pipeline(s), validation, XSpec tests and reporting.

-

The volumes were huge. We are talking about several gigabytes of data.

-

The conversions, all of them in batch, were done on a file system. While I don't recommend this approach (I would gladly have done conversion in an XML database), it does work.

-

The pipeline enabled a very iterative approach.

It should be noted that while I keep talking about a pipeline, several similar data migrations were actually done in parallell with other products, each with similar pipelines and similar challenges. The techniques discussed here apply to those other pipelines, of course, but they all had their unique challenges. While the pipelines all had a common ancestor, a first pipeline developed to handle forms publication, they were developed at different times by different people on different continents.

Even so, yours truly did refactor, merge and rewrite two of his pipelines for two separate legal commentary products into a single one where a simple reconfiguration of the pipeline using build script properties was all that was needed to switch between the two products.

Note

An alternative way of doing pipelines is explored in a 2017 Balisage paper I

had the good fortune to review before the conference,

micropipelining. This paper, Patterns and

antipatterns in XSLT micropipelining by David J. Birnbaum, explores micropipelining

, a

pipelining method where a pipeline in a single XSLT is constructed by adding a

series of variables, each of them a step doing something to the input.

We used these techniques in some of our steps, essentially creating pipelines within pipelines. Describing them would bring up the size of this paper to that of a novel, so I recommend you to read David's paper instead.

Preprocessing?

One of the reviewers of this paper wanted to know if preprocessing the content would have helped. While his or her question was specifically made in the context of processing list items, footnotes and headings, the answer I wish to provide should apply to everything in this paper:

First, yes, preprocessing helps! We did, and we do, a lot of that. Consider that the RTFs were (and still are, at the time of this writing) being used for publishing in print and online, and some of the problems encountered when migrating are equally problematic when publishing. There are numerous Microsoft Word macros in place to check, and frequently correct, various aspects of the content before publication. To pick but one example, there is a macro that converts every footnote created using Microsoft functionality to an inline superscripted label matching its footnote body placed elsewhere in the document, as the MS-style footnotes will break the publishing (and migration) process.

Second, what is the difference between a preprocess and a pipeline step? If it's

simply that the former is something done to the RTFs, before the pipelined

conversion domain, the line is already somewhat blurred. The initial conversion

takes the RTF to first docx and then to XHTML, but I would argue that the XHTML is

a

reasonably faithful reproduction of the RTF's event-based semantics and so equally

well-suited for preprocessing

, unless the problem we want to solve is

the lack of semantics, neatly bringing me to my final point.

Third, the kind of problem that cannot be solved by preprocessing is an authoring mistake, typically ranging from not using a style to using the wrong one. It is the very lack of semantics that is the problem. If the style used was the wrong one, the usual consequence is a processing attribute left behind and discovered during QA. This can be detected but it cannot be automatically fixed.

That said, we did sometimes preprocess the RTF rather than adding a pipeline step, mostly because there was already a macro to process the RTFs that did what we wanted, not because the macro did something the pipeline couldn't.

Conclusions

Some conclusions I am willing to back up:

-

A conversion from RTF to XML is error-prone but quite possible to automate.

-

The slightest errors in the sources will cause problems but they can be minimised and controlled. At the very least, it is possible to develop workarounds with relative ease.

-

A pipeline with a multitude of steps is the way to go, every step doing one thing and one thing only.

It is easy for a developer to defocus ever so slightly and add more to a step than intended (

I'll just fix this problem here so I don't have to do another step

). This is bad. Remain focussed and your colleagues will thank you.

Lastly

Here's where I thank my colleagues at LexisNexis, past and present, without whom I would most certainly be writing about something else. Special thanks must go to Shely Bhogal and Mark Shellenberger, my fellow Content Architects in the project, but also to Nic Gibson who designed and wrote much of the underlying pipeline mechanics.

Also, thanks to Fiona Prowting and Edoardo Nolfo, my Project Manager and Line Manager, respectively, who sometimes believe in me more than I do.

References

[id-halsbury] Halsbury's Laws of England http://www.lexisnexis.co.uk/en-uk/products/halsburys-laws-of-england.page#

[id-nicg-xproc-tools] XProc Tools https://github.com/Corbas/xproc-tools

[id-rtf2latex] rtf2latex2e http://rtf2latex2e.sourceforge.net/

[id-ttm] TtM, a TeX to MathML translator http://hutchinson.belmont.ma.us/tth/mml/

[1] And seen as such; the terminology may sometimes be confusing for structure nazis like yours truly.

[2] Originally referring to literally loose leafs to be added to binders.

[3] Sometimes the changes warrant whole new chapters or sections containing

the new A paras

. These chapters and sections will then follow

the number-letter numbering conventions.

[4] Another being that the paper publishing system, SDL XPP, a proprietary print solution for XML used by LexisNexis, appears to require a single file as input.

[5] Another early motivation for the roundtrip was to have the in-house editors perform QA on the converted files—by first converting them back to RTF. Thankfully, we were able to show the client that there are better ways to perform the QA.

[6] A surprisingly difficult question to answer when discussing several gigabytes of data.

[7] Enabling the developer to run a step against the previous step's output.

[8] Options include debug output, stitch patterns, validation, and much more.

[9] There were more than a hundred titles, meaning more than a thousand physical files.

[10] What's shown here are the default patterns in the file stitcher XSLT. In reality, as several different commentary title sets were converted, the calling XProc pipeline would add other patterns.

[11] Legal documents tend to add small caps to their formatting, just to pick one example.

[12] While the RTF template includes a number of basic list styles, the labels in an ordered list are usually entered manually; a single volpara will have a well-defined progression of allowed ordered list types so that each list item can be referenced in the text.

[13] Or the last following sibling, if there were no more volume paragraphs to add.

[14] That is, there was no actual linking support to be had.

[15] Or inside the span, or a combination of both.

[16] Most of our conversions ran in batch, sometimes with dozens or