How to cite this paper

Turner, Matt. “Entity Services in Action with NISO STS.” Presented at Balisage: The Markup Conference 2017, Washington, DC, August 1 - 4, 2017. In Proceedings of Balisage: The Markup Conference 2017. Balisage Series on Markup Technologies, vol. 19 (2017). https://doi.org/10.4242/BalisageVol19.Turner01.

Balisage: The Markup Conference 2017

August 1 - 4, 2017

Balisage Paper: Entity Services in Action with NISO STS

Matt Turner

CTO Media & Entertainment

MarkLogic Corporation

Matt Turner is the CTO, Media and Entertainment at MarkLogic, where he develops strategy and solutions for the Media, Publishing, Entertainment and Information Provider markets and works with customers and prospects to create leading-edge information and digital content applications with MarkLogic's Enterprise NoSQL database. Matt has worked closely with MarkLogic customers NBC, Warner Bros., LexisNexis, McGraw-Hill Finance, Dow Jones and more. Before joining MarkLogic, Matt was at Sony Music and PC World, pioneering the use of XML and developing innovative publishing and asset delivery applications.

Copyright © 2017 MarkLogic Corporation

Abstract

Standards impact nearly every industry and government process, and standards organizations like BSI and ISO have been leading a change in how to provide a variety of audiences not just with the standards documents themselves but with valuable data about standards and the process of standardization. Now, there is a new data standard for standards called NISO STS (National Information Standards Organization Standards Tag Set). Working with ISO and sample content from ISO, MarkLogic has created a demonstration of NISO STS using MarkLogic 9's new Entity Services feature. This paper reviews the industry impact of standardization of data and how Entity Services can help leverage these standards.

Table of Contents

- Introduction

- The Impact of Definition

- Data Definition

- MarkLogic Entity Services

- NISO STS Standard

- Entity Services NISO STS Demo

- Getting Started

- Putting the Model into Action

- Summary

- References

- Thank You

Introduction

Across every industry, efforts to define processes and materials have resulted in

dramatic

improvements in productivity and efficiency. These technical and business process

definitions

are often expressed as standards that are created with leaders in the industry and

international standards organizations and adopted by the industry.

Like industry standards, data definitions could also have an industry impact to enable

collaboration and efficiency in the creation, management and delivery of critical

information.

However, the management of data definitions and the creation of data standards are

still

largely separate from the applications and processes that use those definitions. In

fact,

these processes often separately define data models for each purpose.

This paper will cover two developments in the space of defining data standards and

data

models: the NISO STS standard to define a data model for standards documents and MarkLogic

Entity Services to put data models into action in the database.

The Impact of Definition

Both standards and data models benefit their constituencies by providing common

definitions that enable interoperability and efficiency between the organizations

that adopt

the common standards and models.

Definition enables:

- Interoperability – the ability for multiple processes and applications to make use of the definition

- Specialized roles – relying on the standard and model, groups can specialize their efforts and focus expertise and resources on optimizing their role

- Universal application – wide adoption and multiple uses creating benefits across the industry

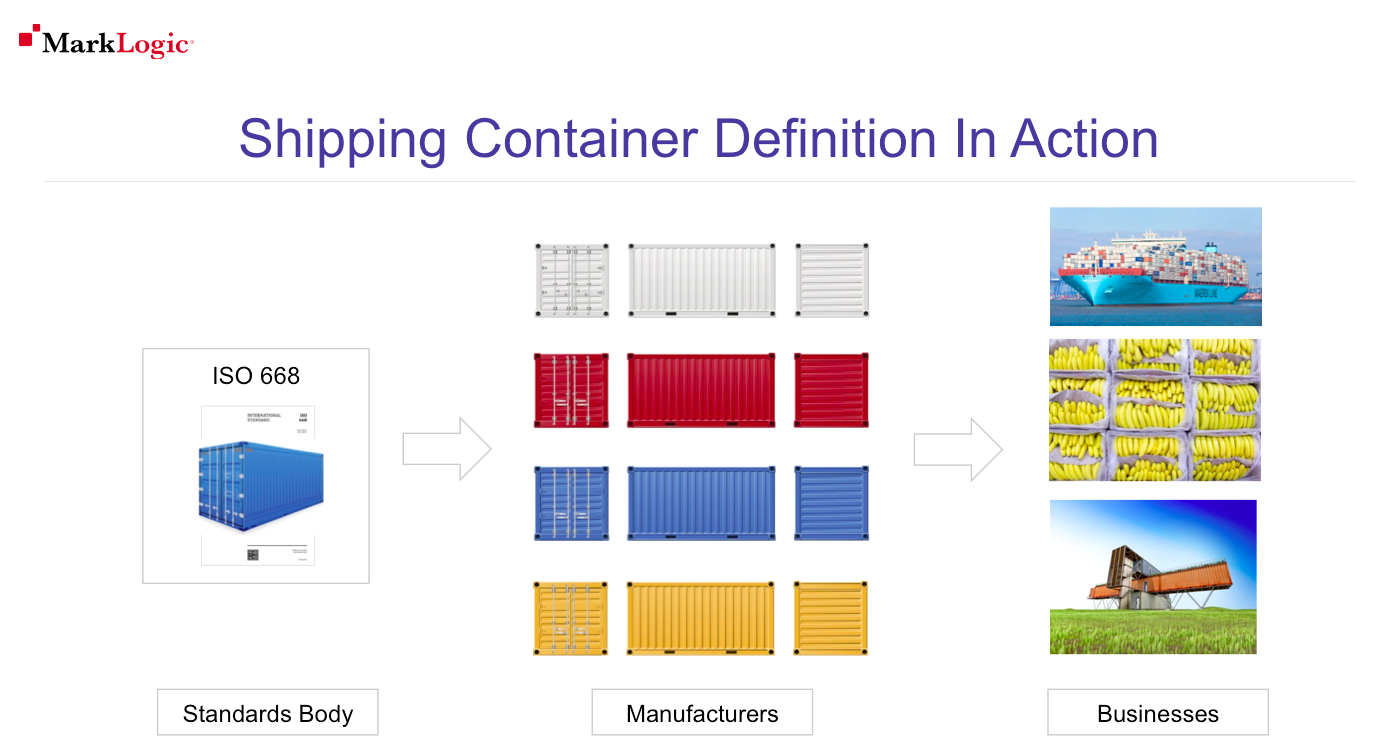

One example of definition in action is the impact of the ISO freight container standards.

Developed in the 1960s, these standards have dramatically changed the shipping industry

and

are one of the biggest factors in the economic globalization that has changed the

world in the

last 60 years.

Prior to the adoption of the definition of the freight container described in the

standards, the industry moved break bulk cargo. This freight came in many different

shapes and

sizes and required each step in the shipping process to be unique and custom for every

shipment. The freight container standard brought dramatic efficiencies. Time in port

was

reduced from 4-5 days to overnight while increasing the capacity of ships and standardizing

and optimizing every step of the shipping process.

The result has been a dramatic change in the cost of shipping. Prior to the adoption of the freight container standards, shipping was up to 20% of the total cost of goods. With the adoption of the container, this is now a fraction of a percent. (Ninety Percent of Everything, Rose, 2017)

To gain these benefits, the industry put the definition of the freight container into

action. The definition itself is the standard—a precisely worded document. The manufacturers

that make the containers use that definition to create the containers. Everyone else

in the

shipping industry can then use those standard containers for whatever purposes they

want. The

container ship operators or crane designers don’t have to know how to make the containers;

they can rely on the containers having a specific size and fittings as defined in

the standard

and specialize in making their part of the process as efficient as possible. This

is

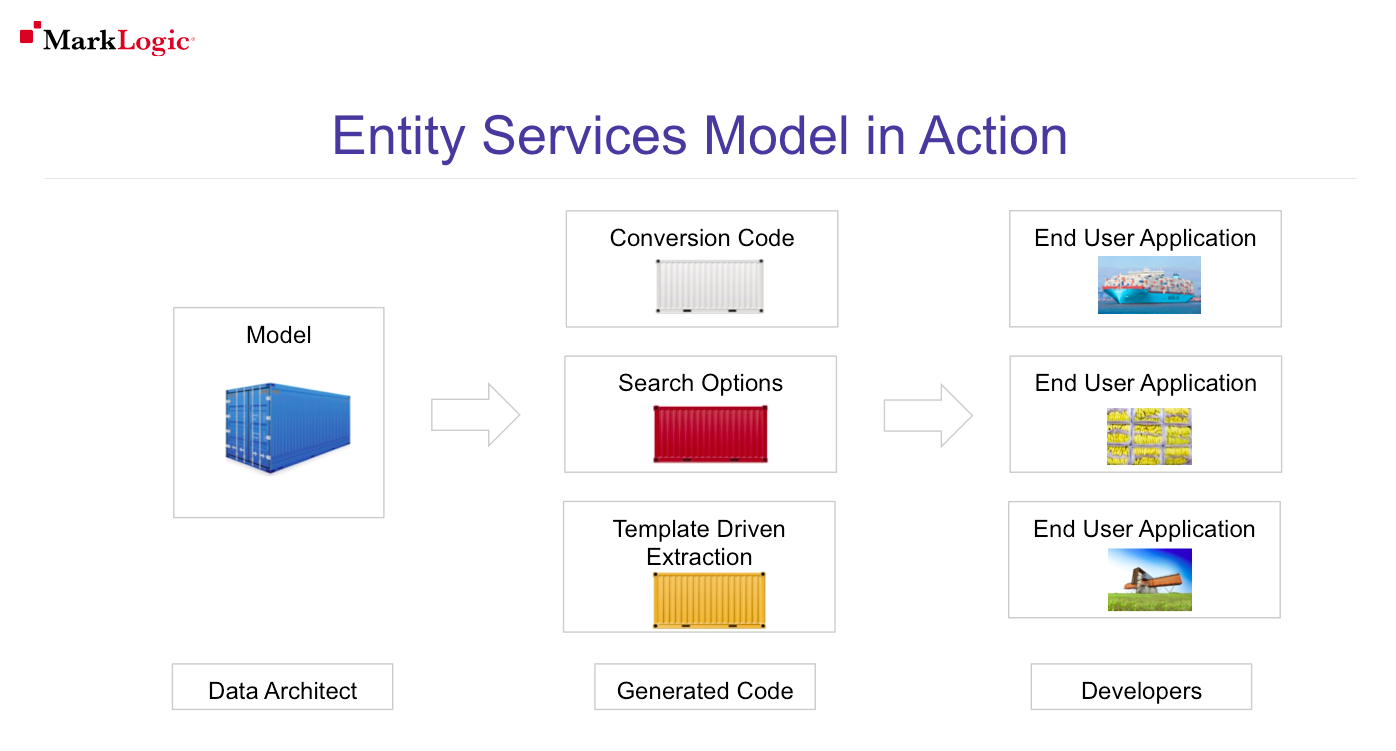

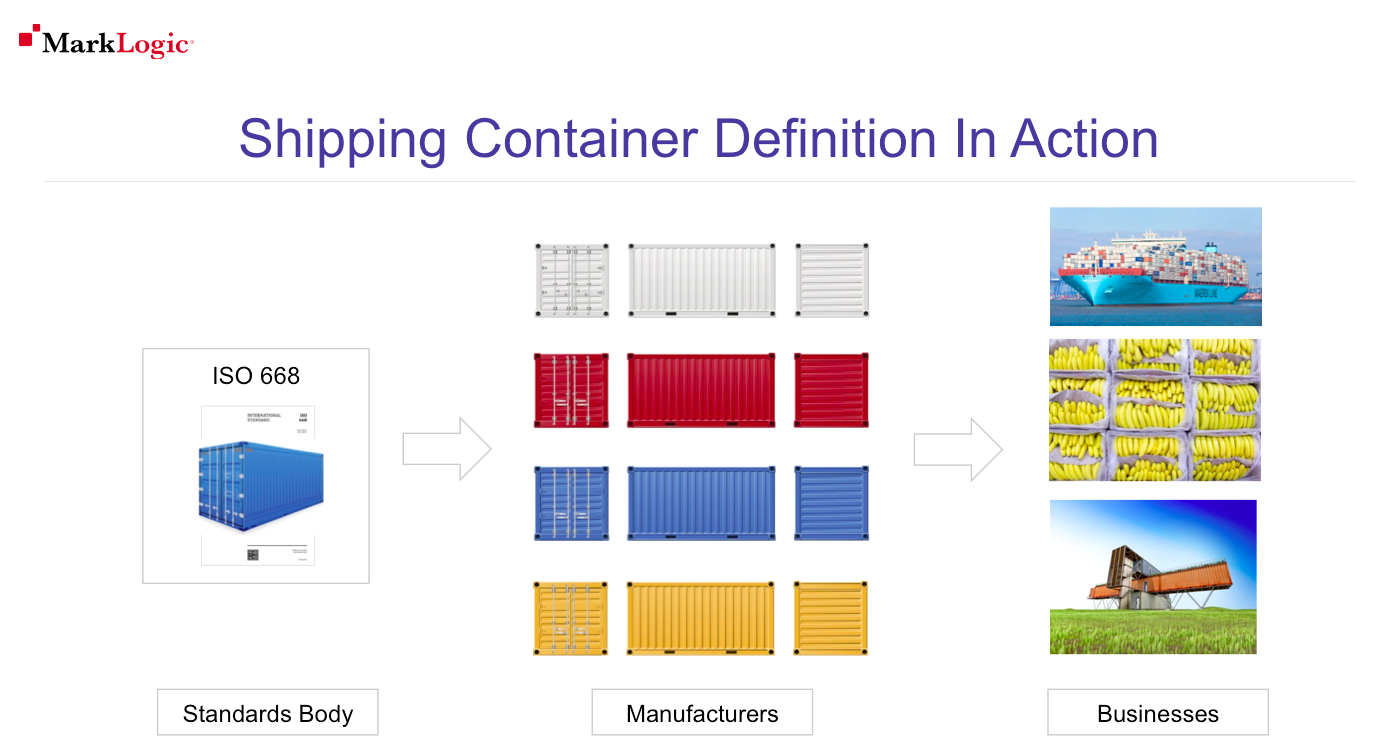

illustrated in figure 1.

Figure 1:

This is an example of the benefits of definition. Because the freight container is

defined, it is interoperable with containers moving easily between uses, it enables

each part

of the industry to specialize, and it is universally applied with many uses and adaptations

across the industry.

Data Definition

Today, data is defined in many ways, but these approaches haven’t yet delivered the

impacts of the wide adoption of definition and standards. This is because the way

data is

defined is not put into action in the same way that definitions and standards are

applied to

other industries.

Instead, data is defined in many places and with many different tools. This

includes:

- Database schemas that have requirements and optimizations for specific uses of the data

- Application code that interprets the data and creates functionality to create, query and access the data

- ETL (extract, transform and load) code that processes, transforms and moves the data from one system to another

All of these processes may work with the same data, but they all, independently, define

that data in many different ways and with many different variations.

There may be an overall definition of this data, an entity model that describes the data, but this model is usually only interpreted and referenced by each process that needs to work with the data. There is no direct connection to the actual schemas, application code or ETL configurations that process and handle the data. Instead, each of these tools, and the many more that can be part of a project, creates and updates its own independent data model suited only to the outcome of that process.

This lack of a definition or model that is put into action means that technology teams can struggle with interoperability of code and data models and find it hard to specialize, because each person working on a process has to understand every other application’s definitions. As a result, there is seldom universal adoption of data models and code across the industry.

MarkLogic Entity Services

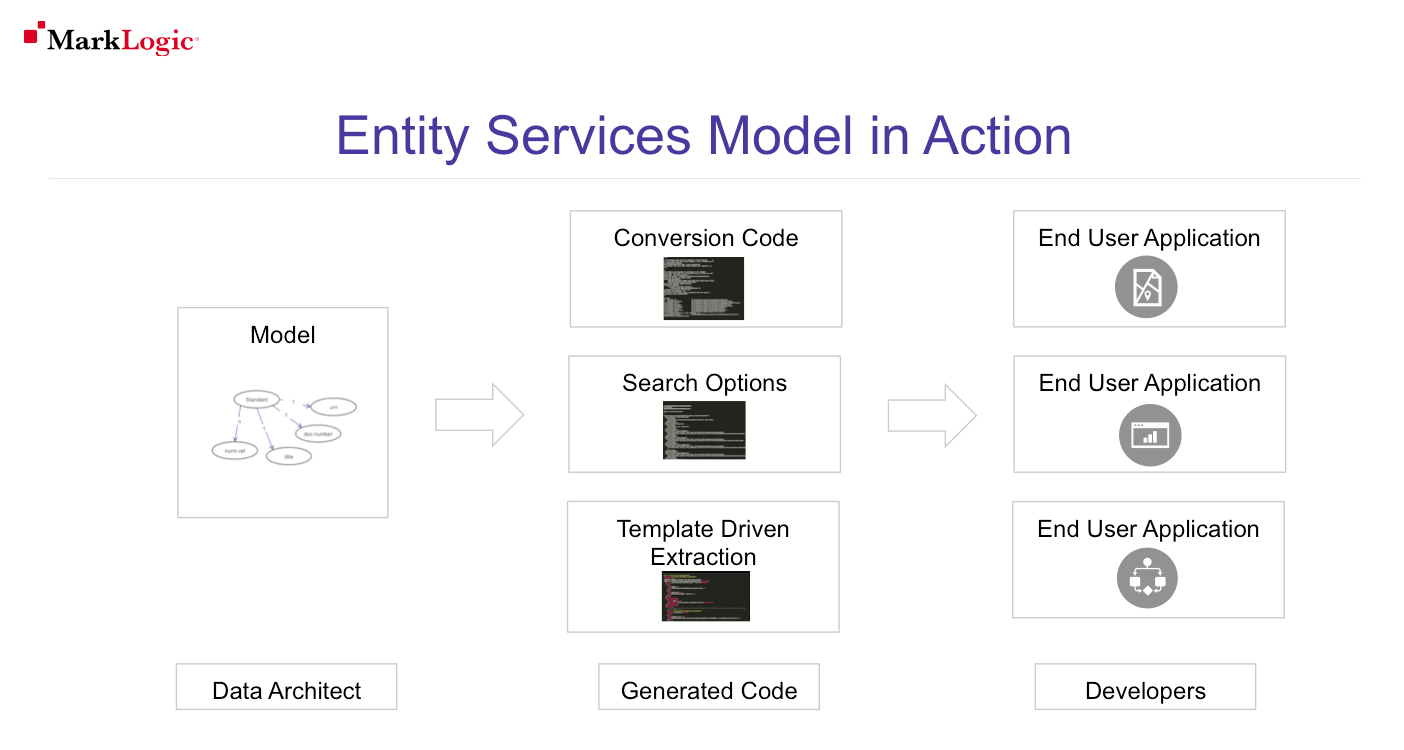

The goal of MarkLogic Entity Services is to define functionality and processes that

put

the entity model, the description of the data in its truest and most universal form,

into

action.

MarkLogic Entity Services is designed to:

- Describe real-world entities, properties, and relationships in a semantic model

- Automatically derive services, transformations, and configuration from the model

- Enable users to govern context and data together

- Enable users to take an iterative and evolutionary approach, using only as much of the data model as each iteration needs and adapting to changes instead of setting the entire model in place at the start

The starting point to use Entity Services is to create an entity model. This model

describes the data in its most complete form and includes the following elements:

- Entities – the domain objects that this data is about

- Properties – characteristics of that entity

- Relationships – how entities fit together

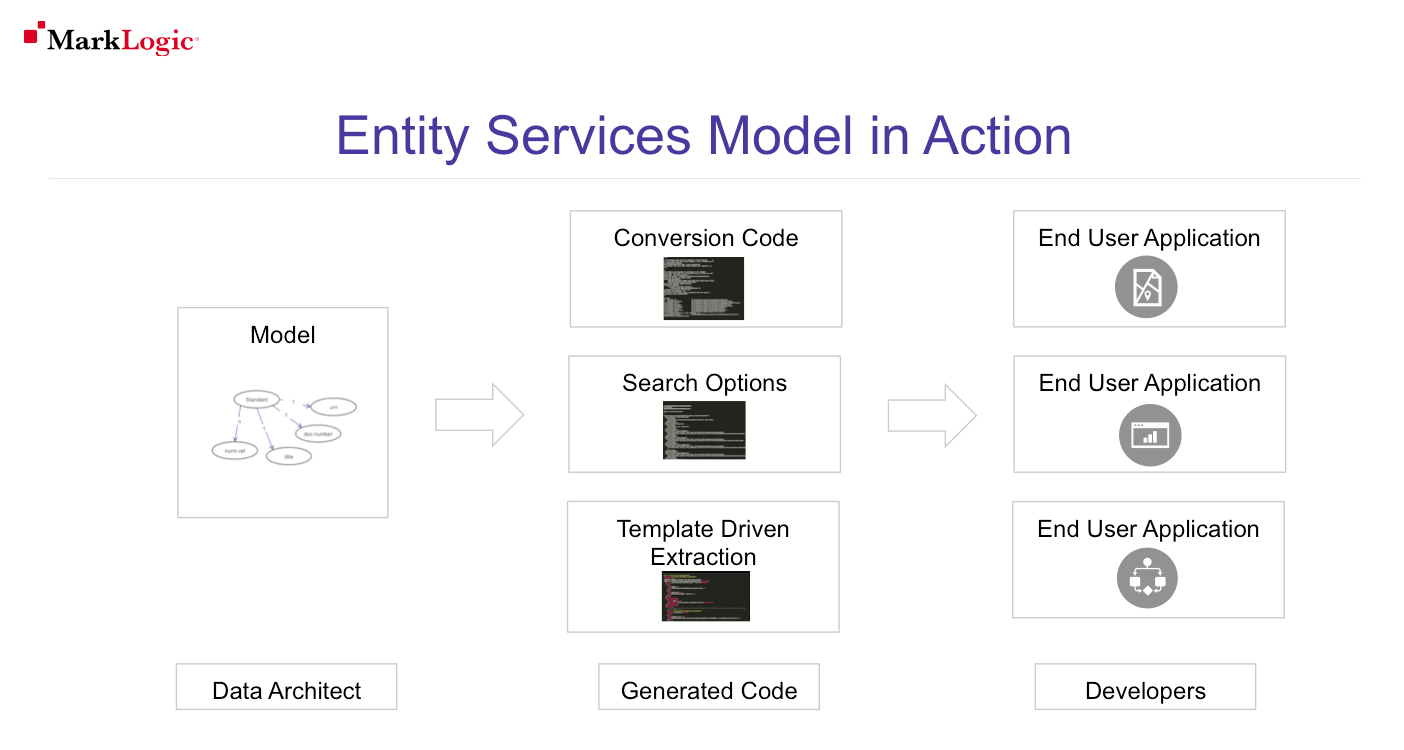

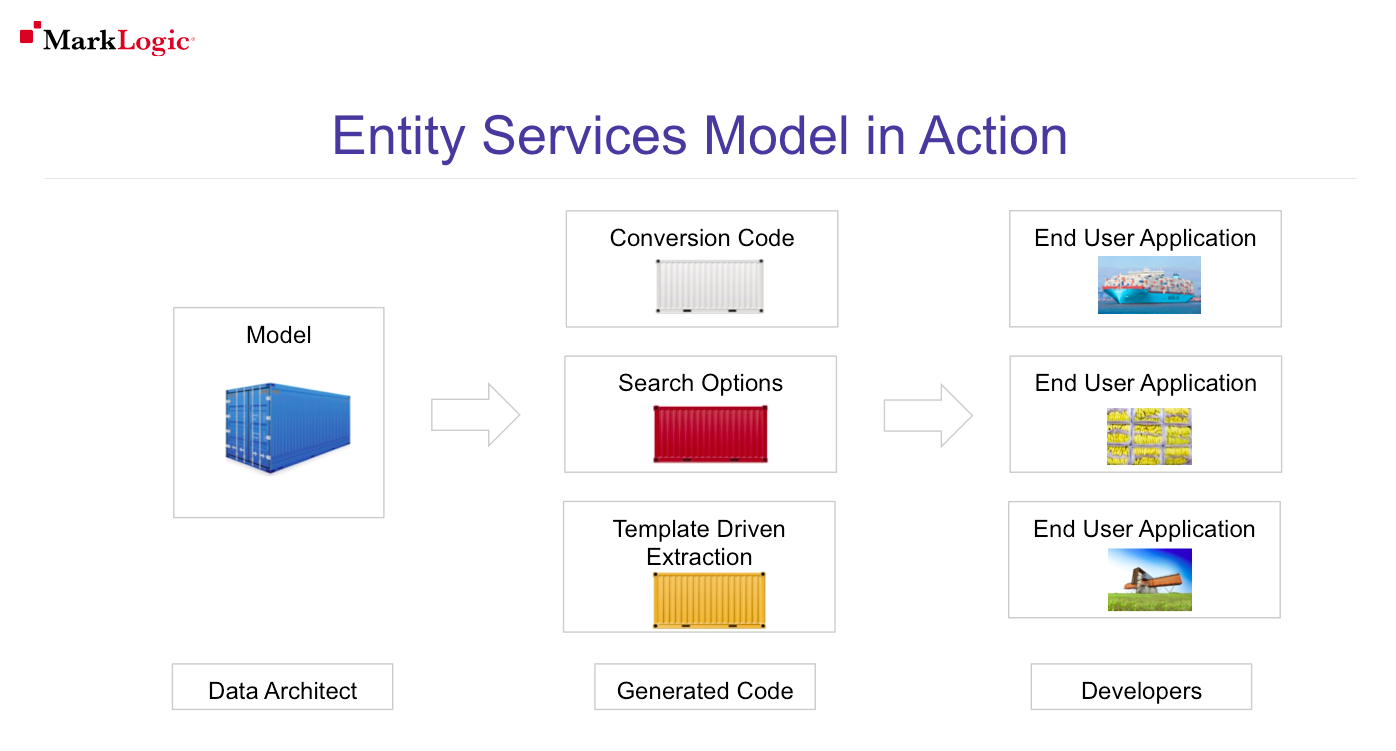

Once the entity model is created, it is put into action with services that use the model to update data and generate code that developers use in their projects.

These artifacts are generated by the entity services code modules, can be customized

by

the developers, and then installed in the MarkLogic database to enable the functionality

based

on the entity model. This is shown in Figure 2.

Figure 2:

With this pattern, MarkLogic Entity Services allows technology teams to gain the benefits of definition. These benefits include:

- Interoperability – everyone can refer to the model

- Specialization – developers don’t need to know how to model data; they can just use it, just as the ship and crane operators don’t need to know how to build boxes

- Universal Adoption – this data model can be used for multiple purposes in many applications

NISO STS Standard

To show MarkLogic Entity Services in action and demonstrate to technology teams the value of putting the definition into action, MarkLogic selected the newly proposed data standard for standards, NISO STS, as the basis of a demonstration application.

This initiative from NISO brought together the leaders of the world’s standards bodies to define a data standard that would bring the many benefits of standardization to the standards themselves.

The current draft of the standard was released in April 2017. It is based on ANSI/NISO JATS and the ISO STS format. The community recognized the potential value of wider adoption of this data standard and created the NISO STS initiative to define the tag set standard for standards.

The goals of the initiative are:

- Ease publication of standards

- Increase interoperability of standards

- Aid distribution of standards

- Improve the future of standards publishing

Full information on the NISO STS standard and full documentation are available at:

http://www.niso.org/workrooms/sts/

Entity Services NISO STS Demo

Working with the International Organization for Standardization (ISO), one of the main sponsors of the NISO STS initiative, MarkLogic created a demonstration of the Entity Services features using content samples from the ISO Freight Container standards. These data samples were in the closely related ISO STS format, as NISO STS was still in the recommendation phase.

The demonstration highlighted the major features of MarkLogic Entity Services for creating a data model and putting that model into action with generated code and artifacts, as well as the overall impact of putting the data definition into action.

Getting Started

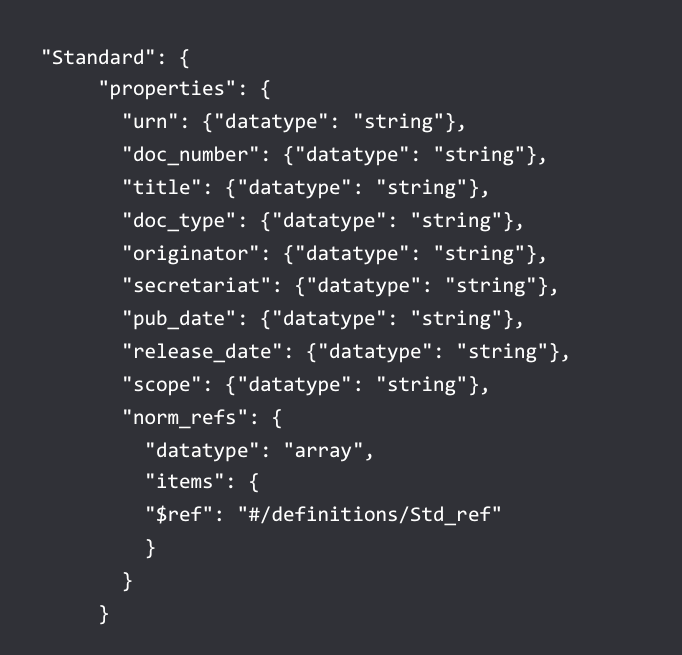

The demonstration first creates the building blocks that data modelers use to generate

the universal artifacts that are later used in the actual application code.

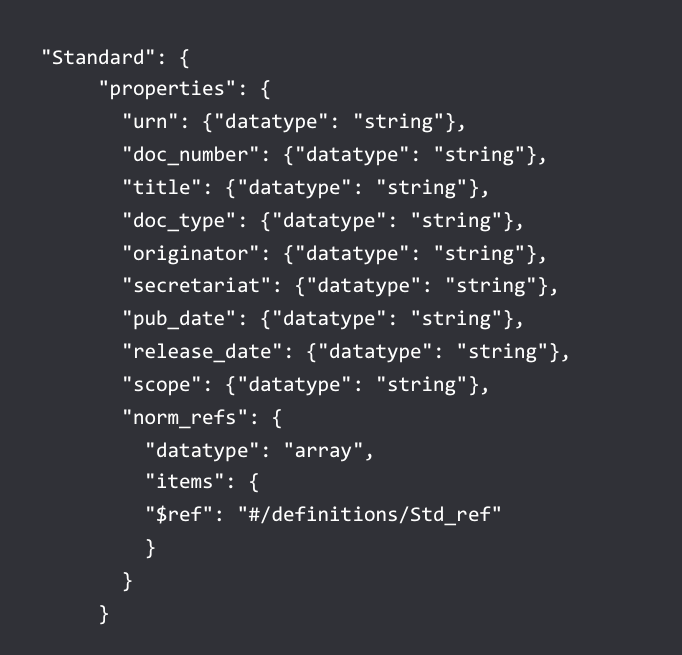

Entity Model: a model of the key data elements in the

NISO standard that included, for the demo, URN, doc number, title, doc type, originator,

secretariat, pub date, release date, scope and normative references. The model is

designed

to be used iteratively and these data elements were all that were needed for the

demonstration. The model is created in JSON and uploaded into the database where it

is

persisted as semantic triples. The model can then be queried and linked to other entity

data

to provide additional context, such as business definitions or security data, to

applications using and governing the data. The Entity Model for this demonstration

is shown

in Figure 3.

Figure 3:
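As a rough illustration of what such a JSON model might look like, the sketch below builds a descriptor in Python and serializes it. The overall shape (an `info` block plus entity `definitions` with typed `properties`) follows the general pattern of an Entity Services model descriptor, but the entity name `Standard`, the property names, and the datatypes are assumptions derived from the element list above, not the model actually used in the demonstration. The `check_model` helper is hypothetical and only performs a basic consistency check.

```python
import json

# Hypothetical descriptor: entity name, property names and datatypes are
# assumptions based on the element list in the text, not the demo's model.
model = {
    "info": {"title": "Standards", "version": "0.0.1"},
    "definitions": {
        "Standard": {
            "primaryKey": "urn",
            "required": ["urn", "title"],
            "properties": {
                "urn": {"datatype": "string"},
                "docNumber": {"datatype": "string"},
                "title": {"datatype": "string"},
                "docType": {"datatype": "string"},
                "originator": {"datatype": "string"},
                "secretariat": {"datatype": "string"},
                "pubDate": {"datatype": "date"},
                "releaseDate": {"datatype": "date"},
                "scope": {"datatype": "string"},
                "normativeReferences": {
                    "datatype": "array",
                    "items": {"datatype": "string"},
                },
            },
        }
    },
}


def check_model(descriptor):
    """Return a list of problems found in a descriptor of the shape above."""
    problems = []
    for name, entity in descriptor["definitions"].items():
        props = entity.get("properties", {})
        key = entity.get("primaryKey")
        if key is not None and key not in props:
            problems.append(f"{name}: primaryKey {key!r} is not a property")
        for required in entity.get("required", []):
            if required not in props:
                problems.append(f"{name}: required {required!r} is not a property")
    return problems


# The JSON text is what would be uploaded to the database.
descriptor_json = json.dumps(model, indent=2)
```

Because the model is plain data, it can be extended one property at a time, which fits the iterative use described above.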

Instance Converter: code to map the source data in the STS format to the entity model. The exact paths to the elements in the source documents are entered in the converter file, enabling all the other Entity Services functions to access data from the source documents in the structure of the entity model.
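The idea of a converter, a table of paths from source elements to entity properties, can be sketched in a few lines of Python. This is not the generated MarkLogic converter module; the sample document is a simplified, made-up stand-in for an STS file, and the element paths and property names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Simplified, made-up source document; real STS files are much richer.
SOURCE = """
<standard>
  <front>
    <iso-meta>
      <title-wrap><main>Freight containers</main></title-wrap>
      <doc-ident><urn>urn:example:std:0001</urn></doc-ident>
      <std-ident><originator>ISO</originator><doc-type>IS</doc-type></std-ident>
    </iso-meta>
  </front>
</standard>
"""

# Entity property name -> path into the source document (hypothetical paths).
PATHS = {
    "urn": "front/iso-meta/doc-ident/urn",
    "title": "front/iso-meta/title-wrap/main",
    "originator": "front/iso-meta/std-ident/originator",
    "docType": "front/iso-meta/std-ident/doc-type",
}


def extract_instance(xml_text, paths=PATHS):
    """Read each mapped path from the source and return the entity data."""
    root = ET.fromstring(xml_text)
    instance = {}
    for prop, path in paths.items():
        value = root.findtext(path)
        if value is not None:  # properties missing from the source are skipped
            instance[prop] = value.strip()
    return instance
```

Keeping the paths in one table means that a change in the source format only touches the mapping, not the code that consumes the entity data.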

Instance Generator: to put the model into action, Entity Services uses the converter and the entity model to create new documents in the database. These documents use the envelope pattern, which captures the entity data and other metadata in an envelope around the original source document. This enables developers and subsequent Entity Services functions to easily access the entity data while also keeping the original source data available.
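A minimal sketch of the envelope pattern, assuming a JSON-like layout: the extracted entity data sits in an `instance` section and the untouched source travels alongside it as an attachment. The key names and the `make_envelope` helper are illustrative assumptions, not the exact structure that Entity Services writes.

```python
def make_envelope(instance, source_xml, entity_type="Standard"):
    """Wrap extracted entity data and the original source in one document."""
    return {
        "envelope": {
            # Canonical entity data, keyed by entity type.
            "instance": {entity_type: dict(instance)},
            # The original source document, kept unchanged.
            "attachments": [source_xml],
        }
    }


doc = make_envelope(
    {"urn": "urn:example:std:0001", "title": "Freight containers"},
    "<standard>...</standard>",
)
title = doc["envelope"]["instance"]["Standard"]["title"]
```

Consumers read the `instance` section for the modeled properties and fall back to the attachment only when they need something the model does not yet cover.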

Putting the Model into Action

Using these foundational pieces, data modelers can then generate code, and developers put that code into action based on the shared and defined data model.

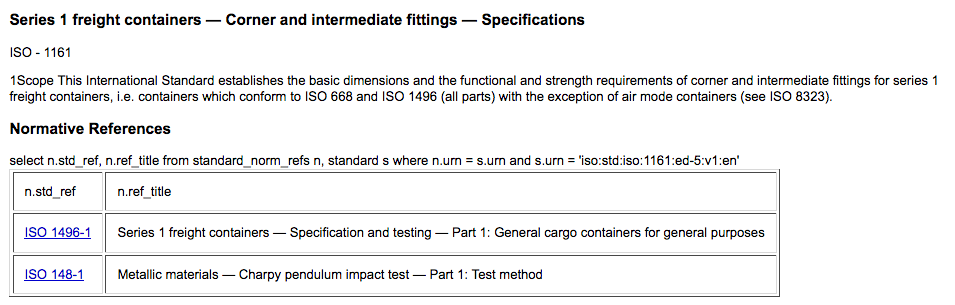

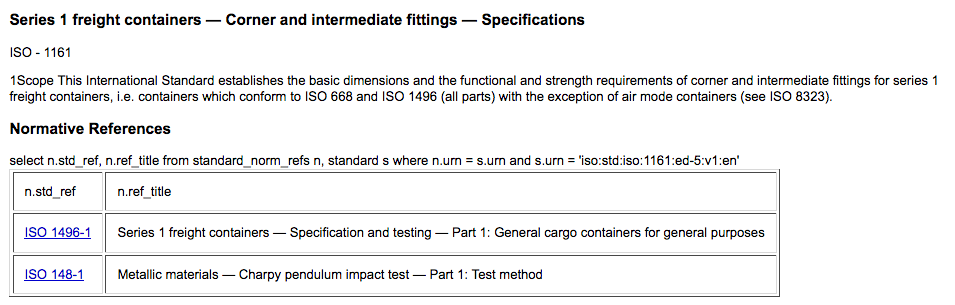

Search Options: model driven code to generate a

MarkLogic Search API options node. This includes the specification for search constraints

and facets with the typed data defined in the entity model.
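To illustrate how typed properties in a model can drive search configuration, the following sketch derives one facet-enabled range constraint per selected property. The output is only loosely shaped like the JSON form of Search API options; the exact keys, the type mapping, and the `facet_constraints` helper are assumptions for illustration, not generated MarkLogic output.

```python
# Hypothetical mapping from model datatypes to XML Schema type names.
XSD_TYPES = {"string": "xs:string", "date": "xs:date"}


def facet_constraints(properties, facet_names):
    """Build a constraint entry for each property that should be a facet."""
    constraints = []
    for name in facet_names:
        datatype = properties[name]["datatype"]
        constraints.append(
            {
                "name": name,
                "range": {
                    "type": XSD_TYPES[datatype],
                    "facet": True,
                    "element": {"ns": "", "name": name},
                },
            }
        )
    return {"options": {"constraint": constraints}}


properties = {
    "originator": {"datatype": "string"},
    "pubDate": {"datatype": "date"},
}
options = facet_constraints(properties, ["originator", "pubDate"])
```

The point of the sketch is that the datatype is read from the model rather than restated by hand in the search configuration.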

Template Driven Extraction (TDE) / SQL Query: TDE is a new feature of MarkLogic 9 that enables a view of data to be generated based on a template that describes the source of the data in the database and the structure of the view. Once the template is loaded into the database, data supporting the view is automatically created (in triple format) and made available for query using MarkLogic’s SQL and Optic API functionality. MarkLogic Entity Services automatically generates this template based on the entity model, enabling developers to immediately use the TDE features. For the demo, this was used to run SQL against the data elements defined in the entity model.
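The template idea can be sketched as follows: a template names a view and its columns, and applying it to each envelope document yields one row per entity instance. This is a simplified Python stand-in; real TDE templates use a context and path expressions, whereas the template layout, the plain-key lookups, and the `project_rows` helper here are assumptions for illustration.

```python
# Hypothetical, simplified template: each column names the instance
# property it is read from.
TEMPLATE = {
    "schemaName": "Standards",
    "viewName": "Standard",
    "columns": [
        {"name": "urn", "val": "urn"},
        {"name": "title", "val": "title"},
        {"name": "pubDate", "val": "pubDate"},
    ],
}


def project_rows(envelopes, template, entity_type="Standard"):
    """Apply the template to each envelope and return one row per document."""
    rows = []
    for doc in envelopes:
        instance = doc["envelope"]["instance"][entity_type]
        # Missing properties become None, like a NULL column value.
        rows.append(tuple(instance.get(col["val"]) for col in template["columns"]))
    return rows


docs = [
    {"envelope": {"instance": {"Standard": {
        "urn": "urn:example:a", "title": "Example A", "pubDate": "2017-01-01"}}}},
    {"envelope": {"instance": {"Standard": {
        "urn": "urn:example:b", "title": "Example B"}}}},
]
rows = project_rows(docs, TEMPLATE)
```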

Custom Application: to demonstrate the entity model in action, the demonstration included a custom application that used the search options to access and search the standards, with facets for originator, secretariat, pub date and release date. When displaying a standard, the application also ran SQL queries on its normative references to show the related standards and to explore the additional freight container standards that relied on the same references. The normative references SQL query is shown in Figure 4.

Figure 4:
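A query of this kind can be sketched with Python's built-in `sqlite3` module standing in for the SQL interface. The table names, column names, and sample rows below are made up for illustration and do not reproduce the demonstration's views or data; the query finds other standards that cite at least one of the same normative references as a given standard.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE standard (urn TEXT PRIMARY KEY, title TEXT);
    CREATE TABLE normative_ref (urn TEXT, ref_urn TEXT);
    """
)
# Made-up sample data: A and B share reference r1, C shares nothing with A.
conn.executemany(
    "INSERT INTO standard VALUES (?, ?)",
    [
        ("urn:example:a", "Example standard A"),
        ("urn:example:b", "Example standard B"),
        ("urn:example:c", "Example standard C"),
    ],
)
conn.executemany(
    "INSERT INTO normative_ref VALUES (?, ?)",
    [
        ("urn:example:a", "urn:example:r1"),
        ("urn:example:a", "urn:example:r2"),
        ("urn:example:b", "urn:example:r1"),
        ("urn:example:c", "urn:example:r3"),
    ],
)


def related_standards(connection, urn):
    """Other standards citing at least one of the same normative references."""
    query = """
        SELECT DISTINCT s.urn, s.title
        FROM normative_ref AS mine
        JOIN normative_ref AS theirs ON theirs.ref_urn = mine.ref_urn
        JOIN standard AS s ON s.urn = theirs.urn
        WHERE mine.urn = ? AND theirs.urn <> ?
        ORDER BY s.urn
    """
    return connection.execute(query, (urn, urn)).fetchall()


related = related_standards(conn, "urn:example:a")
```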

In addition to these features, the Entity Services functionality includes these features

not explored in the demonstration:

The features of MarkLogic Entity Services define a pattern that enables the entity

model

to be put into action and for technology teams to gain the benefits of definition.

Summary

The benefits of definition can have an impact on industries and processes that are

able to

put definitions into action. These results can be dramatic and world changing as in

the case

of the shipping industry and the ISO freight container standards.

Bringing these benefits to data and information technology has, to date, been challenging

as data definitions and standards are often separated from the processes that use

the

data.

The demonstration using NISO STS, a standard for standards, and MarkLogic Entity Services, the pattern to put the data model into action, shows that these benefits can be within reach for information technology. These include interoperability, as data models can be used for multiple purposes; specialization of roles, as developers and data architects can focus on just their tasks; and universal adoption, as everyone, including external and industry organizations, can rely on and use the data model. This is illustrated in Figure 5.

Figure 5:

As these processes and standards are adopted, information technology teams can see

the

benefits that other industries have seen by putting definitions and standards into

action.

References

The following references were used to create this paper and the demonstration application:

Thank You

The author would like to thank the following people for their support in creating this paper and demonstration application:

- Stephane Chatelet, Director Information Technologies, ISO

- Holger Apel, Software Manager, ISO

- Bruce Rosenblum, CEO Inera Systems and Chairman of NISO STS working group

- Charles Greer, Lead Software Engineer, MarkLogic

- Justin Makeig, Product Manager, MarkLogic